
Automate Service Fabric Cluster using Azure Function


Published Jul 31 2023 08:02 AM


Background Information

As our work environments become more and more complex, automation becomes a necessity. So I built a demo that shows how to automate various administrative tasks on a Service Fabric (SF) Cluster using the sfctl module, and how to trigger them through an Azure Function. The Azure Function runs a Docker container that has all the tools and permissions needed to access the SF Cluster.

The Azure Service Fabric command-line interface (CLI), sfctl, is a command-line utility for interacting with and managing Service Fabric entities such as clusters, applications, and services. In practice, we can automate any operation that the sfctl package supports.

Scenario

I developed a Python-based Azure Function project that automates various administrative tasks on a Service Fabric Cluster using the sfctl module. For the demo, I used an Azure SF Cluster secured with a self-signed certificate that is referenced by its thumbprint.

Using VS Code, I generated the required Azure Function project files and added a Python script that does the work on the SF Cluster. I built the entire project into a container image and published it to a private repository in Azure Container Registry.

I then deployed the same image to an Azure Function resource. The function exposes an HTTP endpoint that triggers the Python script. A container was mandatory because the sfctl package cannot be installed directly on Azure Functions.

Prerequisites

- VS Code
- VS Code Azure Functions extension
- VS Code Docker extension
- Docker Desktop
- Azure Functions Core Tools
- Python 3.x
- Azure CLI version 2.4 or later

Table of Contents

Step 1 - Create new VS Code Project for Azure Function

Step 2 - Update & Test the Python project

Step 3 - Modify Dockerfile

Step 4 - Create Azure Container Registry resource and push the newly created image

Step 5 - Create Azure Function resource and Deploy the container

Step 6 - Test the function

Step 7 - [Optional] - Integrate with KeyVault & Secure Azure Function endpoints

Conclusions

Step 1 - Create new VS Code Project for Azure Function

Reference: Create your first containerized Azure Functions | Microsoft Learn

1.1 Create a virtual Python environment

Open a new PowerShell terminal, then create and activate a virtual environment:

py -m venv .venv

.venv\scripts\activate

1.2 Create the function project in the VS Code terminal

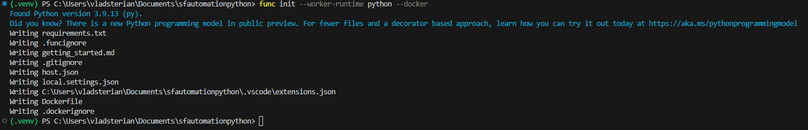

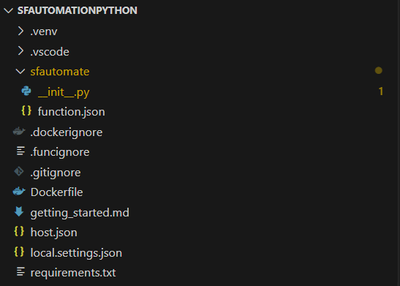

In a new cmd terminal, initialize a new function project. Specify the Python runtime and the --docker option. The command generates the files an Azure Function project needs, along with a Dockerfile. The Dockerfile holds the instructions Docker uses to build the container image for deployment.

func init --worker-runtime python --docker

1.3 Create the function in the VS Code terminal

In the same terminal window, create a new function from the HTTP trigger template. The function exposes an HTTP endpoint that simply returns a friendly message when called. The command creates a new folder inside the project root. The folder contains the .py file and the function.json file, and an Azure Function project requires both.

func new --name sfautomate --template "HTTP trigger" --authlevel anonymous

To test the function locally right away, run the following command from the project root. It starts the local Azure Functions runtime host and prints an HTTP endpoint that returns the "hello" message.

func start

The endpoint looks like this:

http://localhost:7071/api/sfautomate?name=Functions
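You can also call the endpoint from a script instead of a browser. The minimal sketch below uses only the Python standard library. The helper names `build_function_url` and `call_function` are hypothetical and are not part of the project. The base URL assumes the local host started by `func start` on its default port 7071.

```python
# Sketch only: hypothetical helpers for calling an HTTP-triggered function.
from urllib.parse import urlencode
from urllib.request import urlopen


def build_function_url(base_url: str, function_name: str, **params: str) -> str:
    """Compose <base_url>/api/<function_name>?key=value for an HTTP trigger."""
    url = f"{base_url.rstrip('/')}/api/{function_name}"
    if params:
        url += "?" + urlencode(params)
    return url


def call_function(url: str, timeout: float = 30.0) -> str:
    """Send a GET request to the function and return the response body as text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With `func start` running, `call_function(build_function_url("http://localhost:7071", "sfautomate", name="Functions"))` should return the template's greeting.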

Step 2 - Update & Test the Python project

The HTTP trigger template generates a sample Python script that only returns a friendly message, so we extend the demo with a real-life SF Cluster scenario. We connect to an SF endpoint using the cluster certificate and perform a few administrative tasks with sfctl commands.

2.1 Python Script

For this demo only, I have build a python script that will:

-

Load a pre-existing cluster certificate in .pfx format from the project root's directory

-

Convert the .pfx certificate in a .pem certificate (sfctl command that is used for cluster connection will only accept certs in .pem format)

-

Connect to an Azure Service Fabric Cluster using the loaded certificate

-

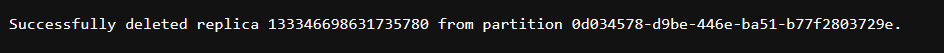

List the partitions & replicas of a specific stateless service hosted on SF Cluster

-

Delete the first replica of that service

-

Return an HTTP Response based on the desired outcome
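The certificate and connection steps above could look roughly like the sketch below. It is not the code from the repository. It swaps in the `openssl pkcs12` command line for the PFX-to-PEM conversion and calls `sfctl cluster select` through `subprocess`. The password environment variable name `PFX_PASSWORD` and the example file paths are hypothetical.

```python
# Sketch only: PFX -> PEM via the openssl CLI (a swapped-in technique), then sfctl cluster select.
import subprocess
from pathlib import Path
from typing import Callable, List, Sequence

Runner = Callable[[Sequence[str]], str]


def run_command(args: Sequence[str]) -> str:
    """Run a CLI command, raise CalledProcessError on failure, and return stdout."""
    result = subprocess.run(list(args), capture_output=True, text=True, check=True)
    return result.stdout


def pfx_to_pem_command(pfx_path: Path, pem_path: Path, password_env: str) -> List[str]:
    """Build an openssl call that writes the certificate and private key into one PEM file.

    OpenSSL reads the PFX password from the environment variable named by
    password_env, so the password never appears on the command line.
    """
    return [
        "openssl", "pkcs12",
        "-in", str(pfx_path),
        "-out", str(pem_path),
        "-nodes",  # leave the private key unencrypted so sfctl can use it
        "-passin", f"env:{password_env}",
    ]


def cluster_select_command(endpoint: str, pem_path: Path, verify_server: bool = False) -> List[str]:
    """Build the sfctl call that points the CLI at a certificate-secured cluster."""
    args = ["sfctl", "cluster", "select", "--endpoint", endpoint, "--pem", str(pem_path)]
    if not verify_server:
        # Self-signed cluster certificate: skip server certificate validation.
        args.append("--no-verify")
    return args


def connect_to_cluster(endpoint: str, pfx_path: Path, pem_path: Path,
                       password_env: str = "PFX_PASSWORD",  # hypothetical variable name
                       run: Runner = run_command) -> None:
    """Convert the PFX to PEM, then select the cluster for later sfctl commands."""
    run(pfx_to_pem_command(pfx_path, pem_path, password_env))
    run(cluster_select_command(endpoint, pem_path))
```

The `run` parameter lets you swap in a fake runner when testing without openssl or sfctl installed.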

The entire project can be reviewed or cloned from the GitHub repo: vsterian/sfautomationpython-localCert (github.com)

For details about sfctl, see:

Get started with Azure Service Fabric CLI - Azure Service Fabric | Microsoft Learn

The full range of SF Cluster commands is listed under: Azure Service Fabric CLI- sfctl - Azure Service Fabric | Microsoft Learn

Any of them can be called from the Python app code, as in this demo.
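For example, the list-and-delete steps could be sketched as below. This sketch makes several assumptions:

- It assumes sfctl's default JSON output.
- It assumes the list commands return a paged object with an `items` array.
- It assumes stateless replicas are identified by `instanceId` and stateful ones by `replicaId`.

The service name `fabric:/MyApp/MyService` used in the test is hypothetical.

```python
# Sketch only: list partitions/replicas of a service and remove the first replica via sfctl.
import json
import subprocess
from typing import Any, Callable, Dict, List, Sequence

Runner = Callable[[Sequence[str]], str]


def run_sfctl(args: Sequence[str]) -> str:
    """Run `sfctl <args>` and return stdout (assumed to be JSON)."""
    result = subprocess.run(["sfctl", *args], capture_output=True, text=True, check=True)
    return result.stdout


def to_service_id(service_name: str) -> str:
    """Convert 'fabric:/App/Service' to the 'App~Service' ID form used by sfctl."""
    prefix = "fabric:/"
    if service_name.startswith(prefix):
        service_name = service_name[len(prefix):]
    return service_name.replace("/", "~")


def _items(output: str) -> List[Dict[str, Any]]:
    """Extract the 'items' array from a paged sfctl result (assumed shape)."""
    data = json.loads(output) if output.strip() else {}
    return data.get("items", [])


def remove_first_replica(service_name: str, run: Runner = run_sfctl) -> Dict[str, str]:
    """Remove the first replica/instance of the first partition of a service."""
    service_id = to_service_id(service_name)
    partitions = _items(run(["partition", "list", "--service-id", service_id]))
    if not partitions:
        raise RuntimeError(f"No partitions found for {service_name}")
    partition_id = partitions[0]["partitionInformation"]["id"]

    replicas = _items(run(["replica", "list", "--partition-id", partition_id]))
    if not replicas:
        raise RuntimeError(f"No replicas found in partition {partition_id}")
    first = replicas[0]
    # Stateless services report instances ("instanceId"); stateful ones report "replicaId".
    replica_id = first.get("replicaId") or first["instanceId"]
    node_name = first["nodeName"]

    run(["replica", "remove", "--node-name", node_name,
         "--partition-id", partition_id, "--replica-id", replica_id])
    return {"partition_id": partition_id, "replica_id": replica_id, "node_name": node_name}
```

The returned dictionary can be serialized into the HTTP response body. That keeps the function's output tied to what was actually removed.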

2.2 Update requirements.txt

Make sure that requirements.txt lists all the packages your code imports.

sfctl is a different kind of Python package, so it cannot be installed just by declaring it in requirements.txt. Instead, we install it when we build the container image (see the next step).

To test the code on your local machine, you can run the host again at this point:

func start

The code itself is not the focus of this demo, so I'll move on to the next topic. At the end of this article, you can find the code and the additional settings needed to load the certificate directly from Azure Key Vault, which makes this implementation more secure. For this demonstration, however, we use the certificate stored in the project root folder.

Step 3 - Modify Dockerfile

As mentioned above, sfctl must be installed when the container image is built. Modify the generated Dockerfile by adding the following lines:

# Install Service Fabric CLI

RUN pip install sfctl
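The Dockerfile installs sfctl rather than requirements.txt, so a missing install only shows up at runtime. A small guard like the sketch below makes that failure explicit inside the function. The `find_sfctl` name is hypothetical.

```python
# Sketch only: fail fast with a clear message if the image does not contain sfctl.
import shutil
from typing import Callable, Optional


def find_sfctl(which: Callable[[str], Optional[str]] = shutil.which) -> str:
    """Return the full path of the sfctl executable, or raise a descriptive error."""
    path = which("sfctl")
    if path is None:
        raise FileNotFoundError(
            "sfctl was not found on PATH; check that the Dockerfile runs 'pip install sfctl'."
        )
    return path
```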

Step 4 - Create Azure Container Registry resource and push the newly created image

Reference: Quickstart - Create registry in portal - Azure Container Registry | Microsoft Learn

Create your first containerized Azure Functions | Microsoft Learn

In my demo, I used Azure Container Registry (ACR) to push and store the newly created image in a private repository, so that Azure Functions can use it later. ACR lets you build, store, and manage container images and artifacts in a private registry for all types of container deployments. It is commonly used to build container images in Azure on demand, or to automate builds triggered by source code updates, updates to a container's base image, or timers.

4.1 Create Azure Container Registry

Use the steps highlighted in the following article to create ACR from Azure Portal: Quickstart - Create registry in portal - Azure Container Registry | Microsoft Learn

4.2 Build, run, tag, and push the container image to ACR

Back in the VS Code terminal, run the following commands:

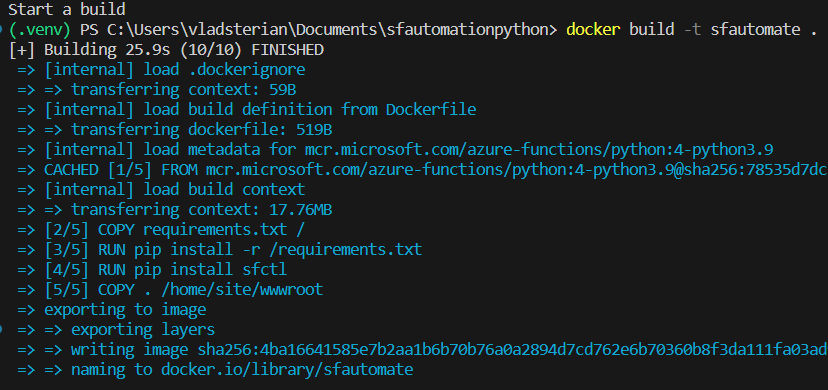

4.2.1 Build on local machine

docker build -t sfautomate .

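4.2.2 Run/Test on local machine

Publish the container's port 80 to the host, so the function can be called at http://localhost:8080/api/sfautomate:

docker run -p 8080:80 sfautomate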

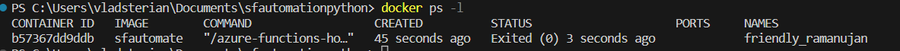

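4.2.3 Get the ID of the newly built image. Alternatively, you can refer to the image by its name, sfautomate. Note that docker tag expects an image, not a container ID.

docker images sfautomate

4.2.4 Tag

docker tag sfautomate automatecontainer.azurecr.io/sfautomate:v1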

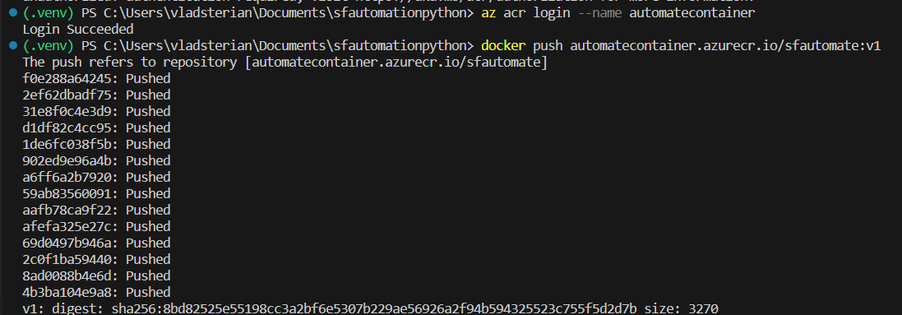

4.2.5 Login to Azure Account and to Azure Container Registry

You can use the same terminal to log in to your Azure account and select your subscription:

az login

az account set --subscription "subscriptionName"

az acr login --name "automatecontainer"

4.2.6 Push

This operation will push (save) the newly created local container image to our ACR repository, using the name and version used in the "tag" operation.

docker push automatecontainer.azurecr.io/sfautomate:v1

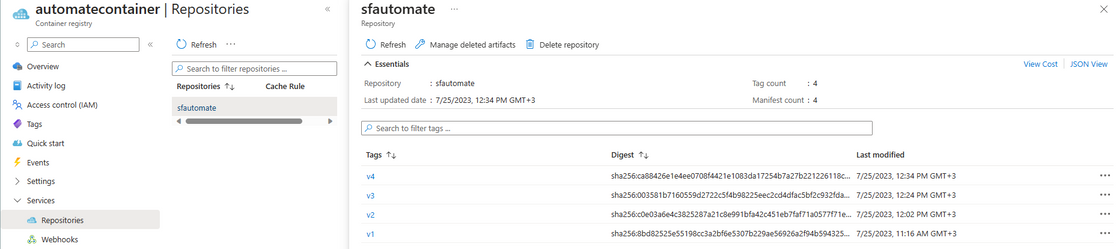

After pushing the image, we can also confirm in the Azure portal that everything looks as expected.

In the Container Registry resource, go to Services blade -> Repositories -> select the repository name -> confirm that the container image has been saved to the repository:

Step 5 - Create Azure Function resource and Deploy the container

Once we have pushed the newly created container image to ACR, we can proceed with the creation of the Azure Function along with the supporting resources.

We start by creating the supporting resources that an Azure Function needs to work properly: an App Service plan and a Storage Account. For this demo, the Azure Function runs on an App Service plan, and the Storage Account holds the function's blobs.

5.1 Create a general-purpose storage account in your resource group

az storage account create --name sfautomatesa --location westeurope --resource-group sfautomate --sku Standard_LRS

5.2 Create an App Service plan to host the function

az functionapp plan create --resource-group sfautomate --name automateplan --location westeurope --number-of-workers 1 --sku B1 --is-linux

5.3 Obtain the ACR credentials used to access the container image when deploying the function

5.3.1 Enable the built-in admin account so that Functions can connect to the registry with a username and password

az acr update -n automatecontainer --admin-enabled true

5.3.2 Retrieve the username and password, which we will use later

az acr credential show -n automatecontainer --query "[username, passwords[0].value]" -o tsv

5.4 Create new Azure Function resource

5.4.1 Create the Azure Function resource on the App Service plan created earlier, and reference the container image hosted in ACR. Replace <registry-password> with the password retrieved in 5.3.2.

az functionapp create --name sfautomate --storage-account sfautomatesa --resource-group sfautomate --plan automateplan --image automatecontainer.azurecr.io/sfautomate:v1 --registry-username automatecontainer --registry-password <registry-password> --runtime python

This command creates the Azure Function resource and deploys the specified container image right away. It also creates an associated Application Insights resource for logging.

5.4.2 Add Environment Variables on Azure Function

Our code reads the cluster endpoint from the "CLUSTER_ENDPOINT" environment variable, so we must also declare it on the Azure Function: Settings blade -> Configuration -> Application Settings -> Add new App Setting -> Save

Cluster endpoint should look like: https://clusterName.clusterLocation.cloudapp.azure.com:19080
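Reading that setting defensively helps catch a mistyped value before sfctl fails with a less obvious error. The sketch below checks that the setting is present and uses HTTPS. If no port is given, it falls back to 19080, the default Service Fabric HTTP gateway port. The function name and that fallback are assumptions of this sketch, not behavior of the original project.

```python
# Sketch only: read and sanity-check the CLUSTER_ENDPOINT app setting.
import os
from typing import Mapping, Optional
from urllib.parse import urlparse

DEFAULT_HTTP_GATEWAY_PORT = 19080  # Service Fabric's default HTTP gateway port


def read_cluster_endpoint(env: Optional[Mapping[str, str]] = None) -> str:
    """Return CLUSTER_ENDPOINT as https://host:port, raising ValueError if it looks wrong."""
    env = os.environ if env is None else env
    value = env.get("CLUSTER_ENDPOINT", "").strip()
    if not value:
        raise ValueError("CLUSTER_ENDPOINT app setting is missing")
    parsed = urlparse(value)
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError(f"Expected an https:// URL, got {value!r}")
    if parsed.port is None:
        # No explicit port: assume the default HTTP gateway port.
        return f"https://{parsed.hostname}:{DEFAULT_HTTP_GATEWAY_PORT}"
    return value.rstrip("/")
```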

Step 6 - Test the function

You can follow the container deployment under Azure Function -> Deployment Center -> Logs. Once the deployment succeeds, it should return the following output:

You can enable continuous deployment under Deployment Center -> Settings by setting Continuous deployment to On.

This way, every time a new version of the same container image is pushed, it is deployed automatically to the Azure Function.

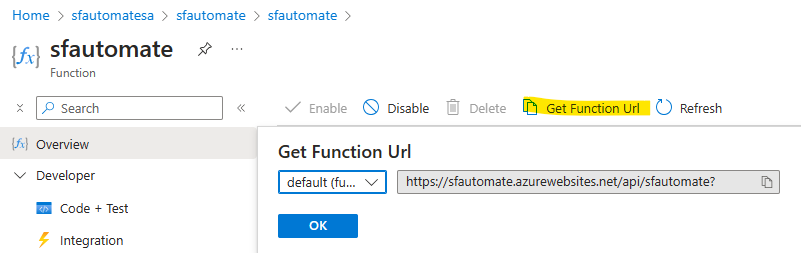

To test the function itself, trigger it manually by calling its endpoint. On the Functions blade, select the function name and click "Get Function Url".

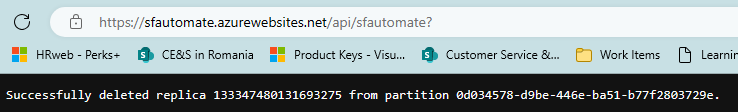

When opened in a browser, the URL should return the output defined in our code:

Step 7 - [Optional] - Integrate with KeyVault & Secure Azure Function endpoints

Embedding certificates in application projects is generally not recommended. Instead, we can use the Key Vault SDK to retrieve the certificate directly from the application code.

The adapted project can be reviewed or cloned from the following GitHub repo: vsterian/sfautomationpython-KV (github.com)

Alongside the code changes, a few environment changes are also required:

7.1 Add Environment Variables on Azure Function

Under Settings blade -> Configuration -> Application Settings -> Add two new app settings -> Save

Add "KEY_VAULT_URL" and "SECRET_NAME". KEY_VAULT_URL is the endpoint of the Key Vault where the certificate is stored, and SECRET_NAME is the certificate name.
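The sketch below shows how code might consume these two settings. It is not the code in the linked repository. It assumes the `azure-identity` and `azure-keyvault-secrets` packages. It also assumes the certificate uses the default PKCS#12 content type, in which case the certificate's backing secret holds the PFX as base64 text. The Azure SDK imports sit inside `fetch_certificate_pfx`, so the other helpers run without the SDK installed.

```python
# Sketch only: read the Key Vault settings and download the certificate as PFX bytes.
import base64
import os
from typing import Mapping, Optional, Tuple


def read_key_vault_settings(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (KEY_VAULT_URL, SECRET_NAME) from the function's app settings."""
    env = os.environ if env is None else env
    vault_url = env.get("KEY_VAULT_URL", "").strip()
    secret_name = env.get("SECRET_NAME", "").strip()
    if not vault_url or not secret_name:
        raise ValueError("Both KEY_VAULT_URL and SECRET_NAME must be set")
    return vault_url, secret_name


def decode_pfx_secret(secret_value: str) -> bytes:
    """Decode the base64 PFX held by a PKCS#12 certificate's backing secret."""
    return base64.b64decode(secret_value)


def fetch_certificate_pfx(vault_url: str, secret_name: str) -> bytes:
    """Get the certificate (with private key) using the function's managed identity."""
    # Imported lazily: requires azure-identity and azure-keyvault-secrets.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    client = SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())
    return decode_pfx_secret(client.get_secret(secret_name).value)
```

Reading the certificate through its secret is why the access policy below includes Secret permissions in addition to Certificate permissions.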

7.2 Enable system-assigned identity for Function to gain access to KeyVault

7.2.1 In Azure Function -> Settings blade -> Identity -> Change the status to ON and Save

Once it's registered, the function app can be granted permissions to access resources protected by Azure AD (in our case the KeyVault where the SF Cluster certificate is stored)

7.2.2 In Key Vault -> Access policies blade -> Create a new policy:

- Permissions: Get and List for certificates and secrets should be enough for our project
- Principal: search for your function name and select it
- Create

7.3 Repeat the steps from 4.2 to build, tag, and push a new image version. Once the new container version has been deployed, we can test the function again.

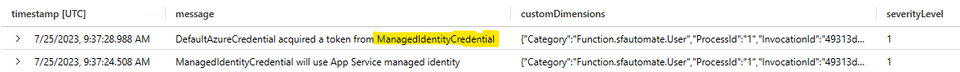

In the Application Insights logs, we can see that the function now uses its managed identity to retrieve the SF Cluster certificate from Key Vault:

7.4 Secure Azure Function endpoints

Securing Azure Function endpoints that perform administrative tasks on a Service Fabric Cluster is crucial to prevent unauthorized access and potential security risks.

There are many options for securing an Azure Function resource. For general security considerations, see:

Azure Functions security - Azure Example Scenarios | Microsoft Learn

Securing Azure Functions | Microsoft Learn

For this scenario specifically, I recommend considering the following:

- Authentication and Authorization:
  - Implement Azure AD Authentication: Configure your Azure Function to use Azure Active Directory (Azure AD) authentication so that only authorized users or applications can access it. This ensures that only authenticated callers can reach the function endpoints.
  - Role-Based Access Control (RBAC): Assign appropriate roles to users or groups in Azure AD to control which actions they can perform on the function endpoints.
- Secure API Keys or Secrets:
  - Avoid Hardcoding Secrets: Do not hardcode sensitive information (e.g., passwords, API keys) in the code. Use Azure Key Vault or environment variables to store and access secrets securely.
  - Key Vault Integration: Integrate your Azure Function with Azure Key Vault to retrieve secrets securely at runtime. This keeps sensitive information out of the code and configuration files.
- IP Whitelisting and Network Security:
  - IP Whitelisting: Restrict access to the Azure Function endpoints by configuring IP whitelisting, so that only specific IP addresses or ranges can reach them.
  - Network Security Group (NSG): If your Azure Function and Service Fabric Cluster are deployed in virtual networks, you can use NSGs to control traffic between the two.
- Secure Function access keys
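On access keys: the demo creates the function with `--authlevel anonymous`. If you switch it to function-level authorization, callers must present a function key. The "Get Function Url" link puts the key in the `?code=` query string. A sketch of the header-based alternative is below; it assumes the `x-functions-key` header, which Azure Functions accepts for key-protected HTTP triggers. The helper names and the example URL in the test are hypothetical.

```python
# Sketch only: call a function-level HTTP trigger with the key in a header instead of the URL.
from urllib.request import Request, urlopen


def build_keyed_request(function_url: str, function_key: str) -> Request:
    """Create a GET request that passes the function key in the x-functions-key header.

    Keeping the key out of the URL avoids leaking it through browser history
    or logged query strings.
    """
    return Request(function_url, headers={"x-functions-key": function_key}, method="GET")


def call_with_key(function_url: str, function_key: str, timeout: float = 30.0) -> str:
    """Send the keyed request and return the response body as text."""
    with urlopen(build_keyed_request(function_url, function_key), timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

The key itself is best stored like the other secrets above, for example in Key Vault, rather than in the calling script.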

By implementing these security measures, you can ensure that your Azure Function endpoints are well-protected and reduce the risk of unauthorized access or security breaches. Remember that security is an ongoing process, and it's essential to regularly review and update your security measures as needed.

Conclusions

We have now gone through all the steps needed to integrate the Service Fabric CLI (used to run various SF administrative tasks) with an Azure Function using a Docker container image. This way, we can automate practically any SF Cluster operation by calling Azure Function endpoints.

For production environments, we always recommend following the security guidelines published by Microsoft to avoid breaches. You can integrate your Function App and environment with Key Vault and apply additional security measures to reduce the risk of unauthorized access.

This scenario can later be extended to automation triggered by SF-related traces in Azure Monitor or Application Insights.
