Azure High Performance Computing (HPC) Blog

Running GPU accelerated workloads with NVIDIA GPU Operator on AKS

Dr. Wolfgang De Salvador - EMEA GBB HPC/AI Infrastructure Senior Specialist

Dr. Kai Neuffer - Principal Program Manager, Industry and Partner Sales - Energy Industry

Resources and references used in this article:

- About the NVIDIA GPU Operator — NVIDIA GPU Operator 23.9.1 documentation

- Use GPUs on Azure Kubernetes Service (AKS) - Azure Kubernetes Service | Microsoft Learn

- Create a multi-instance GPU node pool in Azure Kubernetes Service (AKS) - Azure Kubernetes Service |...

As of today, several options are available to run GPU accelerated HPC/AI workloads on Azure, ranging from training to inferencing.

Looking specifically at AI workloads, the most direct and managed way to access GPU resources and the related orchestration capabilities is Azure Machine Learning, through its distributed training capabilities and its deployment options for inferencing.

At the same time, specific HPC/AI workloads require a high degree of customization and granular control over the configuration of the compute resources, including the operating system, the system packages, the HPC/AI software stack and the drivers. This is the case, for example, of the training of the NVIDIA NeMo Megatron model or of MLPerf Training v3.0, described in previous blog posts by our benchmarking team.

In these scenarios, it is critical to be able to fine-tune the host configuration at the operating-system level, precisely matching the ideal configuration to get the most value out of the compute resources.

On Azure, HPC/AI workload orchestration on GPUs is supported by several Azure services, including Azure CycleCloud, Azure Batch and Azure Kubernetes Service (AKS).

Focus of the blog post

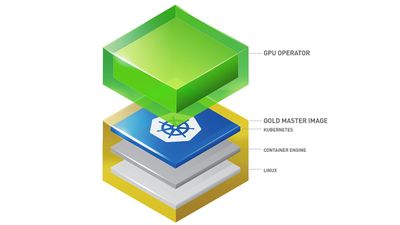

This article focuses on getting NVIDIA GPUs managed and configured in the best way on Azure Kubernetes Service (AKS) using NVIDIA GPU Operator.

The guide builds on the documentation already available on Microsoft Learn for configuring GPU nodes and multi-instance GPU profile nodes, as well as on the NVIDIA GPU Operator documentation.

However, the main scope of the article is to present a methodology to manage the GPU configuration entirely through native NVIDIA GPU Operator features, including:

- Driver versions and custom driver bundles

- Time-slicing for GPU oversubscription

- MIG profiles for supported GPUs, without having to define their behavior exclusively at node pool creation time

Deploying a vanilla AKS cluster

The standard way to deploy a vanilla AKS cluster is to follow the procedure described in the Azure documentation.

Please be aware that this command will create an AKS cluster with:

- Kubenet as the network plugin

- A public cluster endpoint

- Local accounts with Kubernetes RBAC

For production workloads, we strongly recommend reviewing the main AKS security concepts, for example:

- Use Azure CNI

- Evaluate using Private AKS Cluster to limit API exposure to the public internet

- Evaluate using Azure RBAC with Entra ID accounts or Kubernetes RBAC with Entra ID accounts

These topics are out of scope for this demo, so please be aware that the cluster created here is meant for NVIDIA GPU Operator demonstration purposes only.

Using the Azure CLI, we can create an AKS cluster with the following procedure (replace the values between angle brackets with your preferred values):

```shell
export RESOURCE_GROUP_NAME=<YOUR_RG_NAME>
export AKS_CLUSTER_NAME=<YOUR_AKS_CLUSTER_NAME>
export LOCATION=<YOUR_LOCATION>

## Following line to be used only if the Resource Group does not exist yet
az group create --name $RESOURCE_GROUP_NAME --location $LOCATION

az aks create --resource-group $RESOURCE_GROUP_NAME --name $AKS_CLUSTER_NAME --node-count 2 --generate-ssh-keys
```
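Since every command in this post relies on these variables, it can help to fail fast when one of them is empty or still holds an angle-bracket placeholder. The following is a minimal POSIX shell sketch; the function name check_placeholders is hypothetical and not part of the Azure CLI:

```shell
# Hypothetical helper (not part of Azure CLI): returns non-zero if any of the
# named variables is empty or still holds a <PLACEHOLDER> value.
check_placeholders() {
  cp_status=0
  for var_name in "$@"; do
    # Only accept plain shell identifiers before using eval below.
    case "$var_name" in
      ""|*[!A-Za-z0-9_]*)
        echo "Invalid variable name: '$var_name'" >&2
        cp_status=1
        continue
        ;;
    esac
    # POSIX-compatible indirect expansion of the variable named $var_name.
    eval "cp_value=\${$var_name:-}"
    case "$cp_value" in
      ""|*"<"*|*">"*)
        echo "$var_name is not set to a real value: '$cp_value'" >&2
        cp_status=1
        ;;
    esac
  done
  return "$cp_status"
}

# Usage, before running the az commands above:
#   check_placeholders RESOURCE_GROUP_NAME AKS_CLUSTER_NAME LOCATION
```

The check is purely textual: it only guards against forgotten placeholders and does not validate that the resource group or region actually exist.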

Connecting to the cluster

Several ways to connect to the AKS cluster are described in the Azure documentation.

Our favorite approach is using a Linux Ubuntu VM with Azure CLI installed.

From such a VM, we can run the commands below. In the login command, you may need to add --tenant <TENANT_ID> if you have access to multiple tenants, or --identity if the VM runs on Azure and you rely on an Azure Managed Identity:

```shell
## Add --tenant <TENANT_ID> in case of multiple tenants
## Add --identity in case of using a managed identity on the VM
az login
az aks install-cli
az aks get-credentials --resource-group $RESOURCE_GROUP_NAME --name $AKS_CLUSTER_NAME
```

Once this is completed, you should be able to run standard kubectl commands, such as:

```shell
kubectl get nodes
```

```
root@aks-gpu-playground-rg-jumpbox:~# kubectl get nodes
NAME                                STATUS   ROLES   AGE     VERSION
aks-nodepool1-25743550-vmss000000   Ready    agent   2d19h   v1.27.7
aks-nodepool1-25743550-vmss000001   Ready    agent   2d19h   v1.27.7
```

The command line is perfectly fine for all the operations in this blog post. However, if you would like a TUI experience, we suggest k9s, which can be easily installed on Linux following its installation instructions. On Ubuntu, you can install the version that was current at the time of writing with:

```shell
wget "https://github.com/derailed/k9s/releases/download/v0.31.9/k9s_linux_amd64.deb"
dpkg -i k9s_linux_amd64.deb
```

k9s makes it easy to interact with the different resources of the AKS cluster directly from a terminal user interface, and it is launched with the k9s command. Detailed documentation on how to navigate the different resources (Pods, DaemonSets, Nodes) is available on the official k9s documentation page.

Attaching an Azure Container Registry to the AKS cluster (only required for MIG and NVIDIA GPU Driver CRD)

If you will be using MIG or the NVIDIA GPU Driver CRD, you need to create a private Azure Container Registry and attach it to the AKS cluster.

```shell
export ACR_NAME=<ACR_NAME_OF_YOUR_CHOICE>

az acr create --resource-group $RESOURCE_GROUP_NAME \
  --name $ACR_NAME --sku Basic

az aks update --name $AKS_CLUSTER_NAME --resource-group $RESOURCE_GROUP_NAME --attach-acr $ACR_NAME
```

To pull images from and push images to this Container Registry with Docker, run the following command on a VM with the container engine installed, provided that the VM has a managed identity with AcrPull/AcrPush permissions:

```shell
az acr login --name $ACR_NAME
```

About taints for AKS GPU nodes

It is important to deeply understand taints and tolerations for GPU nodes in AKS, for two reasons:

- If Spot instances are used in the AKS cluster, AKS applies to them the taint kubernetes.azure.com/scalesetpriority=spot:NoSchedule

- In some cases, it may be useful to add a dedicated taint for GPU SKUs to the AKS cluster, like sku=gpu:NoSchedule

This GPU taint is useful mainly because, unlike on-premises and bare-metal Kubernetes clusters, AKS node pools are usually allowed to scale down to 0 instances. This means that when the AKS cluster autoscaler has to make a decision based on a “nvidia.com/gpu” resource request, it may struggle to identify the right node pool to scale up.

However, this latter point can also be addressed in a more elegant and specific way with a node affinity declaration in the spec of the Jobs or Pods requesting GPUs, for example:

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: node.kubernetes.io/instance-type
          operator: In
          values:
          - Standard_NC4as_T4_v3
```
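To make the interplay between taints and tolerations concrete, here is a deliberately simplified sketch in POSIX shell. It models only tolerations with operator Equal and an explicit effect, written as key=value:Effect strings; the function name untolerated_taints is hypothetical, and the real Kubernetes rules (operator Exists, empty effect, tolerationSeconds) are richer:

```shell
# Hypothetical, simplified model of Kubernetes taint/toleration matching.
# $1: space-separated node taints, e.g. "sku=gpu:NoSchedule"
# $2: space-separated Pod tolerations in the same key=value:Effect format
#     (operator Equal only). Prints every taint that no toleration matches.
untolerated_taints() {
  for taint in $1; do
    matched=no
    for toleration in $2; do
      # With operator Equal, key, value and effect must all be identical.
      if [ "$taint" = "$toleration" ]; then
        matched=yes
        break
      fi
    done
    if [ "$matched" = no ]; then
      echo "$taint"
    fi
  done
}

# Usage: a Spot GPU node versus a Pod that only tolerates the GPU taint.
#   untolerated_taints \
#     "kubernetes.azure.com/scalesetpriority=spot:NoSchedule sku=gpu:NoSchedule" \
#     "sku=gpu:NoSchedule"
#   prints: kubernetes.azure.com/scalesetpriority=spot:NoSchedule
```

Because a Pod can only be scheduled on a node whose NoSchedule taints are all tolerated, a Job that tolerates only sku=gpu cannot land on a Spot GPU node pool: it also needs the scalesetpriority toleration.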

Creating the first GPU pool

At this point, the AKS cluster only has the default node pool, with 2 nodes of Standard_DS2_v2 VMs.

To test NVIDIA GPU Operator and run a GPU-accelerated workload, we need to add a GPU node pool.

If the NVIDIA stack is meant to be managed by GPU Operator, it is critical that the node pool is created with the following tag, so that AKS skips its own GPU driver installation:

SkipGPUDriverInstall=true

This can be done from Azure Cloud Shell, for example with a Standard_NC4as_T4_v3 node pool that autoscales from 0 up to 1 node:

```shell
az aks nodepool add \
  --resource-group $RESOURCE_GROUP_NAME \
  --cluster-name $AKS_CLUSTER_NAME \
  --name nc4ast4 \
  --node-taints sku=gpu:NoSchedule \
  --node-vm-size Standard_NC4as_T4_v3 \
  --enable-cluster-autoscaler \
  --min-count 0 --max-count 1 --node-count 0 --tags SkipGPUDriverInstall=True
```

To deploy in Spot mode, add the following flags to the Azure CLI command:

```shell
--priority Spot --eviction-policy Delete --spot-max-price -1
```

A recently released preview feature removes the need for the tag, through the --skip-gpu-driver-install flag:

```shell
# Register the aks-preview extension
az extension add --name aks-preview

# Update the aks-preview extension
az extension update --name aks-preview

az aks nodepool add \
  --resource-group $RESOURCE_GROUP_NAME \
  --cluster-name $AKS_CLUSTER_NAME \
  --name nc4ast4 \
  --node-taints sku=gpu:NoSchedule \
  --node-vm-size Standard_NC4as_T4_v3 \
  --enable-cluster-autoscaler \
  --min-count 0 --max-count 1 --node-count 0 --skip-gpu-driver-install
```

At the end of the process, the new node pool should appear in the portal with the status “Succeeded”. The same can be checked from the CLI:

```shell
az aks nodepool list --cluster-name $AKS_CLUSTER_NAME --resource-group $RESOURCE_GROUP_NAME -o table
```

```
Name       OsType    KubernetesVersion    VmSize                Count    MaxPods    ProvisioningState    Mode
---------  --------  -------------------  --------------------  -------  ---------  -------------------  ------
nodepool1  Linux     1.27.7               Standard_DS2_v2       2        110        Succeeded            System
nc4ast4    Linux     1.27.7               Standard_NC4as_T4_v3  0        110        Succeeded            User
```

Install NVIDIA GPU operator

On the machine where kubectl is configured with the context set up above for the AKS cluster, run the following to install Helm:

```shell
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 \
  && chmod 700 get_helm.sh \
  && ./get_helm.sh
```

To fine-tune node feature recognition, we install Node Feature Discovery (NFD) separately from NVIDIA GPU Operator. NVIDIA GPU Operator requires the label feature.node.kubernetes.io/pci-10de.present=true on the GPU nodes (10de is the PCI vendor ID of NVIDIA). Moreover, it is important to configure the NFD worker so that it is scheduled also on Spot instances of the Kubernetes cluster and on nodes carrying the sku=gpu taint:

```shell
helm install --wait --create-namespace -n gpu-operator \
  node-feature-discovery node-feature-discovery \
  --repo https://kubernetes-sigs.github.io/node-feature-discovery/charts \
  --set-json master.config.extraLabelNs='["nvidia.com"]' \
  --set-json worker.tolerations='[{ "effect": "NoSchedule", "key": "sku", "operator": "Equal", "value": "gpu"},{"effect": "NoSchedule", "key": "kubernetes.azure.com/scalesetpriority", "value":"spot", "operator": "Equal"},{"effect": "NoSchedule", "key": "mig", "value":"notReady", "operator": "Equal"}]'
```

After deploying Node Feature Discovery, it is important to create a custom rule that precisely matches NVIDIA GPUs on the nodes. To do so, create a file called nfd-gpu-rule.yaml with the following content:

```yaml
apiVersion: nfd.k8s-sigs.io/v1alpha1
kind: NodeFeatureRule
metadata:
  name: nfd-gpu-rule
spec:
  rules:
  - name: "nfd-gpu-rule"
    labels:
      "feature.node.kubernetes.io/pci-10de.present": "true"
    matchFeatures:
    - feature: pci.device
      matchExpressions:
        vendor: {op: In, value: ["10de"]}
```

Then apply this rule to the AKS cluster:

```shell
kubectl apply -n gpu-operator -f nfd-gpu-rule.yaml
```

Next, add the NVIDIA Helm repository:

```shell
helm repo add nvidia https://helm.ngc.nvidia.com/nvidia && helm repo update
```

The next step is to install GPU Operator, remembering to set the same tolerations for the GPU Operator DaemonSets and to disable the deployment of Node Feature Discovery (nfd), which was already installed in the previous step:

```shell
helm install --wait --generate-name -n gpu-operator nvidia/gpu-operator \
  --set-json daemonsets.tolerations='[{ "effect": "NoSchedule", "key": "sku", "operator": "Equal", "value": "gpu"},{"effect": "NoSchedule", "key": "kubernetes.azure.com/scalesetpriority", "value":"spot", "operator": "Equal"},{"effect": "NoSchedule", "key": "mig", "value":"notReady", "operator": "Equal"}]' \
  --set nfd.enabled=false
```
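Both Helm commands above pass the same three tolerations. As a minimal sketch, assuming a POSIX shell, the list can be defined once and reused; the function names toleration and gpu_tolerations_json are hypothetical:

```shell
# Hypothetical helpers: build the toleration list shared by the NFD worker
# and the GPU Operator DaemonSets, as a JSON array suitable for --set-json.

# Prints one NoSchedule toleration with operator Equal: $1 = key, $2 = value.
toleration() {
  printf '{"effect":"NoSchedule","key":"%s","operator":"Equal","value":"%s"}' "$1" "$2"
}

# Prints the three tolerations used in this post: the GPU taint, the Spot
# taint, and the mig=notReady taint present during MIG configuration.
gpu_tolerations_json() {
  printf '[%s,%s,%s]\n' \
    "$(toleration sku gpu)" \
    "$(toleration kubernetes.azure.com/scalesetpriority spot)" \
    "$(toleration mig notReady)"
}

# Usage with the commands above, for example:
#   helm install ... --set-json worker.tolerations="$(gpu_tolerations_json)"
#   helm install ... --set-json daemonsets.tolerations="$(gpu_tolerations_json)"
```

Keeping a single definition avoids the NFD worker and the GPU Operator DaemonSets drifting apart when a new taint (for example for another node pool) is introduced.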

Running the first GPU example

Once the configuration has been completed, it is time to check that GPU Operator works by submitting a first GPU-accelerated Job on AKS. As a reference, we use the standard TensorFlow example that is also documented in the official AKS pages on Microsoft Learn.

Create a file called gpu-accelerated.yaml with this content:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app: samples-tf-mnist-demo
  name: samples-tf-mnist-demo
spec:
  template:
    metadata:
      labels:
        app: samples-tf-mnist-demo
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node.kubernetes.io/instance-type
                operator: In
                values:
                - Standard_NC4as_T4_v3
      containers:
      - name: samples-tf-mnist-demo
        image: mcr.microsoft.com/azuredocs/samples-tf-mnist-demo:gpu
        args: ["--max_steps", "500"]
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /tmp
          name: scratch
        resources:
          limits:
            nvidia.com/gpu: 1
      restartPolicy: OnFailure
      tolerations:
      - key: "sku"
        operator: "Equal"
        value: "gpu"
        effect: "NoSchedule"
      volumes:
      - name: scratch
        hostPath:
          # directory location on host
          path: /mnt/tmp
          # this field is optional
          type: DirectoryOrCreate
```

This job can be submitted with the following command:

```shell
kubectl apply -f gpu-accelerated.yaml
```

After approximately one minute the node should be automatically provisioned:

```
root@aks-gpu-playground-rg-jumpbox:~# kubectl get nodes
NAME                                STATUS   ROLES   AGE     VERSION
aks-nc4ast4-81279986-vmss000003     Ready    agent   2m38s   v1.27.7
aks-nodepool1-25743550-vmss000000   Ready    agent   4d16h   v1.27.7
aks-nodepool1-25743550-vmss000001   Ready    agent   4d16h   v1.27.7
```

We can check that Node Feature Discovery has properly labeled the node:

```
root@aks-gpu-playground-rg-jumpbox:~# kubectl describe nodes aks-nc4ast4-81279986-vmss000003 | grep pci-
                    feature.node.kubernetes.io/pci-0302_10de.present=true
                    feature.node.kubernetes.io/pci-10de.present=true
```

The NVIDIA GPU Operator DaemonSets then start preparing the node, installing the driver and the NVIDIA Container Toolkit and running all the related validations.

Once node preparation is completed, the GPU operator will add an allocatable GPU resource to the node:

```shell
kubectl describe nodes aks-nc4ast4-81279986-vmss000003
```

```
…
Allocatable:
  cpu:                3860m
  ephemeral-storage:  119703055367
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             24487780Ki
  nvidia.com/gpu:     1
  pods:               110
…
```
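Instead of re-running kubectl describe until the GPU resource appears, the wait can be scripted. This is a minimal sketch, assuming kubectl is configured as above; the function name wait_for_allocatable_gpus and its retry defaults are hypothetical:

```shell
# Hypothetical helper: polls a node until it advertises at least the expected
# number of allocatable nvidia.com/gpu resources, or gives up.
# Usage: wait_for_allocatable_gpus <node> <expected> [retries] [interval_s]
wait_for_allocatable_gpus() {
  node="$1"
  expected="$2"
  retries="${3:-60}"
  interval="${4:-10}"
  attempt=0
  while [ "$attempt" -lt "$retries" ]; do
    # The dot in the resource name must be escaped in kubectl JSONPath.
    gpus=$(kubectl get node "$node" \
      -o jsonpath='{.status.allocatable.nvidia\.com/gpu}' 2>/dev/null || true)
    if [ -n "$gpus" ] && [ "$gpus" -ge "$expected" ]; then
      echo "$node exposes $gpus allocatable GPU(s)"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$interval"
  done
  echo "Timed out waiting for $expected GPU(s) on $node" >&2
  return 1
}
```

For the node above, wait_for_allocatable_gpus aks-nc4ast4-81279986-vmss000003 1 returns as soon as the device plugin has registered the GPU; with the time-slicing configuration described later in this post, the expected value would be 2.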

We can then follow the progress of the job with kubectl logs:

```
root@aks-gpu-playground-rg-jumpbox:~# kubectl get pods
NAME                          READY   STATUS    RESTARTS   AGE
samples-tf-mnist-demo-tmpr4   1/1     Running   0          11m
root@aks-gpu-playground-rg-jumpbox:~# kubectl logs samples-tf-mnist-demo-tmpr4 --follow
2024-02-18 11:51:31.479768: I tensorflow/core/platform/cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2024-02-18 11:51:31.806125: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1030] Found device 0 with properties:
name: Tesla T4 major: 7 minor: 5 memoryClockRate(GHz): 1.59
pciBusID: 0001:00:00.0
totalMemory: 15.57GiB freeMemory: 15.47GiB
2024-02-18 11:51:31.806157: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120] Creating TensorFlow device (/device:GPU:0) -> (device: 0, name: Tesla T4, pci bus id: 0001:00:00.0, compute capability: 7.5)
2024-02-18 11:54:56.216820: I tensorflow/stream_executor/dso_loader.cc:139] successfully opened CUDA library libcupti.so.8.0 locally
Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.
Extracting /tmp/tensorflow/input_data/train-images-idx3-ubyte.gz
Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.
Extracting /tmp/tensorflow/input_data/train-labels-idx1-ubyte.gz
Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.
Extracting /tmp/tensorflow/input_data/t10k-images-idx3-ubyte.gz
Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.
Extracting /tmp/tensorflow/input_data/t10k-labels-idx1-ubyte.gz
Accuracy at step 0: 0.1201
Accuracy at step 10: 0.7364
…..
Accuracy at step 490: 0.9559
Adding run metadata for 499
```

Time-slicing configuration

An extremely useful feature of NVIDIA GPU Operator is time-slicing, which allows a physical GPU available on a node to be shared by multiple Pods. Of course, this is a partition in time, not a physical partition of the GPU: the GPU processes run by the different Pods receive proportional shares of GPU compute time. However, if one Pod is particularly demanding in terms of GPU processing, it will significantly impact the other Pods sharing the GPU.

The official NVIDIA GPU Operator documentation describes different ways to configure time-slicing. Here, since one of the benefits of a cloud environment is the possibility to have multiple node pools, each with a different GPU or configuration, we focus on a fine-grained definition of time-slicing at the node pool level.

Enabling time-slicing takes three steps:

- Label the nodes so that they can be referenced in the time-slicing configuration

- Create the time-slicing ConfigMap

- Enable time-slicing based on the ConfigMap in the GPU Operator cluster policy

As a first step, the nodes should be labelled with the key “nvidia.com/device-plugin.config”.

For example, let’s label our node pool from the Azure CLI:

```shell
az aks nodepool update --cluster-name $AKS_CLUSTER_NAME --resource-group $RESOURCE_GROUP_NAME --nodepool-name nc4ast4 --labels "nvidia.com/device-plugin.config=tesla-t4-ts2"
```

Next, let’s create the ConfigMap object that sets a time-slicing factor of 2 for this node pool, in a file called time-slicing-config.yaml:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: time-slicing-config
data:
  tesla-t4-ts2: |-
    version: v1
    flags:
      migStrategy: none
    sharing:
      timeSlicing:
        resources:
        - name: nvidia.com/gpu
          replicas: 2
```

Let’s apply the configuration in the GPU operator namespace:

```shell
kubectl apply -f time-slicing-config.yaml -n gpu-operator
```

Finally, let’s update the cluster policy to enable the time-slicing configuration:

```shell
kubectl patch clusterpolicy/cluster-policy \
  -n gpu-operator --type merge \
  -p '{"spec": {"devicePlugin": {"config": {"name": "time-slicing-config"}}}}'
```
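Because the cluster policy points to a single ConfigMap (time-slicing-config), and each node pool selects one of its keys through the nvidia.com/device-plugin.config label, enabling time-slicing on another node pool means adding another key to the same object. A minimal POSIX shell sketch that renders this ConfigMap from key=replicas pairs; the function name render_time_slicing_config and the tesla-t4-ts4 key in the usage line are hypothetical:

```shell
# Hypothetical helper: renders the time-slicing-config ConfigMap with one
# device-plugin configuration per argument, each given as <key>=<replicas>.
# <key> must match the nvidia.com/device-plugin.config label of a node pool.
render_time_slicing_config() {
  cat <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: time-slicing-config
data:
EOF
  for entry in "$@"; do
    key="${entry%%=*}"       # text before the first "="
    replicas="${entry#*=}"   # text after the first "="
    cat <<EOF
  ${key}: |-
    version: v1
    flags:
      migStrategy: none
    sharing:
      timeSlicing:
        resources:
        - name: nvidia.com/gpu
          replicas: ${replicas}
EOF
  done
}

# Usage:
#   render_time_slicing_config tesla-t4-ts2=2 tesla-t4-ts4=4 \
#     | kubectl apply -n gpu-operator -f -
```

With kubectl apply, keys omitted from a later apply are typically removed from the object, so it is safer to list every node pool configuration in a single call.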

Now, let’s resubmit the job used in the first example, this time with two parallel Pods, creating a file called gpu-accelerated-time-slicing.yaml:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app: samples-tf-mnist-demo-ts
  name: samples-tf-mnist-demo-ts
spec:
  completions: 2
  parallelism: 2
  completionMode: Indexed
  template:
    metadata:
      labels:
        app: samples-tf-mnist-demo-ts
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node.kubernetes.io/instance-type
                operator: In
                values:
                - Standard_NC4as_T4_v3
      containers:
      - name: samples-tf-mnist-demo
        image: mcr.microsoft.com/azuredocs/samples-tf-mnist-demo:gpu
        args: ["--max_steps", "500"]
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            nvidia.com/gpu: 1
      restartPolicy: OnFailure
      tolerations:
      - key: "sku"
        operator: "Equal"
        value: "gpu"
        effect: "NoSchedule"
```

Let’s submit the job with the standard syntax:

```shell
kubectl apply -f gpu-accelerated-time-slicing.yaml
```

Once the node has been provisioned, it exposes two allocatable GPU resources and runs the two Pods concurrently:

```shell
kubectl describe node aks-nc4ast4-81279986-vmss000004
```

```
...
Allocatable:
  cpu:                3860m
  ephemeral-storage:  119703055367
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             24487780Ki
  nvidia.com/gpu:     2
  pods:               110
.....
  Namespace     Name                                      CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
  ---------     ----                                      ------------  ----------  ---------------  -------------  ---
  default       samples-tf-mnist-demo-ts-0-4tdcf          0 (0%)        0 (0%)      0 (0%)           0 (0%)         29s
  default       samples-tf-mnist-demo-ts-1-67hn4          0 (0%)        0 (0%)      0 (0%)           0 (0%)         29s
  gpu-operator  gpu-feature-discovery-lksj7               0 (0%)        0 (0%)      0 (0%)           0 (0%)         59s
  gpu-operator  node-feature-discovery-worker-wbbct       0 (0%)        0 (0%)      0 (0%)           0 (0%)         8m11s
  gpu-operator  nvidia-container-toolkit-daemonset-8nmx7  0 (0%)        0 (0%)      0 (0%)           0 (0%)         7m24s
  gpu-operator  nvidia-dcgm-exporter-76rs8                0 (0%)        0 (0%)      0 (0%)           0 (0%)         7m24s
  gpu-operator  nvidia-device-plugin-daemonset-btwz7      0 (0%)        0 (0%)      0 (0%)           0 (0%)         55s
  gpu-operator  nvidia-driver-daemonset-8dkkh             0 (0%)        0 (0%)      0 (0%)           0 (0%)         8m6s
  gpu-operator  nvidia-operator-validator-s7294           0 (0%)        0 (0%)      0 (0%)           0 (0%)         7m24s
  kube-system   azure-ip-masq-agent-fjm5d                 100m (2%)     500m (12%)  50Mi (0%)        250Mi (1%)     9m18s
  kube-system   cloud-node-manager-9wpsm                  50m (1%)      0 (0%)      50Mi (0%)        512Mi (2%)     9m18s
  kube-system   csi-azuredisk-node-ckqw6                  30m (0%)      0 (0%)      60Mi (0%)        400Mi (1%)     9m18s
  kube-system   csi-azurefile-node-xmfbd                  30m (0%)      0 (0%)      60Mi (0%)        600Mi (2%)     9m18s
  kube-system   kube-proxy-7l856                          100m (2%)     0 (0%)      0 (0%)           0 (0%)         9m18s
```

A few remarks about time-slicing:

- It is critical, in this specific scenario, to benchmark and characterize your GPU workload. Time-slicing is a method to maximize resource utilization, not a way to multiply the available resources. We suggest carefully benchmarking GPU utilization and GPU memory usage to determine whether time-slicing is a valid solution. For example, if the average load of a specific GPU process is around 30%, a time-slicing factor of 2 or 3 could be evaluated

- CPU and RAM resources must, of course, also be taken into account

- In AKS, it is extremely important to note that when the time-slicing configuration is changed for a node pool that has no nodes allocated, the change is not immediately reflected in the next autoscaler operation.

For example, consider a node pool scaled down to zero with no time-slicing applied, which is then configured with a time-slicing factor of 2. A request for 2 GPU resources may still provision 2 nodes.

This happens because the autoscaler still assumes that each node provides only 1 allocatable GPU. Once a node correctly exposes 2 allocatable GPUs for the first time, the AKS autoscaler takes this into account in all subsequent autoscaling operations.
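The arithmetic behind these remarks can be sketched in a few lines of POSIX shell. Both function names are hypothetical: max_time_slicing_replicas encodes only a rough rule of thumb (an assumption consistent with the 30% example above, not an NVIDIA formula, and no substitute for benchmarking), and nodes_needed is a simplified view of how many nodes the autoscaler provisions for a given per-node GPU count:

```shell
# Hypothetical rule of thumb (an assumption, not an NVIDIA formula): an upper
# bound for the time-slicing factor from the measured average GPU utilization.
max_time_slicing_replicas() {
  avg_util_pct="$1"
  if [ "$avg_util_pct" -le 0 ] || [ "$avg_util_pct" -gt 100 ]; then
    echo "utilization must be in 1..100" >&2
    return 1
  fi
  echo $(( 100 / avg_util_pct ))   # integer division rounds down
}

# Simplified view of the autoscaler arithmetic: nodes needed to satisfy a GPU
# request, given the allocatable GPUs it believes each node offers.
nodes_needed() {
  requested_gpus="$1"
  gpus_per_node="$2"
  echo $(( (requested_gpus + gpus_per_node - 1) / gpus_per_node ))  # ceiling
}
```

With the numbers from the example above, nodes_needed 2 1 prints 2 (the autoscaler's view before a time-sliced node has ever been observed), while nodes_needed 2 2 prints 1. The real autoscaler also simulates CPU, memory and Pod placement, so treat this only as an illustration.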

Multi-Instance GPU (MIG)

NVIDIA Multi-Instance GPU (MIG) allows supported GPUs of the Ampere and Hopper architectures to be partitioned at the hardware level, rather than by time-slicing. As a result, Pods can access a dedicated portion of the GPU resources whose boundaries are enforced in hardware.

In Kubernetes, two strategies are available for MIG: single and mixed.

With the single strategy, nodes expose MIG devices as standard “nvidia.com/gpu” resources.

With the mixed strategy, nodes expose the specific MIG profiles as resources, as in the example below:

```
Allocatable:
  nvidia.com/mig-1g.5gb:   1
  nvidia.com/mig-2g.10gb:  1
  nvidia.com/mig-3g.20gb:  1
```

To use MIG, you could follow the standard AKS documentation. Here, however, we propose a method that relies entirely on NVIDIA GPU Operator.

As a first step, mig-manager must be allowed to reboot the nodes so that the MIG configuration gets enabled:

```shell
kubectl patch clusterpolicy/cluster-policy -n gpu-operator --type merge \
  -p '{"spec": {"migManager": {"env": [{"name": "WITH_REBOOT", "value": "true"}]}}}'
```

Next, let’s create a node pool powered by a MIG-capable GPU, such as the Standard_NC24ads_A100_v4 SKU, and label it with one of the MIG profiles available for the A100 80GB:

```shell
az aks nodepool add \
  --resource-group $RESOURCE_GROUP_NAME \
  --cluster-name $AKS_CLUSTER_NAME \
  --name nc24a100v4 \
  --node-taints sku=gpu:NoSchedule \
  --node-vm-size Standard_NC24ads_A100_v4 \
  --enable-cluster-autoscaler \
  --min-count 0 --max-count 1 --node-count 0 --skip-gpu-driver-install \
  --labels "nvidia.com/mig.config=all-1g.10gb"
```
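MIG profile names encode their size: in <N>g.<M>gb, N is the number of compute slices and M the memory in GB. An A100 has 7 compute slices, and an A100 80GB has 8 memory slices of roughly 10 GB. As a simplified sketch, valid only for uniform all-<profile> layouts on an A100 80GB and not handling variants such as +me profiles, the number of instances is bounded by both resources; the function name mig_instances_a100_80gb is hypothetical, and the authoritative list of supported profiles is the NVIDIA MIG user guide:

```shell
# Hypothetical, simplified model (uniform layouts on an A100 80GB only):
# 7 compute slices and 8 memory slices of roughly 10 GB each; a profile
# <N>g.<M>gb consumes N compute slices and M/10 memory slices.
mig_instances_a100_80gb() {
  profile="$1"
  compute="${profile%%g.*}"       # "3g.40gb" -> "3"
  memory_gb="${profile#*g.}"      # "3g.40gb" -> "40gb"
  memory_gb="${memory_gb%gb}"     # "40gb"    -> "40"
  if [ "$compute" -lt 1 ] || [ "$memory_gb" -lt 10 ]; then
    echo "unsupported profile for this model: $profile" >&2
    return 1
  fi
  by_compute=$((7 / compute))
  by_memory=$((8 / (memory_gb / 10)))
  # The layout is limited by whichever resource runs out first.
  if [ "$by_compute" -lt "$by_memory" ]; then
    echo "$by_compute"
  else
    echo "$by_memory"
  fi
}
```

For the all-1g.10gb label used above this yields 7, which matches the 7 allocatable nvidia.com/gpu resources the node exposes once MIG is configured with the single strategy.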

There is another important detail to consider at this stage with AKS: autoscaling brings up nodes with a standard GPU configuration, without MIG enabled. NVIDIA GPU Operator first installs the drivers, and then mig-manager applies the selected MIG profile and reboots the node. Between these two phases there is a short time window in which the node exposes GPU resources, and this could potentially trigger the execution of a job.

To handle this scenario on AKS, an additional DaemonSet is needed that prevents any Pod from being scheduled during the MIG configuration. It is available in a dedicated repository.

To deploy the DaemonSet:

```shell
export NAMESPACE=gpu-operator
export ACR_NAME=<YOUR_ACR_NAME>

git clone https://github.com/wolfgang-desalvador/aks-mig-monitor.git
cd aks-mig-monitor

sed -i "s/<ACR_NAME>/$ACR_NAME/g" mig-monitor-daemonset.yaml
sed -i "s/<NAMESPACE>/$NAMESPACE/g" mig-monitor-roles.yaml

docker build . -t $ACR_NAME/aks-mig-monitor
docker push $ACR_NAME/aks-mig-monitor

kubectl apply -f mig-monitor-roles.yaml -n $NAMESPACE
kubectl apply -f mig-monitor-daemonset.yaml -n $NAMESPACE
```

We can now submit a job defined in a file called mig-accelerated-job.yaml:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app: samples-tf-mnist-demo-mig
  name: samples-tf-mnist-demo-mig
spec:
  completions: 7
  parallelism: 7
  completionMode: Indexed
  template:
    metadata:
      labels:
        app: samples-tf-mnist-demo-mig
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: node.kubernetes.io/instance-type
                operator: In
                values:
                - Standard_NC24ads_A100_v4
      containers:
      - name: samples-tf-mnist-demo
        image: mcr.microsoft.com/azuredocs/samples-tf-mnist-demo:gpu
        args: ["--max_steps", "500"]
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            nvidia.com/gpu: 1
      restartPolicy: OnFailure
      tolerations:
      - key: "sku"
        operator: "Equal"
        value: "gpu"
        effect: "NoSchedule"
```

Then submit the job with kubectl:

```shell
kubectl apply -f mig-accelerated-job.yaml
```
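The node goes through several phases, shown below, before the Pods can be scheduled. Rather than polling kubectl describe, a script can wait for mig-manager to set the nvidia.com/mig.config.state label to success. A minimal sketch, assuming kubectl is configured as above; the function name wait_for_mig_success and its retry defaults are hypothetical:

```shell
# Hypothetical helper: waits until mig-manager reports
# nvidia.com/mig.config.state=success on the given node.
# Usage: wait_for_mig_success <node> [retries] [interval_s]
wait_for_mig_success() {
  node="$1"
  retries="${2:-90}"
  interval="${3:-10}"
  attempt=0
  state=""
  while [ "$attempt" -lt "$retries" ]; do
    # Dots inside the label key must be escaped in kubectl JSONPath.
    state=$(kubectl get node "$node" \
      -o jsonpath='{.metadata.labels.nvidia\.com/mig\.config\.state}' 2>/dev/null || true)
    if [ "$state" = "success" ]; then
      echo "$node: MIG configuration applied"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep "$interval"
  done
  echo "$node: timed out, last MIG state was '$state'" >&2
  return 1
}
```

For example, wait_for_mig_success aks-nc24a100v4-42670331-vmss00000a keeps polling while the label reads rebooting and returns once it reads success. Note that the node name is only known after the autoscaler has provisioned it.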

When the node starts up, it initially carries the taint mig=notReady:NoSchedule, since the MIG configuration is not complete yet, while the GPU Operator containers are installed:

```shell
kubectl describe nodes aks-nc24a100v4-42670331-vmss00000a
```

```
Name:               aks-nc24a100v4-42670331-vmss00000a
...
                    nvidia.com/mig.config=all-1g.10gb
...
Taints:             kubernetes.azure.com/scalesetpriority=spot:NoSchedule
                    mig=notReady:NoSchedule
                    sku=gpu:NoSchedule
...
Non-terminated Pods:          (13 in total)
  Namespace     Name                                      CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
  ---------     ----                                      ------------  ----------  ---------------  -------------  ---
  gpu-operator  aks-mig-monitor-64zpl                     0 (0%)        0 (0%)      0 (0%)           0 (0%)         16s
  gpu-operator  gpu-feature-discovery-wpd2j               0 (0%)        0 (0%)      0 (0%)           0 (0%)         13s
  gpu-operator  node-feature-discovery-worker-79h68       0 (0%)        0 (0%)      0 (0%)           0 (0%)         16s
  gpu-operator  nvidia-container-toolkit-daemonset-q5p9k  0 (0%)        0 (0%)      0 (0%)           0 (0%)         12s
  gpu-operator  nvidia-dcgm-exporter-9g5kg                0 (0%)        0 (0%)      0 (0%)           0 (0%)         13s
  gpu-operator  nvidia-device-plugin-daemonset-5wpzk      0 (0%)        0 (0%)      0 (0%)           0 (0%)         13s
  gpu-operator  nvidia-driver-daemonset-kqkzb             0 (0%)        0 (0%)      0 (0%)           0 (0%)         13s
  gpu-operator  nvidia-operator-validator-lx77m           0 (0%)        0 (0%)      0 (0%)           0 (0%)         12s
  kube-system   azure-ip-masq-agent-7rd2x                 100m (0%)     500m (2%)   50Mi (0%)        250Mi (0%)     66s
  kube-system   cloud-node-manager-dc756                  50m (0%)      0 (0%)      50Mi (0%)        512Mi (0%)     66s
  kube-system   csi-azuredisk-node-5b4nk                  30m (0%)      0 (0%)      60Mi (0%)        400Mi (0%)     66s
  kube-system   csi-azurefile-node-vlwhv                  30m (0%)      0 (0%)      60Mi (0%)        600Mi (0%)     66s
  kube-system   kube-proxy-4fkxh                          100m (0%)     0 (0%)      0 (0%)           0 (0%)         66s
```

Once the GPU Operator configuration is complete, mig-manager is deployed. The MIG configuration is applied and the node is then put into a rebooting state:

```shell
kubectl describe nodes aks-nc24a100v4-42670331-vmss00000a
```

```
                    nvidia.com/mig.config=all-1g.10gb
                    nvidia.com/mig.strategy=single
                    nvidia.com/mig.config.state=rebooting
...
Taints:             kubernetes.azure.com/scalesetpriority=spot:NoSchedule
                    mig=notReady:NoSchedule
                    sku=gpu:NoSchedule
...
Non-terminated Pods:          (14 in total)
  Namespace     Name                                      CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
  ---------     ----                                      ------------  ----------  ---------------  -------------  ---
  gpu-operator  aks-mig-monitor-64zpl                     0 (0%)        0 (0%)      0 (0%)           0 (0%)         4m6s
  gpu-operator  gpu-feature-discovery-6btwx               0 (0%)        0 (0%)      0 (0%)           0 (0%)         3m33s
  gpu-operator  node-feature-discovery-worker-79h68       0 (0%)        0 (0%)      0 (0%)           0 (0%)         4m6s
  gpu-operator  nvidia-container-toolkit-daemonset-wplkb  0 (0%)        0 (0%)      0 (0%)           0 (0%)         3m33s
  gpu-operator  nvidia-dcgm-exporter-vnscq                0 (0%)        0 (0%)      0 (0%)           0 (0%)         3m33s
  gpu-operator  nvidia-device-plugin-daemonset-d86dn      0 (0%)        0 (0%)      0 (0%)           0 (0%)         3m33s
  gpu-operator  nvidia-driver-daemonset-kqkzb             0 (0%)        0 (0%)      0 (0%)           0 (0%)         4m3s
  gpu-operator  nvidia-mig-manager-t4bw9                  0 (0%)        0 (0%)      0 (0%)           0 (0%)         2s
  gpu-operator  nvidia-operator-validator-jrfkn           0 (0%)        0 (0%)      0 (0%)           0 (0%)         3m33s
  kube-system   azure-ip-masq-agent-7rd2x                 100m (0%)     500m (2%)   50Mi (0%)        250Mi (0%)     4m56s
  kube-system   cloud-node-manager-dc756                  50m (0%)      0 (0%)      50Mi (0%)        512Mi (0%)     4m56s
  kube-system   csi-azuredisk-node-5b4nk                  30m (0%)      0 (0%)      60Mi (0%)        400Mi (0%)     4m56s
  kube-system   csi-azurefile-node-vlwhv                  30m (0%)      0 (0%)      60Mi (0%)        600Mi (0%)     4m56s
  kube-system   kube-proxy-4fkxh                          100m (0%)     0 (0%)      0 (0%)           0 (0%)         4m56s
```

After the reboot, the MIG configuration state switches to "success" and the mig=notReady taint is removed. Scheduling of the 7 Pods of our job then starts:

kubectl describe nodes aks-nc24a100v4-42670331-vmss00000a

...

nvidia.com/mig.capable=true

nvidia.com/mig.config=all-1g.10gb

nvidia.com/mig.config.state=success

nvidia.com/mig.strategy=single

...

Taints: kubernetes.azure.com/scalesetpriority=spot:NoSchedule

sku=gpu:NoSchedule

...

Allocatable:

cpu: 23660m

ephemeral-storage: 119703055367

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 214295444Ki

nvidia.com/gpu: 7

pods: 110

...

Non-terminated Pods: (21 in total)

Namespace Name CPU Requests CPU Limits Memory Requests Memory Limits Age

--------- ---- ------------ ---------- --------------- ------------- ---

default samples-tf-mnist-demo-ts-0-5bs64 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

default samples-tf-mnist-demo-ts-1-2msdh 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

default samples-tf-mnist-demo-ts-2-ck8c8 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

default samples-tf-mnist-demo-ts-3-dlkfn 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

default samples-tf-mnist-demo-ts-4-899fr 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

default samples-tf-mnist-demo-ts-5-dmgpn 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

default samples-tf-mnist-demo-ts-6-pvzm4 0 (0%) 0 (0%) 0 (0%) 0 (0%) 11m

gpu-operator aks-mig-monitor-64zpl 0 (0%) 0 (0%) 0 (0%) 0 (0%) 9m9s

gpu-operator gpu-feature-discovery-5t9gn 0 (0%) 0 (0%) 0 (0%) 0 (0%) 41s

gpu-operator node-feature-discovery-worker-79h68 0 (0%) 0 (0%) 0 (0%) 0 (0%) 9m9s

gpu-operator nvidia-container-toolkit-daemonset-82dgg 0 (0%) 0 (0%) 0 (0%) 0 (0%) 2m22s

gpu-operator nvidia-dcgm-exporter-xbxqf 0 (0%) 0 (0%) 0 (0%) 0 (0%) 41s

gpu-operator nvidia-device-plugin-daemonset-8gkzd 0 (0%) 0 (0%) 0 (0%) 0 (0%) 41s

gpu-operator nvidia-driver-daemonset-kqkzb 0 (0%) 0 (0%) 0 (0%) 0 (0%) 9m6s

gpu-operator nvidia-mig-manager-jbqls 0 (0%) 0 (0%) 0 (0%) 0 (0%) 2m22s

gpu-operator nvidia-operator-validator-5rdbh 0 (0%) 0 (0%) 0 (0%) 0 (0%) 41s

kube-system azure-ip-masq-agent-7rd2x 100m (0%) 500m (2%) 50Mi (0%) 250Mi (0%) 9m59s

kube-system cloud-node-manager-dc756 50m (0%) 0 (0%) 50Mi (0%) 512Mi (0%) 9m59s

kube-system csi-azuredisk-node-5b4nk 30m (0%) 0 (0%) 60Mi (0%) 400Mi (0%) 9m59s

kube-system csi-azurefile-node-vlwhv 30m (0%) 0 (0%) 60Mi (0%) 600Mi (0%) 9m59s

kube-system kube-proxy-4fkxh 100m (0%) 0 (0%) 0 (0%) 0 (0%) 9m59s
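Instead of re-running kubectl describe until the state changes, the wait can be scripted. The sketch below uses hypothetical helpers that are not part of the GPU Operator: label_value extracts one label from the comma-separated LABELS column printed by kubectl get nodes --show-labels, and wait_for_mig_success polls the nvidia.com/mig.config.state label every 10 seconds (an arbitrary interval) until it reads success.

```shell
# Hypothetical helpers (not part of the GPU Operator).
# label_value LABELS KEY: print the value of KEY from a comma-separated
# "key=value" list, as shown in the LABELS column of
# `kubectl get nodes --show-labels`.
label_value() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F= -v k="$2" '$1 == k { print substr($0, length(k) + 2); exit }'
}

# wait_for_mig_success NODE [ATTEMPTS]: poll every 10 seconds until the
# nvidia.com/mig.config.state label of NODE reads "success".
wait_for_mig_success() {
  node="$1"
  attempts="${2:-60}"
  while [ "$attempts" -gt 0 ]; do
    # The labels are the last column of the --show-labels output.
    labels=$(kubectl get node "$node" --no-headers --show-labels | awk '{ print $NF }')
    state=$(label_value "$labels" nvidia.com/mig.config.state)
    echo "MIG state on ${node}: ${state:-<unset>}"
    [ "$state" = "success" ] && return 0
    attempts=$((attempts - 1))
    sleep 10
  done
  return 1
}

# Usage:
#   wait_for_mig_success aks-nc24a100v4-42670331-vmss00000a
```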

Running nvidia-smi on the node shows the 7 MIG GPU partitions:

nvidia-smi

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.129.03 Driver Version: 535.129.03 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA A100 80GB PCIe On | 00000001:00:00.0 Off | On |

| N/A 27C P0 71W / 300W | 726MiB / 81920MiB | N/A Default |

| | | Enabled |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| MIG devices: |

+------------------+--------------------------------+-----------+-----------------------+

| GPU GI CI MIG | Memory-Usage | Vol| Shared |

| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |

| | | ECC| |

|==================+================================+===========+=======================|

| 0 7 0 0 | 102MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 8 0 1 | 104MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 9 0 2 | 104MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 10 0 3 | 104MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 11 0 4 | 104MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 12 0 5 | 104MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 13 0 6 | 104MiB / 9728MiB | 14 0 | 1 0 0 0 0 |

| | 2MiB / 16383MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| 0 7 0 28988 C python 82MiB |

| 0 8 0 29140 C python 84MiB |

| 0 9 0 29335 C python 84MiB |

| 0 10 0 29090 C python 84MiB |

| 0 11 0 29031 C python 84MiB |

| 0 12 0 29190 C python 84MiB |

| 0 13 0 29255 C python 84MiB |

+---------------------------------------------------------------------------------------+
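The same check can be scripted from the shorter nvidia-smi -L listing. This is a sketch assuming that MIG devices appear in that listing on indented lines starting with MIG followed by the profile name (for example "  MIG 1g.10gb     Device  0: (UUID: MIG-...)"); count_mig_devices and mig_profiles are hypothetical helpers that read the listing from stdin.

```shell
# Hypothetical helpers: read `nvidia-smi -L` output on stdin.
# count_mig_devices prints the number of MIG device lines (0 if none).
count_mig_devices() {
  grep -c '^[[:space:]]*MIG ' || true
}

# mig_profiles prints "<profile> <count>" for each MIG profile found.
mig_profiles() {
  awk '/^[[:space:]]*MIG / { n[$2]++ } END { for (p in n) print p, n[p] }'
}

# Usage on the node:
#   nvidia-smi -L | count_mig_devices   # 7 expected with all-1g.10gb
#   nvidia-smi -L | mig_profiles
```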

A few remarks about MIG to take into account:

- MIG provides hardware-level GPU partitioning, so the GPU instance assigned to a Pod is fully reserved for that Pod

- CPU and RAM resources must still be taken into account: MIG does not partition them, so they should be assigned through the standard Kubernetes requests and limits on AKS

- In AKS it is extremely important to note that once the MIG configuration is changed for a node pool with no allocated nodes, the change is not immediately reflected in the next autoscaler operation. This means that, after MIG is first activated as described above, requesting 7 GPUs on a node pool scaled down to 0 may bring up 7 nodes

- The DaemonSets described above only prevent scheduling during the boot phase of a node provisioned by the autoscaler. If the MIG profile needs to be changed afterwards by changing the MIG label, the node should be cordoned first. The label must be changed at AKS node pool level (using az aks nodepool update) if it was set through Azure CLI, or on each single node (using kubectl patch nodes) if it was set with kubectl
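The arithmetic behind the autoscaler remark can be made explicit. The lookup below assumes the maximum instance counts for a single A100 80GB GPU listed in the NVIDIA MIG user guide; only all-1g.10gb and all-1g.20gb are exercised in this article, matching the 7 and 4 devices reported by nvidia-smi. mig_slices_a100_80gb and nodes_needed are hypothetical helpers.

```shell
# Hypothetical helpers.
# mig_slices_a100_80gb PROFILE: maximum MIG instances for an "all-<profile>"
# configuration on one A100 80GB GPU (values from the NVIDIA MIG user guide).
mig_slices_a100_80gb() {
  case "$1" in
    all-1g.10gb) echo 7 ;;
    all-1g.20gb) echo 4 ;;
    all-2g.20gb) echo 3 ;;
    all-3g.40gb) echo 2 ;;
    all-4g.40gb | all-7g.80gb) echo 1 ;;
    *) echo "unknown profile: $1" >&2; return 1 ;;
  esac
}

# nodes_needed PODS GPUS_PER_NODE: nodes required to place PODS single-GPU
# Pods when each node exposes GPUS_PER_NODE GPUs (ceiling division).
nodes_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}
```

With all-1g.10gb, nodes_needed 7 "$(mig_slices_a100_80gb all-1g.10gb)" gives 1 node, whereas if each new node is assumed to expose a single GPU, nodes_needed 7 1 gives 7 nodes, which corresponds to the behavior described above.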

For example, to move the node used above to another profile, it is important to cordon it first:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nc24a100v4-42670331-vmss00000c Ready agent 11m v1.27.7

aks-nodepool1-25743550-vmss000000 Ready agent 6d16h v1.27.7

aks-nodepool1-25743550-vmss000001 Ready agent 6d16h v1.27.7

kubectl cordon aks-nc24a100v4-42670331-vmss00000c

node/aks-nc24a100v4-42670331-vmss00000c cordoned

Be aware that cordoning a node does not stop or evict the Pods already running on it. Verify that no GPU-accelerated workload is running before submitting the label change.

Since in our case the label was applied at AKS node pool level, we need to change it through Azure CLI:

az aks nodepool update --cluster-name $AKS_CLUSTER_NAME --resource-group $RESOURCE_GROUP_NAME --nodepool-name nc24a100v4 --labels "nvidia.com/mig.config"="all-1g.20gb"

This triggers a MIG reconfiguration, after which nvidia-smi shows the new profile applied, with four 1g.20gb instances:

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.129.03 Driver Version: 535.129.03 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA A100 80GB PCIe On | 00000001:00:00.0 Off | On |

| N/A 42C P0 77W / 300W | 50MiB / 81920MiB | N/A Default |

| | | Enabled |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| MIG devices: |

+------------------+--------------------------------+-----------+-----------------------+

| GPU GI CI MIG | Memory-Usage | Vol| Shared |

| ID ID Dev | BAR1-Usage | SM Unc| CE ENC DEC OFA JPG |

| | | ECC| |

|==================+================================+===========+=======================|

| 0 3 0 0 | 12MiB / 19968MiB | 14 0 | 1 0 1 0 0 |

| | 0MiB / 32767MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 4 0 1 | 12MiB / 19968MiB | 14 0 | 1 0 1 0 0 |

| | 0MiB / 32767MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 5 0 2 | 12MiB / 19968MiB | 14 0 | 1 0 1 0 0 |

| | 0MiB / 32767MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

| 0 6 0 3 | 12MiB / 19968MiB | 14 0 | 1 0 1 0 0 |

| | 0MiB / 32767MiB | | |

+------------------+--------------------------------+-----------+-----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| No running processes found |

+---------------------------------------------------------------------------------------+

We can then uncordon the node(s):

kubectl uncordon aks-nc24a100v4-42670331-vmss00000c

node/aks-nc24a100v4-42670331-vmss00000c uncordoned
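The cordon, relabel and uncordon sequence above can be wrapped in a single function. This is a sketch under several assumptions: AKS_CLUSTER_NAME and RESOURCE_GROUP_NAME are set as in the rest of the article, the MIG label is managed at node pool level, a Pod counts as a GPU workload when one of its container limits mentions nvidia.com/gpu, and the MIG manager moves nvidia.com/mig.config.state away from success while it reconfigures. If the reconfiguration completes before the first poll, the first loop simply waits until MAX_POLLS is reached. The function names and the POLL_SECONDS/MAX_POLLS variables are hypothetical.

```shell
# Hypothetical helpers sketching the MIG profile change described above.

# mig_state NODE: current value of the nvidia.com/mig.config.state label.
mig_state() {
  kubectl get node "$1" -o jsonpath='{.metadata.labels.nvidia\.com/mig\.config\.state}'
}

# gpu_pods_on_node NODE: running Pods whose container limits mention nvidia.com/gpu.
gpu_pods_on_node() {
  kubectl get pods --all-namespaces \
    --field-selector "spec.nodeName=$1,status.phase=Running" \
    -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name} {.spec.containers[*].resources.limits}{"\n"}{end}' |
    grep 'nvidia.com/gpu' || true
}

# change_mig_profile NODE NODEPOOL PROFILE
change_mig_profile() {
  node="$1"; pool="$2"; profile="$3"
  poll="${POLL_SECONDS:-10}"; max="${MAX_POLLS:-60}"

  kubectl cordon "$node" || return 1
  if [ -n "$(gpu_pods_on_node "$node")" ]; then
    echo "GPU Pods still running on $node: not relabeling (node left cordoned)" >&2
    return 1
  fi

  az aks nodepool update --cluster-name "$AKS_CLUSTER_NAME" \
    --resource-group "$RESOURCE_GROUP_NAME" --nodepool-name "$pool" \
    --labels "nvidia.com/mig.config=$profile" || return 1

  # Give the MIG manager time to pick up the change (state leaves "success")...
  i=0
  while [ "$(mig_state "$node")" = "success" ] && [ "$i" -lt "$max" ]; do
    i=$((i + 1)); sleep "$poll"
  done
  # ...then wait until the new profile has been applied.
  i=0
  until [ "$(mig_state "$node")" = "success" ]; do
    i=$((i + 1))
    if [ "$i" -gt "$max" ]; then
      echo "Timed out waiting for MIG reconfiguration on $node" >&2
      return 1
    fi
    sleep "$poll"
  done

  kubectl uncordon "$node"
}

# Usage:
#   change_mig_profile aks-nc24a100v4-42670331-vmss00000c nc24a100v4 all-1g.20gb
```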

Using NVIDIA GPU Driver CRD (preview)

The NVIDIA GPU Driver CRD makes it possible to define, at a granular level, the driver version and the driver image used on each node pool of an AKS cluster. As stated in the NVIDIA GPU Operator documentation, this feature is in preview and is not recommended by NVIDIA for production systems.

To enable the NVIDIA GPU Driver CRD, run the following command (if the NVIDIA GPU Operator is already installed, you will first need to remove it with helm uninstall, taking care of any running workloads):

helm install --wait --generate-name -n gpu-operator nvidia/gpu-operator --set-json daemonsets.tolerations='[{"effect": "NoSchedule", "key": "sku", "operator": "Equal", "value": "gpu" }, {"effect": "NoSchedule", "key": "kubernetes.azure.com/scalesetpriority", "value":"spot", "operator": "Equal"}]' --set nfd.enabled=false --set driver.nvidiaDriverCRD.deployDefaultCR=false --set driver.nvidiaDriverCRD.enabled=true
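If a GPU Operator release is already present, the helm uninstall step mentioned above can be scripted and run before the install command. A minimal sketch, assuming the existing release was also created with --generate-name, so that its name starts with gpu-operator (helm list --filter takes a regular expression); uninstall_gpu_operator is a hypothetical helper, and running GPU workloads must still be handled first.

```shell
# Hypothetical helper: uninstall every Helm release in the gpu-operator
# namespace whose name starts with "gpu-operator".
uninstall_gpu_operator() {
  for release in $(helm list -n gpu-operator -q --filter '^gpu-operator'); do
    helm uninstall "$release" -n gpu-operator
  done
}
```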

Next, create node pools carrying a label that will be used to select the driver version for their nodes (in this case "driver.config"):

az aks nodepool add \
  --resource-group $RESOURCE_GROUP_NAME \
  --cluster-name $AKS_CLUSTER_NAME \
  --name nc4latest \
  --node-taints sku=gpu:NoSchedule \
  --node-vm-size Standard_NC4as_T4_v3 \
  --enable-cluster-autoscaler \
  --labels "driver.config"="latest" \
  --min-count 0 --max-count 1 --node-count 0 --tags SkipGPUDriverInstall=True

az aks nodepool add \
  --resource-group $RESOURCE_GROUP_NAME \
  --cluster-name $AKS_CLUSTER_NAME \
  --name nc4stable \
  --node-taints sku=gpu:NoSchedule \
  --node-vm-size Standard_NC4as_T4_v3 \
  --enable-cluster-autoscaler \
  --labels "driver.config"="stable" \
  --min-count 0 --max-count 1 --node-count 0 --tags SkipGPUDriverInstall=True

Then create the driver configuration (one NVIDIADriver object per node pool), for example with a file called driver-config.yaml containing the following:

apiVersion: nvidia.com/v1alpha1
kind: NVIDIADriver
metadata:
  name: nc4-latest
spec:
  driverType: gpu
  env: []
  image: driver
  imagePullPolicy: IfNotPresent
  imagePullSecrets: []
  manager: {}
  tolerations:
  - key: "sku"
    operator: "Equal"
    value: "gpu"
    effect: "NoSchedule"
  - key: "kubernetes.azure.com/scalesetpriority"
    operator: "Equal"
    value: "spot"
    effect: "NoSchedule"
  nodeSelector:
    driver.config: "latest"
  repository: nvcr.io/nvidia
  version: "535.129.03"
---
apiVersion: nvidia.com/v1alpha1
kind: NVIDIADriver
metadata:
  name: nc4-stable
spec:
  driverType: gpu
  env: []
  image: driver
  imagePullPolicy: IfNotPresent
  imagePullSecrets: []
  manager: {}
  tolerations:
  - key: "sku"
    operator: "Equal"
    value: "gpu"
    effect: "NoSchedule"
  - key: "kubernetes.azure.com/scalesetpriority"
    operator: "Equal"
    value: "spot"
    effect: "NoSchedule"
  nodeSelector:
    driver.config: "stable"
  repository: nvcr.io/nvidia
  version: "535.104.12"

This can then be applied with kubectl:

kubectl apply -f driver-config.yaml -n gpu-operator
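The two NVIDIADriver objects in driver-config.yaml differ only in name, node selector value and driver version, so they can also be generated. The function below is a hypothetical helper that prints the same manifest for one node pool; its output can be piped to kubectl apply.

```shell
# Hypothetical helper: nvidia_driver_cr NAME DRIVER_CONFIG REPOSITORY VERSION
# prints an NVIDIADriver manifest equivalent to the ones in driver-config.yaml.
nvidia_driver_cr() {
  name="$1"; selector="$2"; repository="$3"; version="$4"
  cat <<EOF
apiVersion: nvidia.com/v1alpha1
kind: NVIDIADriver
metadata:
  name: ${name}
spec:
  driverType: gpu
  env: []
  image: driver
  imagePullPolicy: IfNotPresent
  imagePullSecrets: []
  manager: {}
  tolerations:
  - key: "sku"
    operator: "Equal"
    value: "gpu"
    effect: "NoSchedule"
  - key: "kubernetes.azure.com/scalesetpriority"
    operator: "Equal"
    value: "spot"
    effect: "NoSchedule"
  nodeSelector:
    driver.config: "${selector}"
  repository: ${repository}
  version: "${version}"
EOF
}

# Usage (same objects as driver-config.yaml):
#   { nvidia_driver_cr nc4-latest latest nvcr.io/nvidia 535.129.03
#     echo '---'
#     nvidia_driver_cr nc4-stable stable nvcr.io/nvidia 535.104.12
#   } | kubectl apply -f - -n gpu-operator
```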

Now, after scaling up the nodes (e.g. by submitting a GPU workload whose affinity targets exactly the driver.config labels), we can verify that each node runs the requested driver version. Running nvidia-smi from a shell attached to the DaemonSet container on each of the two nodes shows:

### On latest

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.129.03 Driver Version: 535.129.03 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 Tesla T4 On | 00000001:00:00.0 Off | Off |

| N/A 30C P8 15W / 70W | 2MiB / 16384MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+

### On stable

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.104.12 Driver Version: 535.104.12 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 Tesla T4 On | 00000001:00:00.0 Off | Off |

| N/A 30C P8 14W / 70W | 0MiB / 16384MiB | 0% Default |

| | | N/A |

+-----------------------------------------+----------------------+----------------------+
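The version check can also be done without an interactive shell, using the query mode of nvidia-smi. This is a sketch assuming the driver Pods are named nvidia-gpu-driver-* (as in the kubectl describe output for the A10 node in this article) and that the default container of each Pod provides nvidia-smi; driver_versions is a hypothetical helper.

```shell
# Hypothetical helper: print "<node> <driver version>" for each driver Pod
# in the gpu-operator namespace.
driver_versions() {
  kubectl get pods -n gpu-operator \
    -o jsonpath='{range .items[*]}{.metadata.name} {.spec.nodeName}{"\n"}{end}' |
    while read -r pod node; do
      case "$pod" in
        nvidia-gpu-driver-*)
          # </dev/null keeps kubectl from consuming the loop's input.
          version=$(kubectl exec -n gpu-operator "$pod" -- \
            nvidia-smi --query-gpu=driver_version --format=csv,noheader </dev/null)
          echo "$node $version"
          ;;
      esac
    done
}
```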

The NVIDIA GPU Driver CRD also makes it possible to specify a dedicated container image and container registry for the NVIDIA driver installation on each node pool.

This is particularly useful when the Azure-specific virtual GPU (vGPU) drivers need to be installed on A10 GPUs.

On Azure, NVads_A10_v5 VMs rely on NVIDIA vGPU technology in the backend, so they require vGPU drivers. On Azure, the vGPU drivers are included in the VM cost, so there is no need to obtain a vGPU license. The binaries available on the Azure driver download page can be used on the supported operating systems (including Ubuntu 22.04), but only on Azure VMs.

In this case, it is possible to build an ad-hoc NVIDIA driver container image for Azure and make it available through a dedicated container registry.

The procedure is as follows, assuming an Azure Container Registry (ACR) attached to the AKS cluster whose login server is <ACR_NAME> (in our case aksgpuplayground.azurecr.io):

export ACR_NAME=<ACR_NAME>

az acr login -n $ACR_NAME

git clone https://gitlab.com/nvidia/container-images/driver

cd driver

cp -r ubuntu22.04 ubuntu22.04-aks

cd ubuntu22.04-aks

cd drivers

wget "https://download.microsoft.com/download/1/4/4/14450d0e-a3f2-4b0a-9bb4-a8e729e986c4/NVIDIA-Linux-x86_64-535.154.05-grid-azure.run"

mv NVIDIA-Linux-x86_64-535.154.05-grid-azure.run NVIDIA-Linux-x86_64-535.154.05.run

chmod +x NVIDIA-Linux-x86_64-535.154.05.run

cd ..

sed -i 's%/tmp/install.sh download_installer%echo "Skipping Driver Download"%g' Dockerfile

sed 's%sh NVIDIA-Linux-$DRIVER_ARCH-$DRIVER_VERSION.run -x%sh NVIDIA-Linux-$DRIVER_ARCH-$DRIVER_VERSION.run -x \&\& mv NVIDIA-Linux-$DRIVER_ARCH-$DRIVER_VERSION-grid-azure NVIDIA-Linux-$DRIVER_ARCH-$DRIVER_VERSION%g' nvidia-driver -i

docker build --build-arg DRIVER_VERSION=535.154.05 --build-arg DRIVER_BRANCH=535 --build-arg CUDA_VERSION=12.3.1 --build-arg TARGETARCH=amd64 . -t $ACR_NAME/driver:535.154.05-ubuntu22.04

docker push $ACR_NAME/driver:535.154.05-ubuntu22.04
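Before referencing the image from an NVIDIADriver object, it can be worth checking that the pushed tag is the one the GPU Operator will look for. In this article the operator pulls <repository>/driver:<version>-ubuntu22.04, so the tag must carry the -ubuntu22.04 suffix. driver_image_ref reproduces that composition (an assumption derived from the observed pull), and acr_has_tag queries the registry with az acr repository show-tags; both are hypothetical helpers, and acr_has_tag accepts either the registry name or its login server.

```shell
# Hypothetical helpers.
# driver_image_ref REPOSITORY IMAGE VERSION [OS]: image reference built as
# <repository>/<image>:<version>-<os> (OS defaults to ubuntu22.04).
driver_image_ref() {
  repository="${1%/}"; image="$2"; version="$3"; os="${4:-ubuntu22.04}"
  echo "${repository}/${image}:${version}-${os}"
}

# acr_has_tag REGISTRY REPOSITORY TAG: succeeds if TAG exists. The domain
# part of a login server (e.g. ".azurecr.io") is stripped for az acr.
acr_has_tag() {
  registry="${1%%.*}"
  az acr repository show-tags --name "$registry" --repository "$2" -o tsv |
    grep -qxF "$3"
}

# Usage:
#   driver_image_ref "$ACR_NAME" driver 535.154.05
#   acr_has_tag "$ACR_NAME" driver 535.154.05-ubuntu22.04 && echo "tag found"
```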

After this, let's create a dedicated NVIDIADriver object for Azure vGPU with a file named azure-vgpu.yaml and the following content (replace <ACR_NAME> with your ACR login server, aksgpuplayground.azurecr.io in our case):

apiVersion: nvidia.com/v1alpha1
kind: NVIDIADriver
metadata:
  name: azure-vgpu
spec:
  driverType: gpu
  env: []
  image: driver
  imagePullPolicy: IfNotPresent
  imagePullSecrets: []
  manager: {}
  tolerations:
  - key: "sku"
    operator: "Equal"
    value: "gpu"
    effect: "NoSchedule"
  - key: "kubernetes.azure.com/scalesetpriority"
    operator: "Equal"
    value: "spot"
    effect: "NoSchedule"
  nodeSelector:
    driver.config: "azurevgpu"
  repository: <ACR_NAME>
  version: "535.154.05"

Let's apply it with kubectl:

kubectl apply -f azure-vgpu.yaml -n gpu-operator

Now, let's create an A10 nodepool with Azure CLI:

az aks nodepool add \
  --resource-group $RESOURCE_GROUP_NAME \
  --cluster-name $AKS_CLUSTER_NAME \
  --name nv36a10v5 \
  --node-taints sku=gpu:NoSchedule \
  --node-vm-size Standard_NV36ads_A10_v5 \
  --enable-cluster-autoscaler \
  --labels "driver.config"="azurevgpu" \
  --min-count 0 --max-count 1 --node-count 0 --tags SkipGPUDriverInstall=True
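Any Pod that requests a GPU and selects the driver.config label of the new node pool triggers the scale-up. The hypothetical gpu_test_pod helper below prints such a Pod manifest. It uses a nodeSelector rather than node affinity for brevity, and the image is an assumption: it is the TensorFlow MNIST sample image from the AKS GPU documentation, and any GPU-enabled image could be used instead.

```shell
# Hypothetical helper: gpu_test_pod NAME DRIVER_CONFIG prints a Pod that
# requests one GPU on the node pool labeled driver.config=DRIVER_CONFIG.
gpu_test_pod() {
  name="$1"; driver_config="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  restartPolicy: Never
  nodeSelector:
    driver.config: "${driver_config}"
  tolerations:
  - key: "sku"
    operator: "Equal"
    value: "gpu"
    effect: "NoSchedule"
  - key: "kubernetes.azure.com/scalesetpriority"
    operator: "Equal"
    value: "spot"
    effect: "NoSchedule"
  containers:
  - name: gpu-test
    image: mcr.microsoft.com/azuredocs/samples-tf-mnist-demo:gpu
    resources:
      limits:
        nvidia.com/gpu: 1
EOF
}

# Usage:
#   gpu_test_pod a10-test azurevgpu | kubectl apply -f -
```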

After scaling up a node with a suitable workload and waiting for the driver installation to complete, we can see that the NVIDIA driver image has been pulled from our registry:

root@aks-gpu-playground-rg-jumpbox:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

aks-nodepool1-25743550-vmss000000 Ready agent 6d23h v1.27.7

aks-nodepool1-25743550-vmss000001 Ready agent 6d23h v1.27.7

aks-nv36a10v5-10653906-vmss000000 Ready agent 9m24s v1.27.7

root@aks-gpu-playground-rg-jumpbox:~# kubectl describe node aks-nv36a10v5-10653906-vmss000000| grep gpu-driver

nvidia.com/gpu-driver-upgrade-state=upgrade-done

nvidia.com/gpu-driver-upgrade-enabled: true

gpu-operator nvidia-gpu-driver-ubuntu22.04-56df89b87c-6w8tj 0 (0%) 0 (0%) 0 (0%) 0 (0%) 4m29s

root@aks-gpu-playground-rg-jumpbox:~# kubectl describe pods -n gpu-operator nvidia-gpu-driver-ubuntu22.04-56df89b87c-6w8tj | grep -i Image

Image: nvcr.io/nvidia/cloud-native/k8s-driver-manager:v0.6.2

Image ID: nvcr.io/nvidia/cloud-native/k8s-driver-manager@sha256:bb845160b32fd12eb3fae3e830d2e6a7780bc7405e0d8c5b816242d48be9daa8

Image: aksgpuplayground.azurecr.io/driver:535.154.05-ubuntu22.04

Image ID: aksgpuplayground.azurecr.io/driver@sha256:deb6e6311a174ca6a989f8338940bf3b1e6ae115ebf738042063f4c3c95c770f

Normal Pulled 4m26s kubelet Container image "nvcr.io/nvidia/cloud-native/k8s-driver-manager:v0.6.2" already present on machine

Normal Pulling 4m23s kubelet Pulling image "aksgpuplayground.azurecr.io/driver:535.154.05-ubuntu22.04"

Normal Pulled 4m16s kubelet Successfully pulled image "aksgpuplayground.azurecr.io/driver:535.154.05-ubuntu22.04" in 6.871887325s (6.871898205s including waiting)
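The registry check can also be scripted. In this sketch, driver_images_on_node lists the images of the regular containers of the nvidia-gpu-driver-* Pods on a node, and driver_from_registry succeeds only if all of them come from the given registry. Both are hypothetical helpers; init containers are not inspected.

```shell
# Hypothetical helpers.
# driver_images_on_node NODE: images of the nvidia-gpu-driver-* Pods on NODE.
driver_images_on_node() {
  kubectl get pods -n gpu-operator --field-selector "spec.nodeName=$1" \
    -o jsonpath='{range .items[*]}{.metadata.name} {.spec.containers[*].image}{"\n"}{end}' |
    awk '$1 ~ /^nvidia-gpu-driver-/ { for (i = 2; i <= NF; i++) print $i }'
}

# driver_from_registry NODE REGISTRY: succeeds only if NODE has at least one
# driver image and every driver image starts with "REGISTRY/".
driver_from_registry() {
  images=$(driver_images_on_node "$1")
  [ -n "$images" ] || return 1
  printf '%s\n' "$images" |
    awk -v r="${2%/}/" 'index($0, r) != 1 { bad = 1 } END { exit bad }'
}

# Usage:
#   driver_from_registry aks-nv36a10v5-10653906-vmss000000 aksgpuplayground.azurecr.io
```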

We can also see that the A10 vGPU profile is recognized successfully by attaching to the device-plugin-daemonset Pod:

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.154.05 Driver Version: 535.154.05 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA A10-24Q On | 00000002:00:00.0 Off | 0 |

| N/A N/A P8 N/A / N/A | 0MiB / 24512MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+
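A non-interactive variant of this check uses the query mode of nvidia-smi, which prints one CSV line per GPU; for this node the line would be expected to look like "NVIDIA A10-24Q, 535.154.05, 24512 MiB" (an assumption based on the table above). The gpu_matches helper below is hypothetical and assumes the ", " separator between fields.

```shell
# Hypothetical helper: gpu_matches NAME VERSION reads CSV lines produced by
#   nvidia-smi --query-gpu=name,driver_version,memory.total --format=csv,noheader
# and succeeds if one of them reports that GPU name and driver version.
gpu_matches() {
  awk -F', ' -v n="$1" -v v="$2" '$1 == n && $2 == v { ok = 1 } END { exit !ok }'
}

# Usage (replace <device-plugin-pod> with the Pod name):
#   kubectl exec -n gpu-operator <device-plugin-pod> -- \
#     nvidia-smi --query-gpu=name,driver_version,memory.total --format=csv,noheader |
#     gpu_matches "NVIDIA A10-24Q" 535.154.05 && echo "vGPU driver OK"
```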

Thank you

Thank you for reading our blog posts. Feel free to leave comments or feedback, ask for clarifications or report any issues.
