Performance & Scalability of HBv4 and HX-Series VMs with Genoa-X CPUs

Article contributed by Amirreza Rastegari, Jon Shelley, Scott Moe, Jie Zhang, Jithin Jose, Anshul Jain, Jyothi Venkatesh, Joe Greenseid, Fanny Ou, and Evan Burness

Azure has announced the general availability of Azure HBv4-series and HX-series virtual machines (VMs) for high performance computing (HPC). This blog provides in-depth technical and performance information about these HPC-optimized VMs.

During the preview announced in November 2022, these VMs were equipped with standard 4th Gen AMD EPYC™ processors (codenamed “Genoa”). Starting with the General Availability announced today, all HBv4 and HX-series VMs have been upgraded to 4th Gen AMD EPYC™ processors with AMD 3D V-Cache (codenamed “Genoa-X”). Standard 4th Gen AMD EPYC processors are no longer available in HBv4 and HX-series VMs.

These VMs feature several new technologies for HPC customers, including:

- 4th Gen AMD EPYC CPUs with AMD 3D V-Cache (codenamed “Genoa-X”)

- 2.3 GB L3 Cache per VM

- Up to 780 GB/s of DDR5 memory bandwidth (STREAM TRIAD), up to 5.7 TB/s of 3D V-Cache bandwidth (STREAM TRIAD), and effective (blended) bandwidth of up to 1.2 TB/s

- 400 Gbps NVIDIA Quantum-2 InfiniBand

- 80 Gbps Azure Accelerated Networking

- 3.6 TB local NVMe SSD providing up to 12 GB/s (read) and 7 GB/s (write) of storage bandwidth

HBv4 and HX – VM Size Details & Technical Specifications

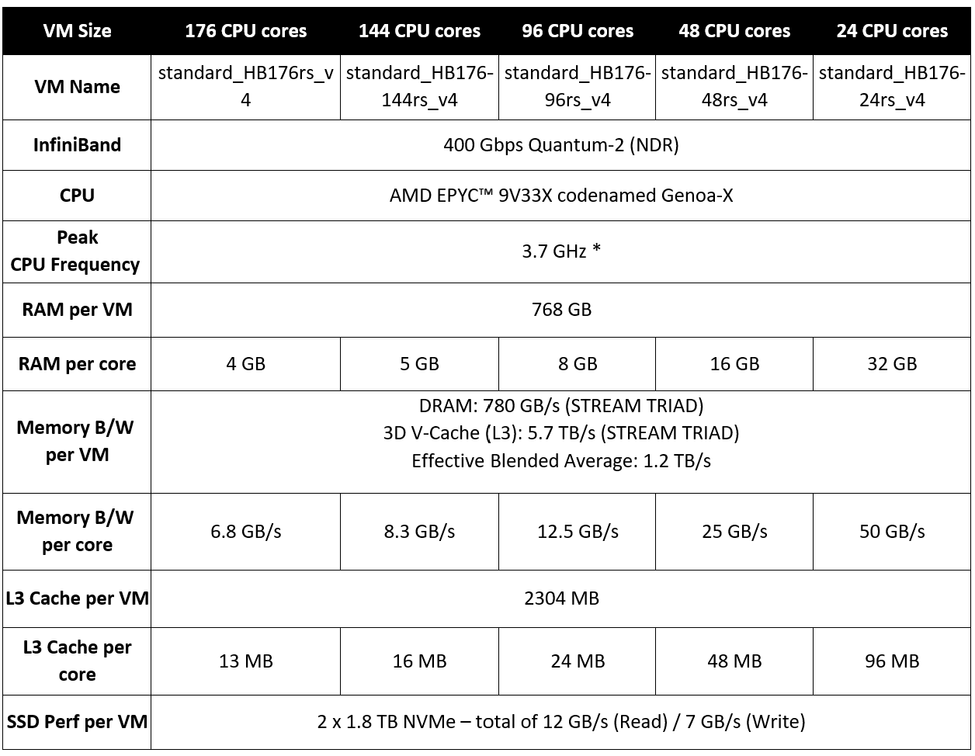

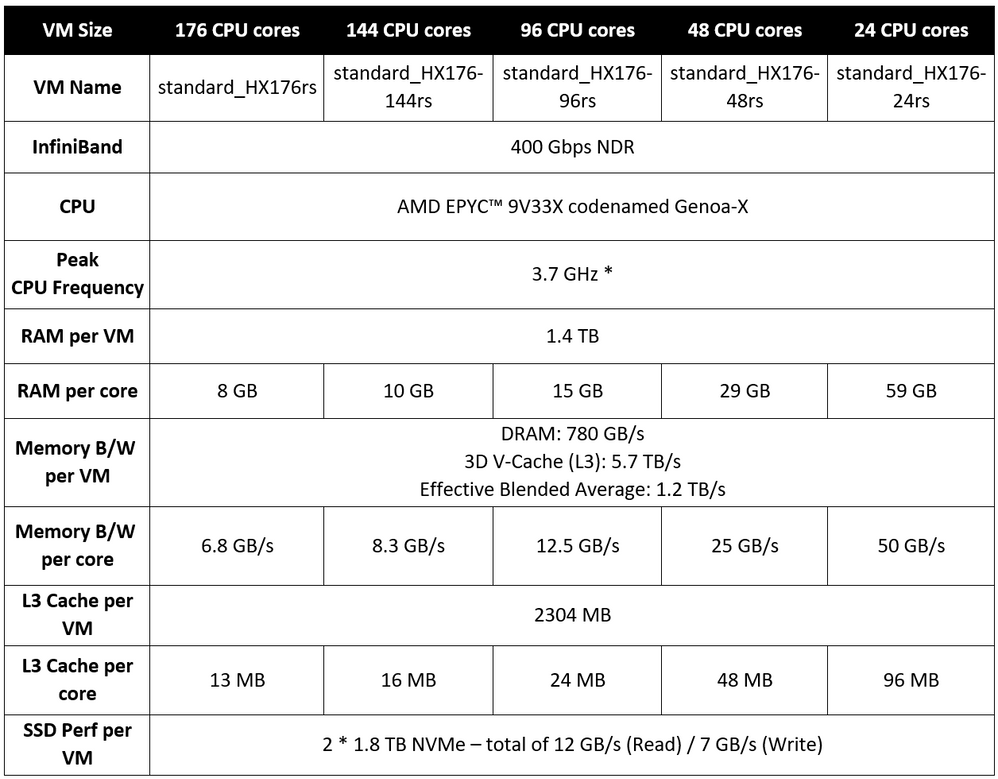

HBv4 and HX VMs are available in the following sizes, with specifications as shown in Tables 1 and 2, respectively. Just like existing H-series VMs, HBv4 and HX-series also include constrained-core VM sizes, enabling customers to choose a size along a spectrum from maximum performance per VM to maximum performance per core.

HBv4-series VMs

Table 1: Technical specifications of HBv4 series VMs

HX-series VMs

Table 2: Technical specifications of HX series VMs

Note: Maximum clock frequencies (FMAX) are based on non-AVX workload scenarios measured by the Azure HPC team with AMD EPYC 9004-series processors. The clock frequency a customer experiences is a function of a variety of factors, including but not limited to the arithmetic intensity (SIMD) and parallelism of an application.

For more information see official documentation for HBv4-series and HX-series VMs.

How does 3D V-Cache on 4th Gen EPYC CPUs affect HPC performance?

It is useful to understand the stacked L3 cache technology, called 3D V-Cache, and what effect this does and does not have on a range of HPC workloads.

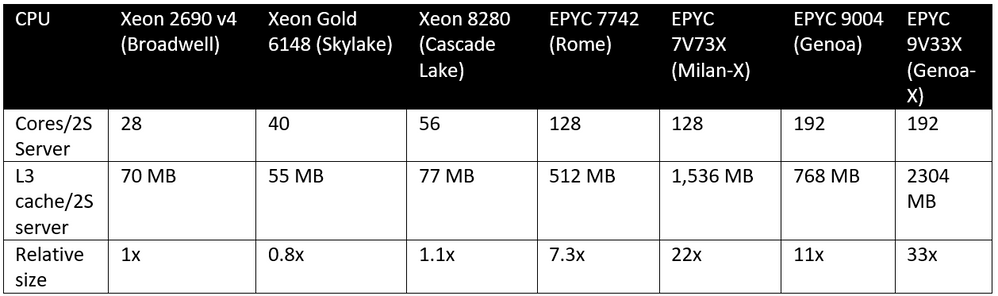

4th Gen EPYC processors with 3D V-Cache differ from their standard 4th gen EPYC counterparts only by virtue of having 3x as much L3 cache memory per Genoa core, CCD, socket, and server. This results in a 2-socket server (such as those underlying HBv4 and HX-series VMs) having a total of:

- (24 CCDs/server) * (96 MB L3/CCD) = 2304 MB of L3 cache per server
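The arithmetic above can be reproduced with a short sketch. The CCD count and per-CCD L3 size come from the text; the standard "Genoa" value is derived from the stated 3x ratio, and the function and constant names are illustrative only.

```python
# Sketch: total L3 cache per 2-socket server, using the figures quoted above.
CCDS_PER_SERVER = 24       # from the text (2-socket server)
L3_PER_CCD_MB = 96         # from the text (with 3D V-Cache)
V_CACHE_MULTIPLIER = 3     # "3x as much L3 cache" as standard Genoa


def total_l3_mb(ccds: int, l3_per_ccd_mb: int) -> int:
    """Return the total L3 cache in MB for a server with `ccds` CCDs."""
    return ccds * l3_per_ccd_mb


genoa_x_l3_mb = total_l3_mb(CCDS_PER_SERVER, L3_PER_CCD_MB)
# Derived, not quoted: standard Genoa has one third of the L3 per CCD.
genoa_l3_mb = total_l3_mb(CCDS_PER_SERVER, L3_PER_CCD_MB // V_CACHE_MULTIPLIER)

print(f"Genoa-X: {genoa_x_l3_mb} MB of L3 per server")
print(f"Genoa  : {genoa_l3_mb} MB of L3 per server")
```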

To put this amount of L3 cache into context, here’s how several processor models widely used by HPC buyers over the last half decade compare, in terms of L3 cache per 2-socket server, against the latest Genoa-X processors in HBv4 and HX VMs.

Table 3: Comparison of L3 cache on 2 socket servers over the past 5 years across multiple generations of CPUs

Note that looking at L3 cache size alone, absent context, can be misleading. Different CPU generations balance L2 (faster) and L3 (slower) cache sizes differently. For example, while an Intel Xeon “Broadwell” CPU has more L3 cache per core, and often more per CPU as well, than an Intel Xeon “Skylake” CPU, that does not mean it has a higher-performance memory subsystem. A Skylake core has a much larger L2 cache than a Broadwell core, along with higher bandwidth from DRAM and significant advances in prefetcher capabilities. The above table is merely intended to make apparent how much larger the total L3 cache is in a Genoa-X server than in all prior CPUs.

Caches of this size have an opportunity to noticeably improve (1) effective memory bandwidth and (2) effective memory latency. Many HPC applications increase their performance partially or fully in-line with improvements to memory bandwidth and/or memory latency, so the potential impact to HPC customers of Genoa-X processors is large. Examples of workloads that fall into these categories include:

- Computational fluid dynamics (CFD) – heavily memory bandwidth bound

- Weather simulation – partially memory bandwidth bound

- Explicit finite element analysis (FEA) – heavily memory bandwidth bound

- EDA RTL simulation – heavily memory latency bound

Just as important, however, is understanding what these large caches do not affect. Namely, they do not improve peak FLOPS, clock frequency, or memory capacity. Thus, workloads whose performance, or ability to run at all, is limited by one or more of these will, in general, not see a material impact from the extra-large L3 caches present in Genoa-X processors. Examples of workloads that fall into these categories include:

- EDA full chip design – large memory capacity

- EDA parasitic extraction – clock frequency

- Implicit finite element analysis (FEA) – dense compute

Some compute-intensive workloads may even see a modest decrease in performance, as the larger SRAM that comprises the 3D V-Cache consumes power from a fixed CPU SoC power budget that would otherwise go to the CPU cores themselves to drive higher frequencies. Workloads this intensively compute bound are uncommon, but they do exist, and it is worth discussing them so as not to misunderstand 3D V-Cache as a performance-enhancing feature for all HPC workloads.

Microbenchmark Performance

This section focuses on microbenchmarks that characterize performance of the memory subsystem and the InfiniBand network of HBv4-series and HX series VMs.

Memory Performance

Benchmarking “memory performance” of servers featuring 4th Gen AMD EPYC CPUs with 3D V-Cache is a nuanced exercise due to the variable and potentially significant impact of the large L3 cache.

To start, however, let us first measure the performance of each type of memory (DRAM and L3) independently to illustrate the large differences between these two values.

To capture this information, we ran the industry standard STREAM benchmark sized both (A) primarily out of system memory (DRAM), as well as (B) intentionally sized down to fit entirely inside of the large L3 cache (3D V-Cache).

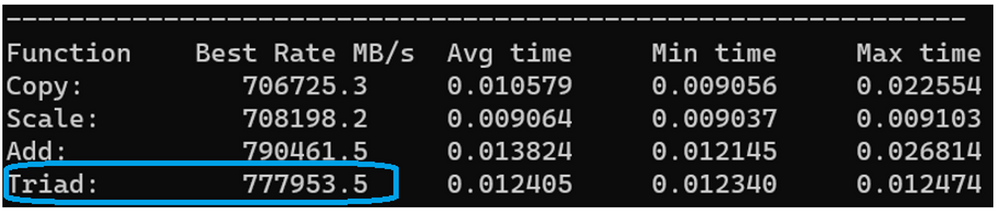

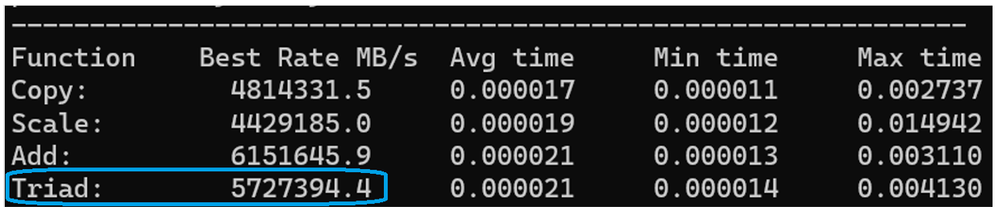

Below in Figure 1, we share the results of running the industry standard STREAM benchmark on HBv4/HX VMs with a data size (~9.6 GB, or ~8.9 GiB) too large to fit in the L3 cache (2.3 GB), so that the measured memory bandwidth principally represents DRAM performance.

The STREAM benchmark was run using the following command.

clang -Ofast -ffp-contract=fast -mavx2 -march=znver4 -lomp -fopenmp -mcmodel=large -fnt-store=aggressive -DSTREAM_ARRAY_SIZE=400000000 -DNTIMES=10 stream.c -o stream

OMP_PLACES="0,4,8,12,16,20,24,28,32,36,38,42,44,48,52,56,60,64,68,72,76,80,82,86,88,92,96,100,104,108,112,116,120,124,126,130,132,136,140,144,148,152,156,160,164,168,170,174" OMP_NUM_THREADS=48 ./stream

Figure 1: STREAM memory benchmark on HBv4/HX series VMs with 3D V-Cache, 9.6 GB data size
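As a quick sanity check on the thread pinning used for this run, the sketch below parses the OMP_PLACES list from the command above and confirms that it provides exactly one place per OpenMP thread, with every core ID inside the 176-core VM. The helper name is illustrative. The remark about two threads per CCD is an assumption based on an even spread across 24 CCDs, since the article does not state the core-to-CCD mapping.

```python
# Sketch: verify the OMP_PLACES list matches OMP_NUM_THREADS for the DRAM-sized run.
OMP_PLACES = (
    "0,4,8,12,16,20,24,28,32,36,38,42,44,48,52,56,60,64,68,72,76,80,82,86,"
    "88,92,96,100,104,108,112,116,120,124,126,130,132,136,140,144,148,152,"
    "156,160,164,168,170,174"
)
OMP_NUM_THREADS = 48
VM_CORES = 176   # core count of the full-size HBv4/HX VM, per the article
CCDS = 24        # per the article


def parse_places(spec: str) -> list[int]:
    """Split a comma-separated list of core IDs into integers."""
    return [int(token) for token in spec.split(",")]


places = parse_places(OMP_PLACES)

print(f"places: {len(places)}, threads: {OMP_NUM_THREADS}")
print(f"highest core ID: {max(places)} (VM has {VM_CORES} cores)")
# Assumption: an even spread would put this many threads on each CCD.
print(f"threads per CCD if evenly spread: {OMP_NUM_THREADS // CCDS}")
```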

Results shown above are essentially identical to those measured from a standard 2-socket server with “Genoa” processors in a 1 DIMM per channel configuration, such as the HBv4/HX Preview VMs (which have since been replaced by GA HBv4/HX VMs with “Genoa-X” processors). As noted above, this is because the test is sufficiently large that only a small minority of the benchmark fits within the 3D V-Cache, thus minimizing its impact on memory bandwidth. The above result of ~780 GB/s is the expected bandwidth, as there is no difference in the physical DIMMs in these servers as compared to when they ran standard 4th Gen EPYC CPUs.

Below in Figure 2, however, we share the results of running STREAM with a data set sized downward (~80 MB) so that it fits entirely into the 2.3 GB of L3 cache of an HBv4 or HX-series VM, and more precisely into each 96 MB per-CCD slice of L3. Here, the STREAM benchmark was run using the following:

clang -Ofast -mavx2 -ffp-contract=fast -march=znver4 -lomp -fopenmp -mcmodel=large -fnt-store=never -DSTREAM_ARRAY_SIZE=3300000 -DNTIMES=100000 stream.c -o stream

OMP_NUM_THREADS=176 ./stream

Figure 2: STREAM memory benchmark on HBv4/HX series VMs fitting entirely into 2.3 GB L3 cache (3D V-Cache)

In Figure 2 above, we see measured bandwidths significantly higher than would result from a standard 4th Gen EPYC CPU, or from any other CPU without an L3 cache large enough to stand a reasonable chance of running all or a large percentage of a working dataset out of it. The ~5.7 terabytes/sec of STREAM TRIAD bandwidth measured here is more than 7x that of the results shown in Figure 1, which principally represent DRAM bandwidth.
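The two data sizes follow directly from the STREAM_ARRAY_SIZE values in the compile commands. The sketch below assumes STREAM's defaults of three arrays of 8-byte doubles, and treats the quoted cache sizes as binary megabytes; both are assumptions rather than statements from the article.

```python
# Sketch: STREAM memory footprint for the DRAM-sized and cache-sized runs.
BYTES_PER_ELEMENT = 8   # assumption: STREAM's default element type (double)
NUM_ARRAYS = 3          # assumption: STREAM's three arrays a, b, c

L3_TOTAL_BYTES = 2304 * 1024**2    # 2304 MB per server, treated as MiB
L3_PER_CCD_BYTES = 96 * 1024**2    # 96 MB per CCD, treated as MiB


def stream_footprint_bytes(array_size: int) -> int:
    """Total bytes allocated by STREAM for a given STREAM_ARRAY_SIZE."""
    return array_size * BYTES_PER_ELEMENT * NUM_ARRAYS


dram_run = stream_footprint_bytes(400_000_000)   # Figure 1 build
cache_run = stream_footprint_bytes(3_300_000)    # Figure 2 build

print(f"DRAM-sized run : {dram_run / 1e9:.1f} GB ({dram_run / 2**30:.1f} GiB)")
print(f"Cache-sized run: {cache_run / 1e6:.1f} MB")
print(f"DRAM-sized run exceeds total L3 : {dram_run > L3_TOTAL_BYTES}")
print(f"Cache-sized run fits in one CCD : {cache_run < L3_PER_CCD_BYTES}")
```

This also reconciles the two figures quoted for the larger run: 9.6 GB in decimal units is roughly 8.9 GiB in binary units.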

So which number is “correct”? The answer is “both” and “it depends.” We can reasonably say both measured numbers are accurate because:

- Each number is a measured, repeatable result and accurately reflects the bandwidth capabilities; and

- Each number aligns to actual, real-world HPC workload scenarios, and thus the effective bandwidth experienced by an application depends heavily on the circumstances of the model (dataset) in question as well as the scale at which it is run:

  - Example 1: some workloads by their nature consume several hundreds of gigabytes of memory, and thus the impact of the 2.3 GB 3D V-Cache on effective bandwidth is minimized.

  - Example 2: other workloads are either small to begin with for even a single modern server (VM), or can be strong-scaled in ways that reduce memory per server (VM) and in turn increase the percentage of data on each server that is running out of L3 as opposed to DRAM. In these cases the effective memory bandwidth is heavily amplified.

In the Application Performance section below, the effect of 3D V-Cache can be observed as an uplift of up to 49% compared to standard 4th Gen EPYC CPUs in highly memory bandwidth bound applications like OpenFOAM. The size of the uplift depends strictly on the characteristics of the model in question.

Thus, based on the performance data we have been able to reproducibly measure thus far, the amplification effect from 3D V-Cache is up to 1.49x the effective memory bandwidth, because the workload performs as if it were being fed at roughly 1.2 TB/s (1.49 * ~780 GB/s).

Again, the memory bandwidth amplification effect should be understood as an “up to” because the data below shows a close relationship between an increasing percentage of an active dataset running out of cache and an increasing performance uplift. Moreover, Azure’s characterization of the maximum performance uplift may increase over time as we explore greater levels of scaled performance comparisons between 4th Gen AMD EPYC CPUs with and without 3D V-Cache.
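The "effective (blended) bandwidth" figure is simple arithmetic on the numbers quoted in this section. The sketch below reproduces it, along with the cache-to-DRAM ratio; the function name is illustrative, and the uplift is treated as a direct multiplier on DRAM bandwidth, which is how the text frames it rather than a measured bandwidth.

```python
# Sketch: effective bandwidth implied by the best observed application uplift.
DRAM_TRIAD_GBS = 780.0      # STREAM TRIAD out of DRAM (Figure 1)
CACHE_TRIAD_GBS = 5700.0    # STREAM TRIAD out of 3D V-Cache (Figure 2)
MAX_OBSERVED_UPLIFT = 1.49  # best application uplift reported (OpenFOAM)


def effective_bandwidth_gbs(dram_gbs: float, uplift: float) -> float:
    """Bandwidth the workload behaves as if it were receiving."""
    return dram_gbs * uplift


effective = effective_bandwidth_gbs(DRAM_TRIAD_GBS, MAX_OBSERVED_UPLIFT)
cache_to_dram = CACHE_TRIAD_GBS / DRAM_TRIAD_GBS

print(f"Effective bandwidth: {effective:.0f} GB/s (~{effective / 1000:.1f} TB/s)")
print(f"Cache vs DRAM TRIAD: {cache_to_dram:.1f}x")
```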

InfiniBand Networking Performance

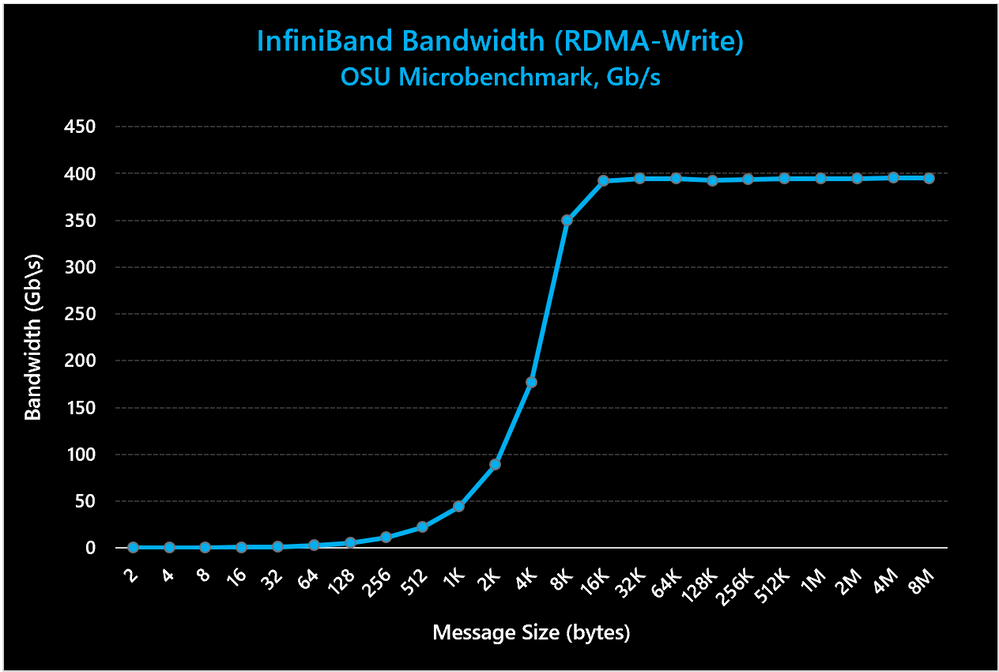

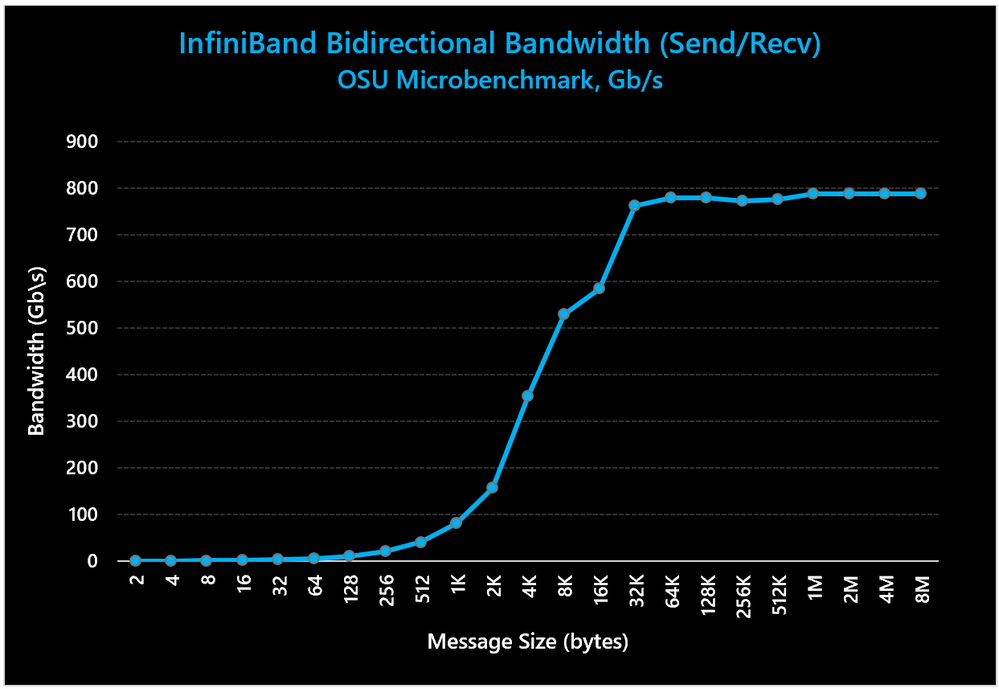

HBv4 and HX VMs are equipped with the latest 400 Gbps NVIDIA Quantum-2 InfiniBand (NDR) networking. We ran the industry-standard IB perftest benchmarks across two (2) HBv4-series VMs using the following:

Unidirectional bandwidth:

numactl -c 0 ib_send_bw -aF -q 2

Bi-directional bandwidth:

numactl -c 0 ib_send_bw -aF -q 2 -b

Results of these tests are depicted in Figures 3 and 4, below.

Figure 3: Unidirectional InfiniBand bandwidth measuring up to the expected peak bandwidth of 400 Gb/s

Figure 4: Bi-directional InfiniBand bandwidth measuring up to the expected peak bandwidth of 800 Gb/s

As depicted above, Azure HBv4/HX-series VMs achieve line-rate bandwidth performance (99% of peak) for both unidirectional and bi-directional tests.
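To relate the quoted line-rate percentage to byte-oriented throughput, the sketch below converts gigabits per second to gigabytes per second. The 99% figure is taken from the sentence above; the resulting values are derived for illustration and are not additional measurements.

```python
# Sketch: what "99% of peak" means for unidirectional and bi-directional NDR.
NDR_LINE_RATE_GBPS = 400.0   # per direction, from the text
FRACTION_OF_PEAK = 0.99      # from the text


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8.0


uni_gbps = NDR_LINE_RATE_GBPS * FRACTION_OF_PEAK
bi_gbps = 2 * NDR_LINE_RATE_GBPS * FRACTION_OF_PEAK

print(f"Unidirectional: {uni_gbps:.0f} Gb/s = {gbps_to_gbytes_per_s(uni_gbps):.1f} GB/s")
print(f"Bi-directional: {bi_gbps:.0f} Gb/s = {gbps_to_gbytes_per_s(bi_gbps):.1f} GB/s")
```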

Application Performance

This section will focus on characterizing performance of HBv4 and HX VMs on commonly run HPC applications. Performance comparisons are also provided across other HPC VMs offered on Azure, including:

- Azure HBv4/HX with 176 cores of 4th Gen AMD EPYC CPUs with 3D V-Cache (“Genoa-X”) (HBv4 full specifications, HX full specifications)

- Azure HBv4/HX with 176 cores of standard 4th Gen AMD EPYC CPUs (no 3D V-Cache) (“Genoa”)

- Azure HBv3 with 120 cores of 3rd Gen AMD EPYC CPUs with 3D V-Cache (“Milan-X”) (full specifications)

- Azure HBv2 with 120 cores of 2nd Gen AMD EPYC (“Rome”) CPUs (full specifications)

- Azure HC with 44 cores of 1st Gen Intel Xeon Platinum (“Skylake”) (full specifications)

Note: HC-series represents a highly customer-relevant comparison, as the majority of HPC workloads, market-wide, still run largely or exclusively in on-premises datacenters and on infrastructure that is operated for, on average, between 4 and 5 years. Thus, it is important to include performance information of HPC technology that aligns to the full age spectrum that customers may be accustomed to using on-premises. Azure HC-series VMs well-represent the older end of that spectrum and feature highly performant technologies like EDR InfiniBand, 1DPC DDR4 2666 MT/s memory, and 1st Gen Xeon Platinum CPUs (“Skylake”) that comprised a large majority of HPC customer investments and configuration choices during that period. As such, application performance comparisons below commonly use HC-series as a representative proxy for an approximately 4-year-old HPC-optimized server.

Note: Standard 4th Gen AMD EPYC CPUs were only available in the Preview of HBv4 and HX series VMs and are no longer available. Starting with General Availability, all HBv4 and HX VMs are available only with 4th Gen EPYC processors with 3D V-Cache (codenamed “Genoa-X”).

Unless otherwise noted, all tests shown below were performed with:

- AlmaLinux 8.6 or 8.7 HPC OS found in the Azure Marketplace and GitHub

- HPC-X MPI 2.15

Computational Fluid Dynamics (CFD)

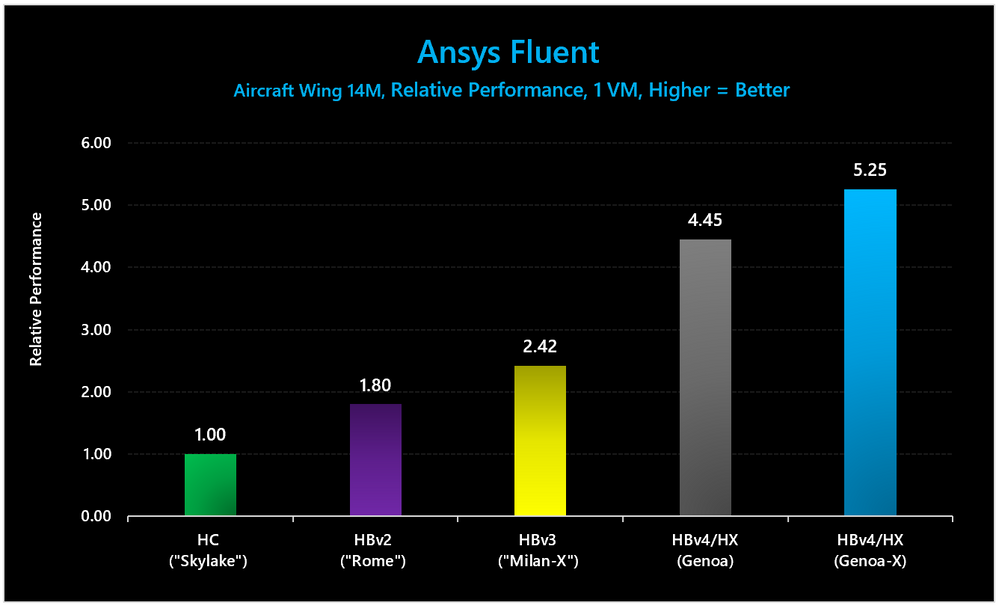

Ansys Fluent

Note: for all Ansys Fluent tests, HBv4/HX performance data (both with and without 3D V-Cache) were collected using Ansys Fluent 2022 R2 and HPC-X 2.15 on AlmaLinux 8.6, while all other results were collected with Ansys Fluent 2021 R1 with HPC-X 2.8.3 on CentOS 8.1. There are no known performance advantages, however, from using the newer software versions. Rather, each was used due to validation coverage by each of Ansys, NVIDIA, and AMD.

Figure 6: On Ansys Fluent (Aircraft Wing 14M) HBv4/HX VMs with 3D V-Cache show a 1.18x performance uplift compared to HBv4/HX VMs without 3D V-Cache, and a 2.17x performance uplift compared to HBv3 series.

Absolute performance values for the benchmark represented in Figure 6 are shared below:

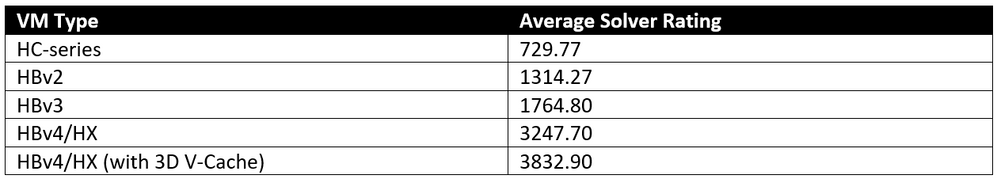

Table 4: Ansys Fluent (Aircraft Wing 14M) absolute performance (average solver rating, higher = better).

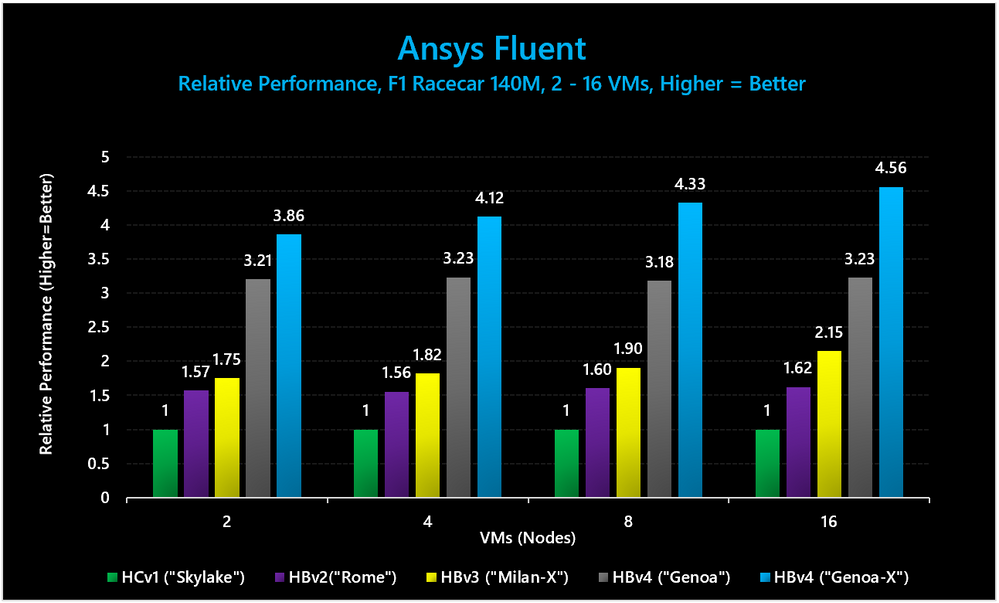

Figure 7: On Ansys Fluent (F1 Racecar 140M) HBv4 VMs with 3D V-Cache show up to 1.42x higher performance than HBv4/HX VMs without 3D V-Cache, and up to 2.12x higher performance compared to HBv3 series.

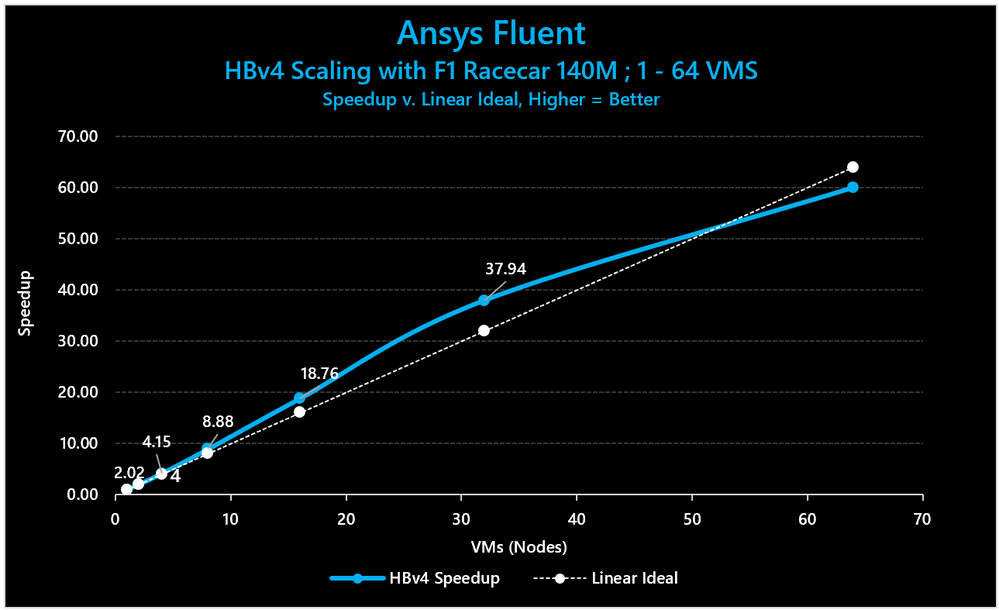

Figure 8: Scaling HBv4 from 1 to 64 VMs on Ansys Fluent (F1 Racecar 140M case) shows scaling efficiency up to 118.61% at 32 VMs (37.9x speedup for 32 VMs).
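Scaling efficiency here is the measured speedup divided by the number of VMs, so anything above 100% is super-linear. A minimal sketch of that relationship follows, using the rounded speedup from the caption. The small gap between the computed value and the quoted 118.61% presumably comes from rounding the speedup to 37.9x, which is an assumption rather than something the article states.

```python
# Sketch: scaling efficiency = speedup / number of VMs.
def scaling_efficiency(speedup: float, n_vms: int) -> float:
    """Return efficiency as a fraction (1.0 == perfectly linear scaling)."""
    if n_vms <= 0:
        raise ValueError("n_vms must be positive")
    return speedup / n_vms


fluent_eff = scaling_efficiency(37.9, 32)   # values from the Figure 8 caption

print(f"Efficiency at 32 VMs: {fluent_eff:.1%}")
print(f"Super-linear: {fluent_eff > 1.0}")
```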

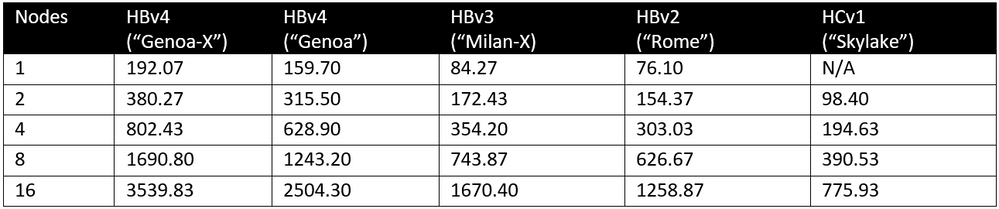

Absolute performance values (solver rating) for the benchmark represented in Figures 7 & 8 are shared below:

Table 5: Ansys Fluent (F1 racecar 140M) absolute performance (average solver rating, higher = better).

Siemens Simcenter STAR-CCM+

Note: for all Siemens Simcenter STAR-CCM+ tests, HBv4/HX with 3D V-Cache performance data were collected using Simcenter 18.04.005, while HBv4 performance data without 3D V-Cache used version 18.02.003. Both utilized HPC-X 2.15 on AlmaLinux 8.6. All other results were collected with Siemens Simcenter STAR-CCM+ 17.04.008 with HPC-X 2.8.3 on CentOS 8.1. There are no known performance advantages, however, from using the newer software versions. Rather, each was used due to validation coverage by each of Siemens, NVIDIA, and AMD.

In addition, on AMD-based systems xpmem with Simcenter Star-CCM+ will underperform (~10% performance loss) due to a large increase of page-faults. A workaround is to uninstall the kmod-xpmem package or unload the xpmem module. Applications will fall back to a supported shared memory transport provided by UCX without any other user intervention. A patch for XPMEM should be included in the July 2023 release of UCX/NVIDIA OFED.

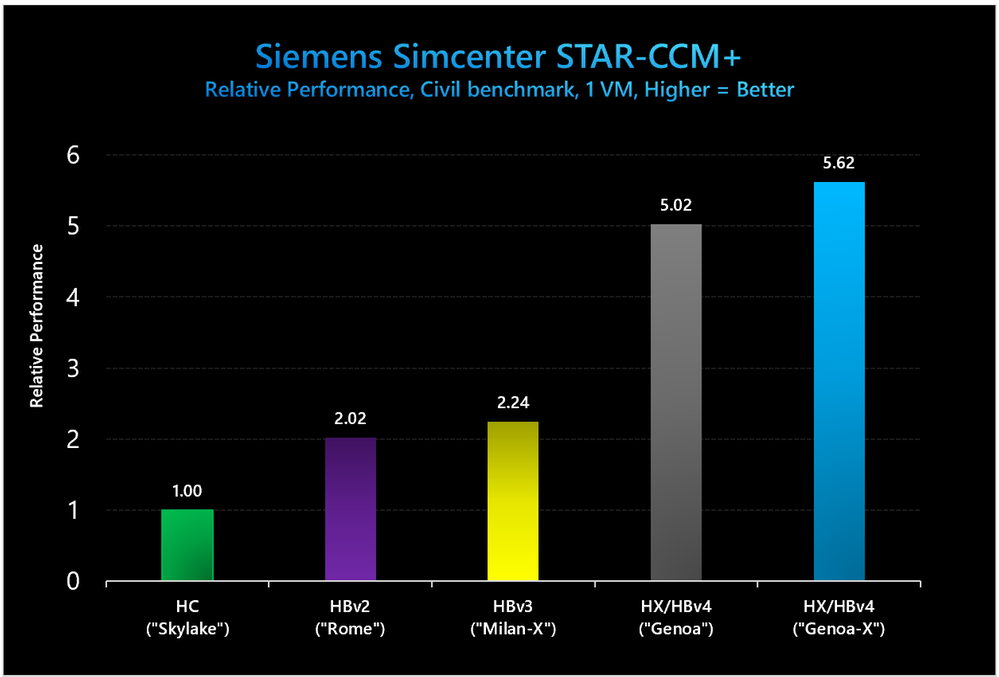

Figure 9: On Siemens Simcenter STAR-CCM+ (Civil) HBv4/HX VMs with 3D V-Cache show a 1.12x performance uplift compared to HBv4/HX without 3D V-Cache, and a 2.5x performance uplift compared to HBv3-series.

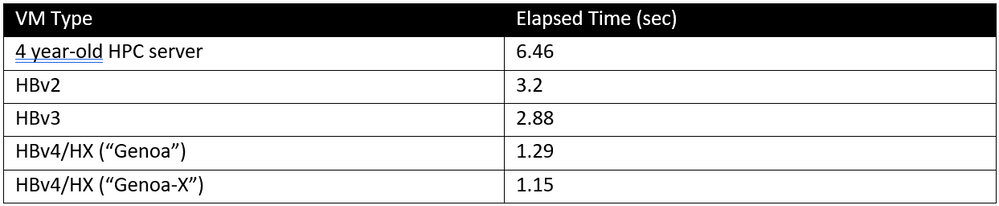

Absolute performance values (elapsed time) for the benchmark represented in Figure 9 are shared below:

Table 6: Siemens Simcenter STAR-CCM+ (Civil) absolute performance (elapsed time, lower = better).
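Because this table reports elapsed time (lower is better) while the figure caption reports uplift (higher is better), it can help to convert between the two. The sketch below assumes uplift is defined as baseline elapsed time divided by new elapsed time; the article does not spell out the definition, so treat this as an illustration.

```python
# Sketch: convert a performance uplift into relative elapsed time.
def relative_elapsed_time(uplift: float) -> float:
    """Fraction of the baseline elapsed time, assuming uplift = t_base / t_new."""
    if uplift <= 0:
        raise ValueError("uplift must be positive")
    return 1.0 / uplift


# Uplift values quoted in the Figure 9 caption.
comparisons = [
    ("vs HBv4/HX without 3D V-Cache", 1.12),
    ("vs HBv3", 2.5),
]

for label, uplift in comparisons:
    rel = relative_elapsed_time(uplift)
    print(f"{label}: {rel:.1%} of baseline time ({1 - rel:.1%} less)")
```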

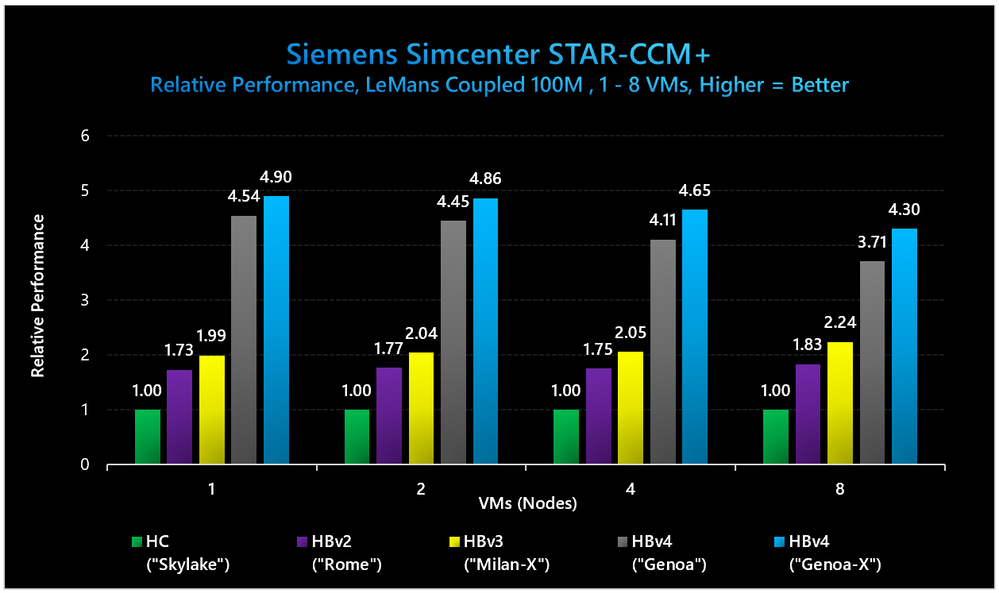

Figure 10: On Siemens Simcenter STAR-CCM+ (LeMans Coupled 100M) HBv4 VMs with 3D V-Cache show a 1.15x performance uplift compared to HBv4/HX without 3D V-Cache, and a 2.24x performance uplift compared to HBv3.

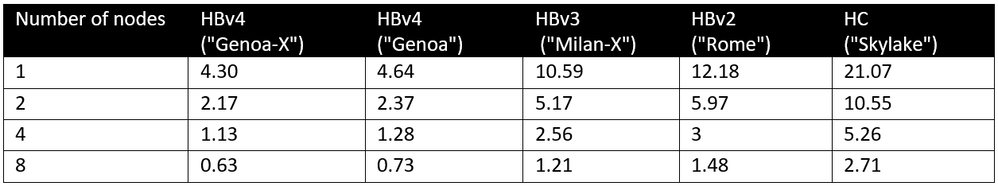

Absolute performance values (elapsed time) for the benchmark represented in Figure 10 are shared below:

Table 7: Siemens Simcenter STAR-CCM+ (LeMans Coupled 100M) absolute performance (elapsed time (s), lower = better) across HBv4/HX VM sizes.

OpenFOAM

Note: All OpenFOAM performance tests used OpenFOAM version 2006, as well as AlmaLinux 8.6 and HPC-X MPI.

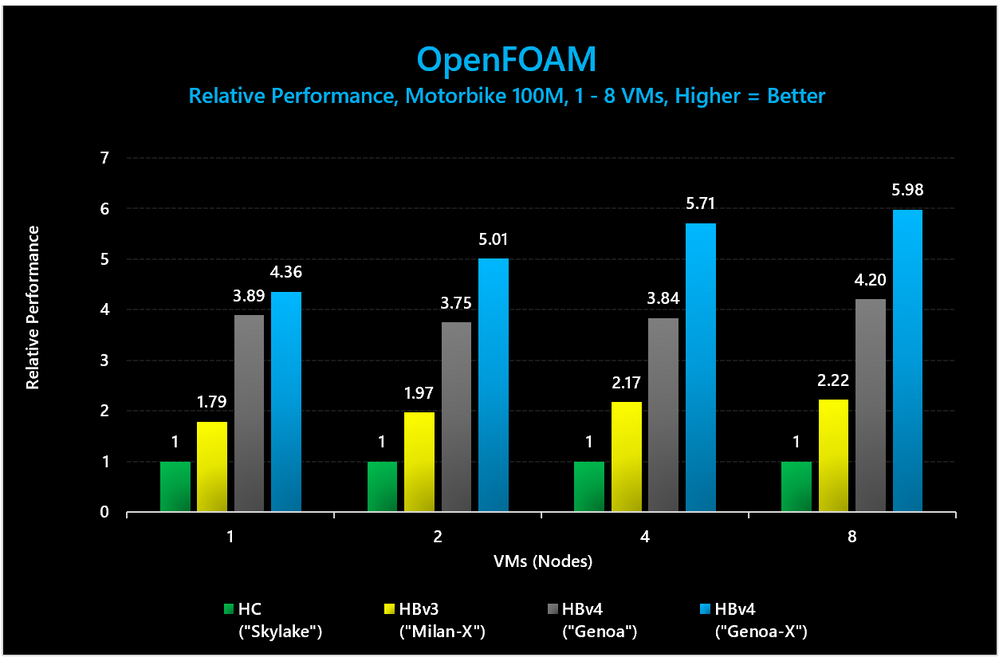

Figure 11: On OpenFOAM (Motorbike 100M) HBv4/HX VMs with 3D V-Cache provide up to a 1.49x performance uplift compared to HBv4/HX VMs without 3D V-Cache, and up to a 2.7x performance uplift compared to HBv3 series.

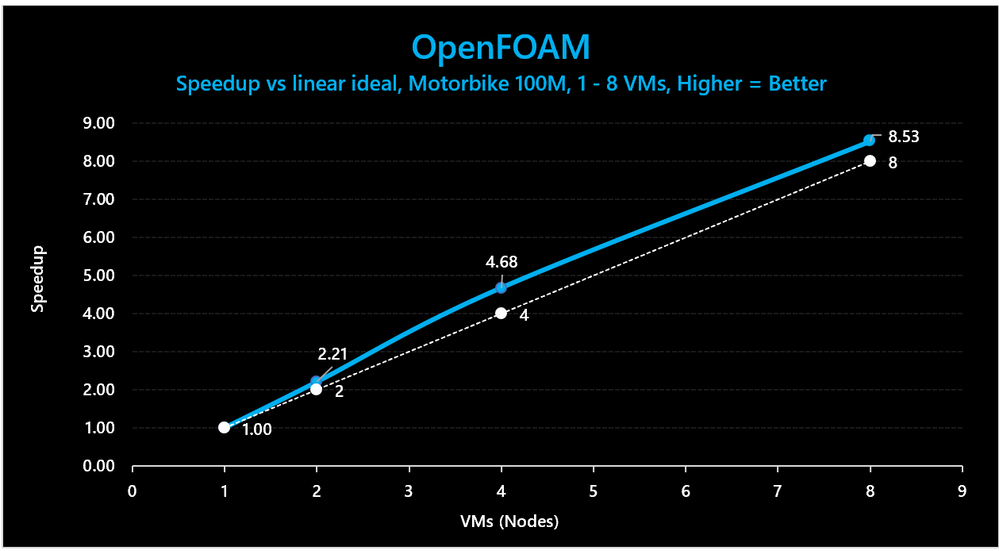

Figure 12: On OpenFOAM (Motorbike 100M) HBv4/HX VMs with 3D V-Cache show up to 117% scaling efficiency going from 1 to 8 VMs.

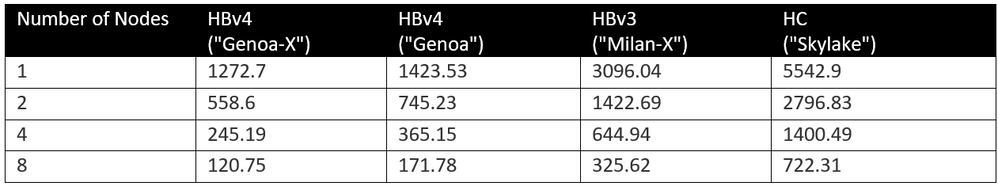

Absolute performance values for the benchmark represented in Figures 11 and 12 are shared below:

Table 8: OpenFOAM (Motorbike 100M cells) absolute performance (execution time (s), lower = better).

Hexagon Cradle CFD

Note: All Hexagon Cradle CFD performance tests used version 2022 with AlmaLinux 8.6.

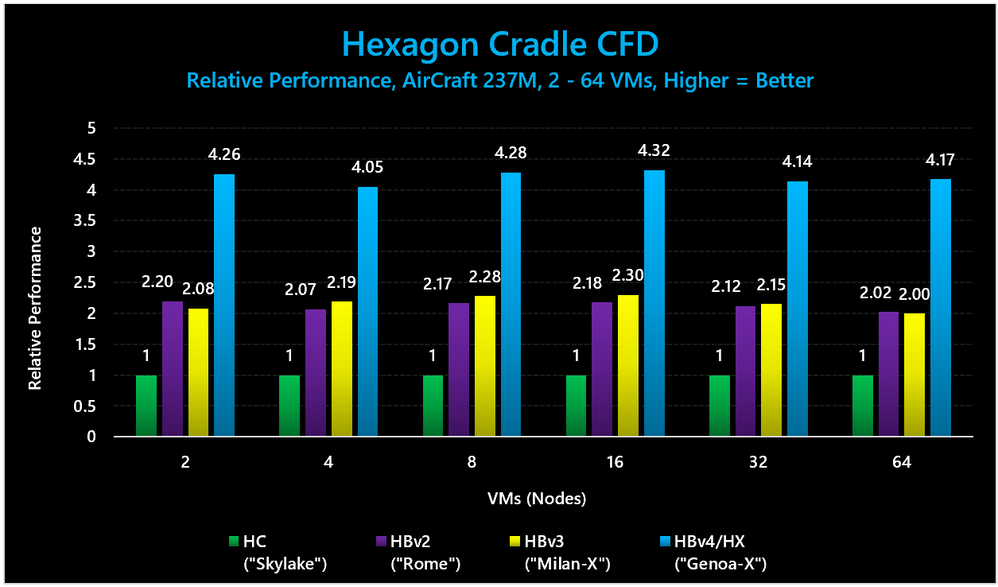

Figure 13: On Hexagon Cradle CFD (AirCraft 237M) HBv4/HX VMs with 3D V-Cache show up to a 2.1x performance uplift compared to HBv3 series.

Absolute performance values (execution time) for the benchmark represented in Figure 13 are shared below:

Table 9: Hexagon Cradle CFD (AirCraft 237M) absolute performance (execution time, lower = better).

Finite Element Analysis (FEA)

Altair RADIOSS

Note: All Altair RADIOSS performance tests used RADIOSS version 2021.1 with AlmaLinux 8.6.

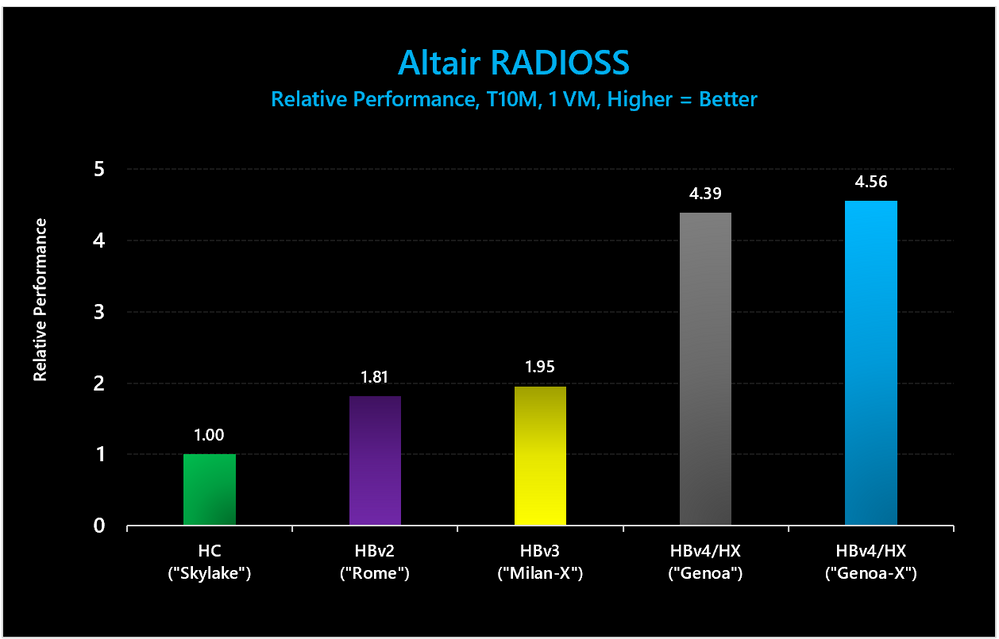

Figure 14: On Altair RADIOSS (T10M) HBv4/HX VMs with 3D V-Cache show a 1.03x performance uplift compared to HBv4/HX VMs without 3D V-Cache, and a 2.34x uplift compared to HBv3 series.

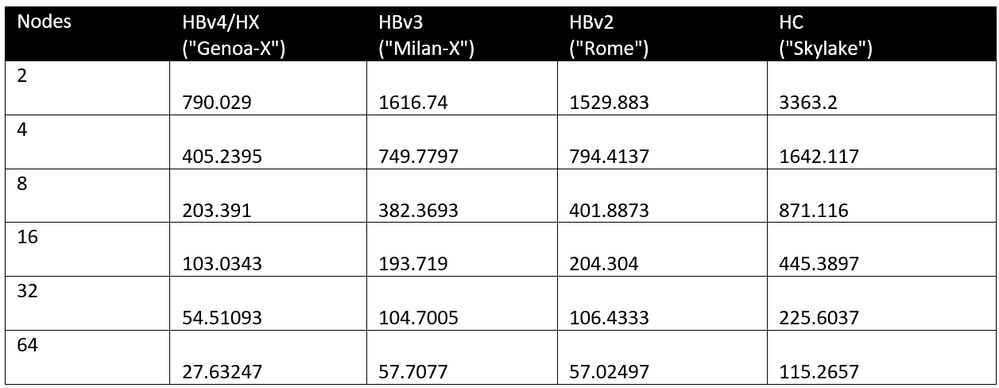

The absolute values for the benchmark represented in Figure 14 are shared below:

Table 10: Altair Radioss (T10M) absolute performance (execution time, lower = better).

MSC Nastran – version 2022.3

Note: All NASTRAN performance tests used NASTRAN 2022.3 and AlmaLinux 8.6.

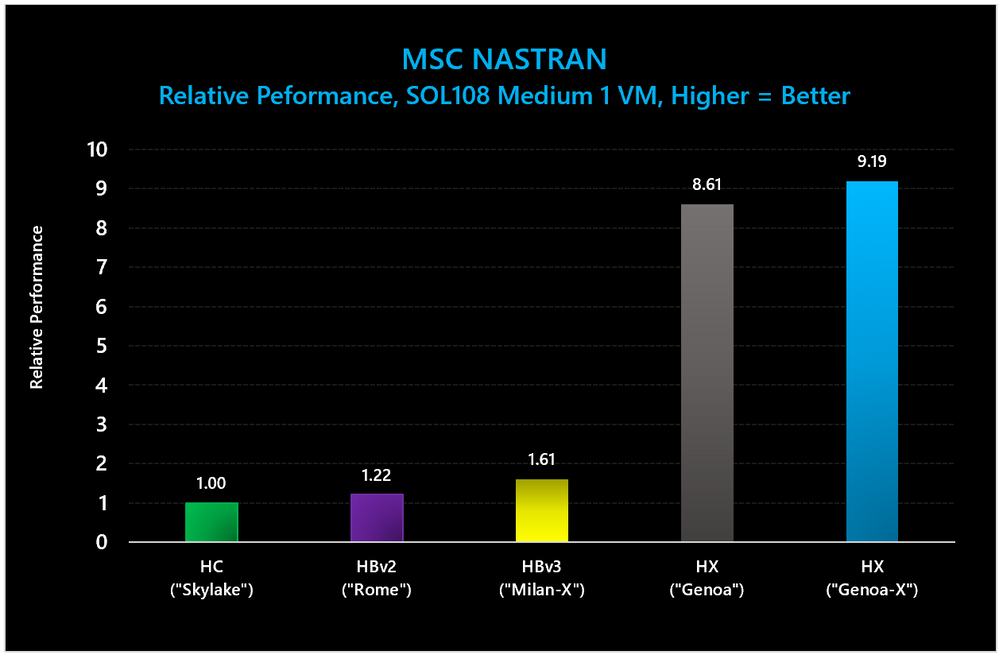

Note: for NASTRAN, the SOL108 medium benchmark was only tested on a HX-series VM because this VM type was created to support such large memory workloads. The larger memory footprint of HX-series (2x that of HBv4 series, and more than 3x that of HBv3 series) allows the benchmark to run completely out of DRAM, which in turn provides additional performance speedup on top of that provided by the newer 4th Gen EPYC processors with 3D V-Cache. As such, it would not be accurate to characterize the performance depicted below as “HBv4/HX” and we have instead marked it simply as “HX.”

Figure 15: On MSC NASTRAN (SOL108 Medium) HX-series VMs with 3D V-Cache show a 1.07x performance uplift compared to HX-series without 3D V-Cache, and a 5.7x performance uplift compared to HBv3.

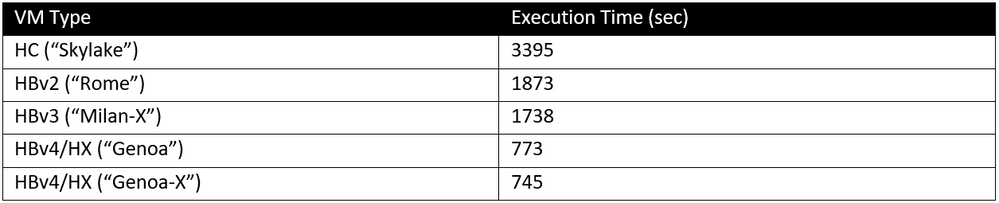

The absolute values for the benchmark represented in Figure 15 are shared below:

Table 11: MSC NASTRAN absolute performance (Execution time: lower = better).

Weather Simulation

WRF

Note: All WRF performance tests on HBv4/HX series (both with and without 3D V-Cache) utilized WRF 4.2.2, HPC-X MPI 2.15, and AlmaLinux 8.6.

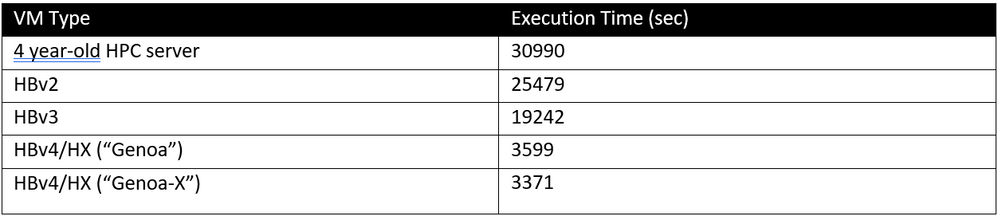

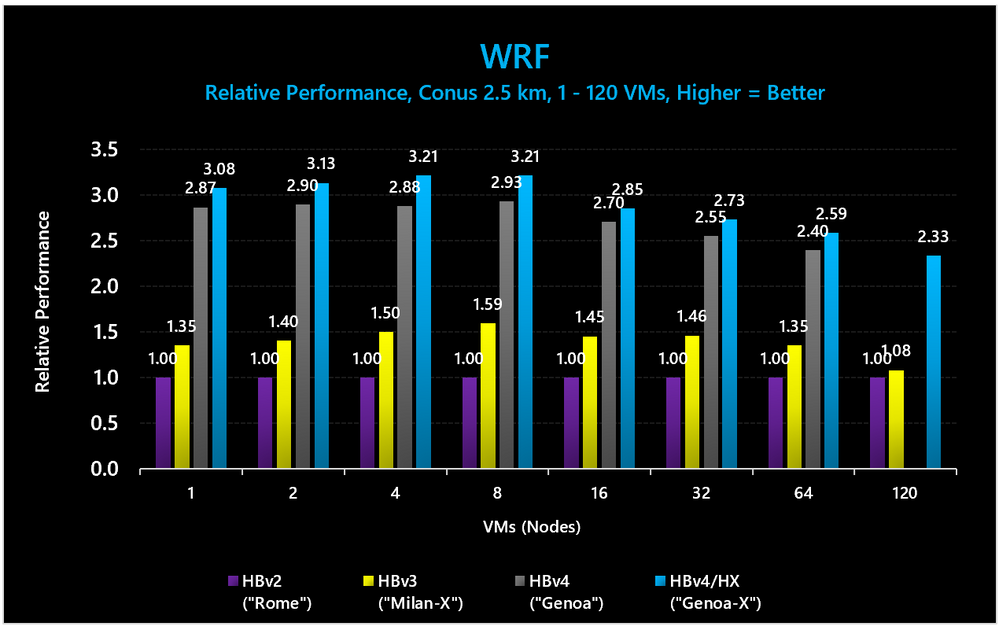

Figure 16: On WRF (Conus 2.5km) HBv4/HX VMs with 3D V-Cache show up to a 1.11x performance uplift compared to HBv4/HX VMs without 3D V-Cache, and up to a 2.24x performance uplift compared to HBv3 series.

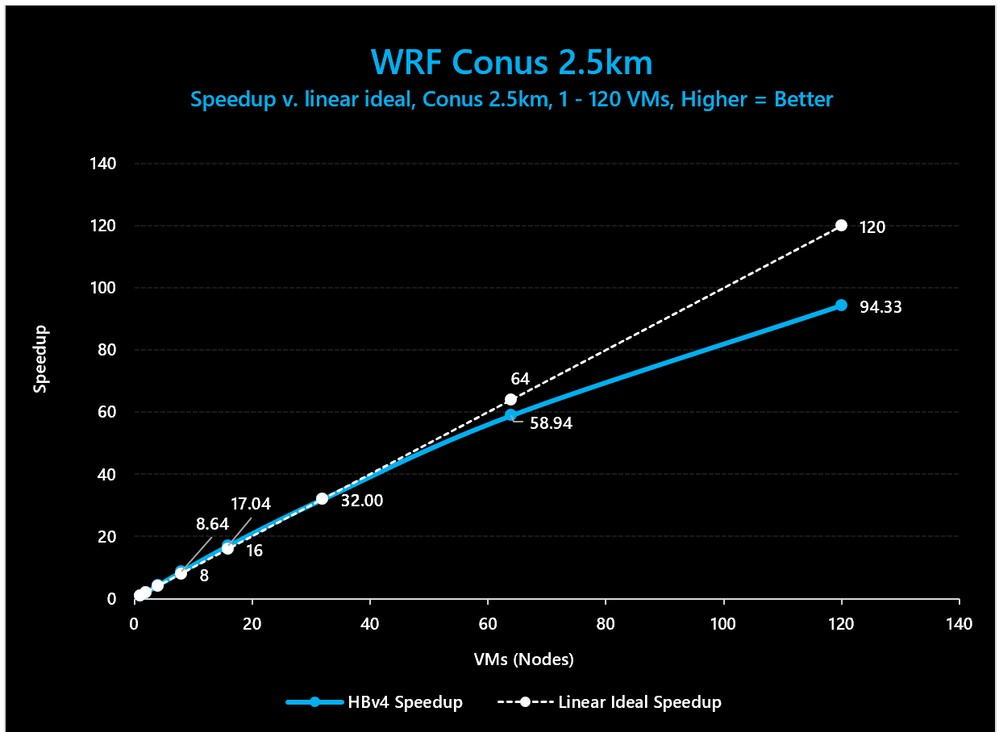

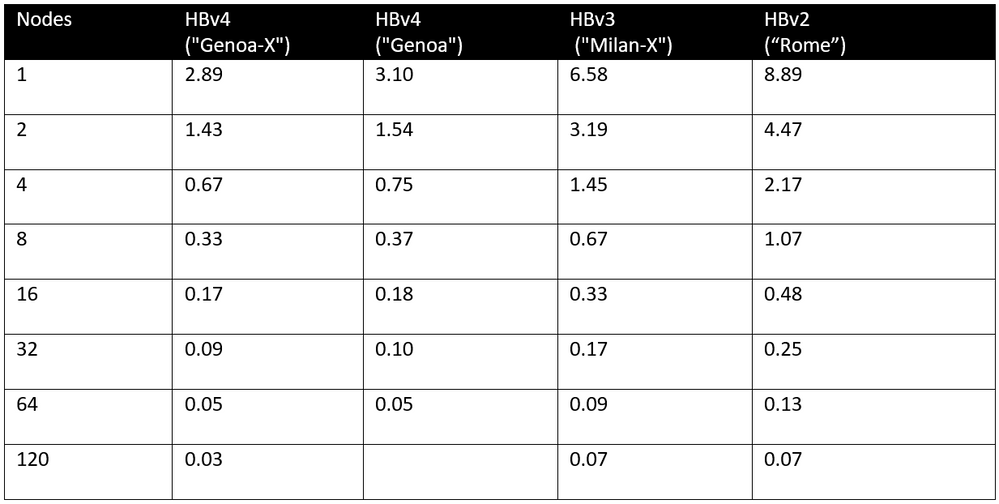

Figure 17: On WRF (Conus 2.5km) HBv4 VMs show 92% scaling efficiency up to 64 VMs. The drop to 79% scaling efficiency at 120 nodes suggests the model size may not be large enough to scale beyond 64 HBv4 VMs with ultra-high efficiency.
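The efficiency figures in this caption can be turned back into implied speedups, which makes the diminishing return beyond 64 VMs easier to see. The sketch below assumes efficiency is speedup divided by VM count, relative to a single VM; the VM counts and percentages are taken from the caption as written.

```python
# Sketch: implied speedup from scaling efficiency (speedup = efficiency * VMs).
def implied_speedup(efficiency: float, n_vms: int) -> float:
    """Speedup over one VM implied by a given scaling efficiency."""
    return efficiency * n_vms


# (VM count, scaling efficiency) pairs quoted in the Figure 17 caption.
points = [(64, 0.92), (120, 0.79)]

for n_vms, eff in points:
    speedup = implied_speedup(eff, n_vms)
    print(f"{n_vms} VMs at {eff:.0%} efficiency -> ~{speedup:.1f}x speedup")

# Marginal gain: extra speedup per extra VM between the two points.
extra_speedup = implied_speedup(0.79, 120) - implied_speedup(0.92, 64)
print(f"Added speedup per added VM: {extra_speedup / (120 - 64):.2f}")
```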

Absolute performance values (mean time per timestep) for the benchmark represented in Figures 16 and 17 are shared below:

Table 12: WRF (Conus 2.5km) absolute performance (time/time-step, lower = better).

Molecular Dynamics

NAMD – version 2.15

Note: all NAMD performance tests used NAMD version 2.15 with AlmaLinux 8.6 and HPC-X 2.12. For HBv4/HX and HC-series, the AVX512 Tiles binary was utilized to take advantage of AVX512 capabilities in both Xeon Platinum 1st Gen “Skylake” and 4th Gen EPYC “Genoa” and “Genoa-X” processors. In addition, on AMD systems xpmem will underperform (~10% performance loss) due to a large increase of page-faults. A workaround is to uninstall the kmod-xpmem package or unload the xpmem module. Applications will fall back to a supported shared memory transport provided by UCX without any other user intervention. A patch for XPMEM should be included in the July 2023 release of UCX/NVIDIA OFED.

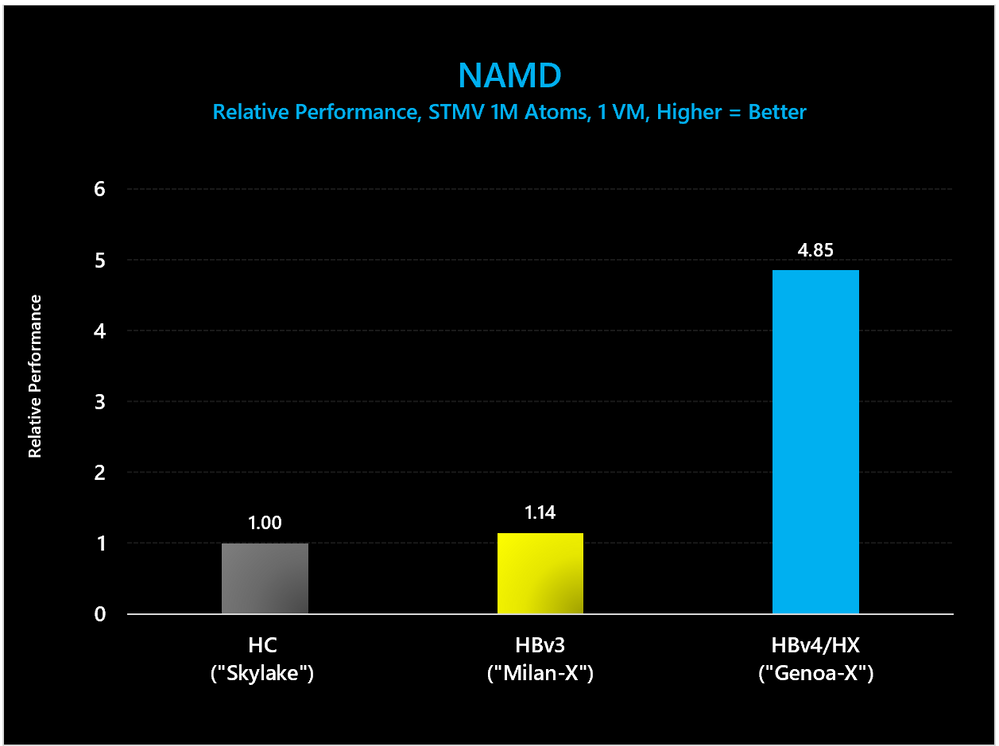

Figure 18: On NAMD (STMV 1M atoms) HBv4/HX VMs with 3D V-Cache show a 4.25x performance uplift compared to HBv3 series.

Absolute performance values (nanoseconds per day) for the benchmark represented in Figure 18 are shared below:

Table 13: NAMD (STMV 1M atoms) absolute performance (nanoseconds/day, higher = better).

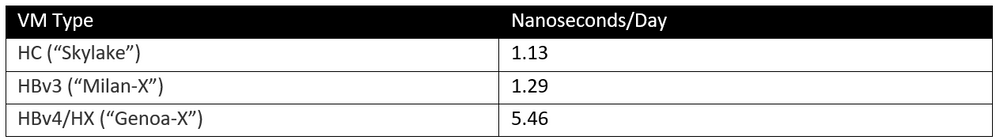

Figure 19: HBv4/HX VMs with 3D V-Cache scaling efficiency on NAMD (STMV 210M atoms), with 100% scaling efficiency at 64 VMs and 92% scaling efficiency at 128 VMs.

Rendering

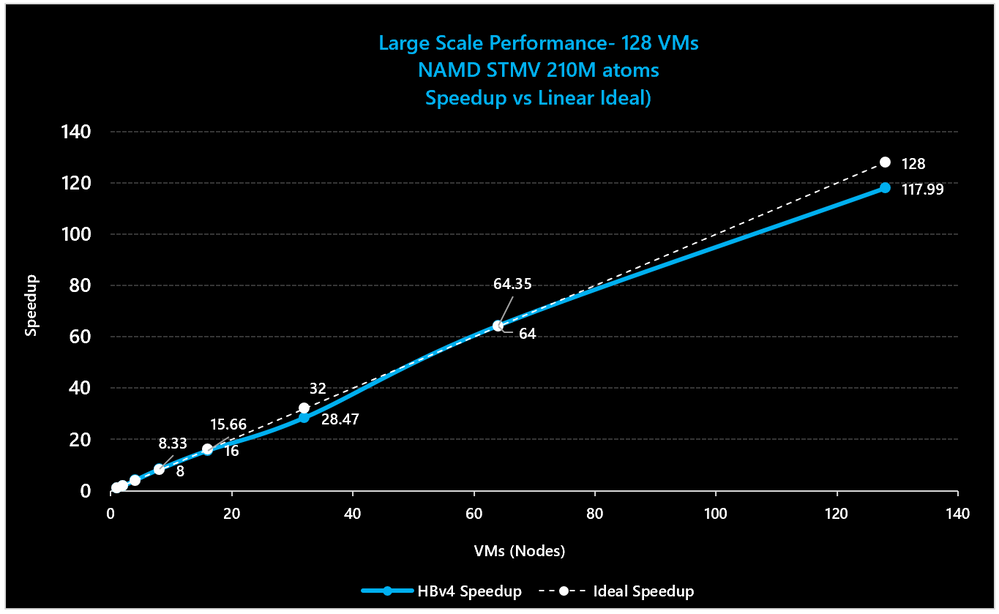

Chaos V-Ray

Note: All Chaos V-Ray performance tests used version 5.02.00. All tests on HBv4/HX VMs used AlmaLinux 8.6, while all tests on HBv3, HBv2, and HC utilized CentOS 7.9. There are no known performance advantages, however, from using the newer software versions. Rather, each was used due to validation coverage by AMD.

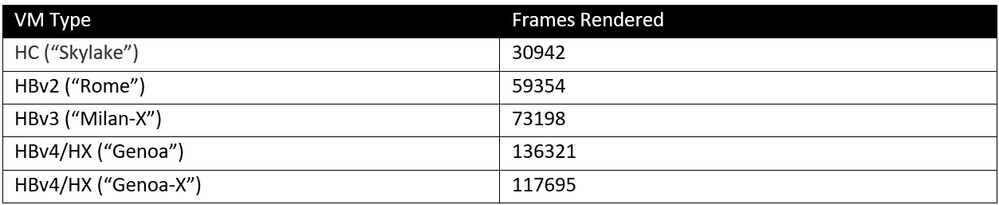

Figure 20: On Chaos V-Ray 5, HBv4/HX VMs with 3D V-Cache yield 14% lower performance than HBv4/HX series VMs without 3D V-Cache, and show a 1.6x performance uplift compared to HBv3 series. The lower performance of the 4th Gen EPYC processors with 3D V-Cache as compared to those without 3D V-Cache is unsurprising, as this workload is heavily compute bound and thus benefits from the higher power budget afforded to the cores on standard 4th Gen EPYC processors.
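The percentages in this caption can be restated as multipliers to make them comparable with the uplift figures used elsewhere in the article. The sketch below derives them from the two quoted numbers. The derived standard "Genoa" versus HBv3 ratio is not stated in the article and is shown only as an implication of those numbers.

```python
# Sketch: restate "14% lower performance" as relative multipliers.
def relative_performance(percent_lower: float) -> float:
    """Performance relative to the baseline, given a percent decrease."""
    return 1.0 - percent_lower / 100.0


GENOA_X_VS_HBV3 = 1.6   # from the caption

genoa_x_vs_genoa = relative_performance(14.0)          # Genoa-X relative to Genoa
genoa_vs_genoa_x = 1.0 / genoa_x_vs_genoa              # Genoa relative to Genoa-X
genoa_vs_hbv3 = GENOA_X_VS_HBV3 / genoa_x_vs_genoa     # derived, not quoted

print(f"Genoa-X vs Genoa: {genoa_x_vs_genoa:.2f}x")
print(f"Genoa vs Genoa-X: {genoa_vs_genoa_x:.2f}x")
print(f"Genoa vs HBv3 (derived): {genoa_vs_hbv3:.2f}x")
```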

Absolute performance values (frames rendered) for the benchmark represented in Figure 20 are shared below:

Table 14: Chaos V-Ray 5 absolute performance (frames rendered, higher = better).

Chemistry

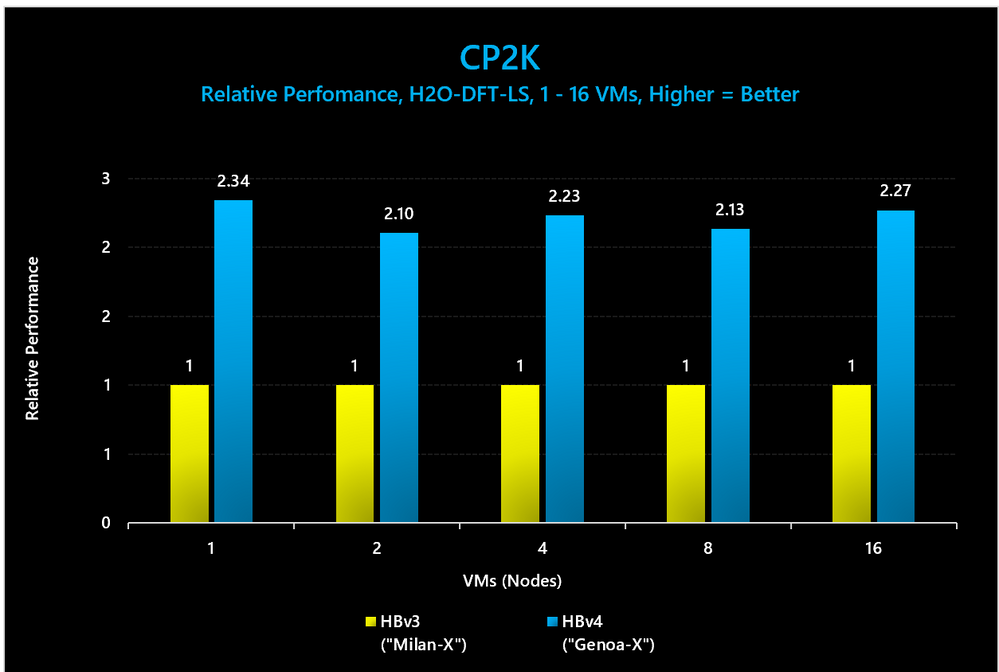

CP2K

Note: All CP2K performance tests used version 9.1. All tests on HBv4/HX VMs used AlmaLinux 8.7 and HPC-X 2.15, while all tests on HBv3 VMs used CentOS 8.1 and HPC-X 2.8.3. There are no known performance advantages, however, from using the newer software versions. Rather, each was used due to validation coverage by each of NVIDIA and AMD.

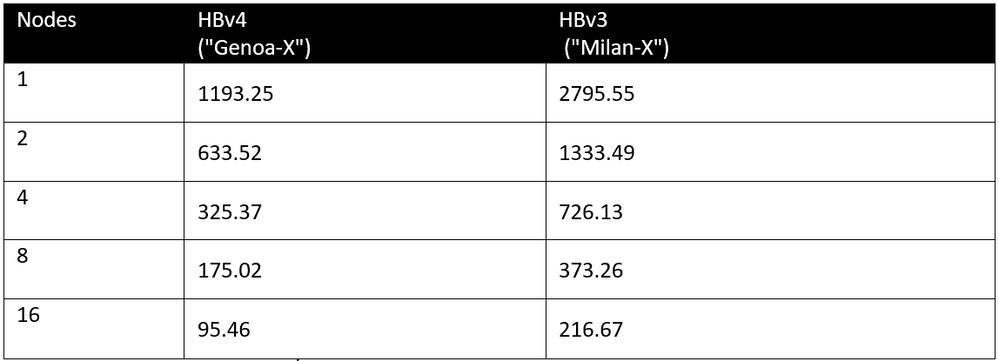

Figure 21: On CP2K (H2O-DFT-LS) HBv4/HX VMs with 3D V-Cache show a performance uplift of up to 2.34x compared to HBv3 series.

Absolute performance values (average execution time) for the benchmark represented in Figure 21 are shared below:

Table 15: CP2K (H2O-DFT-LS) absolute performance (execution time, lower = better).
