- Azure High Performance Computing (HPC) Blog

- Massive Scaling of Barracuda Virtual Reactor on Azure-GPU

1. Introduction:

- We are excited to share with you the latest benchmarks for Barracuda Virtual Reactor on the Azure NDv2 ('Standard_ND40rs_v2') and ND A100 v4 ('Standard_ND96asr_v4') virtual machines (VMs), accelerated by NVIDIA V100 and A100 Tensor Core GPUs.

- The current study follows up on the original study, published in January 2022, which used a relatively small generic model. That generic model is referred to as Model-1 in this article.

- The case used in the current study is Encina's production model, a non-circulating fluidized bed reactor. It is referred to as Model-2 in this article.

- Encina's model is the largest real-world Virtual Reactor simulation ever run. It took full advantage of the capacity and speed of Azure's advanced GPU systems and reached a 506X speedup compared to the serial CPU run.

- The results of this study are considered a breakthrough for running Barracuda Virtual Reactor on any public cloud platform.

- A full description of the models, Azure's GPU specs, and the results is presented and analyzed in the next sections.

2. Study Models:

2.1 Model-1: Generic benchmark model for circulating fluidized bed "CFB" reactor.

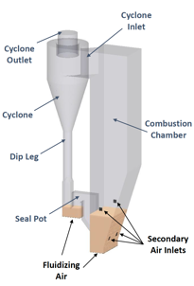

2.1.1 Model Configuration & Mesh: Figure-1 shows the schematic diagram of the model, while Figure-2 shows the simulation mesh.

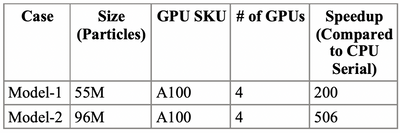

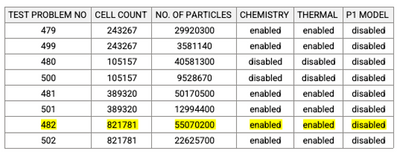

2.1.2 Tests Conducted: Simulation tests were conducted for 8 different sizes of Model-1, with the number of solid particles ranging from 30M to 55M. Table-1 summarizes all 8 tests, including the cell count for the fluid part, the number of particles for the solid part, and the physical models enabled in each test. The biggest model (55M particles) is highlighted in Table-1 and the results were published here.

Figure-2: Simulation Mesh for Model-1

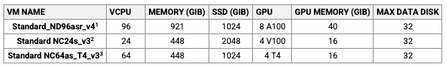

2.1.3 GPUs Used: Table-2 shows the specs of the three Azure VM series used (ND A100 v4 series, NCsv3-series and NCasT4_v3-series), with the results of the ND A100 v4 highlighted.

=================================================================

1. Standard_ND96asr_v4: This VM is powered by NVIDIA A100 Tensor Core GPUs and 96 physical 2nd-generation AMD EPYC™ CPU cores (2.44GHz). The “ND96asr_v4” VM has 8 GPUs with 40 GB of memory each, supported by 96 AMD processor cores with a total memory of 921GB. Each GPU features NVLINK 3.0 connectivity for communication within the VM.

2. Standard NC24s_v3: This VM belongs to the NCsv3-series. These VMs are powered by NVIDIA V100 Tensor Core GPUs, which can provide 1.5x the computational performance of the NCv2-series.

3. Standard NC64as_T4_v3: This VM belongs to the NCasT4_v3-series. These VMs are powered by NVIDIA T4 GPUs and AMD EPYC 7V12 (Rome) CPUs. The “NC64as_T4_v3” VM has 4 NVIDIA T4 GPUs with 16 GB of memory each and up to 64 non-multithreaded AMD processor cores with a total memory of 448GB.

=================================================================

2.1.4 Results: The results of the A100 GPU for Model-1 were presented here.

2.2 Model-2: Encina's production model for a non-circulating fluidized bed reactor.

2.2.1 Model Configuration & Mesh:

* This is a non-circulating fluidized bed reactor with both the chemical and thermal model equations enabled.

* As the physical model is proprietary, its configuration can't be presented. The simulation mesh is shown in Figure-3 and the simulation details are listed in Table-3.

Figure-3 Simulation Mesh for Model-2

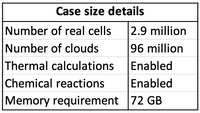

Table-3: Simulation Details of Model-2

* With 96M solid particles (# of clouds), this model is much larger than the simulations most Barracuda users typically run, and it is considered the largest real-world Virtual Reactor simulation ever run.

* The capacity and speed of Azure's advanced GPU systems allowed users to overcome the hardware constraints of running such a large model.

2.2.2. Azure GPUs Used: Specs and Features

* Two of Azure's GPU accelerated VMs were used as below:

# V100: NDv2 series [instance name: "Standard_ND40rs_v2"]

> Specs:

^ Powered by 8 NVIDIA Tesla V100 Tensor Core NVLINK-connected GPUs, each with 32 GB of GPU memory.

^ Each NDv2 VM also has 40 non-HyperThreaded Intel Xeon Platinum 8168 (Skylake) CPU cores and 672 GiB of system memory.

> Uses: Best suited for AI Training, AI Inference, HPC.

# A100: ND A100 v4 series [instance name: "Standard_ND96asr_v4"]

> Specs:

^ Powered by 8 NVIDIA Ampere A100 Tensor Core GPUs with 40 GB of memory each and 96 physical 2nd-generation AMD EPYC™ CPU cores (2.44GHz) with a total memory of 921GB.

^ Each GPU features NVLINK 3.0 connectivity for communication within the VM.

^ ND A100 v4-based deployments can scale up to thousands of GPUs with 1.6 Tb/s of interconnect bandwidth per VM. Each GPU within the VM is provided with its own dedicated, topology-agnostic NVIDIA Quantum 200Gb/s InfiniBand networking. These connections are automatically configured between VMs occupying the same virtual machine scale set, and support GPUDirect RDMA.

^ The scale-out InfiniBand interconnect is supported by a large set of existing AI and HPC tools built on NVIDIA's NCCL2 communication libraries for seamless clustering of GPUs.

> Uses: Best suited for DL Training, DL Inference, HPC, High Performance Data Analytics.

* Multi Instance GPU "MIG" Features for A100: Because each A100 VM has 8 distinct GPUs, multiple simulations can run on the same VM at the same time, each on its own set of GPUs. The benefits of this feature are analyzed in the "Results" section.
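As a rough illustration of running several simulations on different GPUs of one VM, the sketch below splits the 8 GPU indices of a VM into disjoint groups and builds one environment per job using the standard CUDA `CUDA_VISIBLE_DEVICES` variable. The helper names `gpu_groups` and `job_environments` are hypothetical, and the sketch assumes the solver is an ordinary CUDA application that honors this variable. It pins whole GPUs to jobs and does not configure MIG partitions, and the article does not state how the jobs were actually launched.

```python
import os


def gpu_groups(total_gpus, gpus_per_job):
    """Split GPU indices 0..total_gpus-1 into disjoint groups of equal size.

    GPUs that do not fill a complete group are left unused.
    """
    if gpus_per_job < 1 or gpus_per_job > total_gpus:
        raise ValueError("gpus_per_job must be between 1 and total_gpus")
    ids = list(range(total_gpus))
    last_start = total_gpus - gpus_per_job
    return [ids[i:i + gpus_per_job] for i in range(0, last_start + 1, gpus_per_job)]


def job_environments(total_gpus=8, gpus_per_job=4):
    """Build one process environment per job, each seeing only its own GPUs."""
    envs = []
    for group in gpu_groups(total_gpus, gpus_per_job):
        env = dict(os.environ)
        # CUDA applications only enumerate the devices listed here.
        env["CUDA_VISIBLE_DEVICES"] = ",".join(str(g) for g in group)
        envs.append(env)
    return envs


# Two 4-GPU jobs on an 8-GPU VM, matching the 4-GPU setting used in the study.
for env in job_environments(8, 4):
    print(env["CUDA_VISIBLE_DEVICES"])
    # A real launcher would pass `env` to subprocess.Popen together with the
    # solver command line, which is not given in the article.
```

Each environment can then be handed to a separate solver process, so the two jobs never compete for the same GPU.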

2.2.3 Results:

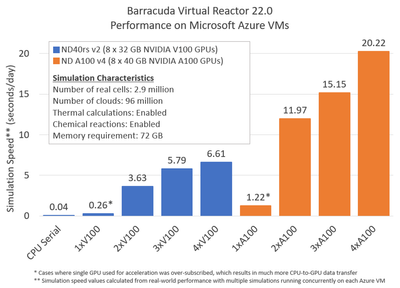

* A scaling study was conducted by running Model-2 on each of the two GPU SKUs (V100 & A100) with different numbers of GPUs, ranging from 1 to 5 of the 8 GPUs in the VM.

* The model scaled well from 1 to 4 GPUs per VM on both GPU SKUs (A100 & V100), as shown in Figure-4, while no extra gain was achieved when using more than 4 GPUs. Therefore, 4 GPUs is considered the "sweet spot" in the scaling study for each of the GPU SKUs.

Figure-4: Scaling Study for Model-2 using both GPU SKUs (A100 & V100).

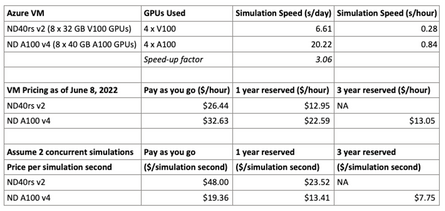
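A scaling study like the one above can be summarized with two small helpers: one that reports speedup and parallel efficiency relative to the smallest GPU count, and one that picks the GPU count after which adding GPUs no longer pays off. This is a minimal sketch, not the procedure used in the study. The function names, the 5% gain threshold, and above all the speeds in `example_speeds` are hypothetical placeholders chosen only to exercise the code; they are not the measured values behind Figure-4.

```python
def scaling_table(speeds):
    """Return (gpu_count, speedup, efficiency) rows for a scaling study.

    `speeds` maps GPU count to simulation speed (higher is faster).
    Speedup and efficiency are relative to the smallest GPU count.
    """
    base_count = min(speeds)
    base_speed = speeds[base_count]
    rows = []
    for count in sorted(speeds):
        speedup = speeds[count] / base_speed
        efficiency = speedup / (count / base_count)
        rows.append((count, speedup, efficiency))
    return rows


def sweet_spot(speeds, min_gain=0.05):
    """Return the largest GPU count still reached with a relative gain >= min_gain."""
    counts = sorted(speeds)
    best = counts[0]
    for prev, nxt in zip(counts, counts[1:]):
        gain = speeds[nxt] / speeds[prev] - 1.0
        if gain < min_gain:
            break
        best = nxt
    return best


# HYPOTHETICAL normalized speeds, for illustration only (not from the article).
example_speeds = {1: 1.0, 2: 1.8, 4: 3.0, 5: 3.0}

for count, speedup, efficiency in scaling_table(example_speeds):
    print(f"{count} GPUs: speedup {speedup:.2f}, efficiency {efficiency:.2f}")
print("sweet spot:", sweet_spot(example_speeds))
```

With real measurements in place of the placeholder dictionary, a flat curve beyond 4 GPUs would make `sweet_spot` return 4, which is the behavior the study describes.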

* Performance Comparison between A100 & V100: When using 4 GPUs in the VM for both A100 & V100 the following performance difference is observed:

> Simulation Speed (wall clock seconds/day):

^ The A100 GPUs achieved a simulation speed of 20.22 wall clock seconds/day, outperforming the V100 GPUs, which achieved only 6.61 wall clock seconds/day.

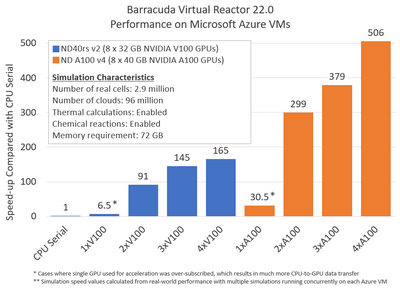

> Simulation speedup in comparison to the CPU Serial case: Simulation speedup = GPU Simulation Speed/CPU Serial Simulation Speed:

^ A100 achieved a speedup of 506 (20.22/0.04) compared to 165 (6.61/0.04) for V100, as shown in Figure-6.

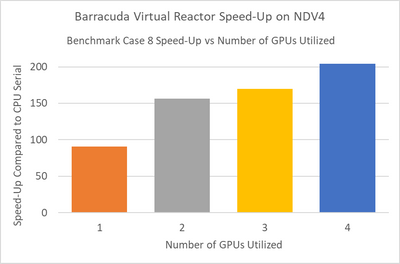

^ Comparing the A100 simulation speedup for Model-1 (55M particles, Figure-5) and Model-2 (96M particles, Figure-6) shows how well the A100 GPU handles large models: the speedup over the CPU serial case increased from 200X for Model-1 to 506X for Model-2, as summarized in Table-4. This compute capability makes it practical to build much larger simulation models.

Figure-5: Model-1 Simulation Speedup in Comparison to CPU Serial Case.

Figure-6: Model-2 Simulation Speedup in Comparison to CPU Serial Case.

Table-4: A100 Capability; Increasing Simulation Speedup with Increasing the Model Size.
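The speedup figures quoted above follow directly from the formula "GPU simulation speed / CPU serial simulation speed". The short sketch below reproduces that arithmetic from the numbers in the text (20.22 and 6.61 for the 4-GPU runs, 0.04 for the CPU serial run); the variable and function names are illustrative only.

```python
# Simulation speeds quoted in the article, all in the same unit.
CPU_SERIAL_SPEED = 0.04
GPU_SPEEDS = {"A100": 20.22, "V100": 6.61}


def speedup(gpu_speed, cpu_speed=CPU_SERIAL_SPEED):
    """Speedup = GPU simulation speed / CPU serial simulation speed."""
    if cpu_speed <= 0:
        raise ValueError("cpu_speed must be positive")
    return gpu_speed / cpu_speed


speedups = {name: speedup(speed) for name, speed in GPU_SPEEDS.items()}
a100_over_v100 = GPU_SPEEDS["A100"] / GPU_SPEEDS["V100"]

for name, value in speedups.items():
    print(f"{name}: {value:.2f}x over CPU serial")
print(f"A100 vs V100: {a100_over_v100:.2f}x")
```

The unrounded values are about 505.5 for A100 and 165.25 for V100, which the article reports as 506 and 165. The A100 run is roughly 3.06 times faster than the V100 run at 4 GPUs.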

> Among the many reasons for the superior performance of the A100 GPU over the V100 are:

^ Memory/VM:

GPU side: 40 GB for A100 compared to 32GB for V100.

CPU side: 921GB for A100 compared to 672GB for V100

^ CPU Specs:

96 CPUs/VM for A100 compared to 40 CPUs/VM for V100

* Capacity Optimization Analysis:

> Multi Instance GPU "MIG" expands the performance and value of the NVIDIA A100. With MIG, an A100 GPU can be partitioned into as many as seven independent instances, giving multiple users access to GPU acceleration.

> With A100 40GB, each MIG instance can be allocated up to 5GB, and with A100 80GB’s increased memory capacity, that size is doubled to 10GB.

> MIG lets infrastructure managers offer a right-sized GPU with guaranteed quality of service (QoS) for every job, extending the reach of accelerated computing resources to every user.

* Financial Analysis: Cost analysis comparison between 4A100 & 4V100

Table-5 summarizes the simulation results of the A100 & V100 VMs when using 4 GPUs in each VM. It includes the 3 available hourly pricing options (PAYG, 1-year reservation & 3-year reservation) with their cost analysis, and reports the cost ($/simulation second) for each option when taking advantage of MIG by running 2 simulations on the same VM.

Table-5: Summary of the simulation results of A100 & V100 using 4 GPUs in each VM.

> The fastest hardware, the A100 (by simulation speed), also offers the best value ($/simulation second).

> When considering price per simulation second, the A100 system has a better value.

> Given its higher performance to price ratio for Barracuda simulations (compared to the V100-based system), customers can run at maximum speed and save money by using the A100-based system.
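The cost metric in Table-5 ($/simulation second) can be sketched as the VM's daily cost divided by the simulated seconds produced per day. The sketch below rests on several assumptions that are not confirmed by the article: that the reported speeds mean simulated seconds per wall-clock day, that each of the concurrent simulations runs at the reported 4-GPU speed, and that the VM is billed for the full day. No hourly prices appear in the text, so the example uses a unit price of 1 $/hour instead of real rates, and the function names are hypothetical.

```python
# 4-GPU simulation speeds quoted in the article.
SPEED_4GPU = {"A100": 20.22, "V100": 6.61}


def cost_per_sim_second(hourly_price_usd, sim_seconds_per_day, concurrent_sims=1):
    """Cost of one simulated second, in USD.

    ASSUMPTION: speed is simulated seconds per wall-clock day, and every
    concurrent simulation on the VM runs at that same speed.
    """
    if sim_seconds_per_day <= 0:
        raise ValueError("sim_seconds_per_day must be positive")
    if concurrent_sims < 1:
        raise ValueError("concurrent_sims must be at least 1")
    daily_cost = hourly_price_usd * 24.0
    return daily_cost / (sim_seconds_per_day * concurrent_sims)


def break_even_price_ratio(fast_speed, slow_speed):
    """Price ratio below which the faster VM is also cheaper per simulated second."""
    return fast_speed / slow_speed


# Cost per simulated second for each 1 $/hour of VM price (placeholder price),
# with 2 simulations sharing the 8-GPU VM.
for name, speed in SPEED_4GPU.items():
    unit_cost = cost_per_sim_second(1.0, speed, concurrent_sims=2)
    print(f"{name}: {unit_cost:.4f} $ per simulated second per $/hour")

ratio = break_even_price_ratio(SPEED_4GPU["A100"], SPEED_4GPU["V100"])
print(f"A100 is the better value while its hourly price is below {ratio:.2f}x the V100 price")
```

Under these assumptions, the A100 VM stays cheaper per simulated second as long as its hourly price is less than about 3.06 times that of the V100 VM, for any of the three pricing options.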

3. Summary:

The current study highlighted the compute capacity of Azure's NDv2 series (instance 'Standard_ND40rs_v2') and ND A100 v4 series (instance 'Standard_ND96asr_v4') virtual machines, powered by NVIDIA V100 and A100 Tensor Core GPUs. The study ran the Barracuda Virtual Reactor "BVR" code on the largest real-world, industry-grade Virtual Reactor simulation ever run, a model much larger than the simulations most Barracuda users typically run. The GPU-accelerated ND A100 v4 VM handled this massive model well, scaling it to a speedup of 506X compared to the CPU serial case. NVIDIA's Multi Instance GPU "MIG" technology expands the performance and value of the NVIDIA A100, allowing users to run as many as 7 concurrent simulations on the same GPU VM. This not only extends the reach of accelerated computing resources to every user but also decreases the cost per simulation, making the A100 system the better value.

Credits: This work was made possible by the close collaboration and contribution of the following orgs and individuals:

- CPFD: Sam Clark & Peter Blaser

- Encina: Song Wang

- Microsoft Azure: Ahmed Taha & Gauhar Junnarkar
