Integrating Azure Managed Lustre Filesystem (AMLFS) into CycleCloud HPC Cluster

Overview:

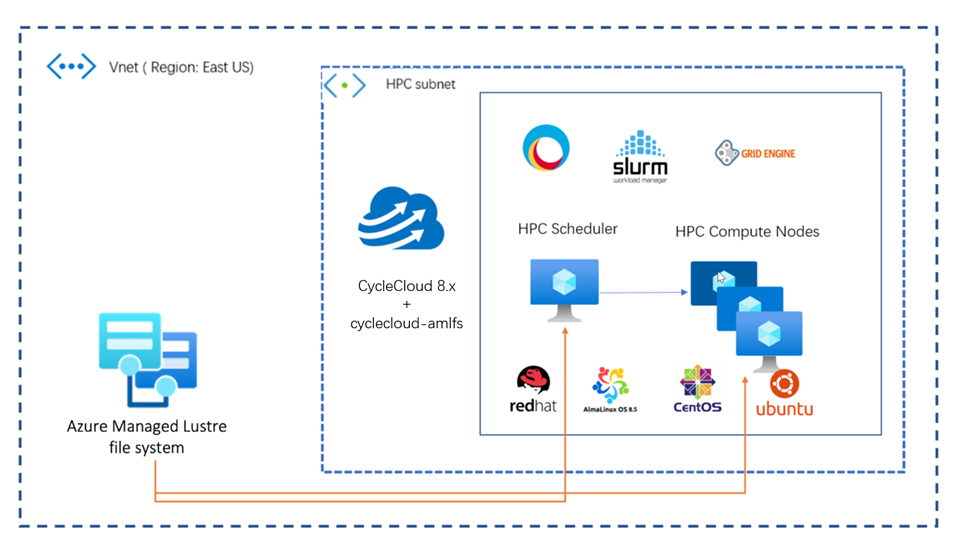

This blog shows how to integrate Azure Managed Lustre Filesystem (AMLFS) into a CycleCloud HPC cluster using a custom project named cyclecloud-amlfs.

Azure Managed Lustre delivers the time-tested Lustre file system as a first-party managed service on Azure. Long-time users of Lustre on-premises can now leverage the benefits of a complete HPC solution, including compute and high-performance storage, delivered on Azure.

Azure CycleCloud is an enterprise-friendly tool for orchestrating and managing High-Performance Computing (HPC) environments on Azure. With CycleCloud, users can provision infrastructure for HPC systems, deploy familiar HPC schedulers (Slurm, PBSpro, Grid Engine, etc.), and automatically scale the infrastructure to run jobs efficiently at any scale.

The cyclecloud-amlfs project installs the Lustre client software and automatically mounts the Lustre filesystem on hundreds or thousands of HPC compute nodes deployed via Azure CycleCloud.

Pre-Requisites:

- CycleCloud must be installed and running (CycleCloud 8.0 or later).

- Azure Managed Lustre filesystem must be configured and running.

- Supported OS versions:

  - CentOS 7 / RHEL 7

  - AlmaLinux 8.5 / 8.6

  - Ubuntu 18.04 LTS

  - Ubuntu 20.04 LTS

- Supported CycleCloud templates:

  - Slurm

  - PBSpro

  - Grid Engine

Configuring the Project

- Open a terminal session on the CycleCloud server with the CycleCloud CLI enabled.

- Clone the cyclecloud-amlfs repo.

    git clone https://github.com/vinil-v/cyclecloud-amlfs

- Switch to the cyclecloud-amlfs project directory and upload the project to the CycleCloud locker.

    cd cyclecloud-amlfs/
    cyclecloud project upload <locker name>

- Import the required template (Slurm, OpenPBS, or Grid Engine).

    cyclecloud import_template -f templates/slurm_301-amlfs.txt

Note: If you are using cyclecloud-slurm 3.0.1 (which comes with CycleCloud 8.4), use the template named slurm_301-amlfs.txt. Other cyclecloud-slurm releases (2.x) can use the slurm-amlfs.txt template.
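The template choice in the note above can be sketched as a small shell helper. This is only an illustration: the function name `amlfs_slurm_template` is hypothetical, and it covers only the two cases the note mentions (3.0.1 and 2.x), rejecting anything else rather than guessing.

```shell
# Hypothetical helper (not part of cyclecloud-amlfs): map a cyclecloud-slurm
# version to the template file name given in the note above.
amlfs_slurm_template() {
    local version="$1"
    case "$version" in
        3.0.1) echo "slurm_301-amlfs.txt" ;;   # cyclecloud-slurm 3.0.1 (CycleCloud 8.4)
        2.*)   echo "slurm-amlfs.txt" ;;       # cyclecloud-slurm 2.x releases
        *)
            # The note does not say which template other versions need.
            echo "no template mapping known for cyclecloud-slurm $version" >&2
            return 1
            ;;
    esac
}
```

For example, `amlfs_slurm_template 3.0.1` prints `slurm_301-amlfs.txt`, which can then be passed to `cyclecloud import_template -f` with the path to the repo's templates directory.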

Configuring AMLFS in CycleCloud Portal

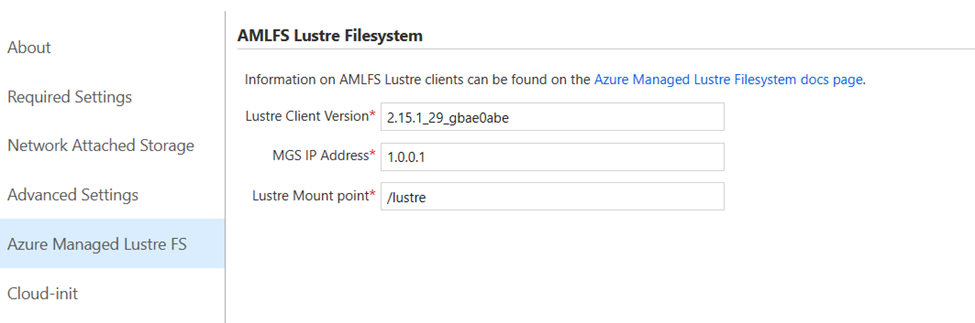

The following parameters are required for a successful configuration:

- Lustre client version

- MGS IP address

- Name of the Lustre mount point on the compute nodes

Refer to the Install Clients document to check the pre-built AMLFS client version for the selected OS. At the time of writing, the AMLFS client version is 2.15.1_29_gbae0ab. The Ubuntu version string uses hyphens ( - ) and the EL version string uses underscores ( _ ):

- Ubuntu: 2.15.1-29-gbae0abe

- RedHat / AlmaLinux / CentOS: 2.15.1_29_gbae0ab
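Because the hyphen and underscore forms are easy to mix up, a small lookup keyed on the OS can help when filling in the Lustre client version. The sketch below is an assumption-laden illustration: the function name `amlfs_client_version` is hypothetical, the OS identifiers are the `ID` values found in `/etc/os-release`, and the version strings are simply the ones quoted above, which may be outdated by the time you read this.

```shell
# Hypothetical helper: return the AMLFS client version string quoted in this
# post for a given /etc/os-release ID. Check the Install Clients document for
# the current version before relying on these values.
amlfs_client_version() {
    local os_id="$1"
    case "$os_id" in
        ubuntu)
            echo "2.15.1-29-gbae0abe" ;;   # Ubuntu form uses hyphens
        rhel|centos|almalinux)
            echo "2.15.1_29_gbae0ab" ;;    # EL form uses underscores
        *)
            echo "unsupported OS id: $os_id" >&2
            return 1
            ;;
    esac
}
```

On a node, this could be used as `. /etc/os-release && amlfs_client_version "$ID"`.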

Create a new cluster from the imported template (Slurm-AMLFS in this example) and, in the Azure Managed Lustre FS section, fill in the parameters listed above.

Start the cluster. Make sure that the AMLFS is running and that the MGS IP is reachable from all the nodes.
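One way to check reachability from a node is a quick TCP probe against the MGS. The following is a minimal sketch, not part of cyclecloud-amlfs: the function names are hypothetical, it assumes `bash` and `timeout` are available on the node, and it assumes the default Lustre LNet TCP port 988 (pass a different port as the second argument if your setup differs).

```shell
# Hypothetical helper: succeed only for a dotted-quad IPv4 address
# with four numeric octets in the range 0-255.
is_ipv4() {
    printf '%s\n' "$1" | awk -F. '
        NF != 4 { exit 1 }
        { for (i = 1; i <= 4; i++) if (($i !~ /^[0-9]+$/) || ($i > 255)) exit 1 }'
}

# Hypothetical helper: try to open a TCP connection to the MGS.
# Returns 0 if the port accepts a connection, 2 for a malformed address,
# and non-zero otherwise (refused, filtered, or timed out after 3 seconds).
mgs_reachable() {
    local mgs_ip="$1"
    local port="${2:-988}"   # assumed default Lustre LNet port
    if ! is_ipv4 "$mgs_ip"; then
        echo "not a valid IPv4 address: $mgs_ip" >&2
        return 2
    fi
    timeout 3 bash -c "exec 3<>/dev/tcp/$mgs_ip/$port" 2>/dev/null
}
```

With the MGS address from the example output in this post, the check would be `mgs_reachable 10.222.1.62 && echo "MGS reachable"`.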

Testing

Log in to a node and run df -t lustre to check the mounted Lustre filesystem. The following output is from CycleCloud 8.4, cyclecloud-slurm 3.0.1, and AlmaLinux 8.6.

    [root@s1-scheduler ~]# jetpack config cyclecloud.cluster_init_specs --json | egrep 'project\"|version'
    "project": "slurm",
    "version": "3.0.1"
    "project": "cyclecloud-amlfs",
    "version": "1.0.0"
    "project": "slurm",
    "version": "3.0.1"
    [root@s1-scheduler ~]# df -t lustre
    Filesystem                  1K-blocks  Used   Available Use% Mounted on
    10.222.1.62@tcp:/lustrefs 17010128952  1264 16151959984   1% /lustre
    [root@s1-scheduler ~]# lfs df
    UUID                    1K-blocks  Used   Available Use% Mounted on
    lustrefs-MDT0000_UUID   628015712  5872   574901108   1% /lustre[MDT:0]
    lustrefs-OST0000_UUID 17010128952  1264 16151959984   1% /lustre[OST:0]
    filesystem_summary:   17010128952  1264 16151959984   1% /lustre
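To run the same check across many nodes without reading the output by eye, the df output can be parsed in a script. The sketch below is illustrative only: `lustre_source_for` is a hypothetical name, and it simply looks for a row whose last column equals the given mount point in df-style output read from standard input.

```shell
# Hypothetical helper: read `df` output on stdin and print the filesystem
# source mounted at the given mount point. Exits non-zero if no row matches.
lustre_source_for() {
    local mount_point="$1"
    awk -v mp="$mount_point" '
        NR > 1 && $NF == mp { print $1; found = 1 }   # skip the header row
        END { exit !found }'
}
```

On a node this could be used as `df -P -t lustre | lustre_source_for /lustre`, which for the example above would print `10.222.1.62@tcp:/lustrefs`. The `-P` flag keeps each filesystem on a single line so the last column is always the mount point.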

All the best!
