
Migrating existing HPC workloads from Azure CycleCloud to Azure Batch


Published Oct 16 2023 05:01 AM


Understanding Azure Batch for HPC

Azure Batch is a managed Azure service for running large-scale parallel and HPC workloads efficiently. It's known for its simplicity and versatility, and its range of features makes it a modern choice for organisations with compute-intensive tasks. Key features of Azure Batch include:

- Auto-Scaling: Azure Batch can automatically scale up or down based on the demands of your workload, ensuring optimal resource utilisation and cost-effectiveness.

- Infrastructure-Agnostic: It doesn't tie you to a specific infrastructure; you can use Azure Batch to manage and run tasks on a variety of virtual machine sizes and types.

- REST API: Azure Batch provides a RESTful API that allows you to manage your batch jobs programmatically.

- Application Packages: You can package your applications and dependencies with your jobs, ensuring consistent execution environments.

- Security: Azure Batch supports Microsoft Entra ID authentication and managed identities, helping you protect your data and applications.
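Auto-scaling is configured per pool with an autoscale formula that the Batch service evaluates periodically. The helper below is a minimal, hypothetical sketch: it renders a formula modelled on the pending-tasks example in the Azure Batch autoscale documentation, with the node limits turned into parameters. The resulting string would then be applied to a pool, for instance through the `azure-batch` SDK's `pool.enable_auto_scale` operation or the REST API; that call is not shown here.

```python
def build_autoscale_formula(max_nodes: int, starting_nodes: int = 1,
                            sample_seconds: int = 180,
                            min_sample_percent: int = 70) -> str:
    """Render an Azure Batch autoscale formula that sizes a pool by pending tasks.

    Hypothetical helper; the formula body mirrors the documented
    $PendingTasks example, with the limits turned into parameters.
    """
    if max_nodes < starting_nodes:
        raise ValueError("max_nodes must be >= starting_nodes")
    interval = f"{sample_seconds} * TimeInterval_Second"
    lines = [
        f"startingNumberOfVMs = {starting_nodes};",
        f"maxNumberofVMs = {max_nodes};",
        # Percentage of pending-task samples available over the interval.
        f"pendingTaskSamplePercent = $PendingTasks.GetSamplePercent({interval});",
        # Too few samples: use the starting size; otherwise average the pending tasks.
        f"pendingTaskSamples = pendingTaskSamplePercent < {min_sample_percent} ? "
        f"startingNumberOfVMs : avg($PendingTasks.GetSample({interval}));",
        # Never exceed the configured maximum number of dedicated nodes.
        "$TargetDedicatedNodes = min(maxNumberofVMs, pendingTaskSamples);",
        # Let running tasks finish before a node is removed.
        "$NodeDeallocationOption = taskcompletion;",
    ]
    return "\n".join(lines)


print(build_autoscale_formula(max_nodes=25))
```

Keeping the formula in code makes the pool limits reviewable and versionable alongside the workload, rather than hand-edited in the portal.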

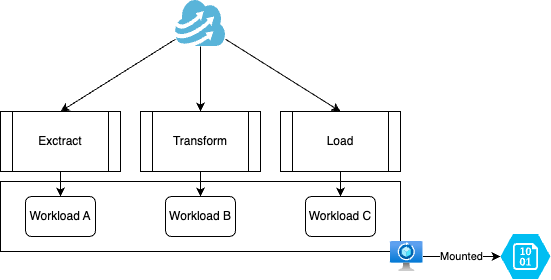

The Challenge of Adapting Existing Workloads

While Azure Batch offers many advantages, the transition from existing HPC solutions to Azure Batch can be complex. Many organisations run workloads, such as genome sequencing algorithms, weather forecasting models, or data transformation pipelines, that were originally designed for other HPC platforms.

These existing workloads may be tightly coupled with the features and nuances of the platform they were originally developed for. Adapting them to Azure Batch requires careful consideration and often involves overcoming various challenges.

The Integration Imperative

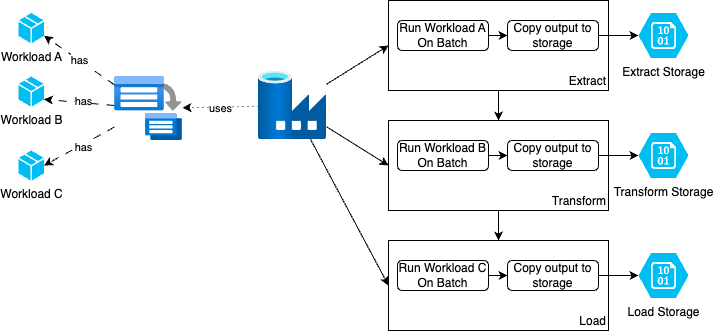

Integration with Azure Batch was a primary concern in the project. Even though Azure Data Factory was already using Azure Batch accounts for job execution, additional functionality was required.

Addressed Challenges

Migrating existing workloads to a new HPC solution is challenging. We identified the following main pain points to tackle:

1. A legacy workload codebase with obsolete dependencies

2. Unsupported virtual machine images that the workloads depend on

3. Providing users with real-time updates on job progress

4. Observability of workloads

5. Unhandled exceptions

Introducing the Azure Batch Manager

To streamline the process and abstract away the complexities of Azure Batch, we created the Azure Batch Manager, a private Python package. This package acts as a bridge between the intricacies of Azure Batch and the needs of HPC workload developers.

With the Azure Batch Manager:

- Developers can work at a higher level of abstraction, focusing on their specific workload requirements without delving into the inner workings of Azure Batch.

- Recommended Azure Batch best practices, a security-first approach, and a developer-friendly environment are provided out of the box, allowing developers to harness the full potential of Azure Batch.

Programming Model

HPC workloads in this architecture consist of two core packages: the workload package and the Batch Manager package. The workload package contains the specific business logic and tasks for the workload, while the Batch Manager package abstracts the complexities of Azure Batch infrastructure.

- Workload Package and Initialization

In this architecture, the HPC application is initialized in a `__main__.py` file, which serves as the entry point for the Batch Manager. This file initializes the workload provided by the workload package and runs it. Below is an example excerpt:

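```python
import logging
from pathlib import Path

# setup_logging, BatchClientSettings, BatchClientFactory, Application and
# ScrapingWorkload come from the private Batch Manager and workload packages;
# their import paths are not shown in this excerpt.

logger = logging.getLogger(__name__)

if __name__ == "__main__":
    # Configure logging from the logging.yaml file next to __main__.py.
    setup_logging(Path(__file__).parent / "logging.yaml")

    logger.debug("Bootstrapping HPC application.")

    # Load the Batch connection settings and create the Batch client.
    settings = BatchClientSettings()
    client = BatchClientFactory.create(settings)

    # Wrap the workload in an Application and run it.
    app = Application(ScrapingWorkload(batch_client=client))
    app.run()
```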
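The excerpt calls `setup_logging` but doesn't show it. A plausible shape, sketched below, is a thin wrapper around the standard library's `logging.config.dictConfig`. Assumptions: the YAML file is parsed with the third-party PyYAML package (`yaml.safe_load`), and the `DEFAULT_CONFIG` fallback used when the file is missing is hypothetical.

```python
import logging
import logging.config
from pathlib import Path
from typing import Any

# Hypothetical fallback configuration, used when no config file is found.
DEFAULT_CONFIG: dict[str, Any] = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "plain": {"format": "%(asctime)s %(levelname)s %(name)s: %(message)s"}
    },
    "handlers": {
        "console": {"class": "logging.StreamHandler", "formatter": "plain"}
    },
    "root": {"level": "DEBUG", "handlers": ["console"]},
}


def setup_logging(config_path: Path) -> None:
    """Configure logging from a YAML file, falling back to DEFAULT_CONFIG."""
    config = DEFAULT_CONFIG
    if config_path.is_file():
        # PyYAML is third-party and only needed when the file exists.
        import yaml

        with config_path.open(encoding="utf-8") as fh:
            config = yaml.safe_load(fh)
    logging.config.dictConfig(config)
```

Called as in the excerpt, `setup_logging(Path(__file__).parent / "logging.yaml")` would configure logging from the file next to `__main__.py` and otherwise fall back to console logging at `DEBUG` level.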

- Workload Definition

The workload class, derived from WorkloadBase, defines the essential components of your HPC workload. Two primary components of the workload definition are:

a. The Job Manager

The Job Manager plays a crucial role in orchestrating the execution of workload tasks. It is provided by the Batch Manager and can be customized by creating a custom implementation derived from the JobManager class. The JobManager offers a create_job method that allows you to define the tasks to be executed within a job.

Here's an example of how tasks are defined within a JobManager:

```python
def create_job(self) -> None:
    """Creates the job."""
    # Add one ExampleWorkloadTask with its input parameters.
    task = self.add_workload_task(
        ExampleWorkloadTask, {"input_example": "hello-world-task-1"}, []
    )
    # 'task' is a TaskSpecification and can be used to define task input and output files.
```
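To make the flow concrete, here is a self-contained sketch of a job manager whose `create_job` registers tasks through `add_workload_task`. The classes are hypothetical stand-ins, not the private Batch Manager API: the argument order mirrors the excerpt, and treating the third argument as a list of task-ID dependencies is an assumption.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class TaskSpecification:
    """Hypothetical stand-in: describes one task to submit to Azure Batch."""
    task_id: str
    task_type: type
    parameters: dict[str, Any]
    depends_on: list[str]  # assumption: the third argument holds task-ID dependencies
    input_files: list[Any] = field(default_factory=list)
    output_files: list[Any] = field(default_factory=list)


class JobManager:
    """Hypothetical base class that collects task specifications for a job."""

    def __init__(self, job_id: str) -> None:
        self.job_id = job_id
        self.tasks: list[TaskSpecification] = []

    def add_workload_task(self, task_type: type, parameters: dict[str, Any],
                          depends_on: list[str]) -> TaskSpecification:
        # Derive a unique, readable task ID from the job ID and a counter.
        spec = TaskSpecification(
            task_id=f"{self.job_id}-task-{len(self.tasks) + 1}",
            task_type=task_type,
            parameters=dict(parameters),
            depends_on=list(depends_on),
        )
        self.tasks.append(spec)
        return spec

    def create_job(self) -> None:
        raise NotImplementedError


class ExampleWorkloadTask:
    """Placeholder for the workload's task class."""


class ExampleWorkloadJobManager(JobManager):
    def create_job(self) -> None:
        """Creates the job."""
        first = self.add_workload_task(
            ExampleWorkloadTask, {"input_example": "hello-world-task-1"}, []
        )
        # A second task that, under the assumption above, waits for the first.
        self.add_workload_task(
            ExampleWorkloadTask, {"input_example": "hello-world-task-2"}, [first.task_id]
        )


manager = ExampleWorkloadJobManager(job_id="job-demo")
manager.create_job()
print([(t.task_id, t.depends_on) for t in manager.tasks])
# [('job-demo-task-1', []), ('job-demo-task-2', ['job-demo-task-1'])]
```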

The JobManager is initialized via a Builder pattern. The Builder is registered in the Workload Class, like so:

```python
class ExampleWorkload(WorkloadBase):
    def __init__(self, batch_client: BatchClient) -> None:
        super().__init__(
            batch_client=batch_client,
            # Here, the JobManagerBuilder is registered:
            job_manager_builder_type=ExampleWorkloadJobManagerBuilder,
        )
```
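Registering a builder *type* lets the workload base class construct the job manager itself, once the job context is known, instead of receiving a ready-made instance. A minimal sketch of that idea follows. `WorkloadBase`, `JobManagerBuilder`, `create_job_manager`, and the `build` method are hypothetical simplifications, not the Batch Manager's actual classes.

```python
from typing import Protocol


class BatchClient:
    """Hypothetical stand-in for the Azure Batch client."""


class JobManager:
    def __init__(self, batch_client: BatchClient, job_id: str) -> None:
        self.batch_client = batch_client
        self.job_id = job_id


class JobManagerBuilder(Protocol):
    """Anything that can build a JobManager from a client and a job ID."""

    def build(self, batch_client: BatchClient, job_id: str) -> JobManager: ...


class ExampleWorkloadJobManager(JobManager):
    pass


class ExampleWorkloadJobManagerBuilder:
    def build(self, batch_client: BatchClient, job_id: str) -> JobManager:
        # A real builder might also read settings or validate inputs here.
        return ExampleWorkloadJobManager(batch_client, job_id)


class WorkloadBase:
    def __init__(self, batch_client: BatchClient,
                 job_manager_builder_type: type[JobManagerBuilder]) -> None:
        self._batch_client = batch_client
        self._job_manager_builder_type = job_manager_builder_type

    def create_job_manager(self, job_id: str) -> JobManager:
        # The builder type is registered up front but instantiated only when needed.
        builder = self._job_manager_builder_type()
        return builder.build(self._batch_client, job_id)


class ExampleWorkload(WorkloadBase):
    def __init__(self, batch_client: BatchClient) -> None:
        super().__init__(
            batch_client=batch_client,
            job_manager_builder_type=ExampleWorkloadJobManagerBuilder,
        )


manager = ExampleWorkload(BatchClient()).create_job_manager("job-42")
print(type(manager).__name__, manager.job_id)  # ExampleWorkloadJobManager job-42
```

The workload only declares *which* builder to use; swapping in a custom job manager means registering a different builder type, with no change to the base class.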

b. TaskSpecification

TaskSpecification classes play a key role in defining the input and output files for tasks within your workload. You can specify where task input files should be downloaded from and where task output files should be uploaded to.

Here's an example of defining input and output files within a TaskSpecification:

```python
# Input file specification: download a blob into the task's working directory.
task.input_files.append(
    InputFileSpecification(
        storage_account_name='<target-storage-account>',
        container_name='job-<job-id>',
        source_blob_path='<source-blob-path>',
        target_file_path='<target-relative-file-path-in-working-dir>',
        identity_reference='<identity-for-download>',
    )
)

# Output file specification: upload a file from the task's working directory.
task.output_files.append(
    OutputFileSpecification(
        source_file_path='<source-relative-file-path-in-working-dir-of-task>',
        storage_account_name='<target-storage-account>',
        container_name='job-<job-id>',
        target_blob_path='<target-relative-blob-path>',
        task_id='<task-id>',
        identity_reference='<identity-for-upload>',
    )
)
```
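Each input and output specification ultimately points at a blob in Azure Storage. The sketch below models the two specification types as dataclasses, with field names copied from the excerpt but the classes themselves hypothetical stand-ins. It derives the blob URL each specification refers to, using the standard public-cloud `https://<account>.blob.core.windows.net/<container>/<blob>` endpoint format. How the Batch Manager actually translates these specifications is not shown in the original. The `identity_reference` field presumably selects the managed identity used for the transfer, which Azure Batch supports for resource and output files.

```python
from dataclasses import dataclass
from urllib.parse import quote


@dataclass(frozen=True)
class InputFileSpecification:  # hypothetical stand-in
    storage_account_name: str
    container_name: str
    source_blob_path: str
    target_file_path: str
    identity_reference: str


@dataclass(frozen=True)
class OutputFileSpecification:  # hypothetical stand-in
    source_file_path: str
    storage_account_name: str
    container_name: str
    target_blob_path: str
    task_id: str
    identity_reference: str


def blob_url(account: str, container: str, blob_path: str) -> str:
    """Build a public-cloud Blob Storage URL, percent-encoding the blob path."""
    return (f"https://{account}.blob.core.windows.net/"
            f"{container}/{quote(blob_path.lstrip('/'))}")


def source_url(spec: InputFileSpecification) -> str:
    # Where the task's input is downloaded from.
    return blob_url(spec.storage_account_name, spec.container_name, spec.source_blob_path)


def destination_url(spec: OutputFileSpecification) -> str:
    # Where the task's output is uploaded to.
    return blob_url(spec.storage_account_name, spec.container_name, spec.target_blob_path)


inp = InputFileSpecification("mystorage", "job-123", "inputs/data set.csv",
                             "data.csv", "uami-download")
print(source_url(inp))
# https://mystorage.blob.core.windows.net/job-123/inputs/data%20set.csv
```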

- Workload Tasks

Tasks that need to be executed within the workload are encapsulated in classes like ExampleWorkloadTask. To initialize WorkloadTasks effectively, a Builder pattern is employed. Both the Builder and the Task need to be registered within the Workload class:

```python
class ExampleWorkload(WorkloadBase):
    def __init__(self, batch_client: BatchClient) -> None:
        ...
        # Here, the WorkloadTaskBuilder is registered:
        self._entry_points_repository.register_entry_point(
            ExampleWorkloadTask, ExampleWorkloadTaskBuilder
        )
        ...
```
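An entry-points repository of this kind maps a task type to the builder that knows how to construct it, so that code running on a compute node can look up the right builder by name. A minimal registry along those lines might look like the sketch below. `EntryPointsRepository`, `resolve`, and the name-based lookup are assumptions for illustration, not the package's confirmed behaviour.

```python
from typing import Any


class ExampleWorkloadTask:
    def __init__(self, input_example: str) -> None:
        self.input_example = input_example

    def run(self) -> str:
        return f"processed {self.input_example}"


class ExampleWorkloadTaskBuilder:
    def build(self, parameters: dict[str, Any]) -> ExampleWorkloadTask:
        # Map raw task parameters onto the task's constructor.
        return ExampleWorkloadTask(input_example=str(parameters["input_example"]))


class EntryPointsRepository:
    """Hypothetical registry: task type name -> builder type."""

    def __init__(self) -> None:
        self._builders: dict[str, type] = {}

    def register_entry_point(self, task_type: type, builder_type: type) -> None:
        name = task_type.__name__
        if name in self._builders:
            raise ValueError(f"entry point {name!r} is already registered")
        self._builders[name] = builder_type

    def resolve(self, task_name: str, parameters: dict[str, Any]) -> Any:
        # On a compute node only the task name and parameters are known,
        # so the builder is looked up by name and used to create the task.
        builder = self._builders[task_name]()
        return builder.build(parameters)


repo = EntryPointsRepository()
repo.register_entry_point(ExampleWorkloadTask, ExampleWorkloadTaskBuilder)
task = repo.resolve("ExampleWorkloadTask", {"input_example": "hello-world-task-1"})
print(task.run())  # processed hello-world-task-1
```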

- FinalizeTask

The Batch Manager includes FinalizeTask, a default task that handles completion steps once the workload execution finishes. In particular, it is responsible for invoking the workload package's callback URL, so a callback URL is required, especially when the default JobManager is used. FinalizeTask is added to the job automatically.
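Invoking a callback URL usually amounts to an HTTP request that reports the job outcome. As a sketch using only the standard library, the function below prepares a JSON `POST`. The payload fields (`job_id`, `status`), the choice of `POST`, and both function names are assumptions, since the original does not describe the callback contract.

```python
import json
import urllib.request


def build_callback_request(callback_url: str, job_id: str,
                           succeeded: bool) -> urllib.request.Request:
    """Prepare (but do not send) a hypothetical job-completion callback."""
    payload = {"job_id": job_id, "status": "succeeded" if succeeded else "failed"}
    return urllib.request.Request(
        callback_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_callback(request: urllib.request.Request, timeout: float = 30.0) -> int:
    """Send the callback and return the HTTP status code (raises on HTTP errors)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


req = build_callback_request("https://example.com/hooks/job-done", "job-123", True)
print(req.get_method(), req.full_url)  # POST https://example.com/hooks/job-done
```

Separating request construction from sending keeps the payload logic testable without network access.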

The Tech Stack

Our solution leveraged a tech stack that includes:

- Python: The primary programming language for building the Azure Batch Manager package.

- Azure Batch: The core service for running HPC workloads.

- Poetry: A Python package management tool used to manage dependencies.

- pytest: A testing framework for Python, crucial for ensuring the reliability of our solution.

Testing in a SaaS Environment

Testing HPC workloads for a Software as a Service (SaaS) product like Azure Batch posed unique challenges. Since Azure Batch operates in the cloud, traditional local testing was impractical. To address this issue, we implemented a dispatcher that enabled:

- Local Testing: Developers could now test their workloads on their local development machines.

- Debugging Capabilities: Debugging became easier with the ability to run and debug workloads locally.

- Integration Testing: We could conduct integration testing in a controlled environment.
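The original doesn't show the dispatcher's interface. One way to get the same effect is a small abstraction with two interchangeable implementations: one that runs tasks in-process, which is easy to step through in a debugger, and one that would submit them to Azure Batch. The sketch below is hypothetical. Modelling tasks as plain functions is an assumption, and the Batch-backed dispatcher is deliberately left as a stub.

```python
import tempfile
from pathlib import Path
from typing import Callable, Protocol

# Hypothetical task model: a function that receives its working directory.
TaskFn = Callable[[Path], None]


class Dispatcher(Protocol):
    def dispatch(self, task_id: str, task: TaskFn) -> None: ...


class LocalDispatcher:
    """Runs each task in-process, in its own temporary working directory."""

    def __init__(self) -> None:
        self.completed: list[str] = []

    def dispatch(self, task_id: str, task: TaskFn) -> None:
        with tempfile.TemporaryDirectory(prefix=f"{task_id}-") as workdir:
            task(Path(workdir))  # exceptions surface directly, which helps debugging
        self.completed.append(task_id)


class BatchDispatcher:
    """Would submit tasks to Azure Batch; intentionally not implemented here."""

    def dispatch(self, task_id: str, task: TaskFn) -> None:
        raise NotImplementedError("submit the task to an Azure Batch job")


def run_workload(dispatcher: Dispatcher, tasks: dict[str, TaskFn]) -> None:
    # The workload code is identical regardless of where tasks run.
    for task_id, task in tasks.items():
        dispatcher.dispatch(task_id, task)


def write_greeting(workdir: Path) -> None:
    (workdir / "out.txt").write_text("hello", encoding="utf-8")


local = LocalDispatcher()
run_workload(local, {"task-1": write_greeting, "task-2": write_greeting})
print(local.completed)  # ['task-1', 'task-2']
```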

Ensuring Continuous Delivery

In the rapidly evolving Azure Batch landscape, it's essential to deliver workloads continuously and keep them aligned with the latest version of the service. To address this, we used the following approaches:

1. Using application packages with a default version

2. Using SemVer and Conventional Commits for versioning

3. Testing workloads on a dev subscription before publishing

4. Requiring approval for deployments

5. Using a user-assigned managed identity for security
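Combining SemVer with Conventional Commits means the next version can be derived mechanically from commit messages: a breaking change bumps the major version, a `feat` bumps the minor version, and a `fix` bumps the patch version. The function below is a simplified sketch of that rule. It ignores pre-release tags and 0.x conventions and only scans for the `BREAKING CHANGE:` token rather than fully parsing footers; in practice a release tool would usually do this.

```python
import re

# Conventional Commits header: type, optional (scope), optional "!", then ": ".
HEADER = re.compile(r"^(?P<type>[a-zA-Z]+)(\([^)]*\))?(?P<breaking>!)?: ")
RANK = {None: 0, "patch": 1, "minor": 2, "major": 3}


def next_version(current: str, commit_messages: list[str]) -> str:
    """Return the next SemVer version implied by the given commit messages."""
    major, minor, patch = (int(part) for part in current.split("."))
    bump = None  # highest bump seen so far: None, "patch", "minor" or "major"

    for message in commit_messages:
        match = HEADER.match(message)
        if not match:
            continue  # not a conventional commit; ignore it
        commit_type = match["type"].lower()  # types are case-insensitive
        if match["breaking"] or "BREAKING CHANGE:" in message or "BREAKING-CHANGE:" in message:
            level = "major"
        elif commit_type == "feat":
            level = "minor"
        elif commit_type == "fix":
            level = "patch"
        else:
            level = None  # e.g. docs, chore, ci: no release needed
        if RANK[level] > RANK[bump]:
            bump = level

    if bump == "major":
        return f"{major + 1}.0.0"
    if bump == "minor":
        return f"{major}.{minor + 1}.0"
    if bump == "patch":
        return f"{major}.{minor}.{patch + 1}"
    return current
```

For example, `next_version("1.4.2", ["fix: handle empty input", "feat(api): add status endpoint"])` yields `"1.5.0"`, because the highest-ranked change wins.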

Verdict: Unlocking Azure Batch's Potential

In summary, Azure Batch provides extensive features for HPC workloads. Its cloud-based nature, scalability, and rich feature set make it a valuable asset for organizations with compute-intensive tasks. The transition to Azure Batch can be challenging, especially when adapting existing workloads.

The Azure Batch Manager package simplifies this transition by providing a high-level abstraction, best practices, and a developer-friendly environment. With this tool, developers can harness the capabilities of Azure Batch without getting bogged down in the details. It's not just about running workloads; it's about optimizing, securing, and integrating them effectively. Azure Batch, when used strategically, can be a game-changer for your organization's HPC needs.
