Provisioning Multiple Egress IP Addresses in AKS

Introduction

When you create a Kubernetes cluster using the Azure Kubernetes Service (AKS), you can choose between three “outbound” types to suit your application’s specific needs. However, by default with each outbound type, you will end up with a single egress “device” and a single egress IP address for your whole cluster.

Problem Statement

In some situations, generally in multi-tenancy clusters, you may need more than one egress IP address for your applications. You might have downstream customers that your applications need to connect to, and those customers want to whitelist your IP address. Or maybe you need to connect to a database in another virtual network or a third party resource like an API, and they want to whitelist the IP address you can connect from. Either way, you may not want to use your “main” cluster egress IP address for these sorts of traffic, and you may wish to route certain traffic out of your cluster through a separate egress IP address.

Currently in Azure, traffic gets routed within or out of an Azure virtual network (Vnet) using subnets and route tables. Route tables are a set of rules that define how network traffic should be routed, and they are applied to one or more subnets. Subnets can only have one route table applied to them. So, if you want a subset of your traffic to route differently to other traffic, you will need to deploy it into a separate Vnet subnet, and use separate route tables to route traffic using different rules.

The same is true for AKS. If we want to route the egress traffic for some of our applications differently to others, the applications must be running in separate subnets. Let’s look at a few ways we can achieve this.

Note that whilst you can add multiple IP addresses to a NAT gateway, you cannot currently define any rules that allow you to select when any particular IP address is used. Your application traffic will egress from a randomly selected IP address, and that IP address may change between outbound requests.

Proposed Solutions

This blog post will walk through a couple of ways you can provision multiple egress IP addresses for your workloads running on AKS today, and will take a quick look at an upcoming AKS feature that will make this simpler in the future.

For each of the solutions below, the general approach is similar, but the implementation details are different due to differences between the networking models with the different CNIs. The general approach is:

- Create additional subnets where you will run the nodes and/or pods for the applications that need different outbound routing.

- Create additional egress resources to route the traffic through.

- Use node taints and pod tolerations to deploy our application pods into the correct node pool or into the correct pod subnet to achieve the desired outbound routing.

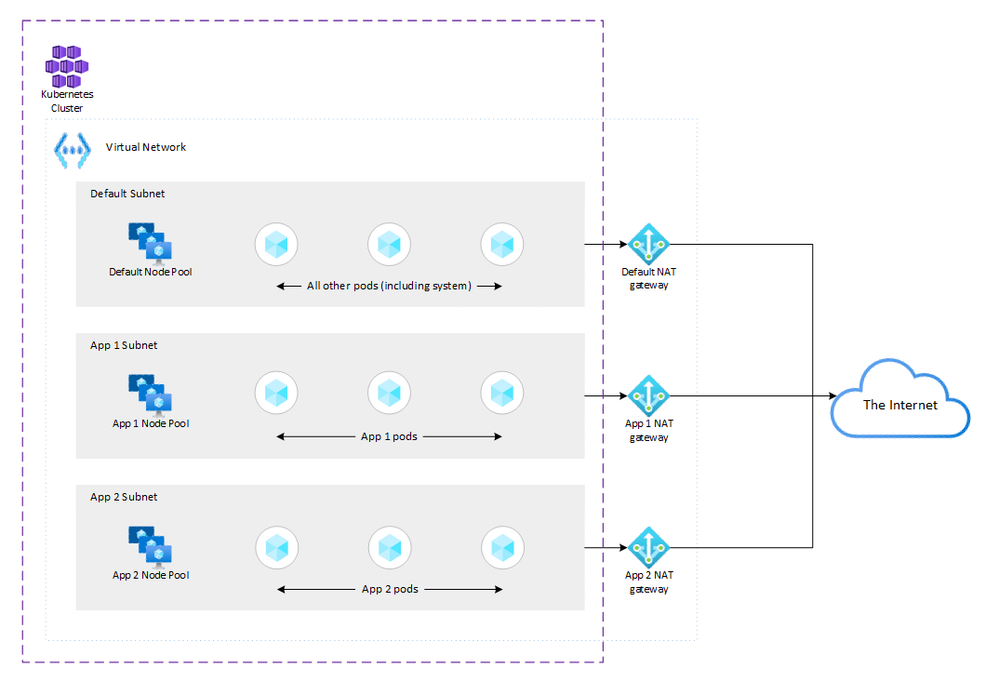

Multiple Outbound IP Addresses with Azure CNI

With the Azure CNI, pods receive an IP address from the same Vnet subnet as the nodes the pods are running on. To create different outbound routes for different apps running in the same cluster, we need to:

- Create at least two node pools running in separate subnets

- Create at least one additional egress resource (NAT gateway)

- Apply taints to the node pools and tolerations to the application pods

This is what our high-level network and cluster architecture will look like:

Let's walk through creating this architecture using the Azure CLI. First we define some environment variables and create a resource group:

rg=rg-azure-cni

location=westeurope

vnet_name=vnet-azure-cni

cluster=azure-cni

vm_size=Standard_DS3_v2

az group create -n $rg -l $location

We can then create the Vnet and the subnets:

az network vnet create -n $vnet_name -g $rg --address-prefixes 10.240.0.0/16 \
  --subnet-name default-subnet --subnet-prefixes 10.240.0.0/22 -l $location -o none

az network vnet subnet create --vnet-name $vnet_name -g $rg --address-prefix 10.240.4.0/22 -n app-1-subnet -o none

az network vnet subnet create --vnet-name $vnet_name -g $rg --address-prefix 10.240.8.0/22 -n app-2-subnet -o none

default_subnet_id=$(az network vnet subnet show --vnet-name $vnet_name -n default-subnet -g $rg --query id -o tsv)

app_1_subnet_id=$(az network vnet subnet show --vnet-name $vnet_name -n app-1-subnet -g $rg --query id -o tsv)

app_2_subnet_id=$(az network vnet subnet show --vnet-name $vnet_name -n app-2-subnet -g $rg --query id -o tsv)

echo default_subnet_id: $default_subnet_id

echo app_1_subnet_id: $app_1_subnet_id

echo app_2_subnet_id: $app_2_subnet_id
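
The subnets above follow a regular pattern: each /22 advances the third octet by 4. As a minimal sketch, assuming you keep that layout inside 10.240.0.0/16, the addressing for further application subnets could be generated as shown here. The helper names `app_subnet_prefix` and `print_subnet_commands` are hypothetical and not part of the Azure CLI, and the script only prints the commands so you can review them before running anything.

```shell
# Hypothetical helper: return the Nth /22 block inside 10.240.0.0/16.
# Index 0 is the default subnet; indexes 1..63 are application subnets.
app_subnet_prefix() {
  index=$1
  if [ "$index" -lt 0 ] || [ "$index" -gt 63 ]; then
    echo "index must be between 0 and 63" >&2
    return 1
  fi
  echo "10.240.$((index * 4)).0/22"
}

# Print (rather than run) the subnet-creation command for apps 1..count.
print_subnet_commands() {
  count=$1
  i=1
  while [ "$i" -le "$count" ]; do
    echo "az network vnet subnet create --vnet-name \$vnet_name -g \$rg --address-prefix $(app_subnet_prefix "$i") -n app-$i-subnet -o none"
    i=$((i + 1))
  done
}

print_subnet_commands 3
```

A /16 holds 64 blocks of size /22, which is why the index is capped at 63.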

With our subnets in place we can allocate some public IPs and create our egress resources:

az network public-ip create -g $rg -n default-pip --sku standard --allocation-method static -l $location -o none

az network public-ip create -g $rg -n app-1-pip --sku standard --allocation-method static -l $location -o none

az network public-ip create -g $rg -n app-2-pip --sku standard --allocation-method static -l $location -o none

az network nat gateway create -n default-natgw -g $rg -l $location --public-ip-address default-pip -o none

az network nat gateway create -n app-1-natgw -g $rg -l $location --public-ip-address app-1-pip -o none

az network nat gateway create -n app-2-natgw -g $rg -l $location --public-ip-address app-2-pip -o none

Note that if you want to use a Standard LB for egress, this must be your default cluster egress option as AKS currently only supports one LB per cluster.

Then we allocate our egress resources to our subnets:

az network vnet subnet update -n default-subnet --vnet-name $vnet_name --nat-gateway default-natgw -g $rg -o none

az network vnet subnet update -n app-1-subnet --vnet-name $vnet_name --nat-gateway app-1-natgw -g $rg -o none

az network vnet subnet update -n app-2-subnet --vnet-name $vnet_name --nat-gateway app-2-natgw -g $rg -o none
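
Because every application repeats the same three steps (public IP, NAT gateway, subnet association), the pattern lends itself to a small wrapper. The sketch below is one possible shape, not a definitive implementation: `run` and `provision_egress` are hypothetical helper names, the `DRY_RUN` switch is an assumption of this example, and it assumes a subnet named `<name>-subnet` already exists. By default it only prints the commands.

```shell
# Echo commands by default; set DRY_RUN=0 to execute them for real.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: create a public IP and NAT gateway for one app
# and attach the gateway to that app's subnet.
provision_egress() {
  name=$1   # e.g. "app-3"; assumes "<name>-subnet" already exists
  run az network public-ip create -g "$rg" -n "$name-pip" --sku standard --allocation-method static -l "$location" -o none
  run az network nat gateway create -n "$name-natgw" -g "$rg" -l "$location" --public-ip-address "$name-pip" -o none
  run az network vnet subnet update -n "$name-subnet" --vnet-name "$vnet_name" --nat-gateway "$name-natgw" -g "$rg" -o none
}

# Same values as the variables defined earlier in this walkthrough.
rg=rg-azure-cni
location=westeurope
vnet_name=vnet-azure-cni

provision_egress app-3
```

Keeping the dry run as the default means a typo in a name shows up in the printed commands rather than as a half-created set of resources.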

Next we can create an AKS cluster plus two additional node pools to make use of the "app-1" and "app-2" egress configurations we've created:

az aks create -g $rg -n $cluster -l $location --vnet-subnet-id $default_subnet_id --nodepool-name default \
  --node-count 1 -s $vm_size --network-plugin azure --network-dataplane=azure -o none

az aks nodepool add --cluster-name $cluster -g $rg -n app1pool --node-count 1 -s $vm_size --mode User \
  --vnet-subnet-id $app_1_subnet_id --node-taints pool=app1pool:NoSchedule -o none

az aks nodepool add --cluster-name $cluster -g $rg -n app2pool --node-count 1 -s $vm_size --mode User \
  --vnet-subnet-id $app_2_subnet_id --node-taints pool=app2pool:NoSchedule -o none

az aks get-credentials -n $cluster -g $rg --overwrite-existing

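In the commands above we added Kubernetes taints to the two application node pools, and every node in a pool automatically inherits that pool's taint. Setting matching tolerations in our application definitions then controls which pods are allowed onto which node pool. Note that a toleration only permits scheduling onto a tainted node; it does not require it. If a pod must never land on another pool, pair its toleration with a node selector or node affinity, for example on the agentpool node label that AKS sets.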

For my sample application, I'm deploying the API component of YADA: Yet Another Demo App, along with a public-facing LoadBalancer (inbound) service that we can use to access the YADA app. I'm using this app because it provides functionality that will let us easily check the egress IP address for the pod, as you'll see later. To create your service manifests, you can copy the sample from the GitHub repository, and add the tolerations as shown below.

For my sample application, my tolerations are defined thus:

# yada-default.yaml
...
tolerations:
- key: "pool"
  operator: "Equal"
  value: "default"
  effect: "NoSchedule"
...

# yada-app-1.yaml
...
tolerations:
- key: "pool"
  operator: "Equal"
  value: "app1pool"
  effect: "NoSchedule"
...

# yada-app-2.yaml
...
tolerations:
- key: "pool"
  operator: "Equal"
  value: "app2pool"
  effect: "NoSchedule"
...
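
A toleration lets a pod onto a tainted node but does not by itself keep the pod off other nodes. As a hedged sketch, the pod spec fragment for each application could be generated with a matching node selector as well. The helper name `render_scheduling` is hypothetical, the indentation assumes the fragment is pasted under a Deployment's `spec.template.spec`, and the selector assumes the `kubernetes.azure.com/agentpool` label that AKS applies to nodes.

```shell
# Hypothetical helper: print the scheduling part of a pod spec for one pool.
# The nodeSelector pins the pod to the pool; the toleration lets it past the taint.
render_scheduling() {
  pool=$1
  cat <<EOF
      nodeSelector:
        kubernetes.azure.com/agentpool: "$pool"
      tolerations:
      - key: "pool"
        operator: "Equal"
        value: "$pool"
        effect: "NoSchedule"
EOF
}

render_scheduling app1pool
```

Generating the fragment from one function keeps the taint value and the selector value in step, which is easy to get wrong when editing several manifests by hand.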

We can now deploy our applications:

kubectl apply -f yada-default.yaml

kubectl apply -f yada-app-1.yaml

kubectl apply -f yada-app-2.yaml

If we view the pods using kubectl get pods -o wide we can see that the yada-default pod is running on the aks-default node pool, that the yada-app-1 pod is running on the aks-app1pool node pool, and so on:

$ kubectl get pods -o wide

NAME READY STATUS IP NODE

yada-default-695f868d87-5l4wk 1/1 Running 10.240.0.30 aks-default-35677847-vmss000000

yada-app-1-74b4dd6ddf-px6vr 1/1 Running 10.240.4.12 aks-app1pool-11042528-vmss000000

yada-app-2-5779bff44b-8vkw9 1/1 Running 10.240.8.6 aks-app2pool-28398382-vmss000000
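
If you want to check placement in a script rather than by eye, the pool name can be read back out of the node name. This is only a sketch: `pool_from_node` and `check_placement` are hypothetical helpers, and they assume node names follow the `aks-<pool>-<suffix>-vmss<instance>` pattern seen in the output above, with no hyphen in the pool name.

```shell
# Hypothetical helper: extract the node pool name from an AKS node name.
pool_from_node() {
  name=${1#aks-}        # drop the "aks-" prefix
  echo "${name%%-*}"    # keep everything before the next "-"
}

# Report whether a pod landed on the pool we expected.
check_placement() {
  pod=$1
  node=$2
  expected_pool=$3
  actual_pool=$(pool_from_node "$node")
  if [ "$actual_pool" = "$expected_pool" ]; then
    echo "$pod: on $actual_pool as expected"
  else
    echo "$pod: on $actual_pool, expected $expected_pool"
    return 1
  fi
}

# Sample values copied from the kubectl output above.
check_placement yada-default aks-default-35677847-vmss000000 default
check_placement yada-app-1 aks-app1pool-11042528-vmss000000 app1pool
check_placement yada-app-2 aks-app2pool-28398382-vmss000000 app2pool
```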

Next, we can view the inbound IP address for each yada service, and then use cURL to query this endpoint to determine the outbound IP address for each yada service we have deployed:

echo "default svc IP=$(kubectl get svc yada-default -o jsonpath='{.status.loadBalancer.ingress[0].ip}'), \
egress IP=$(curl -s http://$(kubectl get svc yada-default \
-o jsonpath='{.status.loadBalancer.ingress[0].ip}'):8080/api/ip | jq -r '.my_public_ip')"

echo "app1 svc IP=$(kubectl get svc yada-app-1 -o jsonpath='{.status.loadBalancer.ingress[0].ip}'), \
egress IP=$(curl -s http://$(kubectl get svc yada-app-1 \
-o jsonpath='{.status.loadBalancer.ingress[0].ip}'):8080/api/ip | jq -r '.my_public_ip')"

echo "app2 svc IP=$(kubectl get svc yada-app-2 -o jsonpath='{.status.loadBalancer.ingress[0].ip}'), \
egress IP=$(curl -s http://$(kubectl get svc yada-app-2 \
-o jsonpath='{.status.loadBalancer.ingress[0].ip}'):8080/api/ip | jq -r '.my_public_ip')"

You will see some output like this, demonstrating that we have three unique inbound IP addresses (as expected with LoadBalancer services), as well as three unique outbound IP addresses:

default svc IP=20.238.232.216, egress IP=51.144.113.217

app1 svc IP=20.238.232.220, egress IP=52.136.231.154

app2 svc IP=20.238.232.251, egress IP=51.144.78.64

Finally, we can compare these outbound IP addresses with the PIPs assigned to our NAT gateways:

$ az network public-ip list -g $rg --query "[].{Name:name, Address:ipAddress}" -o table

Name Address

----------- --------------

app-1-pip 52.136.231.154

app-2-pip 51.144.78.64

default-pip 51.144.113.217

As you can see, the IP addresses match! We can repeat this pattern as necessary to provide additional segregated egress IP addresses for our applications.
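
The comparison can also be scripted so that a mismatch fails loudly, for instance in a deployment pipeline. The sketch below uses a hypothetical `check_egress` helper and the sample addresses from this walkthrough; in a live cluster you would pass in the value returned by the application's /api/ip endpoint and the address reported by `az network public-ip show` instead.

```shell
# Hypothetical helper: compare the egress IP an app reports with the
# public IP assigned to its NAT gateway.
check_egress() {
  name=$1
  observed=$2
  expected=$3
  if [ "$observed" = "$expected" ]; then
    echo "$name: OK ($observed)"
  else
    echo "$name: MISMATCH (observed $observed, expected $expected)"
    return 1
  fi
}

# Sample values copied from the output shown above.
check_egress default 51.144.113.217 51.144.113.217
check_egress app1 52.136.231.154 52.136.231.154
check_egress app2 51.144.78.64 51.144.78.64
```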

If you wish to use Azure CNI with Overlay, you can deploy this solution exactly as described above, with a single change: when you create the cluster, you must add the --network-plugin-mode overlay parameter to the az aks create command. With Azure CNI Overlay, pods are assigned IP addresses from a private CIDR range that is logically separate to the Vnet hosting the AKS cluster: this is the "overlay" network.

Alternative Solution with Dynamic Pod IP Assignment

In the example above, we configured Azure CNI to deploy pods into the same subnet as the nodes they run on. With Azure CNI Overlay, the node pools are still deployed into separate subnets, but pods receive IP addresses from the per-node private CIDR range, and outbound traffic is routed according to the underlying node's subnet.

There is a third configuration for Azure CNI which allows pods to be assigned IP addresses from subnets in your Vnet that are separate to the node pool subnet. This configuration is called Azure CNI with Dynamic Pod IP Assignment, and the Azure CNI powered by Cilium has a similar operating mode, Assign IP addresses from a virtual network.

This is what our high-level network and cluster architecture will look like when using Azure CNI with Dynamic Pod IP Assignment:

With Azure CNI with Dynamic Pod IP Assignment, pods are assigned IP addresses from a subnet that is separate from the subnet hosting the AKS cluster, but still within the same Vnet. This is very similar to the Cilium model of assigning pod IP addresses from a virtual network. For this option, the solution would be very similar to the one proposed above, but with a few important changes:

- You would only need one node pool subnet, one "default" subnet for pod IP addresses, and one additional pod subnet for each application that needs its own egress IP.

- You would still need to create multiple node pools, as this is how we control where application pods are deployed and hence which egress route they take.

- When creating your AKS cluster and node pools, all node pools would share the same node pool subnet, and you would need to specify the --pod-subnet-id option along with the relevant subnet ID to configure the subnet that pods will receive their IP addresses from.

- For Azure CNI Powered by Cilium clusters, you would also need to specify the --network-dataplane=cilium option on the az aks create command.
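
To make the changes listed above concrete, here is a sketch of how the node pool command might look with a dedicated pod subnet. It prints the command rather than running it. The helper name `print_nodepool_command` and the variable names `node_subnet_id` and `app_1_pod_subnet_id` are assumptions of this example; you would populate them with `az network vnet subnet show` in the same way as the subnet IDs earlier in this post.

```shell
# Hypothetical helper: print an "az aks nodepool add" command in which all
# pools share one node subnet but each pool gets its own pod subnet.
print_nodepool_command() {
  pool=$1            # node pool name, e.g. app1pool
  pod_subnet_var=$2  # name of the shell variable holding the pod subnet ID
  echo "az aks nodepool add --cluster-name \$cluster -g \$rg -n ${pool} --node-count 1 -s \$vm_size --mode User --vnet-subnet-id \$node_subnet_id --pod-subnet-id \$${pod_subnet_var} --node-taints pool=${pool}:NoSchedule -o none"
}

print_nodepool_command app1pool app_1_pod_subnet_id
print_nodepool_command app2pool app_2_pod_subnet_id
```

In this variant the NAT gateways would be attached to the pod subnets, since that is where the pod traffic originates.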

Alternative Solution with Kubenet

Finally, with Kubenet CNI, pods are assigned IP addresses from an IP address space that is logically separate from the subnet hosting the AKS cluster, and all pod traffic is NAT'd through the node's Vnet IP address. Again, the overall solution is similar but with a few key differences:

- As pod traffic is NAT'd through the node and appears to originate from the node's Vnet IP address, each application that requires a separate egress IP address will need its own node pool with its own node pool subnet. You do not need to specify the --pod-subnet-id option when creating these node pools.

- You would need to specify the --network-plugin kubenet option on the az aks create command.
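
The variants covered in this post differ mainly in the networking flags passed to az aks create. As a rough summary, and a sketch rather than an exhaustive reference, the mapping could be captured as follows. The `network_flags` helper, its variant names, and the `pod_subnet_id` placeholder are made up for this example. Note that Kubenet is selected with --network-plugin kubenet, while --network-dataplane chooses between the azure and cilium dataplanes.

```shell
# Hypothetical helper: map a variant name (invented for this sketch) to the
# networking flags for "az aks create".
network_flags() {
  case "$1" in
    azure-cni)         echo "--network-plugin azure" ;;
    overlay)           echo "--network-plugin azure --network-plugin-mode overlay" ;;
    pod-subnet)        echo "--network-plugin azure --pod-subnet-id \$pod_subnet_id" ;;
    cilium-overlay)    echo "--network-plugin azure --network-plugin-mode overlay --network-dataplane cilium" ;;
    cilium-pod-subnet) echo "--network-plugin azure --network-dataplane cilium --pod-subnet-id \$pod_subnet_id" ;;
    kubenet)           echo "--network-plugin kubenet" ;;
    *)                 echo "unknown variant: $1" >&2; return 1 ;;
  esac
}

for variant in azure-cni overlay pod-subnet cilium-overlay cilium-pod-subnet kubenet; do
  printf '%-18s %s\n' "$variant" "$(network_flags "$variant")"
done
```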

A Note on Third-Party Solutions

When I was researching ways to solve this problem I came across the concept of Egress Gateways, which Calico, Cilium, and Istio all support. The implementation for all three is very similar; you deploy one or more pods in your cluster to act as Egress Gateways, and then use pod metadata to route traffic out through the appropriate Egress Gateway. The idea behind these is that any traffic egressing from the Egress Gateway pod will appear as the IP address of the underlying node, but that isn't the case for most CNI configurations in AKS.

Because of their similarities, the Egress Gateways all suffer from the same problem. To route the traffic from the node running the Egress Gateway differently to the rest of the cluster egress traffic, the node needs to be running in a separate subnet, much the same as the solutions above. However, you wouldn't want to host your Egress Gateway for your mission-critical applications on a single node or in a single availability zone, and so configuring resilient Egress Gateways would mean multiple pods on multiple nodes across multiple zones. The subnets would also have to be much smaller than in the solution proposed above (to avoid IP address wastage).

Ultimately then, it seems to make more sense to use AKS-native constructs like node pools, and Azure-native features like multi-zone subnets and Vnet routing to create a resilient architecture that allows for different applications to egress with different public IP addresses.

Limitations

The architectures discussed in this article are not general-purpose architectures as they will introduce additional complexity and management overheads, and could lead to additional cost. They should therefore only be considered if you have an explicit requirement to provide different egress IP addresses to different applications within the same AKS cluster.

As you can see, while it is possible to scale this pattern to add more unique egress IP addresses, there are some limitations that are worth calling out:

- Each time you deploy a new subnet and node pool for an application you reduce the overall cost efficiency of your cluster, because the taints on the nodes (rightly) prevent other applications from using those nodes. This could drive the cost of running your AKS cluster up even without using any additional resources.

- Similarly, NAT gateway has an hourly charge as well as a charge for data volume processed, so it will likely cost more to route your egress traffic through two or more NAT gateways compared to one, even if the volume of traffic does not increase.

- Adding a larger number of smaller subnets (for multiple node and/or pod subnets) will likely decrease your IP address usage efficiency compared to a small number of large subnets (e.g. one node pool subnet and one pod subnet). This may not be a problem if you have a large address space, but if your Vnet IP address ranges are small or limited, this architecture will need careful planning to avoid IP address exhaustion.

- If you have a large number of applications that need unique or separate egress IP addresses, you are going to end up managing a large number of subnets and node pools, which may increase your cluster management overheads, depending on how automated that management is. If this is the case, you may find it easier to run applications across different clusters, or even consider an alternative hosting model for those apps.
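
To put a number on the IP address efficiency point above: Azure reserves five addresses in every subnet, so splitting the same address space into more subnets leaves fewer usable addresses. The sketch below compares one /20 with the four /22 subnets that cover the same range; `usable_ips` is a hypothetical helper written for this example.

```shell
# Hypothetical helper: usable addresses in an Azure subnet of the given
# prefix length (total addresses minus the five that Azure reserves).
usable_ips() {
  prefix_len=$1
  echo $(( (1 << (32 - prefix_len)) - 5 ))
}

one_large=$(usable_ips 20)
four_small=$(( 4 * $(usable_ips 22) ))

echo "one /20:  $one_large usable addresses"
echo "four /22: $four_small usable addresses"
```

The reserved addresses are only part of the picture. Free addresses in one application's subnet cannot be used by another application, so in practice the stranded capacity inside each small subnet tends to matter more than the five reserved addresses.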

Future Improvements

There is an AKS-native Static Egress Gateway for Pods feature on the AKS roadmap, and this will allow customers to set different egress IPs for different workloads via Pod annotations. This will likely make all of these separate subnets, node pools and NAT gateways unnecessary once the feature launches. We are expecting this feature to launch in the first half of 2024.

Conclusion

If you need to route some parts of your application via different egress IP addresses than your cluster-default IP address, the solutions described above are your best options today. For a small number of applications/egress IP combinations, the management overhead of multiple node pools and subnets will be low, and the cost implications due to lower resource efficiency and additional network resources will also be small.

If your AKS clusters are running in Vnets with large CIDR ranges, you should use the Azure CNI and make use of the main solution outlined in this blog post. If you have a smaller CIDR range in your Vnet where the number of IP addresses is limited, you may find it preferable to use the Azure CNI Overlay or the Cilium equivalent, and follow the modification outlined in the first solution. You could also use the Azure CNI with Dynamic Pod IP Assignment (or the Cilium equivalent) and apply the modifications for the second solution. If you prefer using Kubenet as your CNI, you should follow the modifications outlined in the third solution.

If this is something you think you will need to do in the future, you should follow the Static Egress Gateway for Pods feature request, and chat to your Microsoft Azure CSA to be notified when it's available. Migrating from the solutions proposed in this article to Static Egress Gateways should simply involve redeploying your application pods with the relevant pod annotations and without the node pool tolerations, and then removing the additional node pools and subnets once all pods are running in the main node pool again.
