Security Best Practices for GenAI Applications (OpenAI) in Azure

Introduction

GenAI applications are those that use large language models (LLMs) to generate natural language texts or perform natural language understanding tasks. LLMs are powerful tools that can enable various scenarios such as content creation, summarization, translation, question answering, and conversational agents. However, LLMs also pose significant security challenges that need to be addressed by developers and administrators of GenAI applications. These challenges include:

- Protecting the confidentiality and integrity of the data used to train and query the LLMs

- Ensuring the availability and reliability of the LLMs and their services

- Preventing the misuse or abuse of the LLMs by malicious actors or unintended users

- Monitoring and auditing the LLMs' outputs and behaviors for quality, accuracy, and compliance

- Managing the ethical and social implications of the LLMs' outputs and impacts

This document provides an overview of security best practices for GenAI applications in Azure, focusing on Azure OpenAI but also describing security related to other LLMs that can be used inside Azure. Azure OpenAI is a cloud-based service that gives users access to OpenAI's GPT family of large language models. Other language services that can be used inside Azure include Azure AI services (formerly Cognitive Services), such as Text Analytics, Translator, and QnA Maker, as well as custom LLMs that can be built and deployed using Azure Machine Learning.

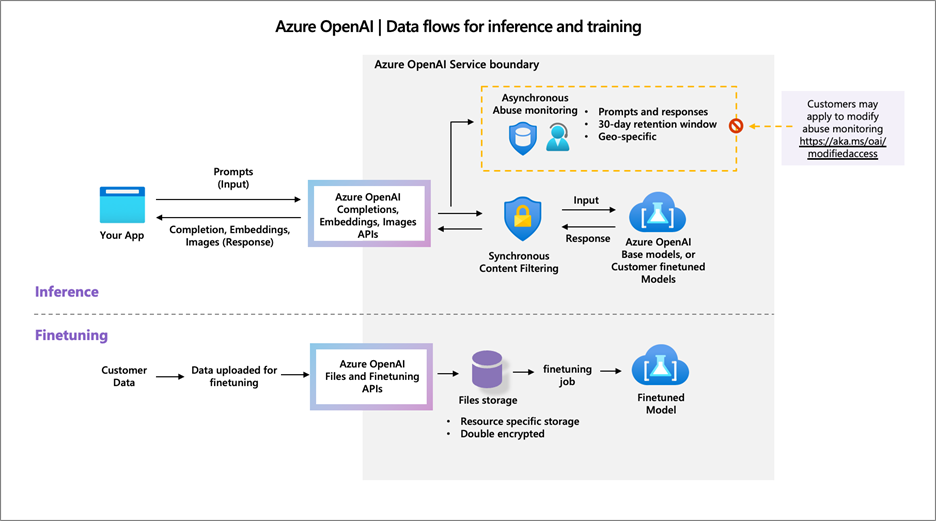

How does the Azure OpenAI Service process data?

Azure OpenAI’s approach to data storage and processing is built on strong, secure foundations. User data is guarded against unauthorized access, ensuring privacy and security in line with Microsoft's data protection standards.

As for the usage of data, the principles are simple. The input you provide, along with the AI responses, is your data and remains yours. Microsoft does not use this data to improve its own AI offerings. It is kept private unless you choose to use it to train customized AI models.

Regarding the types of processed data, Azure OpenAI processes your input - prompts and the ensuing AI-generated answers. It also processes data submitted for the purpose of training tailored AI models.

The service's content generation capacity comes with a commitment to safety. Input is carefully processed to produce responses, and content filters are in place to prevent the generation of problematic content.

Customizing models is a service feature that’s handled with care. You can train Azure OpenAI models with your data, knowing that it remains secure and for your use only.

To prevent abuse, Azure OpenAI comes equipped with robust content filtering and monitoring systems. This is to make sure that the generation of content complies with guidelines and that nothing harmful slips through.

In cases where human review is necessary, Microsoft ensures high privacy standards are maintained. If there are particular privacy concerns, you can request a review to be handled differently.

Azure OpenAI aims to offer advanced AI capabilities while keeping user data safe and private.

Privacy and Security

In the context of Azure OpenAI services, the discussion around data "privacy" versus "security" often touches upon the distinct yet overlapping nature of these concepts. Let’s explore what each entails and how the principle of shared responsibility plays a role.

Privacy in Azure OpenAI refers to how user data is handled in terms of access and usage. It ensures that data such as inputs, interactions, and outputs are used in a way that respects customer confidentiality. Azure OpenAI's privacy protocol dictates that Microsoft does not view or use this data for its own purposes, unless explicitly permitted for services like model fine-tuning. Privacy measures are about control over and the ethical handling of data.

Security, on the other hand, is about protecting data from unauthorized access, breaches, and other forms of compromise. Azure OpenAI employs a range of security measures, such as encryption in transit and at rest, to safeguard data against threats. Microsoft’s infrastructure provides a secure environment designed to shield your data from security risks.

The principle of shared responsibility highlights that security in the cloud is a two-way street. While Microsoft ensures the security of the Azure OpenAI services, it is the customer's responsibility to secure their end of the interaction.

For instance, Microsoft takes care of:

- Protecting the Azure infrastructure

- Making sure that the Azure OpenAI services are secure by default

- Providing identity and access management capabilities

However, customers are responsible for:

- Setting appropriate access controls and permissions for their use of Azure OpenAI

- Protecting their Azure credentials and managing access to their Azure subscription

- Ensuring that the security measures around their applications are adequate, including input validation, secure application logic, and proper handling of Azure OpenAI outputs

This shared responsibility ensures that while Microsoft provides a foundational level of security, customers can and must tailor additional security controls based on the specific needs and the sensitivity of their data. In simple terms, think of Microsoft as providing a secure apartment while customers need to lock their doors and decide who gets keys.

Customers should carefully consider their security posture around the applications and data they manage in order to complement Microsoft's robust security capabilities. This could involve implementing additional network security measures, ensuring secure coding practices, and regularly auditing access and usage to align with their particular security requirements.

By understanding both the privacy and security provisions of Azure OpenAI and actively engaging in the shared responsibility model, customers can harness the power of AI while preserving both the integrity and confidentiality of their data.

Best Practices of Security for GenAI Applications in Azure

Following a layered approach based on the Zero Trust framework provides a robust security strategy for large language model (LLM) applications, such as those built on Azure OpenAI.

Zero Trust is an overarching strategy that should be applied across all security domains; it assumes that a threat could exist on the inside as well as from the outside. Therefore, every access request, regardless of where it originates, must be fully authenticated, authorized, and encrypted before access is granted.
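As a rough illustration of the rule stated above, the following minimal sketch denies any request that is not authenticated, authorized, and encrypted, no matter where it comes from. The `AccessRequest` type and its field names are hypothetical and exist only for this example; in a real deployment these checks are performed by the identity provider, RBAC, and transport layer rather than by a single function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical request descriptor; field names are illustrative."""
    authenticated: bool          # caller identity was verified
    authorized: bool             # caller holds permission for this action
    encrypted: bool              # request arrived over an encrypted channel
    from_internal_network: bool  # recorded, but never used to grant access


def is_allowed(request: AccessRequest) -> bool:
    """Grant access only when all three checks pass.

    The network origin is deliberately ignored: an internal caller gets
    no shortcut past authentication, authorization, or encryption.
    """
    return request.authenticated and request.authorized and request.encrypted
```

Note that `from_internal_network` never appears in the decision, which is the point of the sketch: location on the network is not treated as evidence of trust.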

By integrating these practices into a cohesive strategy, Azure customers can create a secure environment for their GenAI applications. Moreover, as threats evolve, it is essential to continually assess and adapt these security measures to maintain a robust defense against potential cyber threats.

Here's how you might implement security best practices across different domains:

Data Security

Data security refers to the protection of the data used to train and query the LLMs, as well as the data generated by the LLMs. Data security involves ensuring the confidentiality, integrity, and availability of the data, as well as complying with the data privacy and regulatory requirements. Some of the best practices of data security for GenAI applications in Azure are:

- Data Classification and Sensitivity: Classify and label your data based on its sensitivity level. Identify and protect sensitive data such as personally identifiable information (PII), financial data, or proprietary information with appropriate encryption and access controls.

- To implement classification and labeling in a governance-consistent manner, Microsoft Purview is a valuable tool. It enables the automatic categorization and governance of data across diverse platforms. With Purview, users can scan their data sources, classify and label assets, apply sensitivity tags, and monitor how data is utilized, ensuring that the foundations on which Generative AI operate are secure and resilient.

- There is currently no direct integration between Purview and AI Search. However, if your data resides in other sources, you can use Purview to classify it before generating embeddings and storing them in AI Search.

- Encryption at Rest and in Transit: When using Retrieval-Augmented Generation (RAG) with Azure OpenAI, secure your data both at rest and in transit. For Azure services, data at rest is encrypted using built-in Azure Storage Service Encryption and Azure Disk Encryption, and you have the option to manage your own encryption keys in Azure Key Vault. Data in transit is protected by Transport Layer Security (TLS) and enforced use of secure communication protocols. Make sure that every service where data is stored or retrieved meets your encryption requirements. Azure services such as Azure AI Search (formerly Azure Cognitive Search) come with built-in security controls, and you can use Customer-Managed Keys for additional control. If external services interface with Azure, verify that they adopt equivalent encryption standards so that security holds across your data's lifecycle.

- Role-Based Access Controls (RBAC): Use RBAC to manage access to data stored in Azure services. Assign appropriate permissions to users and restrict access based on their roles and responsibilities. Regularly review and update access controls to align with changing needs.

- Data Masking and Redaction: Implement data masking or redaction techniques to hide sensitive data or replace it with obfuscated values in non-production environments or when sharing data for testing or troubleshooting purposes.

- Data Backup and Disaster Recovery: Regularly backup and replicate critical data to ensure data availability and recoverability in case of data loss or system failures. Leverage Azure's backup and disaster recovery services to protect your data.

- Threat Detection and Monitoring: Use Microsoft Defender for Cloud (formerly Azure Defender) to detect and respond to security threats, and set up monitoring and alerting mechanisms to identify suspicious activities or breaches. Use Microsoft Sentinel (formerly Azure Sentinel) for advanced threat detection and response.

- Data Retention and Disposal: Establish data retention and disposal policies to adhere to compliance regulations. Implement secure deletion methods for data that is no longer required and maintain an audit trail of data retention and disposal activities.

- Data Privacy and Compliance: Ensure compliance with relevant data protection regulations, such as GDPR or HIPAA, by implementing privacy controls and obtaining necessary consents or permissions for data processing activities.

- Regular Security Assessments: Conduct periodic security assessments, vulnerability scans, and penetration tests to identify and address any security weaknesses or vulnerabilities in your LLM application's data security measures.

- Employee Awareness and Training: Educate your employees about data security best practices, the importance of handling data securely, and potential risks associated with data breaches. Encourage them to follow data security protocols diligently.
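To make the data masking and redaction item more concrete, here is a minimal sketch that replaces two kinds of sensitive values with placeholders before text is shared for testing or troubleshooting. The two regular expressions are illustrative assumptions, not a complete PII detector; real workloads would normally rely on a dedicated classification or PII detection service.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a bracketed label."""
    for label, pattern in _PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com about card 4111 1111 1111 1111."))
```

Redacting before data leaves production keeps the original values out of non-production logs and test fixtures, at the cost of occasionally masking harmless strings that happen to match a pattern.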

Data Segregation for Different Environments

Data segregation, or data environment separation, is a security practice that keeps different types of data apart from each other to reduce risk. It is especially important when dealing with sensitive data across production and non-production environments.

In the case of Azure OpenAI and services like Azure AI Search (formerly known as Azure Cognitive Search), it's crucial to adopt a strategy that isolates sensitive data to avoid accidental exposure. Here's how you might go about it:

- Separation by Environment: Keep production data separate from development and testing data. Only use real sensitive data in production and utilize anonymized or synthetic data in development and test environments.

- Search Service Configuration: In Azure AI Search, you can tailor your search queries with text filters to shield sensitive information. Ensure that search configurations are set up to prevent exposing sensitive data unnecessarily.

- Index Segregation: If you have varying levels of data sensitivity, consider creating separate indexes for each level. For instance, you could have one index for general data and another for sensitive data, each governed by different access protocols.

- Sensitive Data in Separate Instances: Take segregation a step further by placing sensitive datasets in different instances of the service. Each instance can be controlled with its own specific set of RBAC policies.
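The filter and index segregation items above could be sketched as follows. This example assumes two hypothetical index names and a hypothetical filterable collection field called `group_ids` on each document; it only builds the index name and an OData filter string of the kind Azure AI Search accepts for security trimming, and does not call the service.

```python
import re
from typing import Sequence

# Hypothetical index names, one per sensitivity level.
INDEX_BY_SENSITIVITY = {
    "general": "docs-general",
    "sensitive": "docs-sensitive",
}


def select_index(sensitivity: str) -> str:
    """Return the index for a sensitivity level, rejecting unknown levels."""
    if sensitivity not in INDEX_BY_SENSITIVITY:
        raise ValueError(f"unknown sensitivity level: {sensitivity!r}")
    return INDEX_BY_SENSITIVITY[sensitivity]


def build_group_filter(group_ids: Sequence[str], field: str = "group_ids") -> str:
    """Build an OData filter that keeps only documents tagged with one of
    the caller's groups. `field` is an assumed field name."""
    if not group_ids:
        # Refuse to query rather than fall back to an unfiltered search.
        raise ValueError("caller has no groups")
    for gid in group_ids:
        # Allow only simple identifiers so values cannot break out of the quotes.
        if not re.fullmatch(r"[A-Za-z0-9_-]+", gid):
            raise ValueError(f"invalid group id: {gid!r}")
    joined = ",".join(group_ids)
    return f"{field}/any(g: search.in(g, '{joined}'))"
```

For a caller in the groups `hr` and `finance`, `build_group_filter(["hr", "finance"])` yields `group_ids/any(g: search.in(g, 'hr,finance'))`, which would be passed as the filter of a query against the index chosen by `select_index`.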

Treating Data in Large Language Models (LLM)

The data used in models, like embeddings and vectors, should be treated with the same rigor as other data types. Even though this data may not be immediately readable, it still represents information that could be sensitive. Here’s how you’d handle it:

- Sensitive Data Handling: Recognize that embeddings and vectors generated from sensitive information are themselves sensitive. This data should be afforded the same protective measures as the source material.

- Strict RBAC Application: Apply Role-Based Access Controls strictly on any service that stores or processes embeddings and vectors. Access should only be granted based on necessity for the given role.

- Scoped Access Policies: Using RBAC, ensure that policies are scoped appropriately to the role's necessity, which could mean read-only access to certain data, or no access at all to particularly sensitive datasets.

By treating the data used and generated by GenAI applications with the same concern for privacy and security as traditional data storage, you'll better protect against potential breaches. A segregated approach reduces the risk profile by limiting exposure only to those who need access for their specific role, minimizing the potential 'blast radius' in the event of a data leak. Regularly reviewing and auditing these practices is also essential to maintaining a secure data environment.
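The scoped access idea can be illustrated with a small deny-by-default lookup. The role names, dataset names, and policy table below are hypothetical; in Azure the equivalent rules would be expressed as RBAC role assignments on the services that store embeddings, not as application code.

```python
from enum import Enum


class Permission(Enum):
    READ = "read"
    WRITE = "write"


# Hypothetical role -> {dataset: permissions}. Anything absent is denied.
ROLE_POLICIES = {
    "analyst": {
        "general-embeddings": {Permission.READ},
    },
    "ingestion-service": {
        "general-embeddings": {Permission.READ, Permission.WRITE},
        "sensitive-embeddings": {Permission.WRITE},
    },
}


def can_access(role: str, dataset: str, permission: Permission) -> bool:
    """Return True only if the role was explicitly granted the permission."""
    return permission in ROLE_POLICIES.get(role, {}).get(dataset, set())
```

Here the `analyst` role gets read-only access to general embeddings and no access at all to sensitive ones, which mirrors the "read-only or no access" scoping described above.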

Network Security

Network security refers to the protection of the network infrastructure and communication channels used by the LLMs and their services. Network security involves ensuring the isolation, segmentation, and encryption of the network traffic, as well as preventing and detecting network attacks. Some of the best practices of network security for GenAI applications in Azure are:

- Use Secure Communication Protocols: Utilize secure communication protocols such as HTTPS/TLS to ensure that data transmitted between the LLM application and clients or external services is encrypted and protected from interception.

- Implement Firewall and Intrusion Detection Systems (IDS): Set up firewalls and IDS to monitor network traffic and identify any unauthorized access attempts or suspicious activities targeting the LLM application.

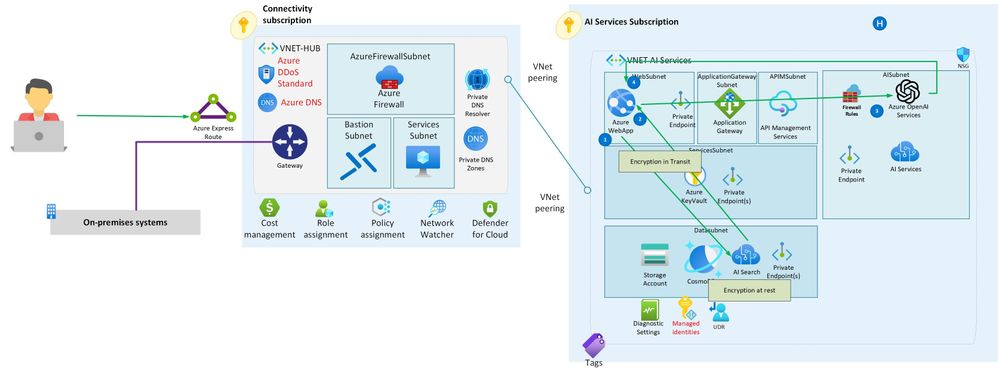

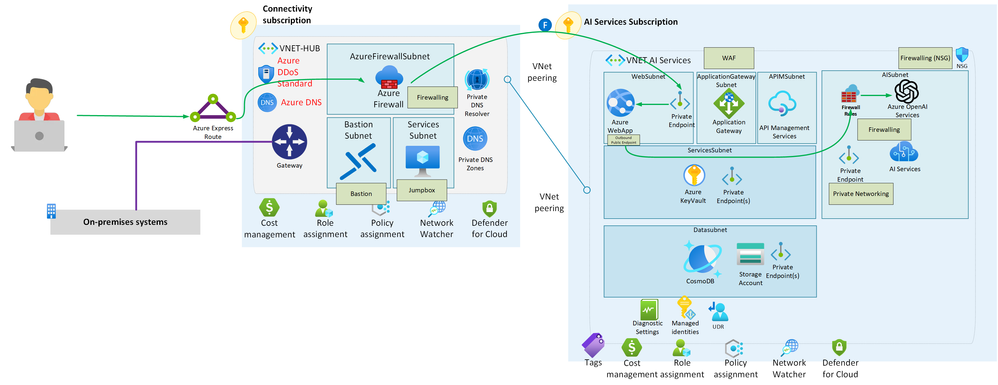

- To secure AI services within Azure, implement a comprehensive network security strategy using private endpoints, firewall rules, Network Security Groups (NSGs), and a well-architected hub-and-spoke network topology. Start by configuring private endpoints for AI services; this ensures that the services are only accessible within your virtual network. Complement this with strict firewall rules to control inbound and outbound traffic. Use NSGs to define fine-grained traffic control at the subnet level or for specific resources. At the landing zone level, adopt a hub-and-spoke topology where the hub serves as the central point of connectivity and hosts Azure Firewall or a third-party Network Virtual Appliance (NVA). User Defined Routes (UDRs) on the spoke subnets send traffic through the hub for centralized inspection and governance. This layered approach provides a robust security posture for protecting AI workloads in Azure.

- Control Network Access: Implement network segmentation and access controls to restrict access to the LLM application only to authorized users and systems. This helps prevent unauthorized access and potential lateral movement within the network.

- Secure APIs and Endpoints: Ensure that APIs and endpoints used by the LLM application are properly secured with authentication and authorization mechanisms, such as API keys or OAuth, to prevent unauthorized access.

- Implement Strong Authentication: Enforce strong authentication mechanisms, such as multi-factor authentication, to prevent unauthorized access to the LLM application and associated network resources.

- Regularly Update and Patch Network Infrastructure: Keep network devices, routers, switches, and firewalls up to date with the latest firmware and security patches to address any vulnerabilities.

- Use Network Monitoring and Logging: Implement network monitoring tools to detect and analyze network traffic for any suspicious or malicious activities. Enable logging to capture network events and facilitate forensic analysis in case of security incidents.

- Perform Regular Security Audits and Penetration Testing: Conduct security audits and penetration testing to identify and address any network security weaknesses or vulnerabilities in the LLM application's network infrastructure.

- Implement Strong Password Policies: Enforce strong password policies for network devices, servers, and user accounts associated with the LLM application to prevent unauthorized access due to weak passwords.

- Educate Users and Employees: Provide training and awareness programs to educate users and employees about network security best practices, such as the importance of strong passwords, avoiding suspicious links or downloads, and reporting any security incidents or concerns.
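On the client side, the secure communication item can be sketched with the Python standard library alone. The example below assumes a policy of HTTPS only and TLS 1.2 as the minimum version; the endpoint URL in the usage note is a placeholder, not a real resource.

```python
import ssl
from urllib.parse import urlparse


def require_https(url: str) -> str:
    """Return the URL unchanged if it uses HTTPS, otherwise raise."""
    parsed = urlparse(url)
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError(f"refusing non-HTTPS endpoint: {url!r}")
    return url


def make_tls_context() -> ssl.SSLContext:
    """Create a client TLS context that verifies certificates and hostnames
    and rejects protocol versions older than TLS 1.2 (assumed policy)."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

A caller would validate its configured endpoint with something like `require_https("https://example.openai.azure.com/")` at startup and pass `make_tls_context()` to its HTTP client, so that a misconfigured plain-HTTP URL fails fast instead of sending prompts unencrypted.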

Access and Identity Security

Access security refers to the protection of the access and identity management of the LLMs and their services. Access security involves ensuring the authentication, authorization, and auditing of the users and applications that interact with the LLMs and their services, as well as managing the roles and permissions of the LLMs and their services. Some of the best practices of access security for GenAI applications in Azure are:

- Use Managed Identities: Leverage Azure Managed Identities to authenticate and authorize your LLM application without the need to manage keys or credentials manually. It provides a secure and convenient identity solution.

- Implement Role-Based Access Control (RBAC): Utilize RBAC to grant appropriate permissions to users, groups, or services accessing your LLM application. Follow the principle of least privilege, granting only the necessary permissions for each entity.

- Secure Key Management: Store and manage keys securely using Azure Key Vault. Avoid hard-coding or embedding sensitive keys within your LLM application's code. Instead, retrieve them securely from Azure Key Vault using managed identities.

- Implement Key Rotation and Expiration: Regularly rotate and expire keys stored in Azure Key Vault to minimize the risk of unauthorized access. Follow the recommended key rotation practices specific to your use case and compliance requirements.

- Encrypt Data at Rest and in Transit: Utilize Azure services and encryption mechanisms to protect data at rest and in transit. Encrypt sensitive data stored within your LLM application and use secure communication protocols like HTTPS/TLS for data transmission.

- Monitor and Detect Anomalous Activities: Set up monitoring and logging mechanisms to detect anomalous activities or potential security threats related to your LLM application. Use Microsoft Defender for Cloud (formerly Azure Security Center) or Microsoft Sentinel (formerly Azure Sentinel) to monitor for suspicious behavior.

- Enable Multi-Factor Authentication (MFA): Implement MFA to add an extra layer of security for user authentication. Enforce MFA for privileged accounts or sensitive operations related to your LLM application.

- Regularly Review and Update Access Controls: Conduct periodic access control reviews to ensure that permissions and roles assigned to users and services are up to date. Remove unnecessary access rights promptly to minimize security risks.

- Implement Secure Coding Practices: Follow secure coding practices to prevent common vulnerabilities such as injection attacks, cross-site scripting (XSS), or security misconfigurations. Use input validation and parameterized queries to mitigate security risks.
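For the key rotation item, the underlying check is simply whether a key has outlived the allowed age. The sketch below assumes a 90-day policy, which is an illustrative value rather than a recommendation from this article, and it does not call Azure Key Vault; the creation timestamp would come from wherever your key metadata is stored.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_KEY_AGE = timedelta(days=90)  # assumed policy; set per compliance needs


def needs_rotation(
    created_on: datetime,
    now: Optional[datetime] = None,
    max_age: timedelta = MAX_KEY_AGE,
) -> bool:
    """Return True when the key is at least `max_age` old.

    Both datetimes are expected to be timezone-aware.
    """
    current = now or datetime.now(timezone.utc)
    return current - created_on >= max_age
```

A scheduled job could run this check over all keys and raise an alert, or trigger rotation, for any key that returns `True`.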

Application Security

Application security refers to the protection of the application logic and functionality of the LLMs and their services. Application security involves ensuring the quality, accuracy, and reliability of the LLMs' outputs and behaviors, as well as preventing and mitigating the misuse or abuse of the LLMs by malicious actors or unintended users. Some of the best practices of application security for GenAI applications in Azure are:

- Implement Input Validation: Validate and sanitize user inputs to prevent injection attacks and ensure the integrity of data being processed by the LLM.

- Apply Access Controls: Implement strong authentication and authorization mechanisms to control access to LLM-enabled features and ensure that only authorized users can interact with the application.

- Secure Model Training Data: Protect the training data used to train the LLM from unauthorized access and ensure compliance with data protection regulations. Use techniques like data anonymization or differential privacy to further enhance data security.

- Monitor and Audit LLM Activity: Implement logging and monitoring mechanisms to track LLM activity, detect suspicious behavior, and facilitate forensic analysis in case of security incidents.

- Regularly Update and Patch LLM Components: Keep the LLM framework, libraries, and dependencies up to date with the latest security patches and updates to mitigate potential vulnerabilities.

- Secure Integration Points: Carefully validate and secure any external data sources or APIs used by the LLM to prevent unauthorized access or injection of malicious code.

- Implement Least Privilege Principle: Apply the principle of least privilege to limit the capabilities and access rights of the LLM and associated components. Only grant necessary permissions to prevent potential misuse.

- Encrypt Sensitive Data: Use encryption mechanisms to protect sensitive data, both at rest and in transit, to ensure confidentiality and integrity.

- Conduct Regular Security Testing: Perform regular security assessments, including penetration testing and vulnerability scanning, to identify and address any security weaknesses or vulnerabilities in the application.
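The input validation item above might look like the following in its simplest form. The length limit is an assumed value, and the message layout follows the common chat format of role and content pairs. This sketch strips control characters and keeps user text in its own message, which reduces some risks but does not by itself prevent prompt injection.

```python
import unicodedata

MAX_PROMPT_CHARS = 4000  # assumed limit; tune to your application


def sanitize_user_input(raw: str, max_chars: int = MAX_PROMPT_CHARS) -> str:
    """Strip control and format characters, then enforce basic limits."""
    if not isinstance(raw, str):
        raise TypeError("input must be a string")
    # Keep newlines and tabs; drop every other character whose Unicode
    # category starts with "C" (control, format, unassigned, and so on).
    cleaned = "".join(
        ch for ch in raw
        if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    ).strip()
    if not cleaned:
        raise ValueError("input is empty after sanitization")
    if len(cleaned) > max_chars:
        raise ValueError("input exceeds the maximum allowed length")
    return cleaned


def build_messages(system_prompt: str, user_input: str) -> list:
    """Keep system instructions and user text in separate messages so that
    user text is never concatenated into the system prompt."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": sanitize_user_input(user_input)},
    ]
```

Rejecting oversized input outright, instead of truncating it, makes failures visible to the caller and avoids silently changing what the user asked.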

Remember that security is an ongoing process, and it is crucial to continually assess and improve the security posture of GenAI applications to protect against evolving threats.

Governance Security

Governance security refers to the protection of the governance and oversight of the LLMs and their services. It involves managing the ethical and social implications of the LLMs' outputs and impacts, as well as complying with legal and regulatory requirements.

Some of the best practices of governance security for GenAI applications in Azure are:

- Use Azure OpenAI or other LLMs' terms of service, policies, and guidelines to understand and adhere to the rules and responsibilities of using the LLMs and their services

- Use Azure OpenAI or other LLMs' documentation, tutorials, and examples to learn and follow the best practices and recommendations of using the LLMs and their services

- Use Azure OpenAI or other LLMs' feedback and support channels to report and resolve any issues or concerns related to the LLMs and their services

- Use Azure Responsible AI or other frameworks and tools to assess and mitigate the potential risks and harms of the LLMs' outputs and impacts, such as fairness, transparency, accountability, or privacy

- Use Azure Compliance or other resources to understand and comply with the legal and regulatory requirements and standards related to the LLMs and their services, such as GDPR, HIPAA, or ISO

- Use Azure Policy or Microsoft Defender for Cloud (formerly Azure Security Center) to define and enforce governance security policies and compliance standards

- Use Azure Monitor or Microsoft Sentinel (formerly Azure Sentinel) to monitor and alert on governance security events and incidents
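Monitoring and alerting depend on the application emitting events that are worth monitoring. The sketch below produces one structured audit record per LLM call and stores a hash and length of the prompt instead of the prompt itself, so that audit logs do not become a second copy of sensitive data. The field names are hypothetical, and how the record reaches your monitoring service is left out.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def audit_event(
    user_id: str,
    action: str,
    prompt: str,
    timestamp: Optional[datetime] = None,
) -> str:
    """Return a JSON audit record that identifies the prompt by its
    SHA-256 hash and length without including the prompt text."""
    ts = timestamp or datetime.now(timezone.utc)
    record = {
        "timestamp": ts.isoformat(),
        "user_id": user_id,
        "action": action,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }
    return json.dumps(record, sort_keys=True)
```

Whether to log hashes or full prompts is a governance decision: hashes limit exposure but make content review impossible, so some teams keep full prompts in a separate, more tightly controlled store.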

Conclusion

GenAI applications are powerful and versatile tools that can enable various scenarios and use cases in natural language processing and generation. However, they also pose significant security challenges that developers and administrators need to address. This document provided an overview of security best practices for GenAI applications in Azure, focusing on Azure OpenAI but also describing security related to other LLMs that can be used inside Azure. By following these best practices across the data, network, access, application, and governance domains, developers and administrators can strengthen the security of their GenAI applications.
