Case Study: Integrating Scheduled Events into a Decentralized Database Deployment

This case study focuses on Contoso, an Azure customer that uses Cassandra DB along with scheduled events to power their highly available internal and customer-facing applications. It gives a brief background on the customer, scheduled events, and Cassandra DB before discussing how Contoso uses scheduled events to keep their applications running. The case study finishes by covering important topics to keep in mind while integrating scheduled events into your own application. The hope is that developers and administrators can learn from Contoso’s experience and successfully use scheduled events in their own application deployments.

Background on the Customer:

Contoso is a multi-national organization with thousands of employees around the world as well as a large number of partners who must integrate seamlessly with their operations. To assist their internal developer teams, the Contoso Cassandra team provides an internal-only PaaS service to other developers in the organization, built on Azure’s IaaS offerings along with their own datacenters.

The key requirements that Contoso’s Cassandra DB team must meet are:

- Highly available

- Performant – sub-second latencies on all read requests

- Highly scalable during peak times

- Highly reliable

The Contoso team must also be able to create new instances on request from any internal team and scale out quickly to meet their needs. To meet all these requirements the Contoso Cassandra team needs an automated way to react to any changes in the underlying cloud environment and they use scheduled events to provide this information on Azure.

Azure Scheduled Events

Scheduled events provide advance notifications before Azure undertakes any impactful operation on your VM. This includes regularly scheduled maintenance, such as host updates, and unexpected operations due to degraded host hardware. By listening for scheduled events, Azure IaaS customers can provide high availability services to their customers by preparing their workload before any impactful operation by the platform.

In general, an impactful operation (also referred to as an impact) is any operation that makes a VM unavailable for normal operations. Common examples of impacts are freezes to the VM during a live migration, a reboot of the VM for a guest OS update, or the termination of a spot VM. The type and estimated duration of any upcoming impact are included in the scheduled events payload to help guide your workload’s response to it.

Scheduled events can be retrieved by making a call to the Instance Metadata Service from within any VM hosted by Azure. The event will contain information about any upcoming or already started operations pending on your VM. The important fields for this case study are listed in the table below.

| Property | Description |
| --- | --- |
| DocumentIncarnation | Integer that increases when the events array changes. Documents with the same incarnation contain the same event information, and the incarnation will be incremented when an event changes. |
| EventId | Globally unique identifier for this event. |
| Resources | List of resources this event affects. |
| EventStatus | Status of this event. See below for a detailed explanation of the states. |
| NotBefore | Time after which this event can start without approval. The event can start prior to this time if all the impacted VMs approve it first. Will be blank if the event has already started. |
| EventSource | Initiator of the event, either the platform (Azure) or a user (typically a subscription admin). |

Note that depending on your application, you may need to track additional information before reacting to a scheduled event. However, to maintain their performance and availability guarantees, Contoso chose to react to all events the same way, regardless of the event type or impact duration.
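To make the fields above concrete, here is a minimal Python sketch that parses a scheduled events document into typed records. The payload is illustrative only: the EventId value and VM name are made up, and the `EventType` and `DurationInSeconds` keys are assumptions based on the "type and estimated duration" mentioned earlier rather than fields listed in the table. The `ScheduledEvent` class and `parse_document` function are hypothetical names.

```python
import json
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ScheduledEvent:
    event_id: str
    event_type: str        # assumed key: EventType
    event_status: str      # "Scheduled" or "Started"
    event_source: str      # "Platform" or "User"
    not_before: str        # blank once the event has started
    resources: List[str]
    duration_seconds: int  # assumed key: DurationInSeconds; -1 if absent


def parse_document(raw: str) -> Tuple[int, List[ScheduledEvent]]:
    """Return (DocumentIncarnation, events) from a JSON payload."""
    doc = json.loads(raw)
    events = [
        ScheduledEvent(
            event_id=e["EventId"],
            event_type=e.get("EventType", ""),
            event_status=e["EventStatus"],
            event_source=e.get("EventSource", ""),
            not_before=e.get("NotBefore", ""),
            resources=list(e.get("Resources", [])),
            duration_seconds=int(e.get("DurationInSeconds", -1)),
        )
        for e in doc.get("Events", [])
    ]
    return doc["DocumentIncarnation"], events


# Illustrative payload; identifier and VM name are invented for this sketch.
SAMPLE = """
{
  "DocumentIncarnation": 7,
  "Events": [
    {
      "EventId": "602d9444-d2cd-49c7-8624-8643e7171297",
      "EventType": "Freeze",
      "EventStatus": "Scheduled",
      "EventSource": "Platform",
      "NotBefore": "Mon, 19 Sep 2016 18:29:47 GMT",
      "Resources": ["cassandra-node-0"],
      "DurationInSeconds": 7
    }
  ]
}
"""

incarnation, events = parse_document(SAMPLE)
print(incarnation, events[0].event_status, events[0].resources)
```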

Lifecycle of a Maintenance Event on Azure

Maintenance events follow a similar life cycle, with scheduled events being generated to notify your application of changes, as shown in the diagram below. When Azure schedules a maintenance event on a node, the most common first step is to create a scheduled event with EventStatus=”Scheduled”. This initial event will include all the information listed above and give the app time to prepare for the coming impact.

Once you’ve taken the necessary actions to prepare your workload for the event, you should approve the event using the scheduled events API. Otherwise, the event will be automatically approved when the NotBefore time is reached. In the case of a VM on shared infrastructure, the system will then wait for all other tenants on the same hardware to also approve the job or time out. Once approvals are gathered from all impacted VMs or the NotBefore time is reached, Azure generates a new scheduled event payload with EventStatus=“Started” and triggers the start of the maintenance event.

While the scheduled event is in the started state the following operations happen:

- The maintenance operation is completed by Azure

- The VM experiences the impact with the type and duration advertised in the scheduled event.

- The host is monitored for stability following the scheduled event.

Once Azure has determined the maintenance operation was successful it will remove the scheduled event from the events array, marking the event as complete. At this time, it is safe to restore the application workload to your machine.

As scheduled events are often used for applications with high availability requirements, there are a few exceptional cases that should be considered:

- Once a scheduled event is completed and removed from the array, there will be no further impact without a new event, which brings another “Scheduled” state and warning period.

- Azure continually monitors maintenance operations across the entire fleet and in rare circumstances determines that a maintenance operation is no longer needed or is too high risk to apply. In that case the scheduled event will go directly from “Scheduled” to being removed from the events array.

- In the case of hardware failure, Azure will bypass the “Scheduled” state and immediately move to the “Started” state without waiting for a timeout or approval. This is to reduce recovery time when VMs are unable to respond.

- In rare cases, while the event is still in the “Started” state, there may be a second impact identical to what was advertised in the scheduled event. This can occur when the host fails a stability check and is rolled back to the previous version.
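The lifecycle and exceptional cases above can be sketched as a comparison between two consecutive polls. This is a minimal Python illustration, not Azure's or Contoso's implementation: `diff_events` and its labels are hypothetical, and each snapshot is assumed to be a plain mapping of EventId to EventStatus.

```python
from typing import Dict, List, Tuple


def diff_events(previous: Dict[str, str], current: Dict[str, str]) -> List[Tuple[str, str]]:
    """Compare {EventId: EventStatus} snapshots and label each change."""
    changes = []
    for event_id, status in current.items():
        old = previous.get(event_id)
        if old is None and status == "Scheduled":
            # Normal first step: a warning period begins.
            changes.append((event_id, "scheduled"))
        elif old is None and status == "Started":
            # Hardware failure path: the "Scheduled" state was bypassed.
            changes.append((event_id, "started_without_warning"))
        elif old == "Scheduled" and status == "Started":
            changes.append((event_id, "started"))
    for event_id, old in previous.items():
        if event_id not in current:
            # Removal after "Started" marks completion; removal while still
            # "Scheduled" means the operation was dropped before it began.
            label = "completed" if old == "Started" else "canceled"
            changes.append((event_id, label))
    return changes


print(diff_events({"e1": "Scheduled"}, {"e1": "Started"}))
```

A second impact during the “Started” state does not show up as a transition here, so a workload should stay in its prepared state until the event is actually removed.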

As part of Azure’s availability guarantee, VMs in different fault domains will not be impacted by routine maintenance operations at the same time. However, they may have operations serialized one after another. VMs in one fault domain can receive scheduled events with EventStatus=“Scheduled” shortly after another fault domain’s maintenance is completed. Regardless of what architecture you choose, always keep checking for new events pending against your VMs.

While the exact timings of events vary, the following is a rough guideline for a typical maintenance operation:

- EventStatus: “Scheduled” → Approval Timeout: 15 minutes

- Impact Duration: 7 seconds

- EventStatus: “Started” → Completed (event removed from Events array): 10 minutes

Cassandra and Contoso’s High-Level Architecture:

Cassandra is a distributed NoSQL database that provides reliable, fault-tolerant, and easily scalable storage for a wide range of data types. It operates using a masterless architecture where every node runs on an independent machine and can speak to any other node via a gossip protocol. This allows the database to appear as a single whole to external callers and to tolerate the loss of a node in the network.

This fault tolerance is achieved by replicating data across multiple nodes in the network and waiting for a quorum of nodes to confirm any operation before reporting the result back to a caller. Contoso typically uses a replication factor of 3 which means that at least 2 nodes must confirm every operation.
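The quorum arithmetic behind that statement can be worked through in a few lines of Python. The function names are hypothetical; the formula is the usual majority rule, floor(RF / 2) + 1.

```python
def quorum(replication_factor: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replication_factor // 2 + 1


def tolerated_replica_losses(replication_factor: int) -> int:
    """Replicas that can be unavailable while a quorum is still reachable."""
    return replication_factor - quorum(replication_factor)


for rf in (3, 5):
    print(f"RF={rf}: quorum={quorum(rf)}, tolerated losses={tolerated_replica_losses(rf)}")
# RF=3: quorum=2, tolerated losses=1
# RF=5: quorum=3, tolerated losses=2
```

With a replication factor of 3, one replica can be disabled for maintenance while quorum reads and writes still succeed, which is the headroom Contoso relies on.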

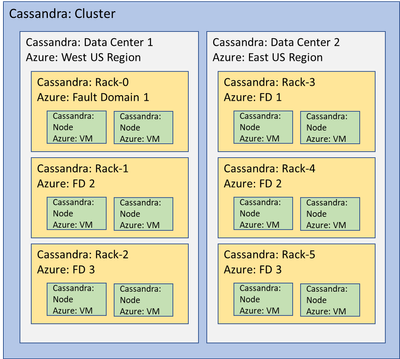

Contoso has about 300 different Cassandra clusters they use across their different apps. Each cluster is replicated across 3 regions and contains approximately 150 nodes. Contoso uses a replication factor of at least 3, with some business-critical clusters running at higher replication factors for additional protection. This enables them to disable nodes during a maintenance event while still maintaining high availability for the cluster. Contoso selected Azure regions that provide 3 fault domains to match the requirements from their Cassandra deployment.

Integrating with Scheduled Events:

Since each node in a cluster can operate independently, Contoso has chosen to have each node responsible for monitoring scheduled events for only itself. On each node running in a Cassandra cluster, there is a separate monitoring script that starts running at boot time and is responsible for reporting upcoming impacts, preparing the node for them, and restoring the node afterwards. It constantly polls the Instance Metadata Service (IMDS) for new events and confirms events with the service once the node has prepared for the update.

The monitoring script polls the scheduled events endpoint by making an HTTP GET request to a specific IMDS endpoint and waiting for a new event to arrive. Contoso polls once per second to ensure their nodes have ample time to respond to upcoming events.
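A polling loop along these lines might look like the following Python sketch. It is not Contoso's script: the function names are hypothetical, and the `api-version` value is an assumption that should be checked against the current scheduled events documentation. The `Metadata: true` header is required by IMDS, and the address is only reachable from inside an Azure VM.

```python
import json
import time
import urllib.request

# Link-local IMDS address; the api-version shown here is an assumption.
ENDPOINT = "http://169.254.169.254/metadata/scheduledevents?api-version=2020-07-01"


def build_poll_request(endpoint: str = ENDPOINT) -> urllib.request.Request:
    # IMDS rejects requests that do not carry the "Metadata: true" header.
    return urllib.request.Request(endpoint, headers={"Metadata": "true"}, method="GET")


def fetch_document(timeout_seconds: float = 5.0) -> dict:
    """Fetch and decode the current scheduled events document."""
    with urllib.request.urlopen(build_poll_request(), timeout=timeout_seconds) as response:
        return json.loads(response.read().decode("utf-8"))


def poll_forever(handle_document, interval_seconds: float = 1.0, fetch=fetch_document) -> None:
    """Call handle_document with each fetched document, once per interval."""
    while True:
        try:
            handle_document(fetch())
        except OSError as error:  # urllib's URLError is a subclass of OSError
            print(f"poll failed, retrying: {error}")
        time.sleep(interval_seconds)
```

Passing `fetch` as a parameter keeps the loop testable off Azure, since a stub can stand in for the real endpoint.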

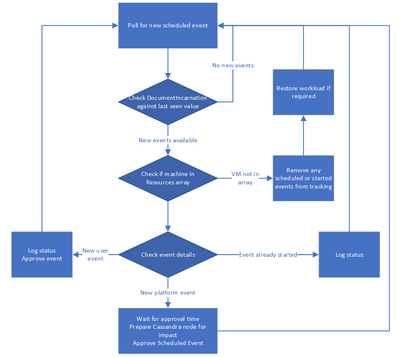

The script checks if a new event is available by comparing the DocumentIncarnation of the most recent document with the last incarnation it processed. The DocumentIncarnation is guaranteed to change when there is new information in the Events array. When a new event is available, the script also checks the Resources array to see if the node will be impacted by the event. If this node is not impacted, then it stores the latest DocumentIncarnation and moves on to the next event.

In the case of a new event, the monitoring script checks the Resources array in the scheduled event to see if the VM is impacted. All impacted resources will be listed by name in this array. If this VM is impacted by the event there are 4 different possible operations:

- If the event has already started it logs the event and continues polling for the completion of the event.

- If it is a user event, it immediately approves the event. This is to prevent delays in recovery in the case an admin is trying to restart a node due to an unexpected app failure.

- If it is a platform event that is not impactful for this workload, such as a 0 second freeze event, it immediately approves the event, logs the action, and continues polling.

- If it is a platform event that is impactful for this workload, it prepares the node for the operation. This will be explored in more detail below.
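The incarnation check and the four cases above can be sketched as a small decision function in Python. The action labels and function names are hypothetical, and the test for a "non-impactful" event (a `Freeze` with `DurationInSeconds` of 0) is only one assumed way to express the 0 second freeze example; a real workload would define its own threshold.

```python
from typing import List, Optional, Tuple


def choose_action(event: dict, vm_name: str) -> str:
    """Map one scheduled event to the action the monitor should take."""
    if vm_name not in event.get("Resources", []):
        return "ignore"
    if event.get("EventStatus") == "Started":
        return "log_and_wait_for_completion"
    if event.get("EventSource") == "User":
        return "approve_immediately"
    # Assumed definition of "not impactful": a freeze advertised as 0 seconds.
    if event.get("EventType") == "Freeze" and event.get("DurationInSeconds") == 0:
        return "approve_and_log"
    return "prepare_node"


def handle_document(
    document: dict, last_incarnation: Optional[int], vm_name: str
) -> Tuple[Optional[int], List[Tuple[str, str]]]:
    """Return (incarnation to remember, [(EventId, action), ...])."""
    incarnation = document["DocumentIncarnation"]
    if incarnation == last_incarnation:
        return last_incarnation, []  # nothing changed since the last poll
    actions = [
        (event["EventId"], choose_action(event, vm_name))
        for event in document.get("Events", [])
    ]
    return incarnation, actions
```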

Any new information from scheduled events or actions taken by the monitoring script are logged to an external logging provider. This information is used by the operations team in the case of any unexpected incidents and to correlate scheduled events across multiple VMs in the same VMSS placement group. For correlation, Contoso uses the EventId which is provided with each scheduled event. The DocumentIncarnation is unique to each VM in a scale set and thus cannot be used for cross-VM correlation.

The diagram below outlines the flow of the monitoring script after it notices a new scheduled event. It is important to note the script is flexible enough to handle any of the state transitions outlined in the state diagram above. While the ordering of Scheduled → Started → Removed (Completed) is the most likely, to operate at high availability it is important that Contoso can handle all possible transitions.

Preparing for an Event

When the monitoring script detects that an event is scheduled on the VM it must disable the Cassandra node running on that VM and then prepare itself for the impact. Contoso has chosen not to react immediately to any scheduled event. Instead, it sets a timer for 30 seconds before the NotBefore time to start disabling the Cassandra node. It waits this time to reduce the time the node is disabled for in the case that other VMs on the same Azure host do not approve the job immediately. Once the timer expires the script disables read/write on the Cassandra node and then disables the gossip, removing it from the cluster. Other nodes in the cluster recognize that the node is disabled when it does not respond to their gossip.
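The timer described above reduces to simple date arithmetic. The Python sketch below assumes NotBefore arrives as an RFC 1123 style string such as `Mon, 19 Sep 2016 18:29:47 GMT`; that format and the function name are assumptions, not something the case study specifies.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime

# Contoso starts disabling the node 30 seconds before NotBefore.
LEAD_TIME = timedelta(seconds=30)


def seconds_until_disable(not_before: str, now: datetime) -> float:
    """Seconds to wait before disabling the node; 0.0 if that moment has passed."""
    if not not_before:
        return 0.0  # NotBefore is blank once the event has already started
    deadline = parsedate_to_datetime(not_before)
    if deadline.tzinfo is None:
        # Treat a timestamp without zone information as UTC.
        deadline = deadline.replace(tzinfo=timezone.utc)
    return max(0.0, (deadline - LEAD_TIME - now).total_seconds())


now = datetime(2016, 9, 19, 18, 14, 47, tzinfo=timezone.utc)
print(seconds_until_disable("Mon, 19 Sep 2016 18:29:47 GMT", now))  # 870.0
```

Waiting until shortly before NotBefore keeps the node serving traffic for most of the approval window, at the cost of leaving only 30 seconds for the disable steps to finish.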

Contoso then saves the state of the monitoring script to disk in case of a restart. It also logs the pending interruption into their monitoring solution, and finally acknowledges the event through the scheduled events endpoint. At a minimum the state includes the last seen DocumentIncarnation on that VM, the last event type expected, and whether that event was expected to impact the VM. Having this information saved to disk lets the script immediately check for new events after a restart using the DocumentIncarnation. It also checks the last expected event type to see if the restart was an expected part of the maintenance or an unexpected failure. Finally, Contoso has configured the system to immediately restart the Cassandra service after a node reboot; however, some workloads may require the monitoring script to intervene and start them after a VM restart. In those cases, the monitoring script would also have to confirm the workload is running after the restart.
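One way to persist that state is a small JSON file written atomically, so a reboot in the middle of a write cannot leave a partial file behind. The sketch below is an assumption about how this could be done in Python, not Contoso's code: the key names, function names, and the set of event types treated as restarts are all hypothetical.

```python
import json
import os
import tempfile
from typing import Optional

# Assumed event types that imply a VM restart.
RESTART_EVENT_TYPES = {"Reboot", "Redeploy"}


def save_state(path: str, state: dict) -> None:
    """Write state to a temp file, flush it to disk, then rename it into place."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".state-", suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump(state, handle)
            handle.flush()
            os.fsync(handle.fileno())
        os.replace(tmp_path, path)  # atomic rename on the same filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_state(path: str) -> Optional[dict]:
    """Return the saved state, or None on a first boot with no state file."""
    try:
        with open(path, "r", encoding="utf-8") as handle:
            return json.load(handle)
    except FileNotFoundError:
        return None


def restart_was_expected(state: Optional[dict]) -> bool:
    """True if the saved state says a restart-type impact was pending."""
    if not state:
        return False
    return bool(state.get("expected_impact")) and state.get("last_event_type") in RESTART_EVENT_TYPES
```

On boot, the script would call `load_state`, use `restart_was_expected` to decide whether to log an unexpected failure, and resume polling from the stored incarnation.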

At this point, the monitoring script goes back to monitoring for new scheduled events. In the case of a freeze event, it will eventually see the event removed from the events array and know that the impact is completed. In the case of a restart, the script is set to start on boot, so it will have to load its saved state from disk before continuing monitoring. Once the event has been removed, Azure guarantees there will be no further impact without another scheduled event being created, so the monitoring script can reenable the Cassandra node.

The Cassandra node is reenabled for reading and writing and it rejoins the cluster via the gossip protocol. As the node rejoins the cluster it immediately begins syncing its state with the rest of the cluster, including any writes that happened while it was disabled. Contoso has sized their cluster and set up fault tolerance to ensure that while the node is catching up there are no latency spikes for callers.

From their experience we recommend testing for any changes in latency while a node is catching up on missed transactions. It is also important to consider the impact if multiple nodes need to catch up after maintenance as well. In rare cases, all nodes in the same fault domain could be impacted by an operation simultaneously so choose the correct number of fault domains and VM sizes such that your cluster can avoid data loss while recovering after the impact.

Design Notes

While working with Contoso on the design of their scheduled events monitor, there were a few key design considerations that had to be addressed. Making the right architecture choices early on can prevent headaches later on in the development or deployment process.

Cluster Fault Tolerance and Azure Fault Domains

Azure fault domains are sets of hardware with a possible single point of failure, such as a power supply or router. Although uncommon, any high availability application built on Azure IaaS should assume an entire fault domain might be impacted by a failure. Maintenance operations, both software and hardware, are also done within fault domains. Multiple machines in the same fault domain might be impacted by a maintenance event at the same time, and thus receive simultaneous scheduled events. So, any application offering high availability must consider fault domains when creating VMs and configuring replication in Cassandra.

Having data replicated within the same fault domain can result in it not being available if a maintenance event impacts an entire fault domain at once. For local quorum with Contoso’s Cassandra configuration, they are using 3 fault domains, each assigned as a different Cassandra rack. They are mapping Cassandra’s concept of data centers to Azure’s region pairs to increase their resiliency by replicating data across multiple locations.
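The reasoning above can be checked with a short Python calculation: given the rack (fault domain) that holds each replica of a piece of data, does a quorum survive the loss of any single rack? The function name and rack labels are hypothetical.

```python
from collections import Counter
from typing import Iterable


def survives_single_rack_loss(replica_racks: Iterable[str]) -> bool:
    """True if a quorum of replicas remains after losing the fullest rack."""
    racks = list(replica_racks)
    if not racks:
        return False
    quorum = len(racks) // 2 + 1
    worst_case_loss = max(Counter(racks).values())
    return len(racks) - worst_case_loss >= quorum


# One replica per fault domain: losing any one rack leaves 2 of 3 replicas.
print(survives_single_rack_loss(["fd0", "fd1", "fd2"]))  # True
# Two replicas share a fault domain: losing fd0 leaves only 1 of 3.
print(survives_single_rack_loss(["fd0", "fd0", "fd1"]))  # False
```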

Distributed vs Centralized Control

Contoso’s Cassandra implementation relies on decentralized control where each node is responsible for adding and removing itself from the network. Assigning control to each node works well for Contoso since Cassandra is a fault-tolerant application and the loss of a single node does not result in a failure of the cluster. However, this can cause issues for applications where the loss of a single node requires immediate reaction by the rest of the application. In the case of a hardware failure, a Contoso node may not have time to remove itself from the cluster; this is acceptable for them, as Cassandra can easily manage a single node failure.

However, for centralized applications, having a decentralized architecture for monitoring scheduled events does not work very well. Other customers with centralized applications have had the most success in nominating at least two nodes in their workload, typically the primary and one secondary, to monitor scheduled events for the entire cluster and each other. Since scheduled events are delivered to all VMs in a VMSS or Availability Set, a single VM can track pending events for all other VMs and issue control commands. The primary node is responsible for handling all scheduled events in the nominal case. If there is a failure impacting the primary node, the secondary can take over, nominate a new primary node, and inform all other nodes of the change.

It is highly discouraged to have only a single VM in a VMSS or Availability Set monitoring scheduled events. It is possible in rare circumstances to have a hardware failure impact that VM without giving it time to hand off the responsibility to another VM. Always having a second VM monitoring in a different fault domain reduces the chances of a cluster ending up leaderless.
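As a rough illustration of that recommendation, the Python sketch below picks two monitoring VMs from different fault domains and re-picks when one becomes unavailable. The selection rule (lowest name first) and all names are hypothetical; a real deployment would use its own election mechanism.

```python
from typing import Dict, FrozenSet, Tuple


def pick_monitors(
    fault_domain_by_vm: Dict[str, int],
    unavailable: FrozenSet[str] = frozenset(),
) -> Tuple[str, str]:
    """Return (primary, secondary) monitors placed in different fault domains."""
    candidates = sorted(vm for vm in fault_domain_by_vm if vm not in unavailable)
    if not candidates:
        raise ValueError("no VM is available to monitor scheduled events")
    primary = candidates[0]
    for candidate in candidates[1:]:
        if fault_domain_by_vm[candidate] != fault_domain_by_vm[primary]:
            return primary, candidate
    raise ValueError("monitors must be in at least two different fault domains")


vms = {"vm0": 0, "vm1": 0, "vm2": 1, "vm3": 2}
print(pick_monitors(vms))                                  # ('vm0', 'vm2')
print(pick_monitors(vms, unavailable=frozenset({"vm0"})))  # ('vm1', 'vm2')
```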

Approving Scheduled Events

Approving a scheduled event using a POST back to the API endpoint signals to Azure that your workload is prepared and the maintenance event can start. Sending that signal lets the maintenance start, and end, sooner, which increases the gap between maintenance operations on subsequent fault domains.

Azure interprets an approval back to the API endpoint to mean that your workload has completed any preparations and is ready for the impact stated in the event. Make sure to complete any preparation before approving the event or before the NotBefore time is reached, as Azure will start the maintenance shortly after either of those signals.
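An approval call could be sketched in Python as follows. The request body shape (`StartRequests` with an `EventId`) follows the public scheduled events documentation as best understood here, and the `api-version` value is an assumption, so both should be verified before use. The function names are hypothetical.

```python
import json
import urllib.request

# Link-local IMDS address; the api-version shown here is an assumption.
ENDPOINT = "http://169.254.169.254/metadata/scheduledevents?api-version=2020-07-01"


def build_approval_request(event_id: str, endpoint: str = ENDPOINT) -> urllib.request.Request:
    """Build the POST that tells Azure this event may start."""
    body = json.dumps({"StartRequests": [{"EventId": event_id}]}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Metadata": "true", "Content-Type": "application/json"},
        method="POST",
    )


def approve_event(event_id: str, timeout_seconds: float = 5.0) -> int:
    """Send the approval and return the HTTP status code."""
    request = build_approval_request(event_id)
    with urllib.request.urlopen(request, timeout=timeout_seconds) as response:
        return response.status
```

The call belongs at the very end of the preparation sequence, after the node has been disabled and the state saved, since Azure may begin the impact shortly after receiving it.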

Azure applies maintenance operations by moving between fault domains one at a time, using a fixed time window for each fault domain. By approving an operation using scheduled events, you can move the operation on your node earlier in the time window and increase the time between the end of the event and the start of the operations on the next fault domain. This can give your workload more time to recover before the next set of maintenance operations and scheduled events are created.

Correlating and Identifying Events in Logs

It is important for Contoso to have a log of any event impacting VM availability for their operations team, so they log scheduled events to Grafana after processing. When logging, they use the EventId for correlation, as the same event will be delivered to multiple VMs. An event will always have the same unique EventId when being delivered to multiple VMs. The DocumentIncarnation, however, is specific to each VM and cannot be used for correlation of events in any external tracking system.
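To illustrate why EventId is the right correlation key, the Python sketch below groups log records from several VMs by EventId. The record layout and function name are hypothetical; note that the same event appears with different DocumentIncarnation values on different VMs.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def correlate_by_event_id(log_records: Iterable[dict]) -> Dict[str, List[str]]:
    """Map each EventId to the sorted list of VMs that reported it."""
    grouped = defaultdict(set)
    for record in log_records:
        grouped[record["event_id"]].add(record["vm"])
    return {event_id: sorted(vms) for event_id, vms in grouped.items()}


records = [
    {"vm": "node-0", "event_id": "evt-A", "document_incarnation": 12},
    {"vm": "node-1", "event_id": "evt-A", "document_incarnation": 4},
    {"vm": "node-2", "event_id": "evt-B", "document_incarnation": 9},
]
print(correlate_by_event_id(records))
# {'evt-A': ['node-0', 'node-1'], 'evt-B': ['node-2']}
```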

Wrap-Up

This case study covered the background on scheduled events, Contoso’s availability needs, and finally how they are using scheduled events to achieve their goals. While Contoso’s deployment is larger than what many customers use, the same principles can be applied to smaller deployments or other decentralized applications. Scheduled events provide a window into upcoming impactful operations against VMs in Azure and should be leveraged by anyone looking to provide a high availability service on Azure IaaS.

Contributors:

Principal Author:

- Adam Wilson | Technical Program Manager

Contributors:

- Vince Johnson-Thacker | Principal Architect

- David Read | Principal Architect

Next Steps:

- Review scheduled events documentation on Microsoft Learn

- Learn more about planned maintenance on virtual machines on Microsoft Learn
