Infra in Azure for Developers - The How (Part 1)

Microsoft Developer Community Blog


We've looked at the why and what of infra for developers, so now it's time to put it into action with the how. What separates this from a more generic tutorial on Azure infra? It is not meant to cover the whole gamut of Azure services; it is intended to solve an isolated part of the puzzle, namely the deployment of developer output. You can certainly build on this or reuse it for other purposes, but this isn't about creating a complete platform as such.

Disclaimer: While I strive to follow best practices, the focus is on the concepts rather than on a battle-hardened solution. This is not production grade.

While writing, I noticed this would become a rather lengthy post, so I have split it into two parts, with this one laying the infrastructure foundation. (I feel we cover quite a lot here as well, so feel free to top up your coffee cup first.)

We need a problem to solve here - I believe the eShop sample is a good example of a reference application. There are actually multiple eShop versions:

- eShopOnWeb - https://github.com/dotnet-architecture/eShopOnWeb This is a monolithic implementation.

- "New" eShop - https://github.com/dotnet/eShop A microservices-based implementation using containers.

- eShopOnAzure - https://github.com/Azure-Samples/eShopOnAzure A microservices-based implementation using Azure services.

Both monolith and microservices are valid options for demonstrating how .NET works, but we will base ourselves on the microservices implementation here.

The code for this article can be found here: https://github.com/ahelland/infra-for-devs

It could make sense to base ourselves on the Azure version, but it doesn't seem quite ready yet (I'm seeing various issues trying to build it), so for now we will use the plain version.

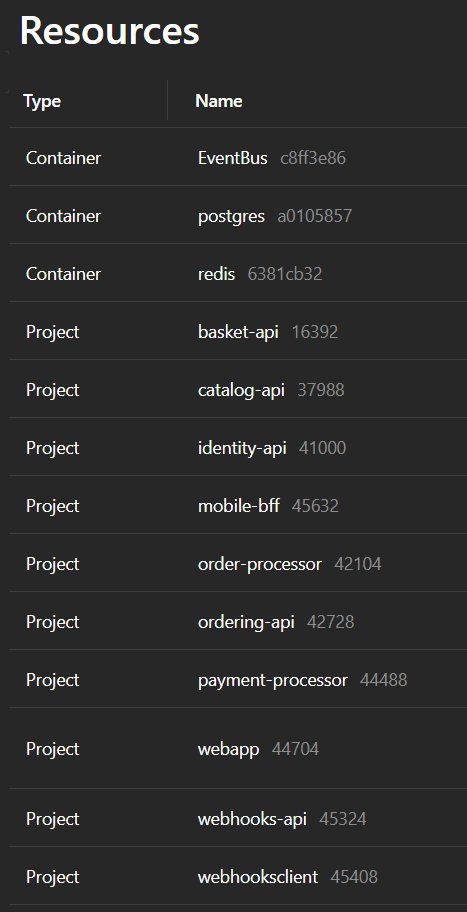

I cloned it to my disk and tried spinning it up using Aspire. The web app works, but the interesting bit right now is the Aspire Dashboard:

We can see that the three external components (in line with the architecture diagram) are packaged in containers, while the different microservices run un-containerized. For running in Azure it would probably make sense to put everything in containers. There is also a dependency on Duende IdentityServer for login functionality, but that is part of the code, so it doesn't require any extras as such.

Container hosting

This leads to the first infrastructure-related design question: which service should be used for hosting the containers? As a developer you have to make architectural choices about your code (which logging framework to use, which testing strategy, etc.), and the same applies to infrastructure.

"But I don't know about infra", or "I don't want to figure out those things"? If that is what you're thinking, you are bringing up a good point. My aim with these posts is to de-mystify the infrastructure parts of bringing a solution to Azure, but as I said in the first post, this is not about making developers do everything.

In our case we will go for Azure Container Apps because I believe it is a good abstraction on top of Kubernetes. Azure Kubernetes Service is also good, but unless you need to dig into Kubernetes details and customize accordingly, Container Apps is more developer-friendly.

I wanted to include a list of design goals, but it felt a little contrived. Sure, I want to follow best practices from Microsoft where possible; we'll tackle that as we go along. An extra challenge we will throw in is to see if we can make eShop run on a private network in Azure. (In the enterprise space many will prefer that.)

Tooling

Before we dive into the implementation, a few notes on the tooling. Visual Studio 2022 supports Bicep, but in my experience Visual Studio Code is the better tool for IaC.

You should also install the Azure CLI and PowerShell. While technically not required (for Bicep in general or this post), I also find it very useful to have WSL installed, as some things are just plain easier to do on Linux :)

And throw in the Bicep extension in VS Code as well. I also highly recommend the Polyglot Notebooks extension - that serves as my tool for deploying Bicep locally.

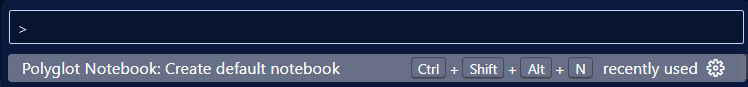

After installing the Polyglot Notebooks extension, open the Command Palette with Ctrl+Shift+P (the default on Windows) to create a new notebook:

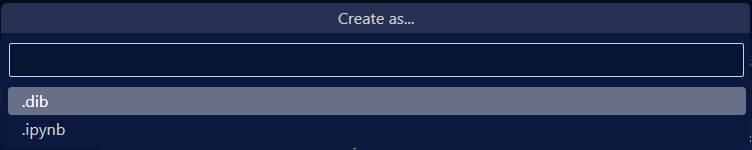

I've found the .dib format most useful for this purpose:

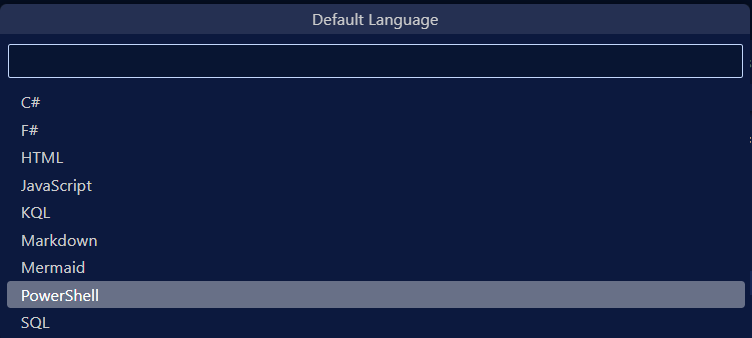

These are just the defaults; you can change them afterwards.

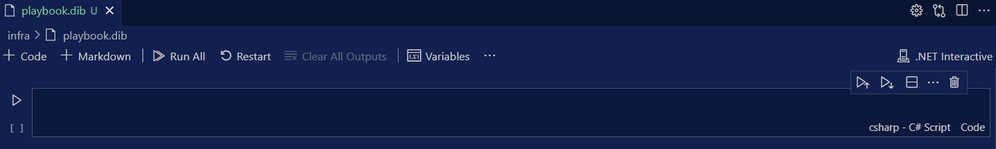

I chose to save it directly under /infra as playbook.dib. And now you have the option to execute code inline and explain with Markdown along the way:

Folder structure

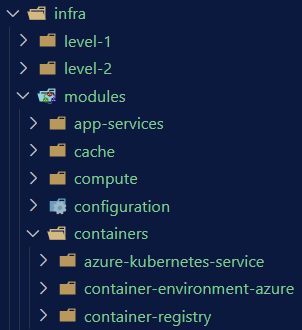

For now we just have infra in our repo (code to be added later) so we put the Bicep in a folder called /infra with sub-folders for modules and the levels.

I've seen various setups with regards to where one puts infrastructure-as-code (regardless of whether it's Bicep, Terraform or something else). Should it be in the same repo as the application code or in a separate repo? I have decided to co-locate it here to reduce complexity, but I tend to prefer having at least the modules in a separate repo. (The approach here is very solution-centric, so if you go down the route of building a platform you probably need to structure things differently.) But of course the whole monorepo vs multi-repo question is a discussion of its own, in which IaC is just another piece of the puzzle.

CI/CD

I think we can all agree that the age of compiling code and putting it on a USB stick for someone else to copy onto the server is over, and I believe you should build and deploy through Azure DevOps or GitHub. In this post I will not be using those services, for two reasons:

- Running things through a pipeline adds some complexity, since you have to type up some YAML, possibly build some private agents, fix permissions and so on. Maybe I'll do a follow-up post on that; I haven't decided.

- As a .NET developer your inner loop is pressing F5. You don't create a pull request for every piece of code you write before verifying that it compiles locally. While the ARM APIs enable a basic level of verification locally, attempting to deploy to Azure is basically the IaC developer's "F5 experience". (To a subscription where you are allowed to mess things up.)

Azure resources

Here is the component diagram of eShop: https://github.com/dotnet/eShop/blob/main/img/eshop_architecture.png

So, what will we need to create? A few things come to mind:

- Virtual network

- Private DNS Zone

- Azure Container App Environment

- Azure Container Registry

- Container Apps

If I were to create an app from scratch I would probably have used different components; replacing RabbitMQ with Azure Service Bus is an obvious choice. But the choices made while coding eShop are not our concern for now.

Bicep modules

A thing that might be a bit frustrating at first when using Bicep is how you're forced to use modules. In C# you can choose whether to use interfaces, dependency injection, put things in a shared dll and so on; you don't want to go down the route of "Hello World Enterprise Edition" for all your apps. Due to how scopes work with ARM, you don't get the same flexibility with Bicep. Once you get used to it, it's fairly pain-free though, and I think it provides a clear abstraction between definitions and instantiations. (Side note: Terraform has solved this by creating additional abstractions on top of ARM that take care of this when you use the AzureRM provider, but underneath it's the same.)

Personally I use the Bicep Registry Module tool which is an additional installation:

https://www.nuget.org/packages/Azure.Bicep.RegistryModuleTool

So, for something like a container app environment module you would do the following:

- Create a subfolder containers for the namespace.

- Create a subfolder container-environment. (You can choose the name.)

- Change to the subfolder and run brm generate to scaffold the files.

- Fill in metadata.

- Write the actual module code.

- Create main.test.bicep (in the modules test subfolder).

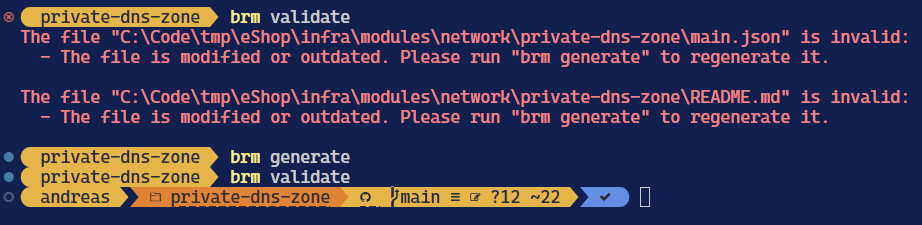

- Run brm validate to check you have missed a description or something.

I usually apply a PSRule step at the end as well, but we can get back to that later. (PSRule checks your Bicep against the Azure baselines to make suggestions on improvements.)

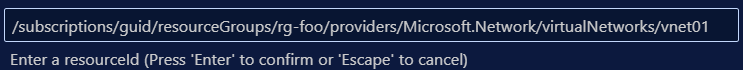

How to create a module? Let's use the private DNS Zone as an example. (Mostly because it's not too code heavy.)

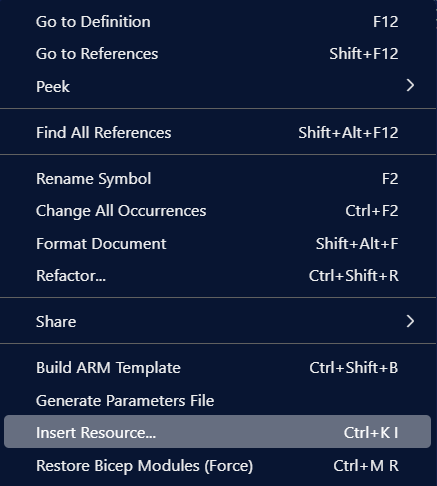

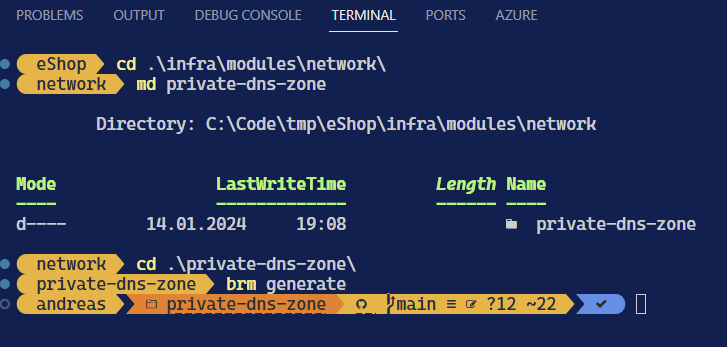

You can create it through click-ops in the Azure Portal and import to VS Code afterwards with the Insert Resource action:

That will create Bicep for that specific instance so you need to make it generic yourself.

Once you are more comfortable with it, you can start with the reference docs and work it out manually:

https://learn.microsoft.com/en-us/azure/templates/

Or some combination of the two as it's not always that easy to figure out the reference docs either.

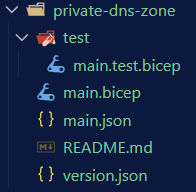

The docs tell us that the Private DNS Zone is part of the Network provider, so while in the /infra/modules/network folder we create a sub-folder and let brm scaffold the files for us:

Which will give you the following files:

main.json is generated by the tooling, so you can ignore it. README.md is also generated, though you can add to it manually if you like.

version.json needs a version number added manually like this:

{
  "$schema": "https://aka.ms/bicep-registry-module-version-file-schema#",
  "version": "0.1",
  "pathFilters": [
    "./main.json"
  ]
}

main.bicep is the important part, and as a minimum you need to fill in some metadata first:

metadata name = 'Private DNS Zone'
metadata description = 'A module for generating an empty Private DNS Zone.'
metadata owner = 'ahelland'

And then you add some more Bicep code:

@description('Tags retrieved from parameter file.')
param resourceTags object = {}

@description('The name of the DNS zone to be created. Must have at least 2 segments, e.g. hostname.org')
param zoneName string

@description('Enable auto-registration for virtual network.')
param registrationEnabled bool

@description('The name of vnet to connect the zone to (for naming of link). Null if registrationEnabled is false.')
param vnetName string?

@description('Vnet to link up with. Null if registrationEnabled is false.')
param vnetId string?

resource zone 'Microsoft.Network/privateDnsZones@2020-06-01' = {
  name: zoneName
  location: 'global'
  tags: resourceTags

  resource vnet 'virtualNetworkLinks@2020-06-01' = if (!empty(vnetName)) {
    name: '${vnetName}-link'
    location: 'global'
    properties: {
      registrationEnabled: registrationEnabled
      virtualNetwork: {
        id: vnetId
      }
    }
  }
}

You should be able to figure out most of these things, I assume.

For static code validation purposes you also need to add code to main.test.bicep:

targetScope = 'subscription'

param location string = 'norwayeast'

//Tag values must be strings, so no 'value' wrapper as in a parameter file
param resourceTags object = {
  IaC: 'Bicep'
  Environment: 'Test'
}

resource rg_dns 'Microsoft.Resources/resourceGroups@2022-09-01' = {
  name: 'contoso-dns'
  location: location
  tags: resourceTags
}

module dnsZone '../main.bicep' = {
  scope: rg_dns
  name: 'dns'
  params: {
    resourceTags: resourceTags
    registrationEnabled: false
    vnetId: ''
    vnetName: ''
    zoneName: 'contoso.com'
  }
}

Note that I am only passing empty strings for the virtual network properties here. I could of course supply dummy values, but this module in isolation does not provide a virtual network, and it is also a valid use case to not enable automatic registration. (They are also nullable params in the main.bicep file, so that's why I'm not getting any complaints.)
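To make the conditional link concrete, here is a small Python sketch (not part of the repo; planned_resources is a hypothetical helper) that mirrors the module's logic: the zone is always planned, and the virtual network link only when vnetName is non-empty, which is how Bicep's empty() treats both '' and null. The two-segment rule is only stated in the zoneName description of the module; enforcing it here is an assumption of the sketch.

```python
def planned_resources(zone_name, registration_enabled, vnet_name=None, vnet_id=None):
    """Return the resources the private DNS zone module would deploy.

    Hypothetical Python mirror of the Bicep module, for illustration only.
    """
    # Assumption: enforce the "at least 2 segments" rule from the zoneName description
    segments = zone_name.split(".")
    if len(segments) < 2 or not all(segments):
        raise ValueError(f"zone name needs at least two segments: {zone_name!r}")

    resources = [{"type": "Microsoft.Network/privateDnsZones", "name": zone_name}]

    # Mirrors `if (!empty(vnetName))`: None and '' both count as empty, so no link
    if vnet_name:
        resources.append({
            "type": "Microsoft.Network/privateDnsZones/virtualNetworkLinks",
            "name": f"{zone_name}/{vnet_name}-link",
            "registrationEnabled": registration_enabled,
            "virtualNetworkId": vnet_id,
        })
    return resources
```

With the values from main.test.bicep (empty strings) only the zone is planned; with the level-2 values for the ACR zone you also get the eshop-vnet-weu-link child resource.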

Now your module is done and can be verified with the brm validate command. As you will see, you need to run brm generate once more to regenerate the files (brm validate will also call out things like forgetting to decorate params with descriptions).
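To get a feel for the kind of thing brm validate flags, here is a rough Python approximation of one of its checks: finding params without a @description decorator. It is a hypothetical, regex-based sketch that assumes single-line decorators; it is not how brm itself is implemented.

```python
import re

# Matches the start of a parameter declaration, e.g. "param zoneName string"
PARAM_RE = re.compile(r"^param\s+(\w+)")


def params_missing_description(bicep_source: str) -> list[str]:
    """Return names of params that have no @description decorator.

    Rough approximation only: assumes each decorator fits on one line.
    """
    missing = []
    decorators = []
    for raw_line in bicep_source.splitlines():
        line = raw_line.strip()
        # Blank lines and // comments neither add nor reset decorators
        if not line or line.startswith("//"):
            continue
        if line.startswith("@"):
            decorators.append(line)
            continue
        match = PARAM_RE.match(line)
        if match and not any(
            d.startswith(("@description(", "@sys.description(")) for d in decorators
        ):
            missing.append(match.group(1))
        # Any other statement ends the current run of decorators
        decorators = []
    return missing
```

Pointing it at a main.bicep read from disk gives a quick list of params to decorate before running the real tool.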

Do I have to write all these modules from scratch? Not necessarily. As with so many things in life, it depends. Microsoft has several options on offer to reduce your module-making job:

- Bicep Registry Modules: https://github.com/Azure/bicep-registry-modules

- CARML: https://github.com/Azure/ResourceModules

- Azure Verified Modules: https://azure.github.io/Azure-Verified-Modules/

Yeah, that's not confusing at all :) The current line of thinking is that they will converge into the Verified Modules initiative, but we're not quite there yet. In short - there might be a ready-made module for you, and there might not.

Another nifty thing about modules is that you can push them to a container registry and consume them as independent artifacts. This enables use cases like Microsoft offering the Bicep modules above and you sharing modules internally between projects. It also raises a question: how can I consume modules when I use Bicep to create the registry? You clearly cannot consume from a registry you haven't created yet. You're not forced to do so either; you can refer to modules directly through the file system. In a more complex setup you might want a separate registry for Bicep modules, bootstrapped at an earlier stage than the deployment of the app infra.

Bicep modules can be published (to the registry) like this:

$target="br:contoso.azurecr.io/bicep/modules/private-dns-zone"

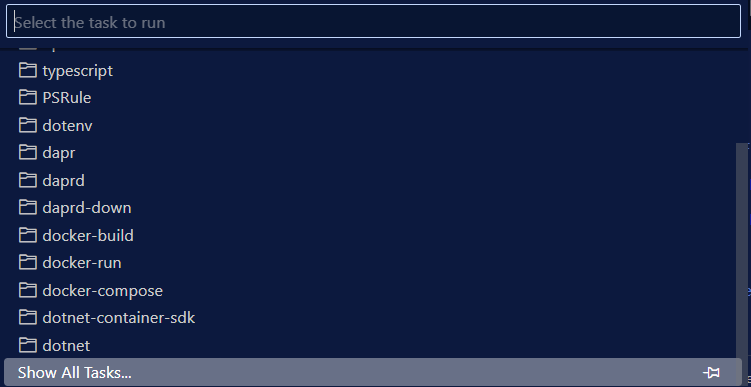

az bicep publish --file main.bicep --target $target --verbosePro-tip: this doesn't scale so use the tasks feature in VS Code.

- Create a subfolder called tasks under /infra (you can choose a different name)

- Create a PowerShell script - I called mine publish_modules.ps1 (replace the value of $registryName):

#Loop through the modules directory, retrieve all modules, extract version number and publish.
#Run from /infra (paths are relative). Replace with the name of your registry.
$registryName="contosoacr"

#Each top-level folder under modules is a namespace (e.g. network, containers)
$rootList = (Get-ChildItem -Path modules -Recurse -Directory -Depth 0 | Select-Object Name)
foreach ($subList in $rootList)
{
    $namespace=$subList.name
    #Each folder under a namespace is a module with its own version.json
    foreach ($modules in $(Get-ChildItem -Path ./modules/$namespace -Recurse -Directory -Depth 0 | Select-Object Name))
    {
        $module=$modules.Name
        $version=(Get-Content ./modules/$namespace/$module/version.json -Raw | ConvertFrom-Json).version
        $target="br:" + $registryName + ".azurecr.io/bicep/modules/" + $namespace + "/" + $module + ":v" + $version
        az bicep publish --file ./modules/$namespace/$module/main.bicep --target $target --verbose
    }
}

- Create .vscode as a subfolder under /infra and create a tasks.json with the following contents:

{

"version": "2.0.0",

"tasks": [

{

"label": "Publish modules to container registry",

"type": "shell",

"command": "./tasks/publish_modules.ps1",

"presentation": {

"reveal": "always",

"panel": "dedicated"

}

}

]

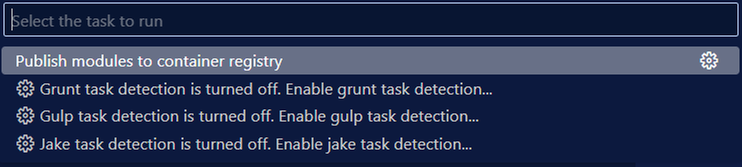

}If you invoke Ctrl-Shift-P now you will have a new task at your disposal:

Since we haven't created the registry yet, it won't work just yet though.
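Until the registry exists, a dry run that only computes the publish targets can still catch version or naming mistakes. Below is a minimal Python sketch, assuming the modules/namespace/module/version.json layout used above; module_targets is a hypothetical helper that reproduces the tag format of publish_modules.ps1 without calling az.

```python
import json
from pathlib import Path


def module_targets(modules_root: Path, registry_name: str) -> list[tuple[Path, str]]:
    """Compute (main.bicep path, br: target) pairs without publishing anything.

    Same naming as publish_modules.ps1:
    br:REGISTRY.azurecr.io/bicep/modules/NAMESPACE/MODULE:vVERSION
    """
    targets = []
    # First level: namespaces such as network or containers
    for namespace in sorted(p for p in modules_root.iterdir() if p.is_dir()):
        # Second level: one folder per module, each with a version.json
        for module in sorted(p for p in namespace.iterdir() if p.is_dir()):
            version_file = module / "version.json"
            version = json.loads(version_file.read_text(encoding="utf-8"))["version"]
            target = (
                f"br:{registry_name}.azurecr.io/bicep/modules/"
                f"{namespace.name}/{module.name}:v{version}"
            )
            targets.append((module / "main.bicep", target))
    return targets
```

Run from /infra, module_targets(Path("modules"), "contosoacr") lists one (path, target) pair per module, which you can compare against what the PowerShell task would publish once the registry is in place.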

Infrastructure levels

I mentioned previously that the layered infra approach from the Cloud Adoption Framework makes sense, so we will follow that pattern and deploy accordingly. Mind you, it probably makes sense to explain my numbering here. Sometimes you have a level 0: that's where you do bootstrapping that isn't part of the actual deployment of resources, for instance prep work like registering resource providers so the rest can work.

Level 1 is where you would do governance things like setting up a Log Analytics workspace for the infra resources, or maybe a Key Vault for encryption keys you need later. We will not use a level 1 and will skip straight to level 2.

Level 2

This is where we start creating stuff. Since we want things to run on a private network we need to create a network (with subnets) and a private DNS zone. Some extra notes on these are probably helpful to set the context.

Take care where you deploy your vnet, as it needs to align with the services you need. If you deploy your network to North Europe but the services you want are only available in East US, that's not going to work. If you like trying out services in preview, be aware that these are very commonly not offered in all regions. (You can create multiple networks in different regions and connect them, but that is far out of scope for this blog post.)

Private DNS zones take care of name resolution, but as the name implies they are private. You can create a zone for microsoft.com, and since it is not exposed to the internet that's not a problem. That is, until you sit on your developer laptop with no means of connecting to the network to resolve those names. The easy fix in our context is to create Azure Dev Boxes attached to the vnet. The "proper" fix is to create a connection between your on-premises network and Azure (using S2S VPN or ExpressRoute) and set up a private DNS resolver. For that matter, you could also set up P2S VPN and have each developer tunnel their laptop into Azure. Once again, slightly out of scope here.

And by now you're probably thinking your head hurts. I know. Isn't there an easy way out? Well, yes, avoid using private networks and follow the defaults that give you public access on every resource. Clearly not the response you will get from the network team though if you have to run things by them :)

Our level-2 ends up being fairly stripped down:

targetScope = 'subscription'

@description('Azure region to deploy resources into.')
param location string

@description('Tags retrieved from parameter file.')
param resourceTags object = {}

resource rg_vnet 'Microsoft.Resources/resourceGroups@2021-04-01' = {
  name: 'rg-eshop-vnet'
  location: location
  tags: resourceTags
}

resource rg_dns 'Microsoft.Resources/resourceGroups@2021-04-01' = {
  name: 'rg-eshop-dns'
  location: location
  tags: resourceTags
}

param vnetName string = 'eshop-vnet-weu'

module vnet 'br/public:network/virtual-network:1.1.3' = {
  scope: rg_vnet
  name: 'eshop-vnet-weu'
  params: {
    name: vnetName
    location: location
    addressPrefixes: [
      '10.1.0.0/16'
    ]
    subnets: [
      {
        name: 'snet-devbox-01'
        addressPrefix: '10.1.1.0/24'
        privateEndpointNetworkPolicies: 'Enabled'
      }
      {
        name: 'snet-cae-01'
        addressPrefix: '10.1.2.0/24'
        privateEndpointNetworkPolicies: 'Enabled'
        delegations: [
          {
            name: 'Microsoft.App.environments'
            properties: {
              serviceName: 'Microsoft.App/environments'
            }
            type: 'Microsoft.Network/virtualNetworks/subnets/delegations'
          }
        ]
      }
      {
        name: 'snet-pe-01'
        addressPrefix: '10.1.3.0/24'
        privateEndpointNetworkPolicies: 'Enabled'
      }
    ]
  }
}

//We import the vnet just created to be able to read the properties
resource vnet_import 'Microsoft.Network/virtualNetworks@2023-06-01' existing = {
  scope: rg_vnet
  name: vnetName
}

//Private endpoint DNS
module dnsZoneACR '../modules/network/private-dns-zone/main.bicep' = {
  scope: rg_dns
  name: 'eshop-private-dns-acr'
  //Referencing an existing resource does not create an implicit dependency, so wait for the vnet module explicitly
  dependsOn: [
    vnet
  ]
  params: {
    resourceTags: resourceTags
    registrationEnabled: false
    vnetId: vnet_import.id
    vnetName: vnetName
    zoneName: 'privatelink.azurecr.io'
  }
}

The network module is from the Microsoft public registry because the needs here do not require a custom module.
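Before deploying, it can be worth sanity-checking the address plan. The Python sketch below (check_address_plan is a hypothetical helper using only the standard ipaddress module) verifies that every subnet lies inside the vnet range and that no two subnets overlap, and reports the usable addresses per subnet after the five that Azure reserves in each subnet.

```python
import ipaddress

# Azure reserves five addresses in every subnet (the first four and the last)
AZURE_RESERVED_PER_SUBNET = 5


def check_address_plan(vnet_prefix: str, subnets: dict[str, str]) -> dict[str, int]:
    """Validate that subnets fit inside the vnet without overlapping.

    Returns the number of usable addresses per subnet; raises ValueError otherwise.
    """
    vnet = ipaddress.ip_network(vnet_prefix)
    networks = {name: ipaddress.ip_network(prefix) for name, prefix in subnets.items()}

    for name, net in networks.items():
        if not net.subnet_of(vnet):
            raise ValueError(f"{name} ({net}) is outside the vnet range {vnet}")

    names = list(networks)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if networks[first].overlaps(networks[second]):
                raise ValueError(f"{first} and {second} overlap")

    return {name: net.num_addresses - AZURE_RESERVED_PER_SUBNET for name, net in networks.items()}


# The level-2 address plan from the template
LEVEL2_SUBNETS = {
    "snet-devbox-01": "10.1.1.0/24",
    "snet-cae-01": "10.1.2.0/24",
    "snet-pe-01": "10.1.3.0/24",
}
```

check_address_plan("10.1.0.0/16", LEVEL2_SUBNETS) passes for the plan above, leaving plenty of the /16 free for later subnets.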

And here's where the polyglot notebooks come in handy. I create small blocks of code like this which can be run with the Play icon in the upper left corner:

# A what-if to point out errors not caught by the linter
#az deployment sub what-if --location westeurope --name level-2 --template-file .\level-2\main.bicep --parameters .\level-2\main.bicepparam
# A stand-alone deployment
# az deployment sub create --location westeurope --name level-2 --template-file .\level-2\main.bicep --parameters .\level-2\main.bicepparam
# A deployment stack
az stack sub create --name eshop-level-2 --location westeurope --template-file .\level-2\main.bicep --parameters .\level-2\main.bicepparam --deny-settings-mode none

We haven't really touched upon the functionality of Deployment Stacks, but I use them here as a logical collection of everything that goes into a level.
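If you prefer scripting over notebook cells, the same commands can be generated per level. This is a hypothetical Python helper, assuming the level-N/main.bicep and level-N/main.bicepparam layout used here; it only builds argument lists and uses no flags beyond the ones in the cell above.

```python
from pathlib import PurePosixPath


def level_commands(level: int, location: str = "westeurope") -> dict[str, list[str]]:
    """Build the az argument lists from the notebook cells for one level.

    Hypothetical helper; assumes level-N/main.bicep and level-N/main.bicepparam.
    """
    folder = PurePosixPath(f"level-{level}")
    template = str(folder / "main.bicep")
    params = str(folder / "main.bicepparam")
    return {
        # Preview changes without deploying anything
        "what-if": [
            "az", "deployment", "sub", "what-if",
            "--location", location,
            "--name", f"level-{level}",
            "--template-file", template,
            "--parameters", params,
        ],
        # Deploy everything in the level as one deployment stack
        "stack": [
            "az", "stack", "sub", "create",
            "--name", f"eshop-level-{level}",
            "--location", location,
            "--template-file", template,
            "--parameters", params,
            "--deny-settings-mode", "none",
        ],
    }
```

Each list could be handed to subprocess.run; on Windows, where az is a batch file, you may need to resolve its full path with shutil.which first.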

Since this is a private virtual network, and I'm not able to provide a working VPN configuration specific to your needs, I've also included an option for deploying Dev Boxes connected to this vnet. The details of Dev Box were covered in my previous post if you want to know more about that.

Level 3

A lot of the good things happen here. This is where we deploy our container environment and our container registry. You might think this is where you deploy the apps as well, but we will do that on the subsequent level. The Azure Portal experience is a bit misleading here: if you try to create a container environment, you must create an app at the same time. However, if you work from IaC this is not a requirement. If you set up deployment of more than one container app, you will appreciate splitting the environment instantiation from the apps deployment.

Networking creeps in here even if it belongs to the previous level. The container registry needs private endpoints so they are created here, and attached to the dedicated subnet we have created. A more interesting bit is that we need to create a private DNS zone for the container environment. The environment gets a Microsoft-generated name so we can't pre-create this. We also choose to put it in the resource group for the environment rather than our dedicated DNS resource group. Remember - resource groups are about life cycle. This DNS zone only has value when the container environment exists so that's where it belongs. This also highlights that the levels are general guidelines not strict rules.

Think about dependency hierarchies in code. Having class A depend on class B works. But if class B depends on class C that might introduce an indirect dependency from class A to class C and those can be tricky to figure out when things break. Work through the mental model of your resources in Azure the same way.
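The same discipline can be checked mechanically. A small Python sketch: level_violations and the ESHOP_LEVELS map are hypothetical, with the names hand-copied from the resource groups, vnet and DNS zone in this post's level-2 and level-3 templates. It flags anything a level uses that neither the level itself nor an earlier one creates.

```python
def level_violations(levels: dict[int, dict[str, set[str]]]) -> list[str]:
    """Report anything a level uses that neither it nor an earlier level creates."""
    created: set[str] = set()
    problems = []
    for level in sorted(levels):
        created |= levels[level].get("creates", set())
        for name in sorted(levels[level].get("uses", set()) - created):
            problems.append(f"level {level} uses {name}, which is not created at or before this level")
    return problems


# Hypothetical map of what each level creates and what it references as existing
ESHOP_LEVELS = {
    2: {"creates": {"rg-eshop-vnet", "rg-eshop-dns", "eshop-vnet-weu", "privatelink.azurecr.io"}},
    3: {
        "creates": {"rg-eshop-cae", "rg-eshop-acr"},
        "uses": {"rg-eshop-vnet", "rg-eshop-dns", "eshop-vnet-weu", "privatelink.azurecr.io"},
    },
}
```

An empty list means dependencies only point downwards; a level 2 that referenced the ACR resource group from level 3 would be reported.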

Our level-3 ends up like this:

targetScope = 'subscription'

@description('Azure region to deploy resources into.')
param location string

@description('Tags retrieved from parameter file.')
param resourceTags object = {}

resource rg_vnet 'Microsoft.Resources/resourceGroups@2021-04-01' existing = {
  name: 'rg-eshop-vnet'
}

resource rg_dns 'Microsoft.Resources/resourceGroups@2021-04-01' existing = {
  name: 'rg-eshop-dns'
}

resource rg_cae 'Microsoft.Resources/resourceGroups@2021-04-01' = {
  name: 'rg-eshop-cae'
  location: location
  tags: resourceTags
}

param vnetName string = 'eshop-vnet-weu'

resource vnet 'Microsoft.Network/virtualNetworks@2023-06-01' existing = {
  scope: rg_vnet
  name: vnetName
}

resource rg_acr 'Microsoft.Resources/resourceGroups@2021-04-01' = {
  name: 'rg-eshop-acr'
  location: location
  tags: resourceTags
}

//Registry names are globally unique; a constant seed alone would give the same name in every subscription
param acrName string = 'acr${uniqueString(subscription().id, 'eshop-acr')}'

//Private Endpoints require Premium SKU
param acrSku string = 'Premium'
param acrManagedIdentity string = 'SystemAssigned'

module containerRegistry '../modules/containers/container-registry/main.bicep' = {
  scope: rg_acr
  name: acrName
  params: {
    resourceTags: resourceTags
    acrName: acrName
    acrSku: acrSku
    adminUserEnabled: false
    anonymousPullEnabled: false
    location: location
    managedIdentity: acrManagedIdentity
    publicNetworkAccess: 'Disabled'
  }
}

//Private endpoints (two required for ACR)
module peAcr 'acr-pe-endpoints.bicep' = {
  scope: rg_acr
  name: 'pe-acr'
  params: {
    resourceTags: resourceTags
    location: location
    peName: 'pe-acr'
    serviceConnectionGroupIds: 'registry'
    serviceConnectionId: containerRegistry.outputs.id
    snetId: '${vnet.id}/subnets/snet-pe-01'
  }
}

module acr_dns_pe_0 '../modules/network/private-dns-record-a/main.bicep' = {
  scope: rg_dns
  name: 'dns-a-acr-region'
  params: {
    ipAddress: peAcr.outputs.ip_0
    recordName: '${containerRegistry.outputs.acrName}.${location}.data'
    zone: 'privatelink.azurecr.io'
  }
}

module acr_dns_pe_1 '../modules/network/private-dns-record-a/main.bicep' = {
  scope: rg_dns
  name: 'dns-a-acr-root'
  params: {
    ipAddress: peAcr.outputs.ip_1
    recordName: containerRegistry.outputs.acrName
    zone: 'privatelink.azurecr.io'
  }
}

module containerenvironment '../modules/containers/container-environment/main.bicep' = {
  scope: rg_cae
  name: 'eshop-cae-01'
  params: {
    location: location
    environmentName: 'eshop-cae-01'
    snetId: '${vnet.id}/subnets/snet-cae-01'
  }
}

module dnsZone '../modules/network/private-dns-zone/main.bicep' = {
  scope: rg_cae
  name: '${containerenvironment.name}-dns'
  params: {
    resourceTags: resourceTags
    registrationEnabled: false
    zoneName: containerenvironment.outputs.defaultDomain
    vnetName: 'cae'
    vnetId: vnet.id
  }
}

module userMiCAE '../modules/identity/user-managed-identity/main.bicep' = {
  scope: rg_cae
  name: 'eshop-cae-user-mi'
  params: {
    location: location
    miname: 'eshop-cae-user-mi'
  }
}

module acrRole '../modules/identity/role-assignment-rg/main.bicep' = {
  scope: rg_acr
  name: 'eshop-cae-mi-acr-role'
  params: {
    principalId: userMiCAE.outputs.managedIdentityPrincipal
    principalType: 'ServicePrincipal'
    roleName: 'AcrPull'
  }
}

A few things of note here:

- We need properties from the virtual network we created. We solve this by importing it with the existing keyword and referencing its properties.

- The private networking feature of the container registry requires the Premium SKU. (Whenever a feature is "enterprisey", don't be surprised if it costs more.)

- While the private endpoint concept works across all resources, the details can differ. For ACR the private endpoint gets two IP addresses (one for the registry itself and one for its regional data endpoint), each needing its own DNS record. For something like Storage you need endpoints for each service you use: one for blob, one for tables, etc. We create a module specifically for ACR in this case.

- The DNS zone depends on the Container Environment being created first so we can retrieve the domain name, but Bicep figures this out from our cross-resource references since we work in the same scope.

- We create a user-assigned identity to be able to pull images from ACR when creating the container apps. A container app can have a system-assigned identity as well (great for granular access control), but it can't be used for pulling the image, as that would create a chicken-and-egg problem: the identity doesn't exist until the app has been created. (Workarounds are available, but not recommended.)
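Two of the notes above lend themselves to a quick Python sketch. The function names and the reference map are hypothetical and hand-copied from the level-3 template: acr_private_dns_records returns the A-record names the ACR private endpoint needs in privatelink.azurecr.io, and deployment_order derives one order in which the modules can deploy from their cross-references. ARM runs independent modules in parallel, so this is one valid sequence rather than the exact one ARM will use.

```python
import re
from graphlib import TopologicalSorter


def acr_private_dns_records(registry_name: str, location: str) -> list[str]:
    """Relative A-record names created in privatelink.azurecr.io by the level-3 code."""
    # Registry names are 5-50 alphanumeric characters
    if not re.fullmatch(r"[A-Za-z0-9]{5,50}", registry_name):
        raise ValueError(f"invalid registry name: {registry_name!r}")
    # Same names as acr_dns_pe_0 (regional data endpoint) and acr_dns_pe_1 (registry)
    return [f"{registry_name}.{location}.data", registry_name]


# Module -> modules it references in the level-3 template (existing resources left out)
LEVEL3_REFERENCES = {
    "containerRegistry": set(),
    "peAcr": {"containerRegistry"},
    "acr_dns_pe_0": {"peAcr", "containerRegistry"},
    "acr_dns_pe_1": {"peAcr", "containerRegistry"},
    "containerenvironment": set(),
    "dnsZone": {"containerenvironment"},
    "userMiCAE": set(),
    "acrRole": {"userMiCAE"},
}


def deployment_order(references: dict[str, set[str]]) -> list[str]:
    """One sequence that respects every reference (predecessors come first)."""
    return list(TopologicalSorter(references).static_order())
```

The order places containerenvironment before dnsZone and userMiCAE before acrRole without any dependsOn, which is exactly what the implicit references give you in Bicep.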

This takes care of the classic infra stuff if you will - feels more like coding, right? It may very well be that this is handled by your DevOps engineer should you have one, or your platform team for that matter. At this lab scale it should be manageable for a dev as well; either way - it's useful background information to broaden your horizon.

It also closes off part one of this walkthrough. The rest of the code can be found here: https://github.com/ahelland/infra-for-devs

We still have the actual services to deploy, and that will be covered in part two of this walkthrough.
