Semantic Kernel-Powered OpenAI Plugin Development Lifecycle

Microsoft Developer Community Blog

Introduction

OpenAI's large language models (LLMs) hold immense potential for integrating AI capabilities into third-party apps, but they also come with challenges. In this blog, we'll explore the process of creating an OpenAI plugin.

We'll discuss the capabilities and challenges of integrating LLMs into third-party apps and introduce OpenAI plugins, with a focus on Semantic Kernel.

Our practical journey involves creating an OpenAI plugin for Log Analytics, specifically for querying sign-in logs. We'll cover native and semantic functions, including crafting effective prompts and eliciting the desired responses from the model. We'll also look at how memory can personalize the user experience and how integrating Bing can supply up-to-date information.

We'll conclude by discussing dynamic execution sequencing with a planner. In just 10 minutes of reading, you'll have a solid grasp of OpenAI plugins and the tools to start building your own. Let's embark on this journey to master OpenAI plugin development!

Understanding the LLM's Capabilities and Confronting Its Challenges

In our quest to harness the potential of AI within our software, it is imperative to not only appreciate the capabilities of the Language Model but also to recognize the challenges it presents. Let's explore the key facets we need to consider in this journey.

Capabilities

- Prose and Code Development:

The model is strong at both prose and code. It can generate and refine written content with a natural, coherent flow, and it can speed up coding tasks such as drafting, explaining, and reviewing code.

- Text Summarization:

The model can condense long documents or dense research papers into concise, informative summaries, saving you time and effort.

- Content Classification:

The model can categorize and classify text or data, which simplifies content management. This is particularly useful for organizations that must organize large volumes of data.

- Question Answering:

The model can give contextually relevant answers to questions, which makes it useful for research and information lookup. Its answers are limited to what it learned during training or what you supply in the prompt (see Challenges below).

- Language Translation and Conversion:

The model can translate text between natural languages and convert code between programming languages, which helps with global communication and a range of coding tasks.

In summary, the model offers a versatile toolkit: generating and enhancing prose and code, summarizing text, classifying content, answering questions, and translating or converting between languages. This makes it a powerful asset for professionals in many fields.

Challenges

While large language models are remarkable at generating text, there are several tasks that they cannot autonomously tackle. These challenges include, but are not limited to:

- Retrieving data from external sources.

- Knowing real-time information, such as the current time.

- Performing complex mathematical calculations.

- Executing physical tasks in the real world.

- Memorizing and recalling specific pieces of information.

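These limitations stem from the nature of the model and how it is deployed:

- The model is text-based and focused on natural language processing.

- Its training data has a cutoff (2021 for the models discussed here), so it has no knowledge of later events.

- It was trained on publicly available data, so it knows nothing about your private or in-house data.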

- Addressing bias and ensuring model fairness is an ongoing concern.

- Integration with other components, including in-house applications, requires thoughtful consideration.

- Efficient usage demands attention to cost and token limits.

Understanding these capabilities and challenges is essential in effectively leveraging the Language Model's potential while remaining cognizant of its inherent limitations. It's a journey of exploration and comprehension that holds significant promise for the future of AI integration.

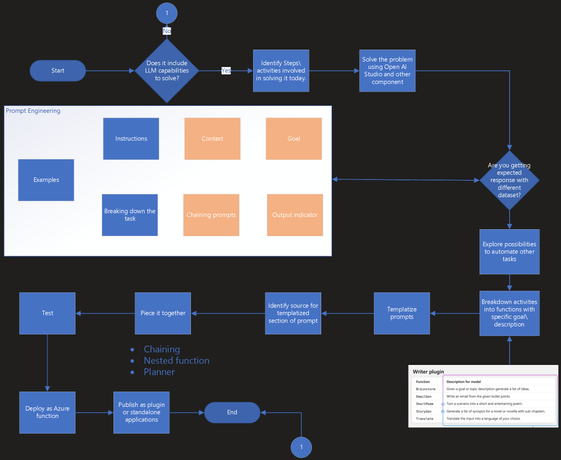

Development Process: Running Natural-Language Queries Against Log Analytics

Imagine you have a scenario where you need to extract information from your log analytics data, and it involves tasks that require the capabilities of the Language Model. Let's walk through the development process for solving this scenario using OpenAI Studio and other components.

- Tasks that Require LLM Capabilities: Yes. Converting a natural-language request into a KQL query is a language-based task.

- Steps/Activities Involved in Solving It Today:

  - Share the request, such as "please share all sign-in locations," with Kusto Query Language (KQL) and Log Analytics experts.

  - Create a KQL query.

  - Log in to the Log Analytics workspace.

  - Run the query against the Log Analytics workspace.

  - If the query doesn't return the desired response, iterate through trial and error.

- Solving the Problem Using OpenAI Studio and Other Components:

  - Ask OpenAI to generate a KQL query from the natural-language request.

  - Connect to the Log Analytics workspace.

  - Execute the generated query.

- Refine Prompt:

  - Context: Given an input question, create a syntactically correct {dialect} query and retrieve the results.

  - Input Data: The schema of the SigninLogs table.

  - Specific Goal: Create a syntactically correct {dialect} query.

  - Output Indicator: A sample question followed by its corresponding KQL query.

  - Examples: KQL queries for questions such as "Show all SigninLogs events," "Show all failed MFA challenges," and "Show all failed login counts."

- Explore Possibilities to Automate Other Tasks:

  - Connect to Log Analytics.

  - Run the KQL query.

- Identify Functions with Specific Goals:

The key advantage of Semantic Kernel is that it lets you run AI services alongside native code, treating calls to AI services as first-class citizens called "semantic functions." This makes it easier to build complex applications that combine traditional code and AI services.

Look for semantic and native functions that are relevant to your problem. Semantic functions handle tasks that need an AI service, while native functions handle tasks that traditional code can do. For this scenario:

- `KQLquerySignin` (semantic): generates KQL queries for the SigninLogs table.

- `exequery` (native): executes a Kusto query against the Log Analytics workspace.
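To make the semantic/native split concrete, here is a minimal sketch of a kernel-like registry. It is not Semantic Kernel's API: `MiniKernel`, `fake_llm`, and the single-argument signatures are hypothetical, and the LLM and Log Analytics calls are replaced by stand-ins so the flow can run offline.

```python
from typing import Callable, Dict


class MiniKernel:
    """Toy registry that treats prompt-backed and code-backed functions alike."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm  # any text-in/text-out completion callable
        self.functions: Dict[str, Callable[[str], str]] = {}

    def add_native(self, name: str, fn: Callable[[str], str]) -> None:
        # Native function: ordinary code, no model call.
        self.functions[name] = fn

    def add_semantic(self, name: str, template: str) -> None:
        # Semantic function: fill the prompt with the input, then call the LLM.
        self.functions[name] = lambda text: self.llm(template.replace("{{$input}}", text)).strip()

    def invoke(self, name: str, text: str) -> str:
        return self.functions[name](text)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real completion endpoint.
    return " SigninLogs | project Location " if "sign-in locations" in prompt else " SigninLogs "


def exequery(kql: str) -> str:
    # Stand-in: a real version would send the query to the Log Analytics workspace.
    return f"ran: {kql}"


kernel = MiniKernel(fake_llm)
kernel.add_semantic("KQLquerySignin", "Write a KQL query over SigninLogs for: {{$input}}")
kernel.add_native("exequery", exequery)
query = kernel.invoke("KQLquerySignin", "Show all sign-in locations")
print(kernel.invoke("exequery", query))  # ran: SigninLogs | project Location
```

Because both kinds of function share one calling convention, the caller does not need to know whether a step runs code or asks the model.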

- Templatize Prompts

Templatizing prompts is an important part of semantic function development. Using Semantic Kernel's prompt template language, prompts are generated dynamically, which is more scalable and maintainable than hardcoding options into them.

The key points are:

- Templatizing prompts lets you constrain the output of a semantic function by supplying a list of options, making the function reusable across scenarios.

- You add variables to the prompt, such as `options` and `history`, to give the large language model (LLM) context and choices.

- The examples below show how variables like `history` and `options` are included in a prompt.

- A semantic function can also call nested functions. This breaks a prompt into smaller pieces, keeps the LLM focused, helps stay within token limits, and allows native code to be integrated.

Examples:

```
[History] {{$history}}
User: {{$input}}

---------------------------------------------

Provide the intent of the user. The intent should be one of the following: {{$options}}

INTENT:
```
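The sketch below shows what rendering this template involves and how the `options` list constrains the answer. It is a simplified stand-in for Semantic Kernel's template engine, not its implementation: `render`, `parse_intent`, and the option names are hypothetical, and treating unknown variables as empty strings is a choice made for this sketch.

```python
import re
from typing import Dict, List, Optional

# Matches {{$name}} placeholders, tolerating spaces inside the braces.
VAR = re.compile(r"\{\{\s*\$(\w+)\s*\}\}")

TEMPLATE = """[History] {{$history}}
User: {{$input}}

---------------------------------------------

Provide the intent of the user. The intent should be one of the following: {{$options}}

INTENT:"""


def render(template: str, variables: Dict[str, str]) -> str:
    """Replace each {{$name}} with its value; unknown names become empty strings."""
    return VAR.sub(lambda m: variables.get(m.group(1), ""), template)


def parse_intent(completion: str, options: List[str]) -> Optional[str]:
    """Accept the model's answer only if it is one of the allowed options."""
    answer = completion.strip().removeprefix("INTENT:").strip()
    return answer if answer in options else None


options = ["QuerySigninLogs", "SmallTalk"]
prompt = render(TEMPLATE, {
    "history": "User asked about failed logins.",
    "input": "Show all sign-in locations",
    "options": ", ".join(options),
})
print(prompt)
print(parse_intent("INTENT: QuerySigninLogs", options))  # QuerySigninLogs
print(parse_intent("I think they want the weather", options))  # None
```

Listing the options in the prompt steers the model, and checking the reply against the same list catches answers that drift outside it.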

We can leverage the `ConversationSummarySkill` plugin that's part of the core plugins package to summarize the conversation history before asking for the intent.

```
{{ConversationSummarySkill.SummarizeConversation $history}}
User: {{$input}}

---------------------------------------------

Provide the intent of the user. The intent should be one of the following: {{$options}}

INTENT:
```
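A nested call such as `{{ConversationSummarySkill.SummarizeConversation $history}}` runs a function first and splices its output into the outer prompt. The sketch below mimics that behavior in plain Python; it is not Semantic Kernel's parser. The regex, `render`, and `summarize_conversation` are hypothetical, and the summarizer just keeps the last line instead of calling the LLM as the real plugin would.

```python
import re
from typing import Callable, Dict

# Matches {{Plugin.Function $arg}} (nested call) or {{$arg}} (plain variable).
TOKEN = re.compile(r"\{\{\s*(?:(\w+\.\w+)\s+)?\$(\w+)\s*\}\}")

TEMPLATE = """{{ConversationSummarySkill.SummarizeConversation $history}}
User: {{$input}}

---------------------------------------------

Provide the intent of the user. The intent should be one of the following: {{$options}}

INTENT:"""


def render(template: str, variables: Dict[str, str],
           functions: Dict[str, Callable[[str], str]]) -> str:
    def expand(m: "re.Match[str]") -> str:
        func_name, var_name = m.group(1), m.group(2)
        value = variables.get(var_name, "")
        # A nested call runs first; its output is spliced into the outer prompt.
        return functions[func_name](value) if func_name else value
    return TOKEN.sub(expand, template)


def summarize_conversation(history: str) -> str:
    # Hypothetical stand-in for the real summarizer, which calls the LLM;
    # here we simply keep the most recent line.
    lines = history.strip().splitlines()
    return "Summary: " + (lines[-1] if lines else "")


prompt = render(
    TEMPLATE,
    {"history": "User: show failed logins\nBot: 12 failures\nUser: group them by country",
     "input": "only for last week",
     "options": "QuerySigninLogs, SmallTalk"},
    {"ConversationSummarySkill.SummarizeConversation": summarize_conversation},
)
print(prompt.splitlines()[0])  # Summary: User: group them by country
```

Because the history is condensed before it reaches the intent prompt, the outer prompt stays short no matter how long the conversation grows.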

In summary, templatizing prompts is a powerful technique in semantic function development, enhancing reusability and scalability by dynamically generating prompts based on variables and allowing nested function calls.

- Identifying the Source for Templatized Sections of the Prompt

Semantic Kernel, an SDK for building AI applications such as conversational systems, lets you fill templatized sections of a prompt from external data by using memory collections.

Understanding Templated Prompts

In Semantic Kernel, prompts and templated prompts are referred to as functions, clarifying their role as a fundamental unit of computation within the kernel. Templated prompts allow for dynamic generation of prompts based on variables.

Leveraging Memory Collections

Memory collections in Semantic Kernel store embeddings, numeric representations that capture the meaning of text from different data sources. The text can come from the web, e-mail providers, chats, a database, or your local directory, and it is connected to Semantic Kernel through data source connectors.

Integrating with External Data Sources

One Semantic Kernel sample app demonstrates this: it downloads a GitHub repo, stores it in memories (collections of embeddings), and lets you query it through a chat UI. You don't need to clone the repo or install its dependencies; you just provide the repo URL and the sample app does the rest.

Identifying the source for templatized sections of the prompt in Semantic Kernel involves leveraging memory collections and integrating with an external data source. This powerful feature enhances reusability and scalability, making it an essential tool in developing effective and efficient semantic functions.
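To show the idea behind a memory collection, the sketch below stores text with a vector and retrieves the closest match for a query, which could then fill a templatized section such as the table schema. It is a toy under stated assumptions: word counts stand in for a real embedding model, cosine similarity stands in for the vector store's search, and `MemoryCollection`, `save`, and `search` are hypothetical names, not Semantic Kernel's memory API.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real memory store would call an embedding model.
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryCollection:
    def __init__(self) -> None:
        self.records: List[Tuple[str, Counter]] = []

    def save(self, text: str) -> None:
        self.records.append((text, embed(text)))

    def search(self, query: str, limit: int = 1) -> List[str]:
        # Rank stored texts by similarity to the query and return the best matches.
        q = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(q, r[1]), reverse=True)
        return [text for text, _ in ranked[:limit]]


schemas = MemoryCollection()
schemas.save("SigninLogs columns: TimeGenerated, UserPrincipalName, Location, ResultType")
schemas.save("AuditLogs columns: TimeGenerated, OperationName, InitiatedBy, Result")
context = schemas.search("which columns hold sign-in location for a user")[0]
print(context)  # the SigninLogs entry, ready to drop into the prompt's schema slot
```

Swapping the toy `embed` for a real embedding service changes the quality of matches but not the shape of the save-then-search flow.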

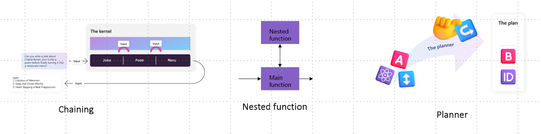

- Piecing It Together in Semantic Kernel

Semantic Kernel offers a powerful way to build complex conversational AI systems. Here are three key techniques:

Chaining Functions

Chaining functions is a technique where the output of one function is used as the input to another. This allows for complex workflows and can greatly enhance the capabilities of your conversational AI.
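A chain can be expressed as function composition where each step receives the previous step's output. In this sketch the three steps are hypothetical stand-ins for a KQL-generating semantic function, the native `exequery`, and a summarizing semantic function; they return fixed strings so the chain runs without a model or workspace.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Return a function that feeds each step's output into the next step."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)


def generate_kql(question: str) -> str:
    # Stand-in for a semantic function such as KQLquerySignin.
    return "SigninLogs | summarize count() by Location"


def exequery(kql: str) -> str:
    # Stand-in for the native function that runs the query.
    return "Location=Seattle count=3"


def explain(result: str) -> str:
    # Stand-in for a semantic function that turns raw rows into prose.
    return f"In plain English: {result}"


pipeline = chain(generate_kql, exequery, explain)
print(pipeline("Where do users sign in from?"))  # In plain English: Location=Seattle count=3
```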

Nested Functions

Nested functions are functions called from within another function, for example a call embedded in a prompt template whose output is inserted into the surrounding prompt. This keeps code modular and maintainable, because each function can focus on a specific task, and it lets you build more complex workflows from small pieces.

Semantic Planner

A planner determines the order in which functions should be called. Given a goal and the available functions, it uses the LLM to produce a plan (a sequence of function calls) that the kernel then executes. Getting this order right is crucial for the conversational AI to behave logically and coherently, and it depends on understanding the dependencies between functions.
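The planning loop can be sketched as: describe the available functions to the model, ask for an ordered list, reject unknown names, and run the steps in order. This is a simplified illustration, not a Semantic Kernel planner: Semantic Kernel's planners use their own prompts and plan formats, whereas this sketch assumes a JSON array for brevity, and `make_plan`, `run_plan`, and the stubbed model reply are hypothetical.

```python
import json
from typing import Callable, Dict, List


def make_plan(goal: str, functions: Dict[str, str], llm: Callable[[str], str]) -> List[str]:
    """Ask the model to order the available functions; reject unknown names."""
    catalog = "\n".join(f"- {name}: {desc}" for name, desc in functions.items())
    prompt = (f"Goal: {goal}\nAvailable functions:\n{catalog}\n"
              "Reply with a JSON array of function names in execution order.")
    plan = json.loads(llm(prompt))
    unknown = [step for step in plan if step not in functions]
    if unknown:
        raise ValueError(f"Plan uses unknown functions: {unknown}")
    return plan


def run_plan(plan: List[str], impls: Dict[str, Callable[[str], str]], text: str) -> str:
    for step in plan:
        text = impls[step](text)  # each step's output becomes the next step's input
    return text


descriptions = {"KQLquerySignin": "turn a question into a KQL query over SigninLogs",
                "exequery": "run a KQL query against the Log Analytics workspace"}
impls = {"KQLquerySignin": lambda q: "SigninLogs | take 10",
         "exequery": lambda kql: f"10 rows from: {kql}"}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call that returns a plan.
    return '["KQLquerySignin", "exequery"]'


plan = make_plan("Show recent sign-ins", descriptions, fake_llm)
print(run_plan(plan, impls, "Show recent sign-ins"))  # 10 rows from: SigninLogs | take 10
```

Validating the plan against the registry before running it guards against the model inventing functions that do not exist.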

In conclusion, chaining functions, using nested functions, and planning the sequence are powerful techniques for piecing together your conversational AI in Semantic Kernel. They allow for more complex workflows, more maintainable code, and a more logical and coherent conversational AI.

- Test

Testing OpenAI plugins is essential to ensure their reliability, security, and performance. Here are several key reasons why testing is crucial:

- Quality Assurance: Thorough testing helps identify and address any bugs or issues within the plugin. This ensures that the plugin functions as intended, providing a high-quality user experience. It helps in minimizing unexpected errors or failures.

- Security: Testing is vital for identifying vulnerabilities that could be exploited by malicious actors. Plugins often interact with sensitive data, so ensuring that they are secure and resistant to attacks is critical.

- User Experience: Testing helps in fine-tuning the user experience. By evaluating how the plugin responds to various inputs and scenarios, you can ensure that it delivers accurate and user-friendly results.

- Performance Optimization: Performance testing is essential to determine how the plugin behaves under different workloads. It helps in optimizing the plugin's efficiency and responsiveness, ensuring it can handle high usage without degradation.

- Compliance: Depending on the domain and data the plugin interacts with, it may need to adhere to specific regulations (e.g., GDPR, HIPAA). Testing helps ensure compliance with these regulations, which is crucial for avoiding legal issues.

- Integration Compatibility: OpenAI plugins often interact with other software and systems. Testing ensures that the plugin integrates seamlessly with the intended platforms and doesn't disrupt existing workflows.

- Edge Cases: Comprehensive testing should include edge cases to see how the plugin behaves under unusual or unexpected circumstances. This helps in identifying and addressing potential issues that might not be evident during standard testing.

- User Data Privacy: Privacy is a significant concern when using AI plugins. Thorough testing should confirm that the plugin handles user data with care, doesn't leak sensitive information, and adheres to privacy policies.

- Scalability: As usage grows, plugins need to scale efficiently. Performance testing should include scalability tests to ensure the plugin can handle increased loads without issues.
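Because the model writes the queries, one cheap test is to check every generated query before it runs and to keep a table of edge cases. The sketch below assumes this plugin's queries should be read-only and start from the SigninLogs table; `check_generated_kql` and the cases are hypothetical. It relies on the fact that Kusto management commands begin with a dot (for example `.drop table`), but it is a first line of defense, not a complete KQL validator, and it would reject legitimate queries that begin with a `let` statement.

```python
import re
from typing import List


def check_generated_kql(kql: str, table: str = "SigninLogs") -> List[str]:
    """Return a list of problems found in a model-generated query (empty if none)."""
    query = kql.strip()
    if not query:
        return ["empty query"]
    problems = []
    if query.startswith("."):
        # Kusto management (control) commands start with a dot, e.g. ".drop table".
        problems.append("management command, not a read-only query")
    if not re.match(rf"{re.escape(table)}\b", query):
        problems.append(f"query does not start from the {table} table")
    return problems


# Table-driven edge cases: input query -> expected problems.
CASES = {
    'SigninLogs | where ResultType != "0"': [],
    ".drop table SigninLogs": ["management command, not a read-only query",
                               "query does not start from the SigninLogs table"],
    "   ": ["empty query"],
    "AuditLogs | take 5": ["query does not start from the SigninLogs table"],
    "SigninLogsArchive | take 5": ["query does not start from the SigninLogs table"],
}

for query, expected in CASES.items():
    assert check_generated_kql(query) == expected, query
print("all edge cases passed")
```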

- Deploy as Azure Function

Refer to the Semantic Kernel article "How to Deploy Semantic Kernel to Azure in Minutes" (microsoft.com).

- Publish as a Plugin or Use It as a Standalone Application

In this development process, we've demonstrated how OpenAI Studio and other components can streamline the task of running natural language queries against log analytics, enhancing efficiency and making complex tasks more accessible. With this process in hand, you can tackle various tasks that demand language understanding and automate them, saving time and resources.
