Azure PowerShell Functions - connect SFTP and Storage Account


Background

I recently worked on a case in which a customer wanted to use an Azure PowerShell Function to work with a Storage Account and an SFTP server. Customers choose this approach for a few reasons:

- PowerShell allows IT admins to apply system-level integration with other components more easily.

- Azure Functions make it convenient to run maintenance tasks on an HTTP (URL) or timer trigger.

Demand Analysis

We start with the demand analysis. The task breaks down into the following parts:

- Pull the required file contents from the Azure Storage Account and store them inside the Azure Function App.

- Upload the files to another VM via SFTP.

- Apply proper authentication when working with Azure resources, to keep the solution secure.

Sample Code

If you are looking for sample code to get started quickly, see the link below:

https://github.com/SuperChenSSS/GrabSA_SendSFTP

The credentials and sensitive data in the project have been removed, so you can use it directly after filling in your own values. For more details about the implementation, see the sections below.

Prepare for authentication

To work with different Azure resources, we need to grant access to the Azure Function itself. The solution uses:

- Key Vault to retrieve the SFTP login credentials.

- Managed Identity to allow Azure Functions to work with Key Vault and Storage Account.

- Service Principal for local debugging during the test phase.

You can expand to learn more details, or skip this part if you have already configured these.

Key Vault

Key Vault stores credentials so that they can be retrieved securely at run time instead of being hard-coded. For details on how to store and retrieve secrets, see the links below:

Quickstart - Set & retrieve a secret from Key Vault using PowerShell

Azure Quickstart - Set and retrieve a secret from Key Vault using Azure portal

# Azure KeyVault

$kvName = "kvSFTP0"

# SFTP credentials

$sftpServer = Get-AzKeyVaultSecret -VaultName $kvName -Name "sftpServer" -AsPlainText

$sftpUsername = Get-AzKeyVaultSecret -VaultName $kvName -Name "sftpUsername" -AsPlainText

$sftpPassword = Get-AzKeyVaultSecret -VaultName $kvName -Name "sftpPassword" -AsPlainText
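The password retrieved above is later converted back into a SecureString for the SFTP credential. As a minimal sketch of an alternative, the password can stay a SecureString end to end: when `-AsPlainText` is omitted, `Get-AzKeyVaultSecret` returns an object whose `SecretValue` property is already a SecureString. The helper name `New-SftpCredential` is hypothetical and not part of the sample project.

```powershell
# Hypothetical helper: builds a PSCredential from a user name and a SecureString password.
function New-SftpCredential {
    param(
        [Parameter(Mandatory)] [string] $UserName,
        [Parameter(Mandatory)] [securestring] $Password
    )
    [pscredential]::new($UserName, $Password)
}

# Usage inside the function app (vault and secret names are the ones used in the sample):
# $user   = Get-AzKeyVaultSecret -VaultName $kvName -Name 'sftpUsername' -AsPlainText
# $secret = Get-AzKeyVaultSecret -VaultName $kvName -Name 'sftpPassword'
# $cred   = New-SftpCredential -UserName $user -Password $secret.SecretValue
```

The resulting credential can be passed to `New-SFTPSession -Credential` without the password ever appearing as plain text in the script.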

Managed Identity

Managed Identity allows the Azure Function App to authenticate to other Azure resources without storing credentials. Normally you would need to maintain several credentials to obtain the required permissions; with a managed identity, far less configuration is needed.

Please refer to the links below for detailed steps:

Managed identities - Azure App Service

Tutorial: Use a managed identity to access Azure Key Vault - Windows - Microsoft Entra

No code-level implementation is needed for managed identity.

Service Principal

In most cases, you will want to test the code before publishing to Azure Functions to make sure it works as expected. Managed Identity is not available when debugging locally, so for local runs we authenticate with a Service Principal instead.

A Service Principal is another way to authenticate; it uses an application ID, a secret and a tenant ID to obtain its permissions. Follow the steps below:

- Create a Service Principal that can access storage via Azure Active Directory (AAD). See: Access storage with Azure Active Directory

- Go to Key Vault → Access control (IAM) and assign the Key Vault Contributor role to the newly created Service Principal, so that it has permissions on both the Storage Account and the Key Vault. Depending on the vault's permission model, reading secret values may also require a data-plane permission, such as the Key Vault Secrets User role or a Get secret access policy.

- Sign in with the Service Principal in the code.

# ServicePrincipal for Local Testing

$AppId = $ENV:APPID

$AppSecret = $ENV:APPSECRET

$TenantID = $ENV:TENANTID

$SecureSecret = $AppSecret | ConvertTo-SecureString -AsPlainText -Force

$Credential = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList $AppId,$SecureSecret

Connect-AzAccount -ServicePrincipal -Credential $Credential -Tenant $TenantID

Write-Host "Service Principal Connected"

Note that the code reads local environment variables, which you can configure in the local.settings.json file in the project folder. To find the values, search for your Service Principal name in the Azure portal: the application (client) ID and tenant ID appear on the Overview page, and the client secret is created under Certificates & secrets.

Before publishing to Azure, comment out this section so that the function app uses its managed identity instead.
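As an alternative to commenting the block out by hand, the choice can be made at run time. The sketch below is one possible approach, not the sample project's method: `Get-AuthMode` and `Connect-AzureForFunction` are hypothetical names. It assumes that the `MSI_SECRET` environment variable is present when a managed identity is enabled on the function app (the `profile.ps1` generated by the PowerShell Functions template typically checks the same variable before calling `Connect-AzAccount -Identity`), and that `APPID`, `APPSECRET` and `TENANTID` are set only in local.settings.json.

```powershell
# Hypothetical helper: decides which sign-in path to use from a table of settings.
function Get-AuthMode {
    param([Parameter(Mandatory)] [hashtable] $Settings)

    # Prefer the managed identity whenever the platform exposes one.
    if ($Settings['MSI_SECRET']) { return 'ManagedIdentity' }

    # Fall back to the Service Principal only if all three values are present.
    $missing = 'APPID', 'APPSECRET', 'TENANTID' |
        Where-Object { [string]::IsNullOrWhiteSpace($Settings[$_]) }
    if (-not $missing) { return 'ServicePrincipal' }

    return 'None'
}

# Hypothetical wrapper: call this at the top of run.ps1 instead of the block above.
function Connect-AzureForFunction {
    $settings = @{
        APPID      = $env:APPID
        APPSECRET  = $env:APPSECRET
        TENANTID   = $env:TENANTID
        MSI_SECRET = $env:MSI_SECRET
    }
    switch (Get-AuthMode -Settings $settings) {
        'ManagedIdentity' {
            # Assumption: profile.ps1 has already signed in with the managed identity.
            Write-Host 'Using managed identity'
        }
        'ServicePrincipal' {
            $secureSecret = ConvertTo-SecureString $env:APPSECRET -AsPlainText -Force
            $credential = [pscredential]::new($env:APPID, $secureSecret)
            Connect-AzAccount -ServicePrincipal -Credential $credential -Tenant $env:TENANTID | Out-Null
            Write-Host 'Service Principal connected'
        }
        default { throw 'No managed identity or Service Principal settings were found.' }
    }
}
```

With this in place, the same code can run locally and in Azure, provided the Service Principal settings are never added to the function app's application settings.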

Once this is ready, we can move forward to connect to storage and publish via SFTP.

Connect to Storage Account

This part deals with downloading blob files from the Storage Account into the Azure Function. We will use the Az.Storage PowerShell module to achieve this.

# Azure Storage info

$kvName = ""

$storageAccountName = ""

$containerName = ""

$blob_name = ""

# Interact with query parameters or the body of the request.

$cName = $Request.Query.cName

$bName = $Request.Query.bName

$saName = $Request.Query.saName

if (-not $cName -or -not $bName -or -not $saName) {

    # Fall back to the defaults above when any query parameter is missing

    $body = "One of the required parameters (saName, cName, bName) is missing. Using the default storage account, container and blob: $storageAccountName, $containerName, $blob_name.`nSpecify saName, cName and bName in the query parameters to override."

    Write-Host $body

} else {

    $containerName = $cName

    $blob_name = $bName

    $storageAccountName = $saName

    $body = "Downloading from the following storage account, container and blob: $storageAccountName, $containerName, $blob_name"

    Write-Host $body

}

# Connect and download from storage

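# -UseConnectedAccount makes the OAuth sign-in (managed identity or Service Principal) explicit

$ctx = New-AzStorageContext -StorageAccountName $storageAccountName -UseConnectedAccount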

$blob = Get-AzStorageBlob -Context $ctx -Container $containerName -Blob $blob_name

$sourcePath = "../" + $blob.Name # Store Location in Azure Function

Get-AzStorageBlobContent -Context $ctx -Container $containerName -Blob $blob.Name -Destination $sourcePath -Force # Download

Note that for this task I retrieve only a single blob from the Storage Account; the module lets you do much more than this. References for the Az.Storage cmdlets used:

New-AzStorageContext (Az.Storage)

Get-AzStorageBlob (Az.Storage)

Get-AzStorageBlobContent (Az.Storage)
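The parameter handling and download path above can also be factored into small helpers, which makes them easier to test in isolation. The following is a minimal sketch under a few assumptions: the function names `Resolve-BlobTarget` and `Get-LocalBlobPath` are hypothetical, and storing the file under the system temp folder (rather than `../`) is my own choice, not something the sample project does.

```powershell
# Hypothetical helper: uses the query values only when all three are supplied,
# otherwise returns the defaults, mirroring the if/else in the sample.
function Resolve-BlobTarget {
    param(
        [Parameter(Mandatory)] [System.Collections.IDictionary] $Query,
        [Parameter(Mandatory)] [hashtable] $Defaults
    )
    if ($Query.saName -and $Query.cName -and $Query.bName) {
        return @{
            StorageAccountName = $Query.saName
            ContainerName      = $Query.cName
            BlobName           = $Query.bName
        }
    }
    return $Defaults
}

# Hypothetical helper: maps a blob name to a local file path.
# Blob names may contain "/" (virtual folders), so only the last segment is kept.
function Get-LocalBlobPath {
    param(
        [Parameter(Mandatory)] [string] $BlobName,
        [string] $Root = [System.IO.Path]::GetTempPath()
    )
    Join-Path -Path $Root -ChildPath (Split-Path -Path $BlobName -Leaf)
}

# Usage inside run.ps1 (cmdlets and variables are the ones used in the sample):
# $target    = Resolve-BlobTarget -Query $Request.Query -Defaults @{
#                  StorageAccountName = $storageAccountName
#                  ContainerName      = $containerName
#                  BlobName           = $blob_name }
# $localPath = Get-LocalBlobPath -BlobName $target.BlobName
# Get-AzStorageBlobContent -Context $ctx -Container $target.ContainerName `
#     -Blob $target.BlobName -Destination $localPath -Force
```

Keeping only the last segment of the blob name avoids having to create nested local folders for blobs stored under virtual directories.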

Publish file contents with SFTP

SSH File Transfer Protocol (SFTP, often called Secure File Transfer Protocol) is a safer way to transfer files than FTP, and is commonly used for enterprise-level publishing. To connect over SFTP, we can use the default port 22, or a custom port if the server is configured for one.

# Use Posh-SSH module to create and use SFTP session

Import-Module Posh-SSH

# SFTP credentials

$sftpServer = Get-AzKeyVaultSecret -VaultName $kvName -Name "sftpServer" -AsPlainText

$sftpUsername = Get-AzKeyVaultSecret -VaultName $kvName -Name "sftpUsername" -AsPlainText

$sftpPassword = Get-AzKeyVaultSecret -VaultName $kvName -Name "sftpPassword" -AsPlainText

$sftpPort = 22

# Establish SFTP session

$session = New-SFTPSession -ComputerName $sftpServer -Port $sftpPort -Credential (New-Object System.Management.Automation.PSCredential($sftpUsername, (ConvertTo-SecureString $sftpPassword -AsPlainText -Force))) -AcceptKey

# Check whether the connection succeeded

if (-not $session -or -not $session.Connected -or ($session.Host -ne $sftpServer)) {

    Write-Host "Connected: $($session.Connected), Host: $($session.Host)"

    Write-Host "SFTP server connectivity failed."

    exit 1

}

# Upload each file to the SFTP server

$destinationPath = "/" #SFTP Server Location

$sourcePath = "../" + $blob.Name # Store Location in Azure Function

Set-SFTPItem -SessionId $session.SessionId -Path $sourcePath -Destination $destinationPath -Force # Upload to SFTP Server

# Close the SFTP session

Remove-SFTPSession -SessionId $session.SessionId
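In the sample above, the session is only removed if the upload succeeds. One way to make sure it is always closed is to wrap the same three Posh-SSH cmdlets in `try`/`finally`. This is a sketch rather than the sample project's code: the function name `Send-FileOverSftp` is hypothetical, and it assumes Posh-SSH has already been imported as shown above.

```powershell
# Hypothetical wrapper around the Posh-SSH cmdlets used in the sample.
# Assumes "Import-Module Posh-SSH" has already run.
function Send-FileOverSftp {
    param(
        [Parameter(Mandatory)] [string] $Server,
        [Parameter(Mandatory)] [pscredential] $Credential,
        [Parameter(Mandatory)] [string] $Path,
        [string] $Destination = '/',
        [int] $Port = 22
    )

    $session = $null
    try {
        # -ErrorAction Stop turns connection and upload failures into terminating errors
        $session = New-SFTPSession -ComputerName $Server -Port $Port `
            -Credential $Credential -AcceptKey -ErrorAction Stop
        Set-SFTPItem -SessionId $session.SessionId -Path $Path `
            -Destination $Destination -Force -ErrorAction Stop
    }
    finally {
        # Runs on success and on failure, so the session is not left open
        if ($session) {
            Remove-SFTPSession -SessionId $session.SessionId | Out-Null
        }
    }
}

# Usage (variables are the ones defined in the sample):
# $cred = [pscredential]::new($sftpUsername, (ConvertTo-SecureString $sftpPassword -AsPlainText -Force))
# Send-FileOverSftp -Server $sftpServer -Credential $cred -Path $sourcePath -Destination '/'
```

Because failures surface as exceptions, the caller can catch them and return an error response from the function instead of calling `exit`.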

Rather than invoking sftp from an SSH shell, the function creates the SFTP session in PowerShell. The sample uses the Posh-SSH module to create and use the session; see the Posh-SSH documentation for New-SFTPSession, Set-SFTPItem and Remove-SFTPSession.

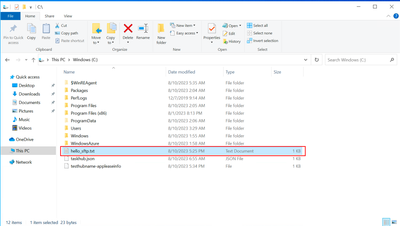

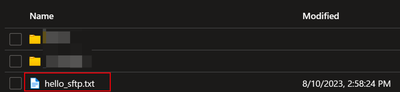

Result

As the screenshots above show, the function retrieves the blob file and sends it to the VM via SFTP.

Conclusion

Before publishing this post, I did some research and found very few posts covering this implementation, even though it is a common scenario for IT admins who want to use Azure Functions for periodic maintenance tasks.

I hope the explanation is helpful. Feel free to let me know if you have any further questions. Thank you.
