Apps on Azure Blog

Introduction and Deployment of Backstage on Azure Kubernetes Service


Introduction to Backstage

Backstage, originally created by a small team during a hack week, is now a CNCF Incubation project. Incubation status means the project is considered stable and is being used successfully in production, at scale, by enterprises. Backstage is a platform for building developer portals, which act as an operational platform between developers and the services they need to deploy. Developer portals can accelerate developer velocity by providing push-button deployment of production-ready services and infrastructure.

In this walkthrough we will learn what Backstage is, as well as how we can build it and deploy it on Azure using several services, including:

- Azure Kubernetes Service

- Azure Container Registry

- Azure Database for PostgreSQL

- Microsoft Entra ID

Backstage Features:

- Software Catalogue: Backstage's software catalogue shows developers the services available to them, including key information such as the connected repository, the service owners, the latest build and the APIs exposed.

- Software Templates: Software Templates let users create components within Backstage by loading a template, setting its variables and publishing the result to GitHub.

- TechDocs: Backstage centralises documentation under TechDocs, which lets engineers write Markdown files alongside development so that the documentation lives with the service code.

- Integrations: Backstage integrations let you pull data from, or publish data to, external sources; GitHub and Azure are both supported, including as identity providers.

- Plugins: One of the best parts of Backstage is its open source plugin library, designed to let you extend Backstage's core feature set. Notable plugins include the Azure Resources plugin, which shows the status of deployed services related to a component, and the Argo CD plugin, which shows the status of your services' GitOps deployments.

- Search: Finally, tying all these features together, Backstage's search enables document and service discovery across the platform.

This means that deployment, documentation and search are all integrated into a single portal. Using the Kubernetes plugin, Backstage can also centralise services deployed across multiple clusters into one dashboard, abstracting the underlying cloud away from software developers while surfacing the important information about each deployment's status.

Backstage Architecture

Backstage is split into three parts, reflecting the three different groups of contributors who work on each one.

- Core - Base functionality built by core developers in the open source project.

- App - The app is an instance of a Backstage app that is deployed and tweaked. The app ties together core functionality with additional plugins. The app is built and maintained by app developers, usually a productivity team within a company.

- Plugins - Additional functionality to make your Backstage app useful for your company. Plugins can be specific to a company or open sourced and reusable.

The App layer hints at why Backstage itself does not provide a Docker image for deployment: it is assumed that every enterprise will need to customise its App. This means we have to build our own image, which we will cover later in this article.

The system architecture for Backstage is flexible and will differ depending on your requirements, so I would advise reviewing the material here: Architecture overview | Backstage Software Catalog and Developer Platform to make an informed decision. The three components are the frontend (the Backstage UI), the backend (core and plugin app logic, plus a proxy) and a database, for which PostgreSQL is recommended in production. Backstage also supports an optional cache. You may want to decouple your frontend and backend so the frontend can be hosted statically; Backstage supports this, although it limits the features of the backend plugin API. In this example we will deploy both in a single container. It is advisable to run your database outside of your cluster.

Getting Started with Backstage on AKS

Now that we know a bit more about Backstage and how it works, let's look at how we can build it, set it up and integrate it with a variety of Azure services.

Creating your Database

To start with, we need to create our PostgreSQL database. As we are already building in Azure, we will use the fully managed Azure Database for PostgreSQL flexible server as our Backstage database. This service is simple to set up and manage; enterprises deploying into production should refer to their own internal data standards.

First, let's open our terminal of choice and make sure we are logged in with the correct Azure subscription set:

```shell
az login
az account set --subscription <subscription id>
```

Now let's create the resource group for this project:

```shell
az group create --name backstage --location eastus
```

We can now create our server. We will append our own initials to our server name to avoid conflicts. One server can contain multiple databases:

```shell
az postgres flexible-server create --name backstagedb-{your initials} --resource-group backstage
```

Since the default connectivity method is public access (allowed IP addresses), the command will prompt you to confirm whether you want to add your IP address and/or all IPs (the range 0.0.0.0 through 255.255.255.255) to the list of allowed addresses. For this blog I will add my own IP address when prompted. This will be the same authorised IP that our AKS cluster will use. In production it is advisable to use fully private networking with VNet integration and private endpoints.

The server created has the following attributes:

- The same location as your resource group

- Auto-generated admin username and admin password (which you should save in a secure place)

- A default database named "flexibleserverdb"

- Service defaults for the remaining server configuration: compute tier (General Purpose), compute size/SKU (Standard_D2s_v3: 2 vCores, 8 GB RAM), backup retention period (7 days) and PostgreSQL version (13)
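The server name becomes part of a public DNS name, which is why it must be unique and why we append our initials. The sketch below is a hypothetical helper (not part of any Azure SDK) that checks a proposed name against the flexible server naming rules as I understand them (3 to 63 characters; lowercase letters, digits and hyphens; no leading or trailing hyphen) and builds the hostname we will later use with psql and in Backstage's config. "abc" stands in for your initials, and the current Azure naming rules should be treated as the source of truth rather than this regex.

```typescript
// Hypothetical helper: validate a flexible server name (rules as I understand
// them) and return the hostname clients connect to.
function postgresServerHost(serverName: string): string {
  // First and last characters: lowercase letter or digit.
  // Middle: 1-61 lowercase letters, digits or hyphens (3-63 chars in total).
  const pattern = /^[a-z0-9][a-z0-9-]{1,61}[a-z0-9]$/;
  if (!pattern.test(serverName)) {
    throw new Error(`"${serverName}" is not a valid flexible server name`);
  }
  return `${serverName}.postgres.database.azure.com`;
}

// "abc" is a placeholder for your initials.
console.log(postgresServerHost("backstagedb-abc"));
// backstagedb-abc.postgres.database.azure.com
```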

Once it is deployed, we can check the connection details for our server:

```shell
az postgres flexible-server show --name backstagedb-{your initials} --resource-group backstage
```

Make a note of the admin username shown near the top of the command's output.

We can then change the admin password of the server.

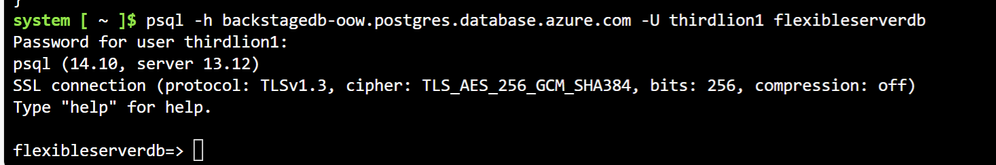

```shell
az postgres flexible-server update --resource-group backstage --name backstagedb-{your initials} --admin-password <new-password>
```

We can now test connecting from our command line using psql. If you are using Cloud Shell, psql is already installed; if not, you will need to install the PostgreSQL client first. You can then connect to the database with your admin user and the admin password you have just set.

```shell
psql -h backstagedb-{your initials}.postgres.database.azure.com -U {your generated admin user} flexibleserverdb
```

Provided everything has been configured correctly, you should now be logged in and able to verify that your database is running.

You can type "\q" to exit psql.

Let's now add a database that will be referenced by Backstage. We can do this with the following command:

```shell
az postgres flexible-server db create --resource-group backstage --server-name backstagedb-{your initials} --database-name backstage_plugin_catalog
```
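The database name follows what I understand to be Backstage's default convention with the pg client: each backend plugin gets its own database, named with a backstage_plugin_ prefix followed by the plugin ID (the prefix is configurable through backend.database.prefix). As I understand it, Backstage tries to create any missing plugin databases on start-up if the configured user has permission; otherwise each one would need creating as we did for the catalog. A small sketch of the naming, with an illustrative list of plugin IDs that is an assumption rather than an exhaustive list:

```typescript
// Assumption: models Backstage's default per-plugin database naming;
// this is an illustration, not Backstage's own code.
const DEFAULT_DATABASE_PREFIX = "backstage_plugin_";

function pluginDatabaseName(
  pluginId: string,
  prefix: string = DEFAULT_DATABASE_PREFIX,
): string {
  return `${prefix}${pluginId}`;
}

// Illustrative backend plugin IDs in a scaffolded app.
const pluginIds = ["catalog", "auth", "scaffolder", "search"];
console.log(pluginIds.map((id) => pluginDatabaseName(id)).join(", "));
// backstage_plugin_catalog, backstage_plugin_auth, backstage_plugin_scaffolder, backstage_plugin_search
```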

To keep connecting from our application simple, we will not require secure (TLS) connections to our database in this article. This means we need to update a server parameter as follows:

```shell
az postgres flexible-server parameter set --resource-group backstage --server-name backstagedb-{your initials} --name require_secure_transport --value off
```

Building Backstage

Things now get slightly more complicated. As mentioned earlier, Backstage does not ship with an image that can be deployed into your cluster, because of the degree of customisation required, such as the database connection and plugins. This means we have to build Backstage ourselves.

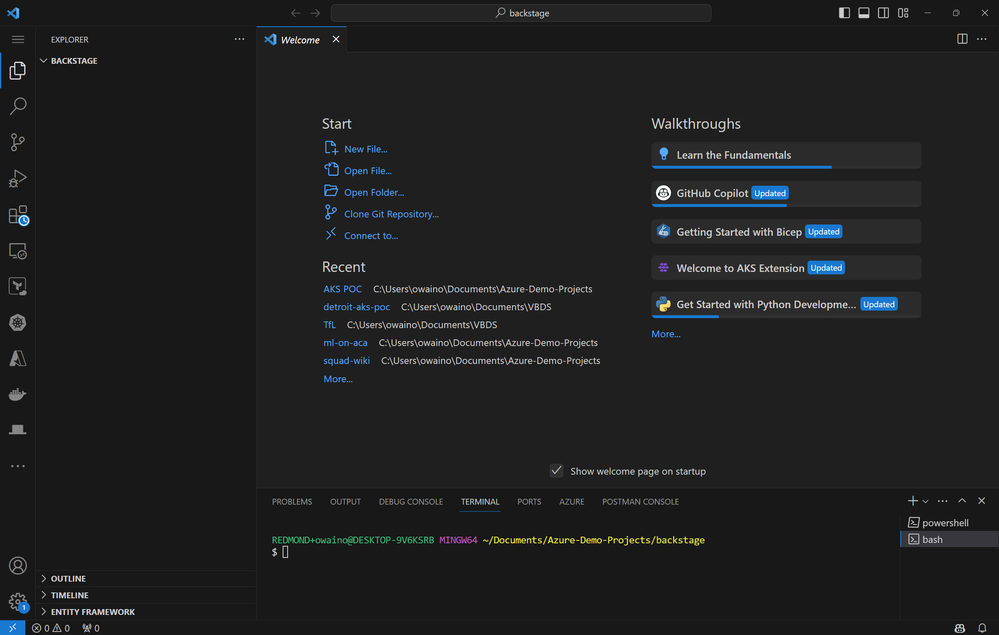

Backstage is built with Node.js. For the next steps you can use Cloud Shell or an IDE of your choice. For readability I will use Visual Studio Code, as we need to make some changes to files and this will be easier for those unfamiliar with Vi/Vim.

To start, I created a new folder, opened the empty directory in Visual Studio Code and opened a WSL terminal.

Within the WSL terminal we then run the following command, which scaffolds a new Backstage app in a subdirectory of the directory we run it in (when prompted for the app name, keep the default, backstage):

```shell
npx @backstage/create-app@latest
```

Once this is complete, we can test the demo version of the application locally with the following commands:

```shell
cd backstage
yarn dev
```

Once we have verified that Backstage runs locally, we can add the PostgreSQL client to our backend:

```shell
# From your Backstage root directory
yarn --cwd packages/backend add pg
```

Our next step is to add our database config to the application. To do this, open app-config.yaml in the root directory of our Backstage app and add our PostgreSQL configuration, using the connection details from the previous steps:

Host: backstagedb-{your initials}.postgres.database.azure.com

Port: 5432

```yaml
backend:
  database:
    # Delete these two existing lines:
    #   client: better-sqlite3
    #   connection: ':memory:'
    # ...and add all of the lines below in their place.
    # config options: https://node-postgres.com/apis/client
    client: pg
    connection:
      host: ${POSTGRES_HOST}
      port: ${POSTGRES_PORT}
      user: ${POSTGRES_USER}
      password: ${POSTGRES_PASSWORD}
      # https://node-postgres.com/features/ssl
      # ssl:
      #   host is only needed if the connection name differs from the certificate name.
      #   This is for example the case with CloudSQL.
      #   host: servername in the certificate
      #   ca:
      #     $file: <file-path>/server.pem
      #   key:
      #     $file: <file-path>/client.key
      #   cert:
      #     $file: <file-path>/client-cert.pem
```

The ${...} placeholders are read from environment variables when Backstage starts. For the sake of this demo we will instead hard-code our user and password as values in the YAML file. This is not advisable in production; please use your organisation's accepted application configuration method when deploying into production.
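To make the placeholder mechanism concrete, here is a simplified model of ${VAR} substitution in a config value. It is not Backstage's implementation: it deliberately fails fast on an unset variable, whereas Backstage's own handling of missing variables may differ. In practice the environment would be process.env; a plain object is used here so the example is self-contained, and the hostname uses "abc" as placeholder initials.

```typescript
// Simplified model of ${VAR} substitution in app-config.yaml values
// (an illustration only, not Backstage's config loader).
function substituteEnv(
  value: string,
  env: Record<string, string | undefined>,
): string {
  return value.replace(
    /\$\{([A-Za-z_][A-Za-z0-9_]*)\}/g,
    (_match: string, name: string) => {
      const resolved = env[name];
      if (resolved === undefined) {
        // Fail fast so a missing secret is noticed at start-up.
        throw new Error(`Environment variable ${name} is not set`);
      }
      return resolved;
    },
  );
}

const demoEnv = {
  POSTGRES_HOST: "backstagedb-abc.postgres.database.azure.com",
  POSTGRES_PORT: "5432",
};
console.log(substituteEnv("${POSTGRES_HOST}:${POSTGRES_PORT}", demoEnv));
// backstagedb-abc.postgres.database.azure.com:5432
```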

If you created your PostgreSQL server from Cloud Shell and have now moved to your local machine, you will need to add your local machine's IP address to the authorised IP range in the server's firewall, either under the Networking tab in the portal or through the CLI.

Authentication

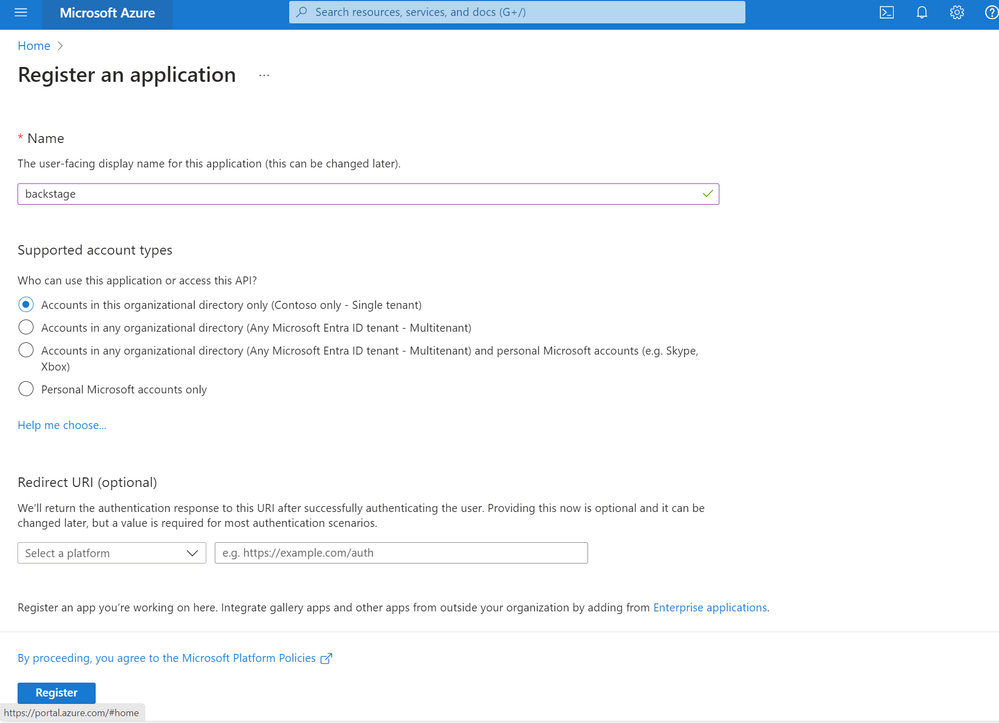

Now that we have set up and connected our database, we can move on to authentication, for which we will use our Microsoft Entra ID tenant. This can be done through the CLI, but for readability I will create the app registration and related settings through the portal. To start, open your Microsoft Entra ID tenant and select "App registrations".

We then select "New registration" and provide a name such as "Backstage". We can keep the supported account types limited to this single tenant and, for now, enter our local development URL as the redirect URI:

http://localhost:7007/api/auth/microsoft/handler/frame
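The redirect URI is the backend's base URL followed by the /api/auth/microsoft/handler/frame path, so it has to change whenever backend.baseUrl does (for example when moving to a custom domain). A hypothetical helper that derives it, assuming the path stays as registered above; "backstage.contoso.com" is an illustrative domain:

```typescript
// Hypothetical helper: build the Entra ID redirect URI from backend.baseUrl,
// assuming the /api/auth/microsoft/handler/frame path used in this article.
function microsoftRedirectUri(backendBaseUrl: string): string {
  const base = new URL(backendBaseUrl);
  // Strip trailing slashes so we never produce "//api/...".
  const basePath = base.pathname.replace(/\/+$/, "");
  return `${base.origin}${basePath}/api/auth/microsoft/handler/frame`;
}

console.log(microsoftRedirectUri("http://localhost:7007"));
// http://localhost:7007/api/auth/microsoft/handler/frame
console.log(microsoftRedirectUri("https://backstage.contoso.com/"));
// https://backstage.contoso.com/api/auth/microsoft/handler/frame
```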

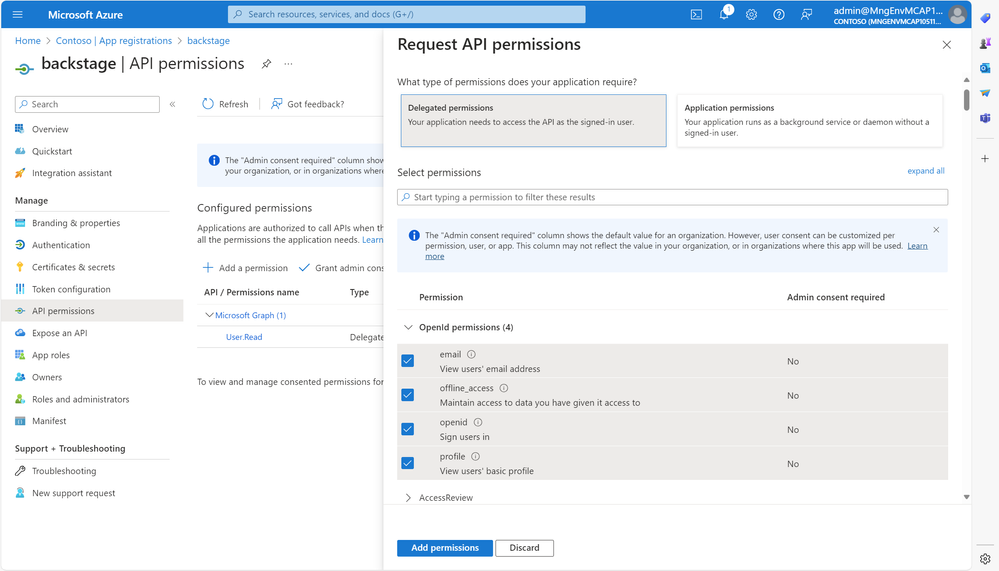

Next, go to API permissions for the app registration we have created and add the following delegated Microsoft Graph permissions:

- offline_access

- openid

- profile

- User.Read

We also need to add some application permissions so that we can onboard our users to Backstage later. To do this, follow the same steps as before, but select Application permissions instead of Delegated permissions. Here we need to add two permissions:

- User.Read.All

- GroupMember.Read.All

You may need to grant admin consent to the application if your organisation requires it. Once the permissions are added, this can be done through the portal by clicking the "Grant admin consent for ___" button. You may want to do this anyway, so that users do not need to provide consent individually on first sign-in.

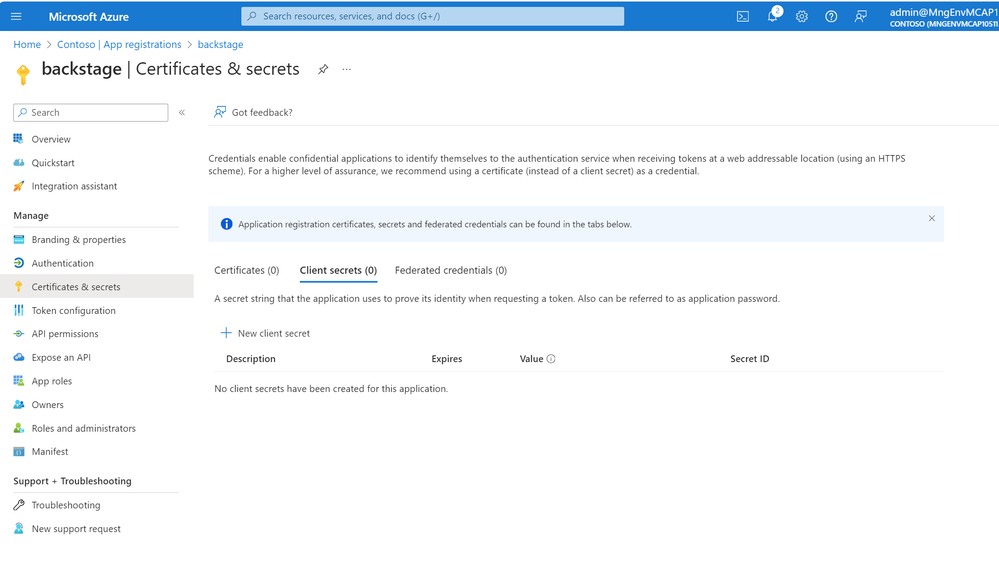

Next, we need to add a client secret to this app registration for use in our application config. To do this, go to Certificates & secrets in your app registration. Once the secret is created, make a note of its value, as it is only shown once.

We now need to add these details to our application config. This goes in the same app-config.yaml file as before, under the auth key. Add the following block:

```yaml
auth:
  environment: development
  providers:
    microsoft:
      development:
        clientId: ${AZURE_CLIENT_ID}
        clientSecret: ${AZURE_CLIENT_SECRET}
        tenantId: ${AZURE_TENANT_ID}
        domainHint: ${AZURE_TENANT_ID}
        additionalScopes:
          - Mail.Send
```

As with the database connection, I will hard-code the values in the file. For production it is again recommended to pass these in as secrets.

We also need to make some changes to the application source code to add the frontend sign-in page. First, add the following imports to packages/app/src/App.tsx:

```tsx
import { microsoftAuthApiRef } from '@backstage/core-plugin-api';
import { SignInPage } from '@backstage/core-components';
```

We then need to add another block further down the same file. Search for "const app = createApp" and then, below "apis,", paste the following block:

```tsx
components: {
  SignInPage: props => (
    <SignInPage
      {...props}
      auto
      provider={{
        id: 'microsoft-auth-provider',
        title: 'Microsoft',
        message: 'Sign in using Microsoft',
        apiRef: microsoftAuthApiRef,
      }}
    />
  ),
},
```

For example, the full createApp block should look like this:

```tsx
const app = createApp({
  apis,
  components: {
    SignInPage: props => (
      <SignInPage
        {...props}
        auto
        provider={{
          id: 'microsoft-auth-provider',
          title: 'Microsoft',
          message: 'Sign in using Microsoft',
          apiRef: microsoftAuthApiRef,
        }}
      />
    ),
  },
  bindRoutes({ bind }) {
    bind(catalogPlugin.externalRoutes, {
      createComponent: scaffolderPlugin.routes.root,
      viewTechDoc: techdocsPlugin.routes.docRoot,
      createFromTemplate: scaffolderPlugin.routes.selectedTemplate,
    });
    bind(apiDocsPlugin.externalRoutes, {
      registerApi: catalogImportPlugin.routes.importPage,
    });
    bind(scaffolderPlugin.externalRoutes, {
      registerComponent: catalogImportPlugin.routes.importPage,
      viewTechDoc: techdocsPlugin.routes.docRoot,
    });
    bind(orgPlugin.externalRoutes, {
      catalogIndex: catalogPlugin.routes.catalogIndex,
    });
  },
});
```

The other addition we must make to our application code is the Microsoft sign-in resolver. I could not find this clearly covered in the Backstage authentication documentation, so this should save you the time I spent figuring out why my sign-in was failing. We need to remove the GitHub example block from the packages/backend/src/plugins/auth.ts file and replace it. I have pasted the full file below:

```typescript
import {
  createRouter,
  providers,
  defaultAuthProviderFactories,
} from '@backstage/plugin-auth-backend';
import { Router } from 'express';
import { PluginEnvironment } from '../types';

export default async function createPlugin(
  env: PluginEnvironment,
): Promise<Router> {
  return await createRouter({
    logger: env.logger,
    config: env.config,
    database: env.database,
    discovery: env.discovery,
    tokenManager: env.tokenManager,
    providerFactories: {
      ...defaultAuthProviderFactories,
      microsoft: providers.microsoft.create({
        signIn: {
          resolver:
            providers.microsoft.resolvers.emailMatchingUserEntityAnnotation(),
        },
      }),
    },
  });
}
```
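The resolver above only signs someone in if it can find a matching User entity in the catalog. As I understand it, emailMatchingUserEntityAnnotation looks for a User whose microsoft.com/email annotation equals the email address returned by Entra ID. The sketch below is a simplified, self-contained model of that lookup, not Backstage's code: the entity shape is trimmed to the fields it needs, matching is exact, and the sample user is hypothetical.

```typescript
// Trimmed-down shape of a catalog User entity (assumption: real entities
// carry many more fields).
interface UserEntity {
  kind: "User";
  metadata: {
    name: string;
    namespace?: string;
    annotations?: Record<string, string>;
  };
}

// Annotation set on users ingested from Microsoft Graph (as I understand it).
const EMAIL_ANNOTATION = "microsoft.com/email";

// Simplified model of email-annotation matching: return the entity ref of the
// User whose annotation equals the signed-in email.
function resolveSignIn(email: string, users: UserEntity[]): string {
  const match = users.find(
    (user) => user.metadata.annotations?.[EMAIL_ANNOTATION] === email,
  );
  if (!match) {
    // No ingested User matches: the "User not found" situation.
    throw new Error("User not found");
  }
  const namespace = match.metadata.namespace ?? "default";
  return `user:${namespace}/${match.metadata.name}`;
}

// Hypothetical catalog contents.
const catalogUsers: UserEntity[] = [
  {
    kind: "User",
    metadata: {
      name: "jane.doe",
      annotations: { [EMAIL_ANNOTATION]: "jane.doe@contoso.com" },
    },
  },
];

console.log(resolveSignIn("jane.doe@contoso.com", catalogUsers));
// user:default/jane.doe
```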

If we try to log in now, we will be taken through the Entra ID authentication flow, but we will then see the error "User not found". This is because the Backstage user entity has no concept of accounts: sign-in matches the user logging in to a User entity already registered in Backstage itself, so we also need to set up ingestion of users from our Entra tenant. To onboard our Entra users, add the following to app-config.yaml:

```yaml
catalog:
  providers:
    microsoftGraphOrg:
      providerId:
        target: https://graph.microsoft.com/v1.0
        authority: https://login.microsoftonline.com
        tenantId: ${TENANT_ID}
        clientId: ${CLIENT_ID}
        clientSecret: ${CLIENT_SECRET}
        queryMode: basic
```

Again, we are adding our secrets in plain text to get this up and running quickly without building out a CI/CD pipeline. In production we would keep the ${CLIENT_SECRET} placeholder and inject the value at run time from a secure store.

We could add user or group filters to this provider so that only specific users are loaded rather than our entire tenant; in this example I have kept it simple. You can learn how to add the user/group filtering parameters here:

Microsoft Entra Tenant Data | Backstage Software Catalog and Developer Platform

We also need to add the MS Graph plugin to our application packages. We do this with the following command:

```shell
# From your Backstage root directory
yarn --cwd packages/backend add @backstage/plugin-catalog-backend-module-msgraph
```

We also then have to register the Microsoft entity provider in our plugin catalog. We do this with the following changes to the /packages/backend/src/plugins/catalog.ts file:

```typescript
builder.addEntityProvider(
  MicrosoftGraphOrgEntityProvider.fromConfig(env.config, {
    logger: env.logger,
    schedule: env.scheduler.createScheduledTaskRunner({
      frequency: { hours: 1 },
      timeout: { minutes: 50 },
      initialDelay: { seconds: 15 },
    }),
  }),
);
```

Our catalog.ts file should look like this now:

```typescript
import { CatalogBuilder } from '@backstage/plugin-catalog-backend';
import { ScaffolderEntitiesProcessor } from '@backstage/plugin-catalog-backend-module-scaffolder-entity-model';
import { Router } from 'express';
import { PluginEnvironment } from '../types';
import { MicrosoftGraphOrgEntityProvider } from '@backstage/plugin-catalog-backend-module-msgraph';

export default async function createPlugin(
  env: PluginEnvironment,
): Promise<Router> {
  const builder = await CatalogBuilder.create(env);

  builder.addEntityProvider(
    MicrosoftGraphOrgEntityProvider.fromConfig(env.config, {
      logger: env.logger,
      schedule: env.scheduler.createScheduledTaskRunner({
        frequency: { hours: 1 },
        timeout: { minutes: 50 },
        initialDelay: { seconds: 15 },
      }),
    }),
  );

  builder.addProcessor(new ScaffolderEntitiesProcessor());
  const { processingEngine, router } = await builder.build();
  await processingEngine.start();
  return router;
}
```

We also need to add this new provider to our providers block in our app-config.yaml file. We can add the following below our existing auth provider block.

```yaml
microsoftGraphOrg:
  default:
    tenantId: ${TENANT_ID}
    user:
      filter: accountEnabled eq true and userType eq 'member'
    group:
      filter: >
        securityEnabled eq false
        and mailEnabled eq true
        and groupTypes/any(c:c+eq+'Unified')
    schedule:
      frequency: PT1H
      timeout: PT50M
```
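The YAML schedule uses ISO 8601 durations (PT1H, PT50M), while catalog.ts expresses the same intervals as objects ({ hours: 1 }, { minutes: 50 }). The sketch below converts both forms to milliseconds to show they agree. It is a minimal parser for the PTnHnMnS subset only, written for illustration; it is not how Backstage parses durations.

```typescript
// Object form used in catalog.ts, e.g. { hours: 1 } or { minutes: 50 }.
interface SimpleDuration {
  hours?: number;
  minutes?: number;
  seconds?: number;
}

function objectToMs(d: SimpleDuration): number {
  return (((d.hours ?? 0) * 60 + (d.minutes ?? 0)) * 60 + (d.seconds ?? 0)) * 1000;
}

// Minimal parser for the PTnHnMnS subset of ISO 8601 used in app-config.yaml.
// Days, fractional values and other ISO 8601 forms are deliberately unsupported.
function isoToMs(iso: string): number {
  const match = /^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$/.exec(iso);
  if (!match || iso === "PT") {
    throw new Error(`Unsupported duration: ${iso}`);
  }
  // Unmatched groups are undefined at runtime, so the defaults apply.
  const [, hours = "0", minutes = "0", seconds = "0"] = match;
  return objectToMs({
    hours: Number(hours),
    minutes: Number(minutes),
    seconds: Number(seconds),
  });
}

console.log(isoToMs("PT1H") === objectToMs({ hours: 1 }));     // true
console.log(isoToMs("PT50M") === objectToMs({ minutes: 50 })); // true
```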

If your environment restricts outgoing access (for example, when we deploy into an AKS cluster whose egress is routed through a UDR), we need to make sure our Backstage backend can reach the following hosts:

- login.microsoftonline.com, to get and exchange authorization codes and access tokens.

- graph.microsoft.com, to fetch user profile information (as seen in the provider's source code). If this host is unreachable, users may see an "Authentication failed, failed to fetch user profile" error when they attempt to log in.
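When building an egress allow-list it can help to derive the hostnames from the URLs already in app-config.yaml rather than retyping them. The helper below is hypothetical; including the PostgreSQL server as a bare hostname is my own addition (the cluster needs to reach the database too), with "abc" as placeholder initials.

```typescript
// Hypothetical helper: collect the hostnames the Backstage backend must reach,
// from config URLs plus any bare hostnames such as the database server.
function requiredEgressHosts(urls: string[], bareHosts: string[] = []): string[] {
  const hosts = new Set<string>();
  for (const url of urls) {
    hosts.add(new URL(url).hostname); // URL parsing lowercases the hostname
  }
  for (const host of bareHosts) {
    hosts.add(host.toLowerCase());
  }
  return Array.from(hosts).sort();
}

const egressHosts = requiredEgressHosts(
  ["https://graph.microsoft.com/v1.0", "https://login.microsoftonline.com"],
  ["backstagedb-abc.postgres.database.azure.com"],
);
console.log(egressHosts.join("\n"));
// backstagedb-abc.postgres.database.azure.com
// graph.microsoft.com
// login.microsoftonline.com
```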

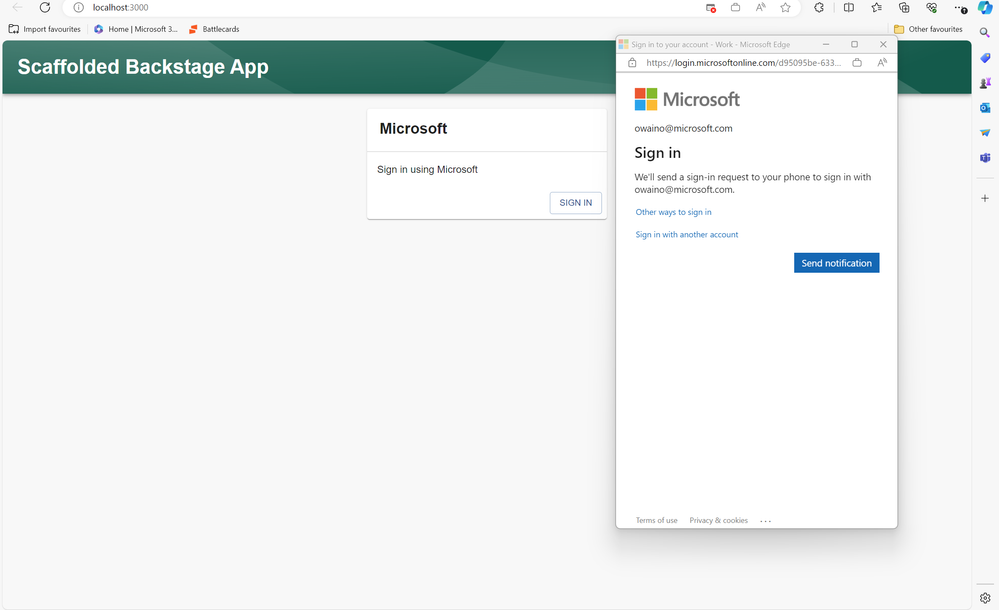

If we now stop Backstage in our terminal with Ctrl+C and restart it with yarn dev, we should be greeted by a Microsoft sign-in button.

Once we're logged in, feel free to configure Backstage with any other integrations or plugins you feel are suitable. Once done, we are ready to create our container image, which will allow us to deploy Backstage on the Azure application hosting service of our choice.

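Before deploying outside our local environment, we also need to review app.baseUrl in app-config.yaml, together with backend.baseUrl and backend.cors.origin, which should match the URL users browse to; otherwise requests will be blocked by the browser's CORS policy once Backstage is deployed on AKS. Because we will reach the deployed app through port forwarding on localhost:7007, these values all point at http://localhost:7007 here: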

```yaml
app:
  title: Scaffolded Backstage App
  baseUrl: http://localhost:7007

organization:
  name: My Company

backend:
  # Used for enabling authentication, secret is shared by all backend plugins
  # See https://backstage.io/docs/auth/service-to-service-auth for
  # information on the format
  # auth:
  #   keys:
  #     - secret: ${BACKEND_SECRET}
  baseUrl: http://localhost:7007
  listen:
    port: 7007
    # Uncomment the following host directive to bind to specific interfaces
    # host: 127.0.0.1
  csp:
    connect-src: ["'self'", 'http:', 'https:']
    # Content-Security-Policy directives follow the Helmet format: https://helmetjs.github.io/#reference
    # Default Helmet Content-Security-Policy values can be removed by setting the key to false
  cors:
    origin: http://localhost:7007
    methods: [GET, HEAD, PATCH, POST, PUT, DELETE]
    credentials: true
```
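The reason the origin must match exactly is that the browser compares scheme, host and port of the page's origin with what the backend allows. Browsers also reject a wildcard origin when credentials are enabled, so an explicit origin is needed with credentials: true. A simplified model of single-origin matching follows; it ignores the lists and patterns Backstage's cors setting can also accept, and "backstage.contoso.com" is an illustrative domain.

```typescript
// Simplified model of single-origin CORS matching: the request's Origin must
// have the same scheme, host and port as the configured backend.cors.origin.
function isOriginAllowed(requestOrigin: string, configuredOrigin: string): boolean {
  return new URL(requestOrigin).origin === new URL(configuredOrigin).origin;
}

const configuredOrigin = "http://localhost:7007";
console.log(isOriginAllowed("http://localhost:7007", configuredOrigin));         // true
console.log(isOriginAllowed("https://backstage.contoso.com", configuredOrigin)); // false
```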

Containerise Backstage

First, let's provision a private Azure Container Registry with the following command:

```shell
az acr create -n backstageacr{your initials} -g backstage --sku Premium --public-network-enabled true --admin-enabled true
```
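Registry names are stricter than the PostgreSQL server name: as I understand Azure's rules, an ACR name must be 5 to 50 alphanumeric characters with no hyphens, which is why the initials are appended without a separator. A hypothetical helper that checks the name and builds the login server used in image tags (assumption: login servers are lowercase), with "abc" as placeholder initials:

```typescript
// Hypothetical helper: validate an ACR name (5-50 alphanumeric characters,
// as I understand the rules) and return its lowercase login server.
function acrLoginServer(registryName: string): string {
  if (!/^[a-zA-Z0-9]{5,50}$/.test(registryName)) {
    throw new Error(`"${registryName}" is not a valid registry name`);
  }
  return `${registryName.toLowerCase()}.azurecr.io`;
}

console.log(acrLoginServer("backstageacrabc")); // backstageacrabc.azurecr.io
```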

While this resource spins up, let's create a Dockerfile for our Backstage application. To save some time we will do a host build: we build the backend on our host, whether that is a local machine or a CI pipeline, and then build our Docker image from the output. To start, run the following commands from the Backstage root folder:

```shell
yarn install --frozen-lockfile

# tsc outputs type definitions to dist-types/ in the repo root, which are then consumed by the build
yarn tsc

# Build the backend, which bundles it all up into the packages/backend/dist folder.
# The configuration files here should match the one you use inside the Dockerfile below.
yarn build:backend --config ../../app-config.yaml
```

We now need to create the Dockerfile, if app creation didn't generate one already (this depends on the version). The Dockerfile is below:

```dockerfile
FROM node:18-bookworm-slim

# Install isolate-vm dependencies, these are needed by the @backstage/plugin-scaffolder-backend.
RUN --mount=type=cache,target=/var/cache/apt,sharing=locked \
    --mount=type=cache,target=/var/lib/apt,sharing=locked \
    apt-get update && \
    apt-get install -y --no-install-recommends python3 g++ build-essential && \
    yarn config set python /usr/bin/python3

# Install sqlite3 dependencies. You can skip this if you don't use sqlite3 in the image,
# in which case you should also move better-sqlite3 to "devDependencies" in package.json.
RUN --mount=type=cache,target=/var/cache/apt,sharing=locked \
    --mount=type=cache,target=/var/lib/apt,sharing=locked \
    apt-get update && \
    apt-get install -y --no-install-recommends libsqlite3-dev

# From here on we use the least-privileged `node` user to run the backend.
USER node

# This should create the app dir as `node`.
# If it is instead created as `root` then the `tar` command below will
# fail: `can't create directory 'packages/': Permission denied`.
# If this occurs, then ensure BuildKit is enabled (`DOCKER_BUILDKIT=1`)
# so the app dir is correctly created as `node`.
WORKDIR /app

# This switches many Node.js dependencies to production mode.
ENV NODE_ENV production

# Copy repo skeleton first, to avoid unnecessary docker cache invalidation.
# The skeleton contains the package.json of each package in the monorepo,
# and along with yarn.lock and the root package.json, that's enough to run yarn install.
COPY --chown=node:node yarn.lock package.json packages/backend/dist/skeleton.tar.gz ./
RUN tar xzf skeleton.tar.gz && rm skeleton.tar.gz

RUN --mount=type=cache,target=/home/node/.cache/yarn,sharing=locked,uid=1000,gid=1000 \
    yarn install --frozen-lockfile --production --network-timeout 300000

# Then copy the rest of the backend bundle, along with any other files we might want.
COPY --chown=node:node packages/backend/dist/bundle.tar.gz app-config*.yaml ./
RUN tar xzf bundle.tar.gz && rm bundle.tar.gz

CMD ["node", "packages/backend", "--config", "app-config.yaml"]
```

We can now use ACR Tasks to build our image; once the image is built successfully, it is pushed to the registry we created earlier. Azure provides a hosted pool to build these images, but ACR also supports dedicated agent pools for production environments. If we had made our registry endpoint private, we would use such a pool to build the image.

As we need BuildKit to be enabled, we will use an ACR multi-step task YAML file. Create a file called acr-task.yaml containing the following:

```yaml
version: v1.0.0
stepTimeout: 1000
env:
  [DOCKER_BUILDKIT=1]
steps: # A collection of image or container actions.
  - build: -t backstageacr{your initials}.azurecr.io/backstageimage:v1 -f Dockerfile .
  - push:
      - backstageacr{your initials}.azurecr.io/backstageimage:v1
```

We can then run it with the following ACR command:

```shell
az acr run -r backstageacr{your initials} -f acr-task.yaml .
```

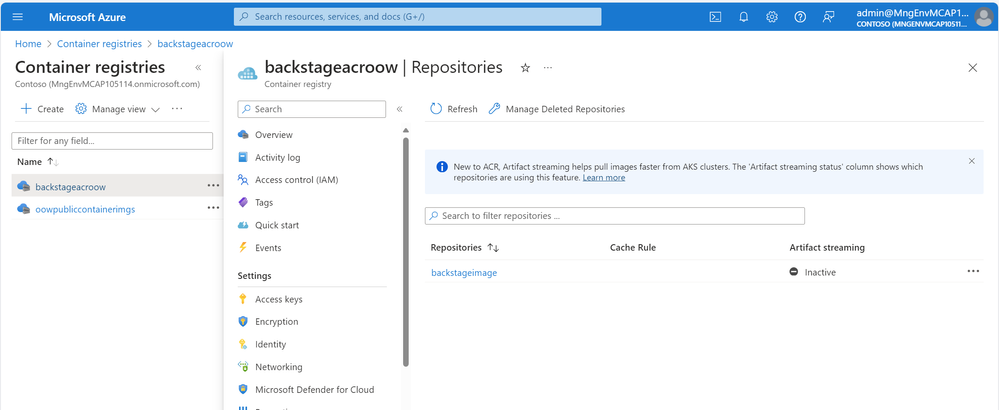

Once this has run we should see our image in our registry:

We can now deploy this image on Azure. Packaging Backstage as a container gives us a variety of platforms to choose from: App Service, Azure Container Apps (ACA) or AKS. ACA and AKS are the most suitable, depending on your use case and architecture. One thing to keep in mind with both ACA and AKS is that Backstage, as described earlier, sits as an operational platform between developers and infrastructure, so we need to think carefully about the networking implications. If we run a single Backstage instance per organisation or product, it must be able to make inbound and outbound calls to multiple environments. For example, with the Kubernetes plugin we need to ensure that Backstage can connect to every cluster we want to add to our single view.

For the rest of the article we will focus on deploying Backstage to AKS. However, I believe ACA is a brilliant destination for Backstage within a hub network, offering easy, managed hosting; I have deployed and verified Backstage working in ACA.

Deploy Backstage on Azure Kubernetes Service

To deploy Backstage, we first need to create our AKS cluster if we have not done so already. For simplicity we will not use Azure Firewall with a UDR to restrict ingress and egress traffic; if deploying in production, please follow your own standards. The cluster will need network access to any other clusters you want to onboard with the Kubernetes plugin, to your Git repositories and to the PostgreSQL database.

To add an admin user we will need to get our user ID. If deploying in production this may not be necessary as your service deployment will be different.

To get your user ID you can use the following command:

```shell
az ad signed-in-user show --query id -o tsv
```

We can then create our cluster with the following command:

```shell
az aks create -g backstage -n backstagecluster --enable-aad --enable-oidc-issuer --enable-azure-rbac --load-balancer-sku standard --outbound-type loadBalancer --network-plugin azure --attach-acr backstageacr{your initials} --aad-admin-group-object-ids {YOUR USER ID}
```

We now need to create the manifest for our Backstage deployment. This file can live either in your Backstage root folder or in a kubernetes subfolder. Depending on your requirements, your manifest should look something like the following, replacing the container image with your own:

```yaml
# kubernetes/backstage.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backstage
  namespace: backstage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backstage
  template:
    metadata:
      labels:
        app: backstage
    spec:
      containers:
        - name: backstage
          image: backstageacr{your initials}.azurecr.io/backstageimage:v1
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 7007
```

We can then use the AKS command invoke feature to deploy the manifest. Command invoke uses the Azure backbone network, so we don't have to connect directly to the cluster; this works brilliantly for administering private clusters.

For now we will also assign cluster admin permissions to our user so we can create our namespace and deployment. In production we would want to use roles with a more limited scope, if we assign roles to individual users at all. First, we need our cluster ID:

```shell
AKS_ID=$(az aks show -g backstage -n backstagecluster --query id -o tsv)
```

We can then assign the role to our user for the cluster using the user ID we got earlier:

```shell
az role assignment create --role "Azure Kubernetes Service RBAC Cluster Admin" --assignee {YOUR USER ID} --scope $AKS_ID
```

We can now deploy our application and service. To do this we need to create a manifest for each. We can do this in our backstage root folder or in a Kubernetes folder within our backstage root folder. For ease I would advise a separate Kubernetes folder.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: backstage
---
# kubernetes/backstage.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backstage
  namespace: backstage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backstage
  template:
    metadata:
      labels:
        app: backstage
    spec:
      containers:
        - name: backstage
          image: backstageacr{your initials}.azurecr.io/backstageimage:v1
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 7007
```

And our service should look like this:

```yaml
# kubernetes/backstage-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: backstage
  namespace: backstage
spec:
  selector:
    app: backstage
  type: LoadBalancer
  ports:
    - name: http
      port: 80
      targetPort: http
```
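In the Service, targetPort: http refers to the container port named http in the Deployment, so traffic arriving on port 80 is forwarded to port 7007 in the pod. A simplified model of that lookup, using values taken from the manifests above (this is an illustration, not Kubernetes' own code, and the types are trimmed to the fields it needs):

```typescript
// Trimmed-down shapes (assumption: real Kubernetes objects have many more fields).
interface ContainerPort {
  name?: string;
  containerPort: number;
}

interface ServicePort {
  name: string;
  port: number;
  targetPort: number | string;
}

// A numeric targetPort is used as-is; a string is looked up by container port name.
function resolveTargetPort(servicePort: ServicePort, containerPorts: ContainerPort[]): number {
  if (typeof servicePort.targetPort === "number") {
    return servicePort.targetPort;
  }
  const named = containerPorts.find((p) => p.name === servicePort.targetPort);
  if (!named) {
    throw new Error(`No container port named "${servicePort.targetPort}"`);
  }
  return named.containerPort;
}

const backstageContainerPorts: ContainerPort[] = [{ name: "http", containerPort: 7007 }];
const backstageServicePort: ServicePort = { name: "http", port: 80, targetPort: "http" };

console.log(resolveTargetPort(backstageServicePort, backstageContainerPorts)); // 7007
```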

We can then deploy them with the following commands:

```shell
az aks command invoke --name backstagecluster --resource-group backstage --command "kubectl apply -f backstage.yaml" --file kubernetes/backstage.yaml

az aks command invoke --name backstagecluster --resource-group backstage --command "kubectl apply -f backstage-service.yaml" --file kubernetes/backstage-service.yaml
```

You will probably want to create an ingress of some description for internal access to your Backstage application. On Azure Container Apps, ingress can be enabled at the click of a button; on AKS you may want to use Application Gateway for Containers to get a managed Gateway resource (you can learn more about this product in a previous blog post I wrote). In both cases you will need to change the base URL of your application to the custom domain you want to host Backstage on, and then update your application's redirect URI to point at that domain as well. For this demo we will verify our deployment using port forwarding, which means we can keep our redirect URI as localhost:7007 and don't need to revisit the app config or set up a domain and certificates on the cluster.

Let's first check that our deployment has been successful:

```shell
az aks command invoke --name backstagecluster --resource-group backstage --command "kubectl get pods -n backstage"
```

We should see our backstage pod up and running.

Up until this point we have used command invoke as a best practice for our configuration and deployment. As we now want to tunnel traffic from our cluster to our local machine, we need to use kubectl from our own command line. First, fetch credentials for the cluster with the following command:

```shell
az aks get-credentials --resource-group backstage --name backstagecluster --overwrite-existing
```

We can then run the following to set up port forwarding:

```shell
kubectl port-forward deployment/backstage -n backstage 7007:7007
```

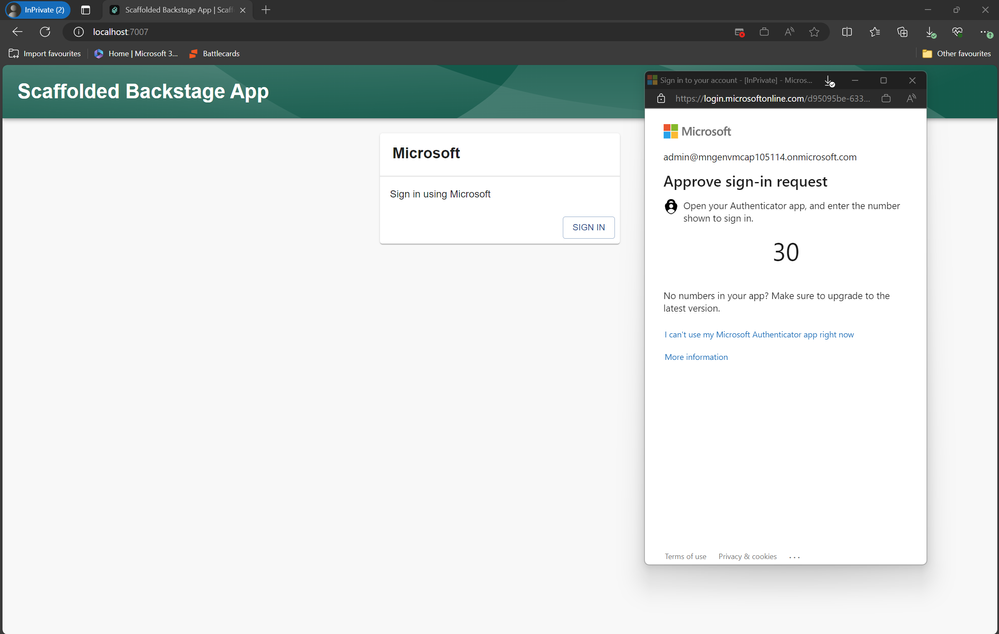

If we now open a private browser window and navigate to http://localhost:7007, we should be greeted by our Backstage application and be able to complete the login flow. I advise testing this in a private window; otherwise you may skip the login flow and reuse your existing session's credentials.

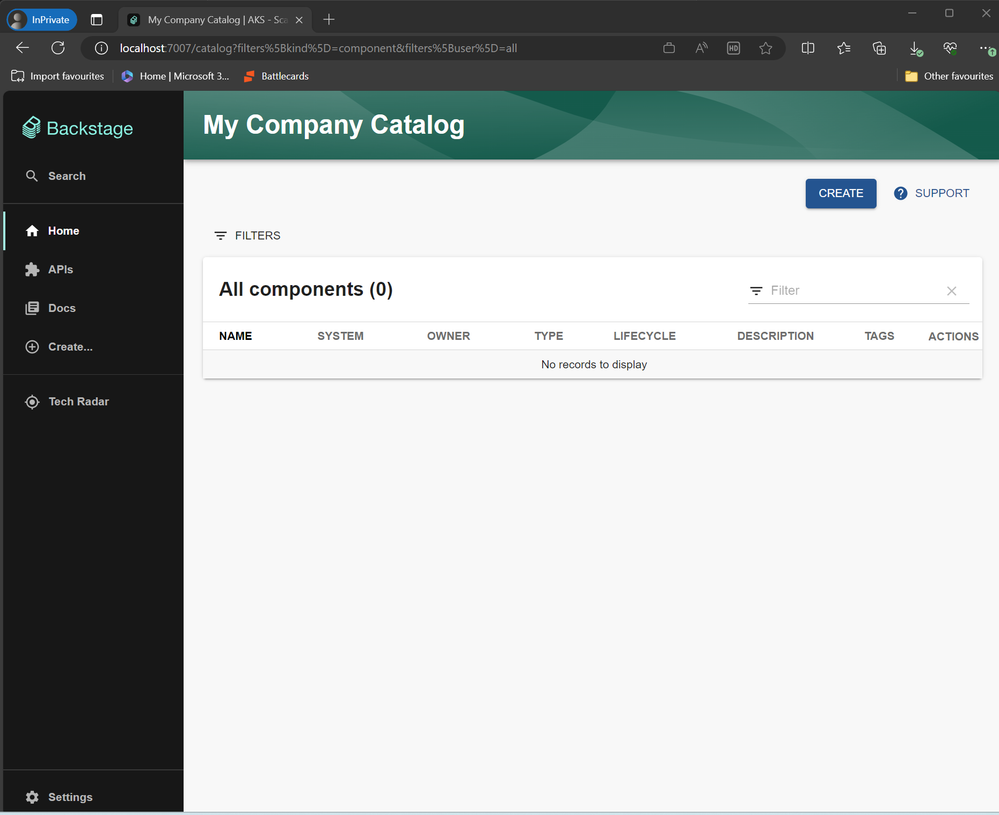

Once authenticated, we are greeted by our Backstage application!

Conclusion:

We have now deployed Backstage on AKS with a managed PostgreSQL backend and Entra ID authentication configured, with the users in our tenant onboarded. We have also used Azure Container Registry to simplify our image build and deployment process. We can now continue developing our Backstage instance by installing some of the many community-supported plugins and onboarding our Kubernetes clusters. As mentioned, Azure Container Apps has the potential to be a great platform for centralised Backstage deployments: it would allow deployment in a hub, with the ability to deploy and monitor clusters or applications/APIs in spokes, without managing an entire AKS cluster.

Next steps to build upon this walkthrough if looking to move towards production are:

- Custom Domains and an Internal LB for secure ingress within the network.

- CI/CD pipeline using environment variables and secrets to build, push and deploy the application. This is particularly useful if you need to override the app's base URL after a randomly generated URL has been assigned, e.g. Container Apps without a custom domain. See more here: Writing Backstage Configuration Files | Backstage Software Catalog and Developer Platform

- Secure networking between Backstage and your ideally private Postgres instance.
