Build an enterprise-ready Azure OpenAI solution with Azure API Management


With the rise of artificial intelligence (AI), many industries and users are looking for ways to leverage this technology for their own development purposes and use cases. The field is expected to continue growing in the coming years as more companies invest in AI research and development.

Microsoft’s Azure OpenAI Service provides REST API access to OpenAI's powerful language models, including the GPT-4, GPT-35-Turbo, and Embeddings model series. With Azure OpenAI, customers get the security capabilities of Microsoft Azure while running the same models as OpenAI. Azure OpenAI offers private networking, regional availability, and responsible AI content filtering. However, adopting and integrating a new technology can bring difficulties and concerns, foremost among them security and governance. This is where Azure API Management comes in, providing a robust solution to hurdles like throttling and monitoring. It lets you expose Azure OpenAI endpoints securely while keeping them protected, fast, and observable. Furthermore, it offers comprehensive support for the discovery, integration, and use of these APIs by both internal and external users.

This is especially important because most users are currently looking for a quick and secure way to start using Azure OpenAI. The most popular question we get is, “How can I safely and securely create my own ChatGPT with our own company-specific data?” Once an MVP is up and running, the next question is, “How can I scale this into production?” For our use case today, we want to leverage the power of Azure API Management to meet production-ready requirements and provide a secure and reliable solution.

Getting started with Azure API Management and Azure OpenAI:

- Azure OpenAI provides REST API reference specifications that can easily be imported into Azure API Management or included in your CI/CD pipeline.

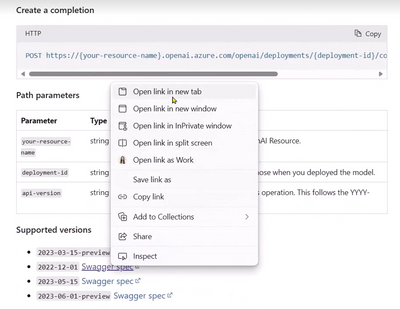

- For our use case, we use the completions endpoint, so download its Swagger (OpenAPI) specification.

- In the OpenAPI spec, modify a few parameters so that it works with the Azure OpenAI resource we created previously (see documentation). Make sure to modify the following servers parameters in your OpenAPI spec:

"servers": [

{

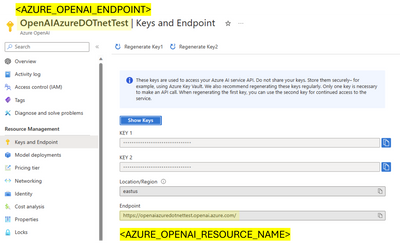

"url": "<AZURE_OPENAI_ENDPOINT>/openai",

"variables": {

"endpoint": {

"default": "<AZURE_OPENAI_RESOURCE_NAME>.openai.azure.com"

}

}

}

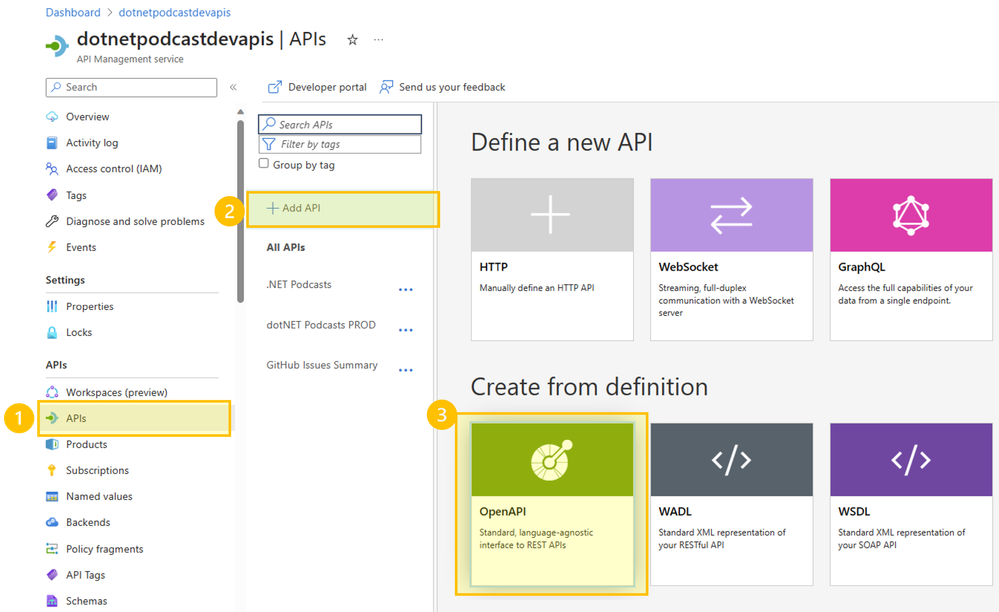

- Next, sign in to the Azure portal and open your Azure API Management resource (see the Quickstart on how to create an API Management instance). Under APIs, select + Add API and search for OpenAPI. Upload the OpenAPI spec that you downloaded and edited in the previous steps.

- You can now see the operations created from your OpenAPI spec. These operations call your Azure OpenAI resource.

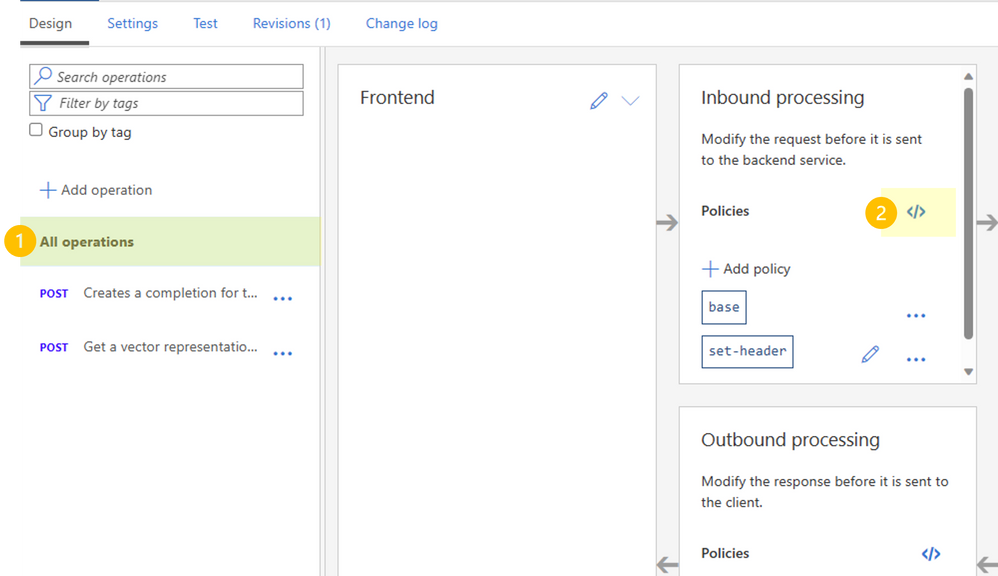

- Azure OpenAI is protected with API keys. An API key is a unique access code that acts as a secure identifier. We will use an Azure API Management policy to add the API key as a header to every request our operations send to Azure OpenAI. Select All operations, open the inbound policy editor, and edit the policy as follows:

```xml
<policies>
    <inbound>
        <base />
        <!-- Add the Azure OpenAI key, read from the APPSVC_KEY named value -->
        <set-header name="api-key" exists-action="override">
            <value>{{APPSVC_KEY}}</value>
        </set-header>
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```

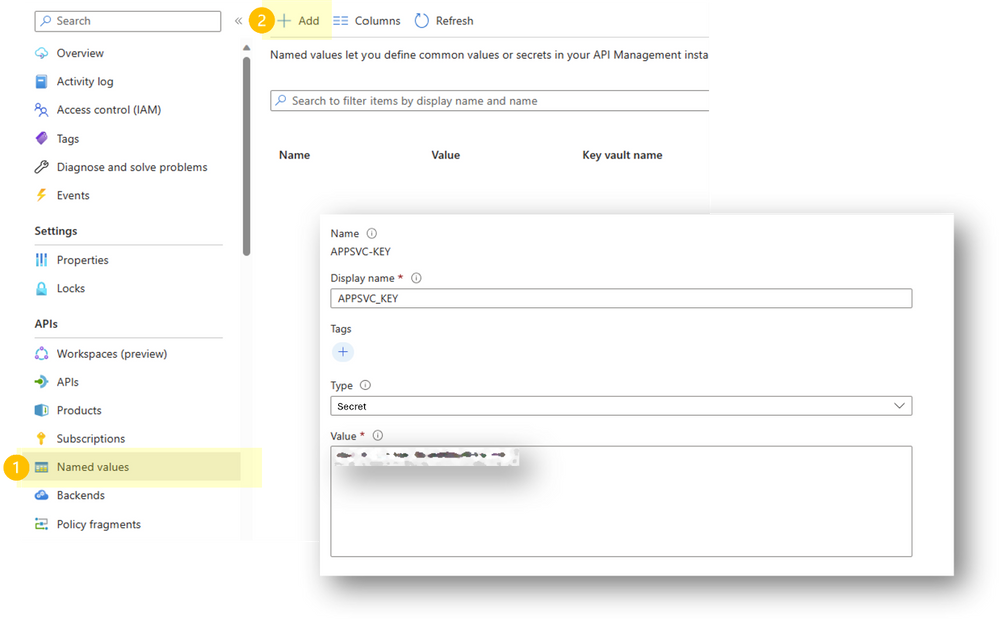

- For security reasons, we use Named values in Azure API Management instead of pasting the API key directly into the policy. To do this, open Named values and select + Add to create a new value named APPSVC_KEY, which matches the {{APPSVC_KEY}} reference in the policy, with your API key as the value. You can find the API key in your Azure OpenAI resource under Keys and Endpoint.

Note: This demonstrates a simplified implementation. Ideally, you should use the third named value type, Key vault (alongside Plain and Secret), so that the secret is sourced externally from Azure Key Vault.

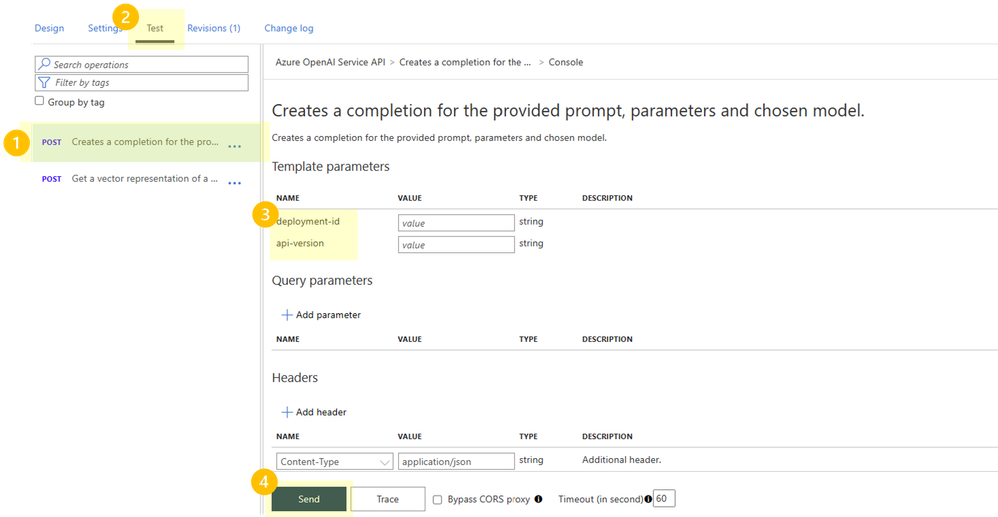

- Finally, you can test your API call in Azure API Management. Open the Test tab and select the operation you want to call. Make sure to provide the required parameters (e.g., deployment-id, api-version), and then select Send.

- You should now get a 200 OK response, with the completion in the response body.
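To call the API from code instead of the Test tab, a client sends its request to the API Management gateway rather than directly to Azure OpenAI. The sketch below is a minimal Python example using only the standard library. The gateway URL, API URL suffix, and deployment name are hypothetical placeholders. It also assumes the API requires an API Management subscription key, which is the default, passed in the Ocp-Apim-Subscription-Key header. The client never handles the Azure OpenAI key because the inbound policy adds the api-key header on the way to the backend.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical placeholders: replace them with your own values.
APIM_GATEWAY = "https://contoso-apim.azure-api.net"  # hypothetical API Management gateway
API_SUFFIX = "openai"  # hypothetical API URL suffix chosen when importing the spec
DEPLOYMENT_ID = "my-deployment"  # hypothetical model deployment name
API_VERSION = "2023-05-15"


def build_completions_request(prompt: str, subscription_key: str) -> urllib.request.Request:
    """Build (but do not send) a completions request routed through API Management."""
    path = f"/{API_SUFFIX}/deployments/{urllib.parse.quote(DEPLOYMENT_ID)}/completions"
    query = urllib.parse.urlencode({"api-version": API_VERSION})
    body = json.dumps({"prompt": prompt, "max_tokens": 50}).encode("utf-8")
    return urllib.request.Request(
        f"{APIM_GATEWAY}{path}?{query}",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # The client authenticates to API Management only; no Azure OpenAI api-key here.
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
    )


if __name__ == "__main__":
    req = build_completions_request("Say hello", "<APIM_SUBSCRIPTION_KEY>")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Keeping the Azure OpenAI key inside API Management means you can rotate it, or issue separate subscription keys to different consumers, without redistributing the backend secret.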

Common Production-Ready Challenges:

Any AI project needs to be carefully architected. However, because Azure OpenAI is so new, there are a few details we have to take into consideration before building AI projects:

1. Advanced request throttling with Azure API Management:

API throttling, also known as rate limiting, is a mechanism used to control the rate at which clients can make requests to an API. It is implemented to prevent abuse, ensure fair usage, protect server resources, and maintain overall system stability and performance. Throttling restricts the number of API requests that a client can make within a specified time period.

Azure API Management supports flexible throttling, which allows you to set different throttling limits for different types of clients or requests based on various criteria, such as client identity, subscription level, or API product.

Below is an example of a policy in Azure API Management that implements rate limiting for cost control purposes. It limits the number of requests a client can make to your API within a specified time period:

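```xml
<policies>
    <inbound>
        <base />
        <!-- counter-key is required: here, each subscription gets 1000 calls per 3600 seconds -->
        <rate-limit-by-key calls="1000" renewal-period="3600" counter-key="@(context.Subscription.Id)" />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```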

Explanation of the policy:

- <rate-limit-by-key>: This policy element sets up rate limiting per client key. The key is defined by the required counter-key attribute (for example, @(context.Subscription.Id)) and can represent attributes such as subscription, client identity, or any other criteria you want to use for rate limiting.

- calls: This attribute specifies the maximum number of API calls (requests) that a client with a specific key can make within the defined renewal-period.

- renewal-period: This attribute defines the length of the time window, in seconds, after which the rate-limiting counter is reset. In this example, the renewal period is set to 3600 seconds, which is equivalent to 1 hour.
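To make the calls and renewal-period semantics concrete, here is a simplified fixed-window model in Python. It only illustrates the idea and does not reflect how API Management implements the policy internally, since the gateway is distributed and its counters are approximate. The class name and the injectable clock are assumptions added for testability.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowRateLimiter:
    """Allow at most `calls` requests per key in each `renewal_period`-second window."""

    def __init__(self, calls: int, renewal_period: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.calls = calls
        self.renewal_period = renewal_period
        self.clock = clock  # injectable so tests can control time
        # Per key: (start of the current window, requests counted in that window).
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, key: str) -> bool:
        """Record one request for `key`; return False if it exceeds the limit."""
        now = self.clock()
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.renewal_period:
            # The renewal period has elapsed: start a fresh window for this key.
            start, count = now, 0
        if count >= self.calls:
            # Over the limit; API Management would answer 429 Too Many Requests here.
            self._windows[key] = (start, count)
            return False
        self._windows[key] = (start, count + 1)
        return True


if __name__ == "__main__":
    limiter = FixedWindowRateLimiter(calls=1000, renewal_period=3600)
    # The key plays the role of counter-key, e.g. a subscription ID.
    print(limiter.allow("subscription-123"))
```

Because each key has its own counter, one noisy client exhausting its quota does not affect other subscriptions.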

For a more advanced example, check out our OpenAI Cost Gateway Pattern, which tracks spending by product for every request and rate-limits each product based on its spending limits.

2. Use Application Gateway and Azure API Management together for API Monitoring:

Logging and monitoring are critical aspects of managing and maintaining APIs, ensuring their availability, performance, and security. When integrating Azure OpenAI models into your solutions through Azure API Management and an API gateway, you need to establish robust logging and monitoring practices to gain insights into API usage, detect and troubleshoot issues, and optimize performance. Implementation example:

- Azure Monitor Integration:
  - Leverage Azure Monitor to collect telemetry data from your API Management instance.
  - Configure Application Insights to track API requests, response times, and error rates.
  - Set up alerts to be notified of unusual patterns or high error rates.
- Custom Logging:
  - Implement custom logging in your API using policies in API Management.
  - Capture relevant data, such as request headers, payload, and response details.
  - Send custom logs to Azure Monitor or other logging services.
- Usage Analytics:
  - Utilize API Management's built-in usage analytics to monitor API consumption and traffic patterns.
  - Identify popular endpoints, peak usage times, and user behavior.
- Alerting and Notifications:
  - Configure alerts and notifications for critical events, such as API downtime or high error rates.
  - Use Azure Logic Apps to trigger workflows based on specific conditions.
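As an illustration of the custom logging point, the hypothetical helper below builds one structured JSON log line per call and redacts credential headers, such as api-key and Ocp-Apim-Subscription-Key, before anything is written. The field names and the list of sensitive headers are assumptions. The token counts would come from the usage object that Azure OpenAI returns in its responses.

```python
import json
from typing import Dict, Optional

# Credential-bearing headers that must never reach the logs (assumed list; extend as needed).
SENSITIVE_HEADERS = {"api-key", "ocp-apim-subscription-key", "authorization"}


def build_log_record(operation: str, status: int, duration_ms: float,
                     request_headers: Dict[str, str],
                     prompt_tokens: Optional[int] = None,
                     completion_tokens: Optional[int] = None) -> str:
    """Return one JSON log line for an API call, with credential headers redacted."""
    # HTTP header names are case-insensitive, so compare them in lower case.
    headers = {
        name: "<redacted>" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in request_headers.items()
    }
    record = {
        "operation": operation,
        "status": status,
        "durationMs": duration_ms,
        "requestHeaders": headers,
        # Token counts as reported in the "usage" object of an Azure OpenAI response.
        "promptTokens": prompt_tokens,
        "completionTokens": completion_tokens,
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(build_log_record(
        "completions", 200, 812.5,
        {"api-key": "do-not-log", "Content-Type": "application/json"},
        prompt_tokens=12, completion_tokens=40,
    ))
```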

See Tutorial: Monitor published APIs for more detailed information.

3. Use Application Gateway for load balancing:

Load balancing is crucial in Azure OpenAI projects to efficiently distribute incoming API requests across multiple resources, ensuring optimal performance and resource utilization. The challenge lies in managing uneven traffic distribution during peak hours, achieving scalability while avoiding underutilization or overloading of servers, ensuring fault tolerance, and dynamically allocating resources based on fluctuating workloads. Proper load distribution strategies and session persistence play vital roles in maintaining high availability and seamless user experiences.


For this, we can use Azure Application Gateway. Application Gateway sits between clients and services and acts as a reverse proxy, routing requests from clients to services. API Management doesn't perform any load balancing, so it should be used in conjunction with a load balancer such as Azure Application Gateway, which can route traffic to different endpoints. For more detailed information, check out this documentation.
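Application Gateway distributes requests across the members of a backend pool and stops sending traffic to members that its health probes mark as unhealthy. The Python sketch below models that idea with simple round-robin selection over several Azure OpenAI endpoints. It is a conceptual illustration with hypothetical endpoint URLs, not Application Gateway's actual implementation or configuration.

```python
from typing import Iterable, List, Set


class RoundRobinBalancer:
    """Rotate requests across backends, skipping any currently marked unhealthy."""

    def __init__(self, backends: Iterable[str]) -> None:
        self.backends: List[str] = list(backends)
        if not self.backends:
            raise ValueError("at least one backend is required")
        self.unhealthy: Set[str] = set()
        self._next = 0  # index of the backend to try first on the next pick

    def pick(self) -> str:
        """Return the next healthy backend in rotation."""
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if backend not in self.unhealthy:
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    # Hypothetical Azure OpenAI endpoints in two regions.
    lb = RoundRobinBalancer([
        "https://aoai-eastus.openai.azure.com",
        "https://aoai-westeurope.openai.azure.com",
    ])
    print([lb.pick() for _ in range(3)])
    lb.unhealthy.add("https://aoai-westeurope.openai.azure.com")  # e.g. after a failed health probe
    print([lb.pick() for _ in range(2)])
```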

Azure OpenAI Solutions:

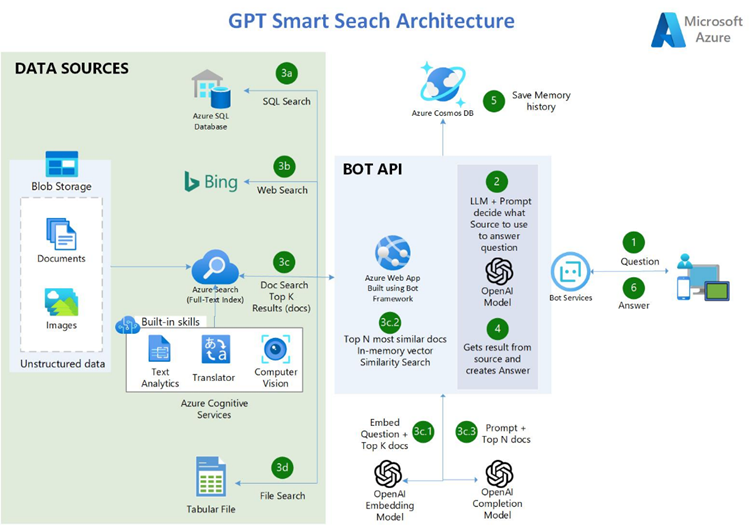

If you are looking for a way to create your first Azure OpenAI solution, you might be overwhelmed by the many options and possibilities. However, a good strategy is to start with a low-risk, high-value use case that targets your internal audience. For instance, one of the common solutions for adoption is the architecture shown above, which is available through one of our Microsoft Accelerators. This architecture enables you to build an MVP solution that leverages Azure GPT to create a private search engine and a chat experience powered by Azure ChatGPT and Azure GPT-4, either in your web application or integrated into various channels, such as Microsoft Teams, through Azure Bot Framework. The advantage of this architecture is that it allows you to use your own data securely within your own Azure tenant and to access data from various sources and types. As shown in the green highlighted box, the model is grounded with data from Azure Cognitive Search, Azure SQL Database, specific tabular files, and even the internet via our Azure Bing API.

For more information on the details of the accelerator, see our GitHub repo here and the introduction deck here.

As mentioned, the accelerator architecture above is an amazing place to start building your MVP solution. To make it an enterprise-ready solution and address some of the challenges mentioned above, such as API management and load balancing, we highly recommend adopting the Microsoft reference architecture below once your MVP is in place. This architecture highlights the key components needed to properly manage our APIs through Azure API Management, use Application Gateway for load balancing, and monitor our APIs with Azure Monitor. Overall, the solution enables advanced logging capabilities for tracking API usage and performance, along with robust security measures that help protect sensitive data and prevent malicious activity.

Note: Reference to Architecture

Feel free to reach out with any questions about these solutions or maybe even proposals on future blog posts!

A big thank you goes to our specialists in these subject areas. Make sure to follow them for any updates or reach out to them directly:

- Senior Data & AI Cloud Solution Architect, Julia Heseltine

- Senior Cloud Solution Architect, Simon Kurtz

- Azure Architect GBB, David Barkol

- Senior Technical Specialist, Preston Hale
