Step by Step Practical Guide to Architecting Cloud Native Applications

This is a step-by-step, no-fluff guide to building and architecting cloud native applications.

Cloud application development introduces unique challenges: applications are distributed, scale horizontally, communicate asynchronously, have automated deployments, and are built to handle failures resiliently. This demands a shift in both technical approach and mindset.

Cloud Native is therefore not about specific technologies. If you can do all of the above, you are Cloud Native. You don’t have to use Kubernetes, for instance, to become Cloud Native.

Steps:

Say you want to architect an application in the cloud, using an E-Commerce Website as an example. How do you go about it, and what decisions do you need to make?

Regardless of the type of application, we have to go through six distinct steps:

- Define Business Objectives.

- Define Functional and Non-Functional Requirements.

- Choose an Application Architecture.

- Make Technology Choices.

- Code using Cloud Native Design Patterns.

- Adhere to the Well-Architected Framework.

Business Objectives:

Before starting any development, it’s crucial to align with business objectives, ensuring every feature and decision supports these goals. While microservices are popular, their complexity isn’t always discussed.

It’s essential to evaluate if they align with your business needs, as there’s no universal solution. For external applications, the focus is often on enhancing customer experience or generating new revenue. Internal applications, however, aim to reduce operational costs and support queries.

For instance, in E-commerce, improving customer experience may involve enhancing user interfaces or ensuring uptime, while creating new revenue streams could require capabilities in data analytics and product recommendations.

By first establishing and then rigorously adhering to the business objectives, we ensure that every piece of functionality in the application, whether it serves external customers or internal users, delivers real value.

Functional and Non-Functional Requirements:

Now that we understand and are aligned with the business objectives, we go one step further and get more specific by defining functional and non-functional requirements.

Functional requirements define the functionalities you will have in your application:

- Users should be able to create accounts, sign in, and there should be password recovery mechanisms.

- The system should allow users to add, modify, or delete products.

- Customers should be able to search for products and filter based on various criteria.

- The checkout process should support different payment methods.

- The system should check order status from processing to delivery.

- Customers should be able to rate products & leave reviews.

Non-functional requirements define the “quality” of the application:

- Application must support hundreds of concurrent users.

- The application should load pages in under 500ms even under heavy load.

- Application must be able to scale during peak times.

- All internal communication between systems should happen over private IPs.

- Application should be responsive to adapt to different devices.

- What SLA will we support, what RTO & RPO are we comfortable with?

Application Architecture:

We understand the business objectives, we are clear on what functionalities our application will support, and we understand the constraints our applications need to adhere to.

Now, it is time to make a decision on the Application Architecture. For simplicity, you either choose a Monolith or a Microservices architecture.

Monolith: A big misconception is that all monoliths are big balls of mud. That is not necessarily the case. A monolith simply means that the entire application, with all of its functionality, is hosted in a single deployable unit. The internal architecture can still be designed to keep the components of different subsystems isolated from each other; calls between them are in-process function calls as opposed to network calls.

Microservices: Modularity has always been a best practice in software development, whether you use a Monolith (logical modularity) or Microservices (physical modularity). With microservices, the functionalities of your application are physically separated into different services that communicate over network calls.

What to pick: Choose microservices if technical debt is high and your codebase has become so large that feature releases are slow, affecting market responsiveness, or if your application components need different scaling approaches. Conversely, if your app is updated infrequently and doesn’t require fine-grained scalability, the investment in microservices, which introduce their own challenges, might not be justified.

Again, this is why we start with business objectives and requirements.

Technology Choices:

It is time to make some technology choices. We won’t be architecting yet, but we need to get clear on what technologies we will choose, which is now much easier because we understand the application architecture and requirements.

Across all technology choices, you should be thinking about containers if you are architecting cloud native applications. Away from all the fluff, this is how containers work: the code, dependencies, and runtime are all packaged into a single artifact known as an image. That image is stored in a container registry, and you can run it on any computer that has a supported container runtime (such as Docker). With that, you have a portable and lightweight deployable package.

Compute:

- N-Tier: If you are going with N-tier (a simple Web, Backend, Database for instance), the recommended option for compute is to go with a PaaS Managed service such as Azure App Service. The decision point ultimately comes down to control & productivity. IaaS gives you the most control, but least productivity as you have to manage many things. It is also easier to scale managed services as they often come with an integrated load balancer as opposed to configuring your own.

- Microservices: On the other hand, the most common platform for running Microservices is Kubernetes. The reason is that Microservices are often developed using containers, and Kubernetes is the most popular container orchestrator, handling things such as self-healing, scaling, load balancing, and service discovery. The other option, however, is Azure Container Apps, which is a layer of abstraction above Kubernetes that allows you to run multiple containers at scale without managing Kubernetes. If you require access to the Kubernetes API, Container Apps is not a good option. Many apps, however, don’t require access to the Kubernetes API, so managing a cluster is not worth the effort and Container Apps is a good choice. You can run microservices in a PaaS service such as Azure App Service, but it becomes difficult to manage, specifically from a CI/CD perspective.

Storage:

- Blob Storage: Use Blobs if you want to store unstructured objects like images. Calls are made over HTTPS, and blob storage is generally inexpensive.

- Files: If your applications need native file shares, you can use a service such as Azure Files, which is accessible over SMB and HTTPS. For containers that need a persistent volume, Azure Files is very popular.

Databases:

Most applications model data. We represent different entities in our applications (users, products) using objects with certain properties (name, title, description).

When we pick a database, we need to consider how we will represent our data model (JSON documents, tables, key/value pairs). In addition, we need to understand the relationships between different models, scalability, multi-regional replication, schema flexibility, and, of course, team skill sets.

- Relational Databases: Use this when you want to ensure strong consistency, you want to enforce a schema, and you have a lot of relationships between data (especially many to one and many to many).

- Document Database: Use this if you want a flexible schema, you primarily have one-to-many relationships, i.e. a tree hierarchy (such as posts with multiple comments), and you are dealing with very high transaction volumes that call for horizontal scaling.

- Key/Value store: Suitable for simple lookups. They are extremely fast and extremely scalable, which makes them an excellent fit for caching.

- Graph Databases: Great for complex relationships and ones that change over time. Data is organized into nodes and edges. Building a recommendation engine for an e-commerce application to implement “frequently bought together” would probably use a Graph Database under the hood.

One of the advantages of Microservices is that each service can pick its own database. A product service with reviews follows a tree-like structure (a one-to-many relationship) and would fit a document database. An inventory service, on the other hand, where each product can belong to one or more categories and each order can contain multiple products (many-to-many relationships), might use a relational database.

Messaging:

The difference between an event and a message is that with a message (apart from it usually carrying a larger payload), the producer expects the message to be processed, while an event is just a broadcast with no expectation that anyone acts on it.

An event is either discrete (such as a notification) or part of a sequence, such as an IoT stream. Streams are good for statistical evaluation. For instance, if you are tracking inventory through a stream of events, you can analyze how much stock has been moved in the last 2 hours.
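As a rough illustration of the stream analysis described above, here is a minimal sketch of a sliding time window over stock-movement events. The class name `StockMovementWindow` and its methods are hypothetical, and the sketch assumes events arrive in timestamp order; a real system would read the events from a stream such as Event Hubs rather than from in-process calls.

```python
from collections import deque
from datetime import datetime, timedelta


class StockMovementWindow:
    """Tracks how many units moved within a sliding time window (hypothetical helper)."""

    def __init__(self, window: timedelta):
        self.window = window
        self._events = deque()  # (timestamp, units), oldest first
        self._total = 0

    def record(self, timestamp: datetime, units: int) -> None:
        # Assumption: events arrive in timestamp order.
        self._events.append((timestamp, units))
        self._total += units

    def units_moved(self, now: datetime) -> int:
        # Evict events older than the window, then report the running total.
        cutoff = now - self.window
        while self._events and self._events[0][0] < cutoff:
            _, units = self._events.popleft()
            self._total -= units
        return self._total
```

Creating the window with `timedelta(hours=2)` and calling `units_moved(now)` answers the "how much stock moved in the last 2 hours" question without rescanning the whole stream.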

Pick:

- Azure Service Bus: If you’re dealing with a message.

- Azure Event Grid: If you’re dealing with discrete events.

- Azure Event Hubs: If you’re dealing with a stream of events.

Many times, a combination of these services is used. Say you have an e-commerce application, and when new orders come in, you send a message to a queue that the inventory system listens on to confirm & update stock. If orders are infrequent, the inventory system will constantly poll an empty queue for no reason. To mitigate that, we can have Event Grid subscribe to the Service Bus queue and trigger the inventory system whenever a new message comes in. Event Grid helps convert this inefficient polling model into an event-driven model.

Identity:

Identity is one of the most critical elements in your application. How you authenticate & authorize users is a considerable design decision. Oftentimes, identity is the first thing that is considered before apps are moved to the cloud. Users need to authenticate to the application, and the application itself needs to authenticate to other services, such as Azure services.

The recommendation is to outsource identity to an identity provider. Storing credentials in your databases makes you a target for attacks. By using an Identity as a Service (IDaaS) solution, you outsource the problem of credential storage to experts who can invest the time and resources in securely managing credentials. Whichever service you choose, make sure it supports passwordless authentication & MFA, which are more secure than passwords alone. OpenID Connect & OAuth 2.0 are the standard protocols for authentication and authorization, respectively.

Code using Cloud Native Design Patterns:

We defined requirements, picked the application architecture, made some technology choices, and now it is time to think about how to design the actual application.

No matter how good the infrastructure is and how good of a technology choice you’ve made, if the application is poorly designed or is not designed to operate in the cloud, you will not meet your requirements.

API Design:

- Basic Principles: The two key principles of API design are that APIs should be platform independent (any client should be able to call the API) and designed for evolution (APIs should be updated without affecting clients, hence proper versioning should be implemented).

- Nouns not verbs: URLs should be built around data models & resources, not actions: /orders not /create-orders . The HTTP method (GET, POST, PUT, DELETE) expresses the action.

- Avoid Chatty APIs: For instance, instead of fetching product details, pricing, and reviews separately, fetch them in one call. But there is a balance here: ensure the response doesn’t become too big, otherwise latency will increase.

- Filtering and Pagination: It is generally not efficient to do a GET Request over a large number of resources. Hence, your API should support a filtering logic, pagination (instead of getting all orders, get a specific number), and sorting (avoid doing that on the client).

- Versioning: Maintaining multiple versions can increase complexity and costs since you have to manage multiple versions of documentation, test suites, deployment pipelines, and possibly different infrastructure configurations. It also requires a clear deprecation policy so clients know how long v1 will be available and can plan their migration to v2. Still, it is well worth the effort to ensure updates do not disrupt your clients. Versioning is often done in the URI, in headers, or in query strings.
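To make the filtering, pagination, and sorting point more concrete, here is a minimal sketch of the server-side logic behind a hypothetical `GET /v1/orders` endpoint. The `Order` type, the in-memory `ORDERS` list, and the parameter names (`status`, `sort_by`, `page`, `page_size`) are assumptions for illustration only; a real service would push the same logic down into database queries.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    id: int
    status: str
    total: float


# Hypothetical in-memory data standing in for a database table.
ORDERS = [
    Order(id=i, status="shipped" if i % 2 == 0 else "processing", total=10.0 * i)
    for i in range(1, 11)
]


def list_orders(status: Optional[str] = None, sort_by: str = "id",
                descending: bool = False, page: int = 1, page_size: int = 3) -> dict:
    """Emulates GET /v1/orders?status=...&sort_by=...&page=...&page_size=..."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    if sort_by not in {"id", "total"}:
        raise ValueError(f"unsupported sort field: {sort_by}")

    # Filter on the server so the client never downloads rows it will discard.
    matches = [o for o in ORDERS if status is None or o.status == status]
    # Sort on the server so every page follows one consistent ordering.
    matches.sort(key=lambda o: getattr(o, sort_by), reverse=descending)

    start = (page - 1) * page_size
    return {
        "items": matches[start:start + page_size],
        "page": page,
        "page_size": page_size,
        "total_items": len(matches),
        "has_next": start + page_size < len(matches),
    }
```

Returning `total_items` and `has_next` alongside the page lets clients build paging controls without issuing an extra count request.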

Design for Resiliency:

In the cloud, failures are inevitable. This is the nature of distributed systems: we rarely run everything on one node. Here is a checklist of things you need to account for:

- Retry Mechanism: A Retry mechanism is a way to deal with transient failures and you need to have retry logic in your code. If you are interacting with Azure services, most of that logic is embedded in the SDKs.

- Circuit Breaking: Retry logic is great for transient failures, but not for persistent failures. For persistent failures, implement circuit breaking. In practical terms, we monitor calls to a service and, once failures cross a certain threshold, we stop sending further calls for a period of time so the service can recover.

- Throttling: The reality is, sometimes the system cannot keep up even if you have auto-scaling set up, so you need throttling to ensure your service still functions. You can implement throttling in your code logic or use a service like Azure API Management.
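The retry and circuit-breaking items in the checklist above can be sketched in a few lines. This is a minimal illustration, not a production implementation: `TransientError`, `CircuitOpenError`, `retry`, and `CircuitBreaker` are hypothetical names, the thresholds are arbitrary, and in practice you would more likely rely on the retry policies built into the SDKs or on an established resilience library.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 503)."""


class CircuitOpenError(Exception):
    """Raised when the breaker refuses a call without contacting the service."""


def retry(operation, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Retry transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the failure to the caller.
            # The delay doubles each attempt; jitter spreads out retries from many clients.
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay))


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed.

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open, call skipped")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # Open (or re-open) the circuit.
            raise
        # A success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The two are usually layered: the retry handles short blips, and the breaker stops the caller from hammering a service that is failing persistently.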

Design for Asynchronous Communication:

Asynchronous communication is when services talk to each other using events or messages. The web was built on synchronous (direct) request-response communication, but this becomes problematic when you start chaining multiple requests, such as in a distributed transaction, because if one call fails, the entire chain fails.

Say we have a distributed transaction in a microservices application. A customer places an order that is processed by the order service, which makes a direct call to the payment service to process the payment. If the payment service is down, the entire chain fails and the order does not go through. With asynchronous communication, a customer places an order, the order service puts an order request in a queue, and when the payment service is up again, the request is processed and an order confirmation is sent to the user. As you can see, such small design decisions can have a massive impact on revenue.
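The order and payment scenario above can be sketched with an in-process queue standing in for a message broker such as Service Bus. All class and function names here (`PaymentService`, `OrderService`, `payment_worker`) are hypothetical, and requeueing by hand is a simplification: a real broker would typically redeliver an unacknowledged message on its own.

```python
import queue
from dataclasses import dataclass, field


@dataclass
class PaymentService:
    available: bool = True
    processed: list = field(default_factory=list)

    def charge(self, order_id: str) -> None:
        if not self.available:
            raise ConnectionError("payment service is down")
        self.processed.append(order_id)


class OrderService:
    def __init__(self, order_queue):
        self.order_queue = order_queue

    def place_order(self, order_id: str) -> str:
        # Accept the order without calling the payment service directly.
        self.order_queue.put(order_id)
        return "accepted"


def payment_worker(order_queue, payments: PaymentService) -> int:
    """Drain the queue; stop and keep the message if payments are unavailable."""
    handled = 0
    while True:
        try:
            order_id = order_queue.get_nowait()
        except queue.Empty:
            return handled
        try:
            payments.charge(order_id)
            handled += 1
        except ConnectionError:
            order_queue.put(order_id)  # Requeue so the order is not lost.
            return handled
```

With the payment service down, `place_order` still returns "accepted" and the order waits in the queue; running `payment_worker` again after recovery processes it.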

Design for Scale:

You might think scalability is as simple as defining a set of auto-scaling rules. However, your application should first be designed to become scalable.

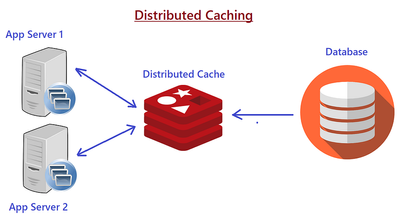

The most important design principle is having a stateless application, which means logic should not depend on a value stored on a specific instance such as a user session (which should be stored in a shared cache). The simple rule here is: don’t assume your request will always hit the same instance.
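A minimal sketch of the stateless rule: two application instances share one session store, so either can serve the next request. `SharedSessionStore` is a hypothetical in-process stand-in for an external cache, and `AppInstance` represents one replica behind a load balancer.

```python
class SharedSessionStore:
    """Stand-in for an external cache shared by all instances (hypothetical)."""

    def __init__(self):
        self._data = {}

    def get(self, session_id):
        # Unknown sessions start with an empty cart.
        return self._data.get(session_id, {"cart": []})

    def set(self, session_id, session):
        self._data[session_id] = session


class AppInstance:
    """One replica of the web app. It keeps no per-user state of its own."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def add_to_cart(self, session_id, item):
        # Load state from the shared store, change it, and write it back.
        session = self.store.get(session_id)
        session["cart"] = session["cart"] + [item]
        self.store.set(session_id, session)
        return session["cart"]
```

Because neither instance holds the cart in local memory, a request that lands on the second instance sees the item added through the first.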

Consider using connection pooling as well, which is re-using established database connections instead of creating a new connection for every request. It also helps avoid SNAT exhaustion especially when you’re operating at scale.
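Connection pooling can be illustrated with a small fixed-size pool. This is only a sketch: `ConnectionPool` and `handle_request` are hypothetical names, and an in-memory SQLite database stands in for a real database server. Most database drivers and ORMs ship their own pooling, which is usually the better choice.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Opens a fixed number of connections up front and hands them out on demand."""

    def __init__(self, size, connect):
        self._idle = queue.Queue(maxsize=size)
        self.created = 0
        for _ in range(size):
            self._idle.put(connect())
            self.created += 1

    @contextmanager
    def connection(self, timeout=5.0):
        # Blocks until a connection is free instead of opening a new one.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # Return the connection for the next request.


# Assumption: SQLite in memory stands in for the real database.
pool = ConnectionPool(size=2, connect=lambda: sqlite3.connect(":memory:"))


def handle_request(n):
    with pool.connection() as conn:
        return conn.execute("SELECT ? + 1", (n,)).fetchone()[0]
```

However many requests `handle_request` serves, only the two connections created at startup are ever opened, which is what keeps outbound connection (and SNAT port) usage bounded.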

Design for Loose Coupling:

An application consists of various pieces of logic & components working together. If these are tightly coupled, it becomes hard to change the system, i.e. changing one component means coordinating with multiple other components.

Loosely coupled services means you can change one service without changing the other. Asynchronous communication, as explained previously, helps with designing loosely coupled components.

Also, using a separate database per microservice is an example of loose coupling (changing the schema of one DB will not affect any other service, for instance).

Lastly, ensure services can be deployed individually without having to re-deploy the entire system.

Design for Caching:

If performance is crucial, then caching is a no-brainer. Cache management is the responsibility of the client application or an intermediate server.

Caching involves storing frequently accessed data in fast storage to reduce latency and backend load. It’s suitable for data that’s often read but seldom changed, like product prices, because it minimizes database queries. Managing cache expiration is critical to ensure data remains current. Techniques include setting Cache-Control max-age headers for client-side caching and using ETags to identify when cached data should be refreshed.
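As a rough sketch of how max-age and ETags work together, the function below models a hypothetical `GET /products/{id}` handler. `make_etag` and `get_product` are illustrative names, and the ETag is simply a hash of the response body, which is one common approach rather than the only one.

```python
import hashlib
import json


def make_etag(payload: dict) -> str:
    # Hash a stable serialization so identical content yields an identical tag.
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def get_product(payload: dict, if_none_match=None, max_age=300):
    """Returns (status, headers, body) for a hypothetical GET /products/{id}."""
    etag = make_etag(payload)
    headers = {"ETag": etag, "Cache-Control": f"max-age={max_age}"}
    if if_none_match == etag:
        return 304, headers, None  # Client copy is still valid; send no body.
    return 200, headers, payload
```

The client reuses its copy until max-age elapses, then revalidates by sending the stored ETag in `If-None-Match`; a 304 response means it can keep the cached body.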

Server-Side Caching: Server-side caching often employs Redis, an in-memory data store that is ideal for fast data retrieval because it keeps data in RAM, and that is not intended as a primary long-term store. Redis enhances performance by caching data that doesn’t change frequently, using key-value pairs and supporting expiration times (TTLs) for data.

Client-Side Caching: Content Delivery Networks (CDNs) are often used to cache data closer to frontend clients, most often static data. In web lingo, static data refers to files that don’t change per request, such as CSS, images, and HTML. These are often stored in CDNs, which are essentially a set of reverse proxies (spread around the world) between the client and the backend. The client usually requests these static resources from CDN URLs (served by what are sometimes called edge servers), and the CDN returns the static content along with TTL headers. When the TTL expires, the CDN requests the static resources again from the origin.
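The server-side pattern described here is often called cache-aside: check the cache first, fall back to the database on a miss, then populate the cache with a TTL. Below is a minimal sketch in which `TTLCache` is a hypothetical in-process stand-in for Redis and `load_from_db` is a placeholder for the real query.

```python
import time


class TTLCache:
    """Tiny in-process stand-in for a cache with per-key expiry (hypothetical)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # Expired: treat as a miss.
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._entries[key] = (value, self._clock() + ttl_seconds)


def get_price(product_id, cache, load_from_db, ttl_seconds=60):
    """Cache-aside: try the cache, fall back to the database, then populate."""
    key = f"price:{product_id}"
    price = cache.get(key)
    if price is None:
        price = load_from_db(product_id)
        cache.set(key, price, ttl_seconds)
    return price
```

The TTL bounds how stale a price can be: a shorter TTL means fresher data at the cost of more database reads.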

Well-Architected Framework:

You’ve defined business objectives & requirements, made technology choices, and coded your application using cloud native design patterns.

This section is about how you architect your solution in the cloud, guided by a cloud-agnostic framework known as the Well-Architected Framework, which encompasses reliability, performance, security, operational excellence, and cost optimization.

Note that many of these are related. Having scalability will help with reliability, performance, and cost optimization. Loose coupling between services will help with both reliability and performance.

Reliability:

A reliable architecture must be fault tolerant and must survive outages automatically. We discussed how to increase reliability in our application code through retry mechanisms, circuit breaking patterns, etc. This section is about how we ensure reliability is at the forefront of our architecture.

Here is a summary of things to think about when it comes to reliability:

- Avoid Overengineering: Simplicity should be a guiding principle. Only add elements to your architecture if they directly contribute to your business goals. For instance, if having a complex recommendation engine doesn’t significantly boost sales, consider a simpler approach. Another thing to consider is avoiding re-inventing the wheel: if you can integrate a third-party payment system, why invest in building your own?

- Redundancy: This helps minimize single points of failure. Depending on your business requirements, implement zonal and/or regional redundancy. For regional redundancy, choose appropriately between active-active (lowest RTO) and active-passive (warm, cold, or redeploy), and always pair automatic failover with manual failback to prevent switching back to an unhealthy region. Conduct a failure mode analysis (analyzing risk, likelihood, and effect in case things go wrong) so that every Azure component in your architecture accounts for redundancy.

- Backups: Every stateful part of your system such as your database should have automatic immutable backups to meet your RPO objectives. If your RPO is 1 hour (any data loss should not exceed 1 hour), you might need to schedule backups every 30 minutes. Backups are your safety archives in case things such as bugs or security breaches happen, but for immediate recovery from a failed component, consider data replication.

- Chaos Engineering: Deliberately injecting faults into your system allows you to discover how it responds to failures before they become real problems for your customers.
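For the Backups item above, it may help to see why a 1 hour RPO can call for backups more often than once an hour. The sketch below uses a simplified model that is an assumption, not a rule from any particular service: a backup captures the data as of its start time, and a failure can strike just before the next backup completes, so the worst-case loss is the backup interval plus the backup duration.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta, backup_duration: timedelta) -> timedelta:
    # Simplified model: the last completed backup reflects the data as of its
    # start, and the failure happens just before the next backup finishes.
    return backup_interval + backup_duration


def meets_rpo(rpo: timedelta, backup_interval: timedelta, backup_duration: timedelta) -> bool:
    return worst_case_data_loss(backup_interval, backup_duration) <= rpo
```

Under this model, hourly backups that take 10 minutes can lose up to 70 minutes of data and miss a 1 hour RPO, while 30-minute backups stay within it.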

Performance:

The design patterns you implement in your application will influence performance. This section focuses more on the architecture.

- Capacity Planning: You always need to start here. How do you pick the resources (CPU, memory, storage, bandwidth) you need to satisfy your performance requirements? The best place to start is by conducting load testing on expected traffic and peak loads to validate whether your capacity meets your performance needs. Most importantly, capacity planning is an ongoing process that should be reviewed and adjusted regularly.

- Consider Latency: Services communicating across regions or availability zones can face increased latency. Also, ensure services are placed closer to users. Lastly, use private backbone networks (Private endpoints & service endpoints) instead of traversing the public internet.

- Scale Applications: You need to choose the right auto-scaling metrics. Queue length has always been a popular metric because it shows how long the backlog of tasks is. However, a better metric is critical time, which is the total time from when a message is sent to a queue until it is processed. Queue length is important, but critical time actually shows you how efficiently the backend is processing the backlog. For an e-commerce platform that sends confirmation emails based on orders in a queue, you can have 50k orders queued, but if the critical time is 500ms (for the oldest message), we can see that the worker is keeping up despite all these messages.

- Scale Databases: A big chunk of performance problems can usually be attributed to databases. If it is a non-relational database, horizontal scaling is straightforward, but you often need to define a partition key to split the data (for instance, if customer address is used as the partition key, users are split into partitions by address). If it is a relational database, partitioning can also be done through sharding (splitting tables across separate physical nodes), but it is more challenging to implement.
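The critical time metric from the Scale Applications item can be turned into a scaling decision. The sketch below computes critical time for a message and applies a simple proportional rule; the function names, the 2 second target, and the worker cap are all assumptions chosen for illustration, not values from any autoscaler.

```python
import math
from datetime import datetime, timedelta


def critical_time(enqueued_at: datetime, processed_at: datetime) -> timedelta:
    """Time from a message being sent to the queue until processing finished."""
    return processed_at - enqueued_at


def desired_workers(current_workers: int, observed: timedelta, target: timedelta,
                    max_workers: int = 20) -> int:
    """Scale in proportion to how far the observed critical time is from the target."""
    if current_workers < 1:
        raise ValueError("current_workers must be at least 1")
    ratio = observed / target  # timedelta / timedelta yields a float
    wanted = math.ceil(current_workers * ratio)
    return max(1, min(max_workers, wanted))
```

With a 2 second target, an observed critical time of 500ms suggests scaling in even if the queue is long, while 6 seconds suggests tripling the workers.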

Security:

Security is about embracing two mindsets: zero trust and defense in depth.

- Zero Trust: In the past, we used to have all components in the same network, so we trusted any component that was within the network. Nowadays, we have the internet and various components that connect to our application from anywhere. Zero trust is about verifying explicitly: all users & applications must authenticate & authorize even if they are inside the network. If an application is trying to access a database in the same network, it should authenticate & authorize. Azure Managed Identities, for instance, support this mechanism without exposing secrets. The second principle is using least privilege by assigning Just-in-Time (JIT) and Just-Enough-Access (JEA) permissions to users and applications. Lastly, always assume breach. Even if an attacker has penetrated our network, how can we minimize the damage? A common methodology is to use network segmentation: only open specific ports between subnets and only allow certain subnets to communicate with each other. Just because components are all in the same network does not mean you should allow all communication between them.

- Defense in Depth: Everything in your application & architecture should embed security. Your data should be encrypted in transit & at rest, your applications should not store secrets or use vulnerable dependencies or insecure code practices (consider using a static security analysis tool to detect these), your container images should be scanned, etc.

Operational Excellence:

While the application, infrastructure, and architecture layers are important, we also need to ensure we have the operations in place to support our workloads. I boiled them down into four main practices.

- DevOps Practice: A set of principles & tooling that allows automated, reliable, and predictable tests to ensure faster & more stable releases. Testing & Security should be shifted to the left, and zero downtime must be achieved through blue/green deployments or canary releases.

- Everything as Code: Infrastructure should be defined using declarative code that is stored in source control repository for version control & rollbacks. IaC allows more reliable and re-usable infrastructure deployments.

- Platform Engineering Practice: Outside of buzz words, this is when a centralized platform team creates a set of self-service tools (utilizing preconfigured IaC templates under the hood) for developers to deploy resources & experiment in a more controlled environment.

- Observability: This is about gathering metrics, logs, and traces to provide our engineers with better visibility into our systems and, in turn, fix issues faster. I wrote another article about observability, so I won’t go into detail here. Make sure to check it out.

Cost Optimization:

If costs are not optimized, your solution is not well architected. It is as simple as that. Here are things you should be thinking about:

- Plan for Services: Every service has a set of cost optimization best practices. For instance, if you are using Azure Cosmos DB, ensure you implement proper partitioning. If you are using Azure Kubernetes Service, ensure you define resource limits on Pods.

- Accountability: All resources should be tracked back to their owners and divisions to be linked back to a cost center. We do that by enforcing tags.

- Utilize Reserved Instances: If you know you will have a predictable load on a production application that will always be on, utilize Reserved Instances. Most major cloud providers offer a similar option.

- Leverage Automation: Switch off resources during periods with no traffic.

- Auto-scale: Leverage dynamic auto-scaling to use fewer resources during off-peak times.

Conclusion

I hope this overview has captured the essentials of building cloud-native applications, highlighting the shift required from traditional development practices. While this guide is not exhaustive, I’m open to exploring any specific areas in more detail based on your interest. Just let me know in the comments, and I’ll gladly delve deeper.
