Harness the power of scaling using KEDA add-on for AKS which is now generally available.

We are thrilled to announce the General Availability of the KEDA add-on for AKS, which lets you autoscale your applications easily and seamlessly. The KEDA add-on can be enabled on a new or an existing Azure Kubernetes Service (AKS) cluster by using an ARM template or the Azure CLI.

The KEDA add-on provides a fully managed and supported installation of KEDA that is integrated with AKS. It gives you a rich catalog of 60+ KEDA scalers with which to scale your applications on your AKS cluster.

Microservices encounter unpredictable spikes of high use. Modern microservice infrastructures have several components that require different scale metrics. Scaling based on memory or processor utilization alone is insufficient in this landscape.

Previously, customers scaled pods and deployments using Metrics Server and the Horizontal Pod Autoscaler (HPA), which required them to specify CPU requests and limits. When the CPU rule was triggered, the HPA scaled out pods to meet that demand. Although this is better than scaling manually in both directions, an HPA does not take into account the number of events waiting at an event source. What if the app was tied to a Service Bus or storage queue? The AKS administrator would have to scale these workloads manually.

The Kubernetes ecosystem provides a variety of metrics servers that surface metrics from systems ranging from Prometheus to Datadog and similar technologies, but this is a lot of scaling infrastructure to manage. Kubernetes also allows you to install only one of these metrics servers, which limits the use cases.

Giving customers several ways to scale based on their application needs, and enabling them to use compute only when needed to build more sustainable platforms, would be an incredible value add.

Kubernetes-based Event Driven Autoscaler (KEDA) is an application autoscaler that strives to make application autoscaling on Kubernetes dead-simple. With KEDA, you can drive the scaling of Deployments, Jobs, or practically any custom resource that implements the /scale subresource in Kubernetes. Scaling is based on the number of events waiting to be processed.

KEDA started as a partnership between Microsoft and Red Hat in 2019, joined the CNCF as a sandbox project on March 12, 2020, moved to the Incubating maturity level on August 18, 2021, and then moved to the Graduated maturity level on August 22, 2023. The Kubernetes community has embraced and adopted KEDA over time, and it also powers Microsoft products such as AKS and Azure Container Apps.

Let’s see it in action

The KEDA community provides a great example of how you can autoscale a .NET Core worker that processes an Azure Service Bus queue.

We will use the Azure CLI to enable KEDA on an AKS cluster, which lets you use the existing KEDA capabilities to autoscale your application.

- You can now easily enable KEDA when creating an AKS cluster:

az aks create \
  --resource-group myResourceGroup \
  --name myAKSCluster \
  --enable-keda

- If you already have a cluster, you can use az aks update to opt in to KEDA:

az aks update \
  --resource-group myResourceGroup \
  --name myAKSCluster \
  --enable-keda

- You can verify if KEDA is installed by using the following command:

az aks show -g "myResourceGroup" --name myAKSCluster --query "workloadAutoScalerProfile.keda.enabled"
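The check above can be wrapped in a small helper for scripts. This is a minimal sketch, not part of the original walkthrough: the function name `keda_addon_enabled` is hypothetical, and it assumes the Azure CLI is installed and logged in and that `--output tsv` prints the boolean as the literal text `true`.

```shell
# Hypothetical helper: succeeds only when the KEDA add-on reports enabled.
# $1 = resource group, $2 = AKS cluster name
keda_addon_enabled() {
  state="$(az aks show \
    --resource-group "$1" \
    --name "$2" \
    --query "workloadAutoScalerProfile.keda.enabled" \
    --output tsv)"
  # Anything other than the literal "true" (including empty output) counts as disabled.
  [ "$state" = "true" ]
}

# Usage (assumed names from the commands above):
#   keda_addon_enabled myResourceGroup myAKSCluster && echo "KEDA add-on is enabled"
```

Returning an exit status rather than printing text lets the helper be used directly in `if` statements or CI steps.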

Your AKS cluster is ready to start autoscaling your applications! Head over to KEDA’s official sample to get started, just as you would with any KEDA installation.
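To give a sense of what a scaling rule looks like once the add-on is enabled, here is a minimal sketch of a KEDA ScaledObject for a Service Bus queue. It is not taken from the official sample: the function name `render_scaledobject`, the replica bounds, the `messageCount` threshold, and the `SERVICEBUS_CONNECTION_STRING` environment variable (assumed to be defined on the target Deployment's container) are all illustrative assumptions.

```shell
# Hypothetical helper: prints a ScaledObject manifest to stdout.
# $1 = name of the Deployment to scale, $2 = Service Bus queue name
render_scaledobject() {
  cat <<EOF
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ${1}-scaler
spec:
  scaleTargetRef:
    name: ${1}
  minReplicaCount: 0        # assumed: scale to zero when the queue is empty
  maxReplicaCount: 10       # assumed upper bound
  triggers:
    - type: azure-servicebus
      metadata:
        queueName: ${2}
        messageCount: "5"   # assumed target number of messages per replica
        connectionFromEnv: SERVICEBUS_CONNECTION_STRING
EOF
}

# Usage (assumed names):
#   render_scaledobject order-processor orders | kubectl apply -f -
```

Printing the manifest instead of applying it directly makes it easy to review or commit the YAML before it reaches the cluster.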

Workload Identity

If you wish to connect application workloads running on AKS using a managed identity, you need to enable the workload identity add-on on the AKS cluster that has the KEDA add-on enabled.

1. Enable the workload identity add-on on the AKS cluster, and then enable the KEDA add-on

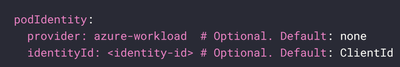

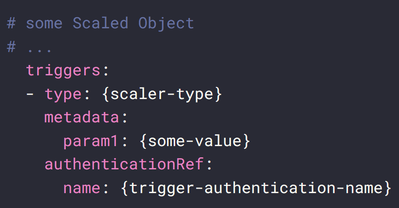

2. Deploy a TriggerAuthentication in AKS with the provider and identity ID

3. Reference the TriggerAuthentication name in the corresponding scaled objects under the authenticationRef config property
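Steps 2 and 3 could look roughly like the sketch below, which prints a TriggerAuthentication using KEDA's `azure-workload` pod identity provider together with a ScaledObject that points at it through `authenticationRef`. The function name `render_workload_identity_scaling`, the resource naming scheme, and the `messageCount` value are assumptions for illustration; the identity ID is whatever client ID your managed identity has.

```shell
# Hypothetical helper: prints a TriggerAuthentication and a ScaledObject that references it.
# $1 = Deployment name, $2 = queue name, $3 = Service Bus namespace, $4 = managed identity client ID
render_workload_identity_scaling() {
  cat <<EOF
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: ${1}-trigger-auth
spec:
  podIdentity:
    provider: azure-workload
    identityId: ${4}
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ${1}-scaler
spec:
  scaleTargetRef:
    name: ${1}
  triggers:
    - type: azure-servicebus
      metadata:
        queueName: ${2}
        namespace: ${3}
        messageCount: "5"   # assumed target number of messages per replica
      authenticationRef:
        name: ${1}-trigger-auth
EOF
}

# Usage (all values are placeholders):
#   render_workload_identity_scaling order-processor orders my-sb-namespace "$CLIENT_ID" | kubectl apply -f -
```

The ScaledObject carries no connection string here; the scaler authenticates with the identity named in the TriggerAuthentication instead.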

If you're using Microsoft Entra Workload ID and you enable KEDA before Workload ID, you need to restart the KEDA operator pods so the proper environment variables can be injected:

- Restart the pods by running kubectl rollout restart deployment keda-operator -n kube-system

- Obtain KEDA operator pods using kubectl get pod -n kube-system and find pods that begin with keda-operator.

- Verify successful injection of the environment variables by running kubectl describe pod <keda-operator-pod> -n kube-system. Under Environment, you should see values for AZURE_TENANT_ID, AZURE_FEDERATED_TOKEN_FILE, and AZURE_AUTHORITY_HOST.
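The final verification step can be scripted instead of read by eye. The sketch below is an assumption-laden convenience, not an official tool: the function name `missing_workload_identity_vars` is hypothetical, and it simply searches the text of `kubectl describe pod` for the three variable names listed above.

```shell
# Hypothetical helper: reads `kubectl describe pod` output on stdin and
# prints the name of every expected variable that does not appear in it.
missing_workload_identity_vars() {
  description="$(cat)"
  for var in AZURE_TENANT_ID AZURE_FEDERATED_TOKEN_FILE AZURE_AUTHORITY_HOST; do
    # Echo the variable name only when grep cannot find it.
    printf '%s\n' "$description" | grep -q "$var" || echo "$var"
  done
}

# Usage (substitute the real pod name):
#   kubectl describe pod <keda-operator-pod> -n kube-system | missing_workload_identity_vars
```

Empty output means all three variables were found; any printed name suggests the operator pods still need a restart.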

For more details check out the code sample.

Want to learn more?

KEDA makes application autoscaling simple, and it is now even simpler for Azure customers with the managed offering through the AKS add-on. Stop worrying about the infrastructure and focus on scaling your applications.

Refer to how the KEDA add-on can help you scale applications, and the integrations it provides.

If you want to post questions or submit feature asks then drop them here

References

Kubernetes Event-driven Autoscaling (KEDA) (Preview) - Azure Kubernetes Service | Microsoft Docs
