Apps on Azure Blog

Use Nginx as a reverse proxy in Azure Container App

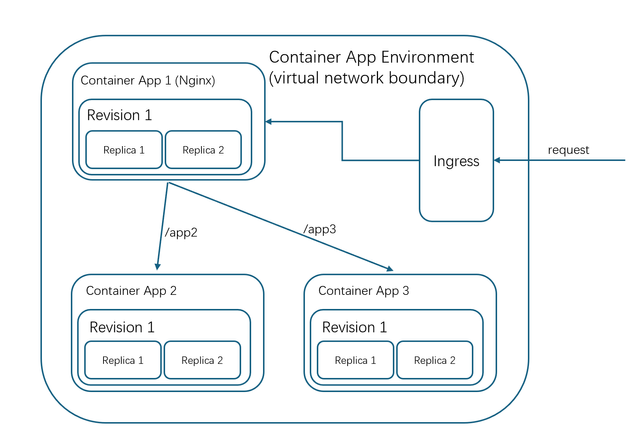

A customer recently needed to host multiple container apps in the same Azure Container Apps environment to optimize resource usage. To keep traffic to those apps inside the environment, an Nginx container app was used as the single public endpoint and reverse proxy, directing all inbound traffic to the appropriate container app based on the URL. The traffic flow for this architecture is: clients reach the Nginx container app through its external ingress, and Nginx forwards each request to an internal-only container app in the same environment.

To demonstrate how to achieve this, I created a simple proof-of-concept (POC) and would like to share the configuration details in case anyone is interested in creating a similar system.

Prerequisites

As stated in the official Azure Container Apps documentation, ingress can be enabled as one of two types, External or Internal, and it supports both the HTTP and TCP protocols.

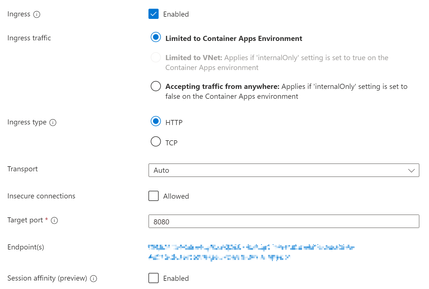

Using this knowledge, I developed two basic container apps with Python Flask that simply display a "hello world" message. Next, I created an Nginx container app to act as a reverse proxy that forwards requests to the appropriate container app. All three container apps are deployed into the same Azure Container Apps environment. The Nginx container app accepts incoming traffic from "anywhere" (External), while the two basic container apps accept only traffic "limited to within the Container App environment" (Internal).
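The post does not include the source of the two Flask apps. As a rough stand-in, the sketch below implements the same "hello world" behavior with Python's standard-library WSGI interface, which is the interface Flask applications expose. The function names and port 8080 are hypothetical; the port must match whatever target port you configure for the container app's ingress.

```python
from wsgiref.simple_server import make_server


def hello_app(environ, start_response):
    """Minimal WSGI app that answers every request with "hello world"."""
    body = b"hello world"
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def serve(port: int = 8080) -> None:
    """Run the app on all interfaces; 8080 is a hypothetical ingress target port."""
    with make_server("0.0.0.0", port, hello_app) as server:
        server.serve_forever()
```

In a container, serve() would be the entry point; a Flask app with a single route returning the same string behaves equivalently from Nginx's point of view.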

Scenario 1: HTTP ingress

My upstream container app only allows HTTP ingress.

To establish an HTTP connection between the upstream container app and my Nginx container app, I created the following default.conf file. It forwards all requests for the /app2 path to the container app hosted at https://app2.internal.clustername.region.azurecontainerapps.io/ and serves a default HTML page when users access the root path.

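```nginx
server {
    listen 80;
    server_name _;

    # Forward /app2 requests to the internal-only app2 container app
    location /app2 {
        proxy_pass https://app2.internal.clustername.region.azurecontainerapps.io/;
        # Send the upstream hostname via SNI during the TLS handshake
        proxy_ssl_server_name on;
        proxy_http_version 1.1;
    }

    # Serve a static default page for everything else
    location / {
        root /usr/share/nginx/html;
        index index.html;
    }
}
```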

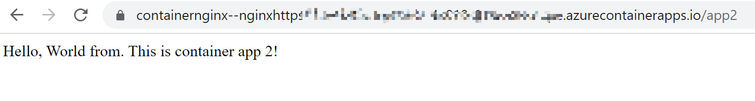

Result:

Reference doc: Nginx HTTP Load Balancing.
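Path-based routing in the config above follows two NGINX rules: among prefix locations, the longest matching prefix wins, and when proxy_pass includes a URI (here the trailing /), the part of the request URI that matched the location is replaced by that URI. The Python sketch below models only those two rules (no regex locations, no URI normalization) to show which upstream URL a request ends up at. Location, pick_location, and upstream_url are hypothetical names used for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Location:
    prefix: str                       # prefix location, e.g. "/app2"
    proxy_pass: Optional[str] = None  # upstream URL, or None for locally served content


def pick_location(uri: str, locations: list[Location]) -> Location:
    """Return the location with the longest prefix that matches uri (prefix locations only)."""
    matches = [loc for loc in locations if uri.startswith(loc.prefix)]
    if not matches:
        raise LookupError(f"no location matches {uri!r}")
    return max(matches, key=lambda loc: len(loc.prefix))


def upstream_url(uri: str, loc: Location) -> str:
    """Build the URL NGINX would request upstream for uri under loc."""
    if loc.proxy_pass is None:
        raise ValueError(f"location {loc.prefix!r} is not proxied")
    scheme, _, rest = loc.proxy_pass.partition("://")
    host, slash, path = rest.partition("/")
    base_uri = slash + path  # URI part of proxy_pass; "" if proxy_pass has none
    if not base_uri:
        # No URI in proxy_pass: the original request URI is passed unchanged.
        return f"{scheme}://{host}{uri}"
    # URI in proxy_pass: the matched prefix is replaced by that URI.
    return f"{scheme}://{host}{base_uri}{uri[len(loc.prefix):]}"
```

One consequence worth checking in your own setup: with location /app2 and a trailing / in proxy_pass, a request for /app2/hello reaches the backend as //hello, and /app2foo also matches (it becomes /foo). Declaring the location as /app2/ avoids both; NGINX then answers a bare /app2 with a 301 redirect to /app2/.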

Scenario 2: TCP ingress

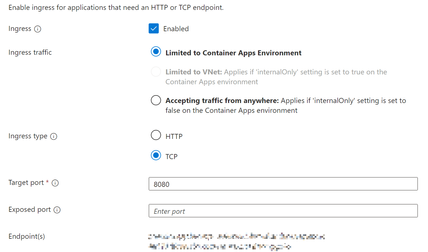

My upstream container app only allows TCP ingress.

According to the Nginx documentation (Nginx TCP Load Balancing), TCP proxying must be configured in a stream {} block, for example:

```nginx
stream {
    upstream rtmp_servers {
        least_conn;  # send new connections to the server with the fewest active ones
        server 10.0.0.2:1935;
        server 10.0.0.3:1935;
    }

    server {
        listen 1935;
        proxy_pass rtmp_servers;
    }
}
```
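The least_conn directive in the example above sends each new connection to the upstream server with the fewest active connections, taking server weights into account; per the Nginx documentation, ties are resolved with weighted round-robin. The sketch below is a simplified Python model of that policy (plain round-robin among ties), meant only to illustrate the selection logic. Backend, LeastConnBalancer, acquire, and release are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    address: str
    weight: int = 1
    active: int = 0  # connections currently open to this backend


class LeastConnBalancer:
    """Simplified least_conn: fewest active connections per unit of weight wins.

    Ties are broken with plain round-robin here (NGINX uses weighted round-robin).
    """

    def __init__(self, backends: list[Backend]):
        self.backends = backends
        self._next = 0  # round-robin cursor, used only to break ties

    def acquire(self) -> Backend:
        """Pick a backend for a new connection and count it as active."""
        def load(b: Backend) -> float:
            return b.active / b.weight

        lowest = min(load(b) for b in self.backends)
        tied = [b for b in self.backends if load(b) == lowest]
        chosen = tied[self._next % len(tied)]
        self._next += 1
        chosen.active += 1
        return chosen

    def release(self, backend: Backend) -> None:
        """Call when a connection to backend closes."""
        backend.active -= 1
```

Because the balancer tracks open connections rather than requests, it suits long-lived TCP sessions such as the RTMP streams in the example.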

However, the stream {} block must be defined at the same level as the http {} block in the nginx.conf file. The nginx.conf shipped with the official nginx image includes /etc/nginx/conf.d/*.conf inside the http {} block, so if you add your stream {} configuration to default.conf the same way we added the HTTP configuration above, the Nginx container fails to start with the following message:

"nginx: [emerg] "stream" directive is not allowed here in /etc/nginx/conf.d/default.conf"

To avoid this error, edit nginx.conf so that default.conf is included outside the http {} block, at the same level as http {}. Keep in mind that files included at this top level cannot contain HTTP server {} blocks like the one in Scenario 1; those must stay inside http {}.

nginx.conf is the main configuration file for NGINX. It contains directives that specify how NGINX should behave, and it controls the behavior of the entire NGINX process. This file typically includes configuration blocks for HTTP, mail, and stream servers.

default.conf is an example configuration file that can be included in nginx.conf to specify the configuration for a specific HTTP server. It typically specifies settings such as the server name, listen ports, SSL/TLS certificates, and location blocks.
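Because this error only shows up when the container starts, it can help to check block placement before building the image. The rough sketch below tracks brace nesting in an Nginx-style config and reports whether a stream {} block ended up nested inside another block, which is what happens when default.conf is included inside http {}. It is not a real Nginx parser (it ignores quoting and does not follow include directives), and block_paths and stream_misplaced are hypothetical names; running nginx -t inside the container remains the authoritative check.

```python
import re

# Tokens: braces and semicolons, or runs of any other non-whitespace characters.
TOKEN = re.compile(r"[{};]|[^\s{};]+")


def block_paths(conf: str):
    """Yield (block_name, enclosing_block_names) for each block opened in the config.

    Rough sketch: strips # comments, but ignores quoting and does not follow include.
    """
    conf = re.sub(r"#[^\n]*", "", conf)
    stack: list[str] = []
    words: list[str] = []
    for tok in TOKEN.findall(conf):
        if tok == "{":
            name = words[0] if words else ""
            yield name, tuple(stack)
            stack.append(name)
            words = []
        elif tok == "}":
            if stack:
                stack.pop()
            words = []
        elif tok == ";":
            words = []
        else:
            words.append(tok)


def stream_misplaced(conf: str) -> bool:
    """True if a stream {} block is nested inside another block such as http {}."""
    return any(name == "stream" and parents for name, parents in block_paths(conf))
```

To check a real setup with this sketch, paste the contents of default.conf where the include line sits in nginx.conf and pass the combined text to stream_misplaced.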

Below is my solution:

nginx.conf

```nginx
user nginx;
worker_processes auto;

error_log /var/log/nginx/error.log notice;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;
}

# Included at the top level, outside http {}, so conf.d files may define stream {}
include /etc/nginx/conf.d/*.conf;
```

default.conf

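```nginx
# Top-level stream {} block: proxies raw TCP connections, not HTTP requests
stream {
    upstream mybackend_server {
        # Upstream container app with TCP ingress (host:port as used in this POC)
        server containerapp3:8080;
    }

    server {
        listen 80;
        proxy_pass mybackend_server;
    }
}
```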

Dockerfile:

```dockerfile
FROM nginx:alpine

# Replace the image's main config with one that includes conf.d at the top level
COPY ./nginx.conf /etc/nginx/nginx.conf
COPY ./default.conf /etc/nginx/conf.d/default.conf

EXPOSE 80
```
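Unlike the HTTP scenario, the stream {} server in default.conf does not parse requests; it accepts TCP connections on port 80 and copies bytes to containerapp3:8080 in both directions. The asyncio sketch below illustrates that idea in Python only. It is not how Nginx is implemented: it opens one upstream connection per client and closes both directions as soon as either side reaches end-of-file, which a production proxy would handle more carefully. The function names are hypothetical.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer.

    Simplification: closing the writer tears down that whole connection
    instead of half-closing it.
    """
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # peer went away; fall through and close our side
    finally:
        writer.close()


async def handle_client(client_reader, client_writer, upstream_host, upstream_port):
    """Open one upstream connection per client and relay bytes both ways."""
    try:
        up_reader, up_writer = await asyncio.open_connection(upstream_host, upstream_port)
    except OSError:
        client_writer.close()  # upstream unreachable: drop the client
        return
    await asyncio.gather(
        pipe(client_reader, up_writer),
        pipe(up_reader, client_writer),
    )


async def start_proxy(listen_port: int, upstream_host: str, upstream_port: int) -> asyncio.AbstractServer:
    """Listen on listen_port and forward every TCP connection to the upstream."""
    return await asyncio.start_server(
        lambda r, w: handle_client(r, w, upstream_host, upstream_port),
        host="0.0.0.0",
        port=listen_port,
    )
```

Inside a running event loop, start_proxy(80, "containerapp3", 8080) would mirror the default.conf above; the proxy never looks at the bytes, which is why it works for any TCP protocol.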

I hope this helps! I believe this solution could potentially be applied to other platforms as well.
