Apps on Azure Blog

Securing your AKS deployments - SSL Termination on NGINX ILB using Front Door and Private Link


Recently I have noticed an increase in questions from customers about security practices for AKS deployments, so I have decided to create a series of blogs covering some of the common ways to increase cluster security. I plan to write posts on Azure AD user authentication for microservices, on using Open Service Mesh and Network Policies to secure internal cluster networking, and on the different options for metrics, alerting and telemetry for AKS clusters.

Today, however, I will start by discussing how to use Azure Front Door, Private Link Service and the NGINX Ingress Controller to create a secure ingress to private backend services with SSL termination.

Before we jump into the use case and implementation, it's important to understand the various components if you are unfamiliar with them. I will discuss the benefits of certain technologies as I go, but it is worth taking a quick look at these links as a level set if you need it:

- Azure Front Door - https://learn.microsoft.com/en-us/azure/frontdoor/front-door-overview

- Azure DNS - https://learn.microsoft.com/en-us/azure/dns/dns-overview

- Azure Private Endpoints - https://learn.microsoft.com/en-us/azure/private-link/private-endpoint-overview

- Azure Private Link Service - https://learn.microsoft.com/en-us/azure/private-link/private-link-service-overview

- Azure Load Balancer - https://learn.microsoft.com/en-us/azure/load-balancer/load-balancer-overview

- Nginx Ingress Controller - https://docs.nginx.com/nginx-ingress-controller/intro/overview/

- Azure Kubernetes Service - https://azure.microsoft.com/en-us/products/kubernetes-service

Use Case

A common pattern when deploying AKS is to protect your cluster by restricting network access. This could mean deploying your cluster with a private API server, or using a private VNet and fronting your applications with a public gateway. In this case we will be using an internal AKS cluster deployed in its own VNet without a public load balancer.

This means the only public IP address will be the Kubernetes API server's, which is mainly for ease of setup and demo. If you decided to use a private API server instead, nothing would change apart from requiring a bastion within your VNet to create resources on your cluster.

In this example we will front our AKS cluster directly with Azure Front Door, which will use Private Link Service to ensure a secure connection to our internal Azure load balancer.

This ensures that once requests reach our Front Door, all subsequent traffic is private and routed through the Azure backbone network.

We will also handle SSL Termination with our NGINX Ingress Controller. This allows us to use our own custom certificates to secure our connection and abstract the SSL termination from our application, reducing developer workload and the processing burden on backend services.
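To make the request path concrete, here is a minimal Python sketch that models each hop described above as data. It checks two properties of the design: only the first hop crosses the public internet, and traffic continues as plain HTTP only after the NGINX ingress controller. The hop names and the `Hop`, `public_hops` and `tls_termination_point` helpers are hypothetical and purely illustrative. The sketch does not call any Azure or Kubernetes API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    source: str
    destination: str
    network: str   # "public" (internet) or "private" (Azure backbone / VNet)
    protocol: str  # "HTTPS" or "HTTP"


# Simplified, hypothetical model of the request path in this post.
REQUEST_PATH = [
    Hop("client", "Azure Front Door", "public", "HTTPS"),
    Hop("Azure Front Door", "Private Link Service", "private", "HTTPS"),
    Hop("Private Link Service", "internal load balancer", "private", "HTTPS"),
    Hop("internal load balancer", "NGINX ingress controller", "private", "HTTPS"),
    Hop("NGINX ingress controller", "platforms-clusterip-srv", "private", "HTTP"),
]


def public_hops(path):
    """Return the hops that cross the public internet."""
    return [hop for hop in path if hop.network == "public"]


def tls_termination_point(path):
    """Return the component after which traffic continues as plain HTTP."""
    for previous, current in zip(path, path[1:]):
        if previous.protocol == "HTTPS" and current.protocol == "HTTP":
            return current.source
    return None


print(len(public_hops(REQUEST_PATH)))       # 1
print(tls_termination_point(REQUEST_PATH))  # NGINX ingress controller
```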

Implementation - Setup

To start with, I am using a domain that I purchased from namecheap.com and host in Azure DNS. This setup is outside the scope of this blog; if you want to learn how to use Azure DNS to host your domain, take a look at this link.

I will also store my secrets as built-in Kubernetes Secrets rather than mounting them from Azure Key Vault. I will cover using Key Vault for Kubernetes secrets in a later blog post. Using built-in Kubernetes Secrets in production is not advised.

Prerequisites

- An Azure Kubernetes Service cluster.

- An existing custom domain.

- Azure DNS configured for your domain.

- A valid SSL certificate associated with your domain.

- A .pfx, .crt and .key file for your certificate.

Implementation - Configuration

These configuration files are available at the following Repo: https://github.com/owainow/ssl-termination-private-aks

Azure Kubernetes Service

With our existing Azure Kubernetes cluster up and running we can begin to configure the cluster.

To start with, we need to connect to our cluster. The commands required to connect to your specific cluster can be found in the Azure portal by selecting Connect on the cluster's Overview page.

The first thing we need to deploy is our example application. The image I am deploying is a very simple API I created that supports a few different request methods. The image is public, so feel free to use it for your testing. The important thing to know about this application is that it is only configured for HTTP on port 80.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/name: platforms
  name: platforms
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: platforms-depl
  namespace: platforms  # same namespace as the Ingress and TLS secret
spec:
  replicas: 1
  selector:
    matchLabels:
      app: platform-service
  template:
    metadata:
      labels:
        app: platform-service
    spec:
      containers:
        - name: platform-image
          image: owain.azurecr.io/platforms:latest
          imagePullPolicy: Always
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"
---
apiVersion: v1
kind: Service
metadata:
  name: platforms-clusterip-srv
  namespace: platforms  # the Ingress must reference a Service in its own namespace
spec:
  type: ClusterIP
  selector:
    app: platform-service
  ports:
    - name: platform-service-http
      protocol: TCP
      port: 80        # plain HTTP; TLS is terminated earlier at NGINX
      targetPort: 80
```

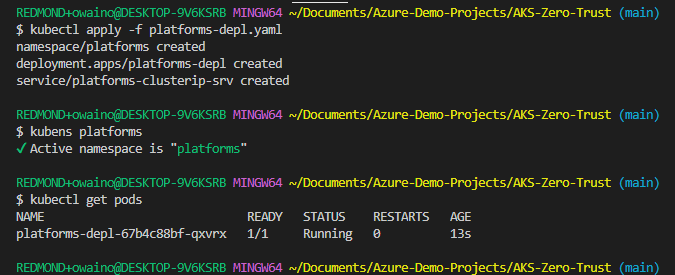

As this is the first apply command, I'll also include it as a snippet. From now on you can assume any YAML will have been applied in the same way.

```bash
kubectl apply -f platforms-depl.yaml
```

We can confirm the application is up and running by switching to the "platforms" namespace and running kubectl get pods. If you don't already use kubens for namespace switching, it can be a great time saver.
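Beyond checking that the pods are running, it is worth confirming that the labels line up. A Service whose selector doesn't match the pod labels still applies successfully but ends up with no endpoints. The following minimal Python sketch assumes the manifest has already been loaded into plain dictionaries, keeping only the fields being checked. `selects` and `check_wiring` are hypothetical helpers, not part of any Kubernetes client library.

```python
# Trimmed dictionaries mirroring the Deployment and Service above
# (only the fields this check reads are included).
deployment = {
    "spec": {
        "selector": {"matchLabels": {"app": "platform-service"}},
        "template": {"metadata": {"labels": {"app": "platform-service"}}},
    }
}
service = {"spec": {"selector": {"app": "platform-service"}}}


def selects(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def check_wiring(deployment, service):
    """Return a list of label/selector mismatches (empty if everything lines up)."""
    problems = []
    pod_labels = deployment["spec"]["template"]["metadata"]["labels"]
    if not selects(deployment["spec"]["selector"]["matchLabels"], pod_labels):
        problems.append("Deployment selector does not match its pod template labels")
    if not selects(service["spec"]["selector"], pod_labels):
        problems.append("Service selector does not match the Deployment's pods")
    return problems


print(check_wiring(deployment, service))  # []
```

On a live cluster, kubectl get endpoints platforms-clusterip-srv -n platforms shows the same thing directly: an empty endpoint list usually means the selector and labels disagree.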

Next we need to deploy the NGINX ingress controller. There are multiple ways to install the NGINX ingress controller.

I used the manifest recommended for deploying on Azure, which can be found here: https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.5.1/deploy/static/provider/...

Working from this manifest allows us to make a couple of changes required for this deployment. The first change is to the NGINX ingress controller Service. We add an annotation so that the Service is created as an internal load balancer.

We do that by adding the following annotation to the service:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
```

We can then leverage a feature that is currently in public preview that allows us to create and configure a private link service from our NGINX ingress controller manifest.

It is worth noting that public preview means that support is "best effort" until its full release. If you do encounter any issues using this feature please raise them here.

Underneath our internal annotation we now add the following:

The networking values in this YAML are arbitrary. Feel free to replace them as appropriate for your configuration.

```yaml
service.beta.kubernetes.io/azure-pls-create: "true"
service.beta.kubernetes.io/azure-pls-ip-configuration-ip-address: "10.224.10.224"
service.beta.kubernetes.io/azure-pls-ip-configuration-ip-address-count: "1"
service.beta.kubernetes.io/azure-pls-ip-configuration-subnet: "default"
service.beta.kubernetes.io/azure-pls-name: "aks-pls"
service.beta.kubernetes.io/azure-pls-proxy-protocol: "false"
service.beta.kubernetes.io/azure-pls-visibility: '*'
```
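These networking values are specific to your environment, so a quick sanity check can catch mistakes before the Private Link Service is created. The Python sketch below uses the standard `ipaddress` module for two checks. First, it confirms that the PLS IP address falls inside the subnet. Second, it confirms the address avoids the five addresses Azure reserves in every subnet, which are the first four and the last. The `10.224.0.0/16` CIDR for the "default" subnet is an assumption for illustration, so substitute your own subnet's range. The sketch does not check whether the address is already allocated to another resource.

```python
import ipaddress

# Assumptions for illustration: replace with your own subnet CIDR and PLS IP.
SUBNET_CIDR = "10.224.0.0/16"   # hypothetical range of the "default" subnet
PLS_IP = "10.224.10.224"        # value of azure-pls-ip-configuration-ip-address


def azure_reserved(subnet):
    """Azure reserves the first four addresses and the last address of every subnet."""
    net = ipaddress.ip_network(subnet)
    first_four = {net.network_address + offset for offset in range(4)}
    return first_four | {net.broadcast_address}


def validate_pls_ip(ip, subnet):
    """Return None if the IP is usable in the subnet, otherwise a description of the problem."""
    addr = ipaddress.ip_address(ip)
    if addr not in ipaddress.ip_network(subnet):
        return f"{ip} is outside {subnet}"
    if addr in azure_reserved(subnet):
        return f"{ip} is reserved by Azure in {subnet}"
    return None


print(validate_pls_ip(PLS_IP, SUBNET_CIDR))  # None
```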

As the NGINX Ingress Controller currently doesn't support any annotations to prevent HTTP communication to the controller itself, I have also taken the optional step of disabling port 80 on the Service. This ensures no HTTP traffic is accepted. The Service within your NGINX YAML manifest should therefore look like the following:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    service.beta.kubernetes.io/azure-pls-create: "true"
    service.beta.kubernetes.io/azure-pls-ip-configuration-ip-address: "10.224.10.224"
    service.beta.kubernetes.io/azure-pls-ip-configuration-ip-address-count: "1"
    service.beta.kubernetes.io/azure-pls-ip-configuration-subnet: "default"
    service.beta.kubernetes.io/azure-pls-name: "aks-pls"
    service.beta.kubernetes.io/azure-pls-proxy-protocol: "false"
    service.beta.kubernetes.io/azure-pls-visibility: '*'
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.5.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  externalTrafficPolicy: Local
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    # HTTP port commented out so the controller only accepts HTTPS
    # - appProtocol: http
    #   name: http
    #   port: 80
    #   protocol: TCP
    #   targetPort: http
    - appProtocol: https
      name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
```
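Two details in this Service are easy to get wrong when editing by hand. First, every annotation value must be a string: an unquoted `true` or `1` in YAML is parsed as a boolean or integer, which Kubernetes rejects for annotations. Second, only port 443 should remain exposed. Here is a minimal Python sketch of both checks against a trimmed dictionary version of the Service. The `check_service` helper is hypothetical. In practice you would load the real manifest with a YAML parser such as PyYAML rather than retyping it.

```python
# Trimmed dictionary mirroring the ingress-nginx Service above.
service = {
    "metadata": {
        "annotations": {
            "service.beta.kubernetes.io/azure-load-balancer-internal": "true",
            "service.beta.kubernetes.io/azure-pls-create": "true",
            "service.beta.kubernetes.io/azure-pls-ip-configuration-ip-address": "10.224.10.224",
            "service.beta.kubernetes.io/azure-pls-ip-configuration-ip-address-count": "1",
            "service.beta.kubernetes.io/azure-pls-ip-configuration-subnet": "default",
            "service.beta.kubernetes.io/azure-pls-name": "aks-pls",
            "service.beta.kubernetes.io/azure-pls-proxy-protocol": "false",
            "service.beta.kubernetes.io/azure-pls-visibility": "*",
        }
    },
    "spec": {
        "type": "LoadBalancer",
        "ports": [{"name": "https", "port": 443, "protocol": "TCP", "targetPort": "https"}],
    },
}


def check_service(svc):
    """Return a list of problems found in the Service (empty if none)."""
    problems = []
    for key, value in svc["metadata"]["annotations"].items():
        # Annotation values must be strings; YAML true/1 would arrive as bool/int.
        if not isinstance(value, str):
            problems.append(f"annotation {key} must be a string, got {type(value).__name__}")
    exposed = {port["port"] for port in svc["spec"]["ports"]}
    if exposed != {443}:
        problems.append(f"expected only port 443 to be exposed, found {sorted(exposed)}")
    return problems


print(check_service(service))  # []
```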

Before applying this manifest, we need to create our TLS secret. We can do that with a command similar to the following; replace my key and certificate filenames with your own.

```bash
kubectl create secret tls test-tls --key owainonline.key --cert owain_online.crt -n platforms
```
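For reference, `kubectl create secret tls` produces a Secret of type `kubernetes.io/tls`. Its `data` holds the certificate and key under the `tls.crt` and `tls.key` keys, base64-encoded. The Python sketch below builds an equivalent manifest as JSON, a format kubectl also accepts. The `tls_secret_manifest` helper and the placeholder PEM strings are hypothetical, and the helper performs no validation of the certificate or key. The last lines show why plain Kubernetes Secrets are not advised for production: base64 is an encoding, not encryption.

```python
import base64
import json


def tls_secret_manifest(name, namespace, cert_pem, key_pem):
    """Build a kubernetes.io/tls Secret manifest from PEM text (no validation performed)."""
    def b64(text):
        return base64.b64encode(text.encode("utf-8")).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {"tls.crt": b64(cert_pem), "tls.key": b64(key_pem)},
    }


# Hypothetical placeholder PEM contents, not a real certificate or key.
CERT_PEM = "-----BEGIN CERTIFICATE-----\n...\n-----END CERTIFICATE-----\n"
KEY_PEM = "-----BEGIN PRIVATE KEY-----\n...\n-----END PRIVATE KEY-----\n"

manifest = tls_secret_manifest("test-tls", "platforms", CERT_PEM, KEY_PEM)
print(json.dumps(manifest, indent=2))

# Anyone who can read the Secret can decode the private key in one call.
recovered = base64.b64decode(manifest["data"]["tls.key"]).decode("utf-8")
print(recovered == KEY_PEM)  # True
```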

The final change we can make to our NGINX YAML manifest is to add an argument to the ingress-nginx controller container itself. We need to set a default SSL certificate, as otherwise NGINX will use a fake certificate that it generates itself. Under the container arguments, add the following:

```yaml
- --default-ssl-certificate=platforms/test-tls
```
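The flag's value takes the form `<namespace>/<secret-name>`, which is why the secret was created in the platforms namespace above. As a small illustration, the hypothetical Python helper below splits the argument into those two parts. It rejects values that are missing the namespace.

```python
PREFIX = "--default-ssl-certificate="


def parse_default_ssl_certificate(arg):
    """Split '--default-ssl-certificate=<namespace>/<secret-name>' into its two parts."""
    if not arg.startswith(PREFIX):
        raise ValueError(f"not a --default-ssl-certificate argument: {arg!r}")
    namespace, sep, name = arg[len(PREFIX):].partition("/")
    # Require exactly one '/' with non-empty text on both sides.
    if not sep or not namespace or not name or "/" in name:
        raise ValueError("expected the form <namespace>/<secret-name>")
    return namespace, name


print(parse_default_ssl_certificate("--default-ssl-certificate=platforms/test-tls"))
# ('platforms', 'test-tls')
```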

We can now apply the entire NGINX manifest and view all of the resources that are created.

```bash
kubectl apply -f pls-nginx.yaml
```

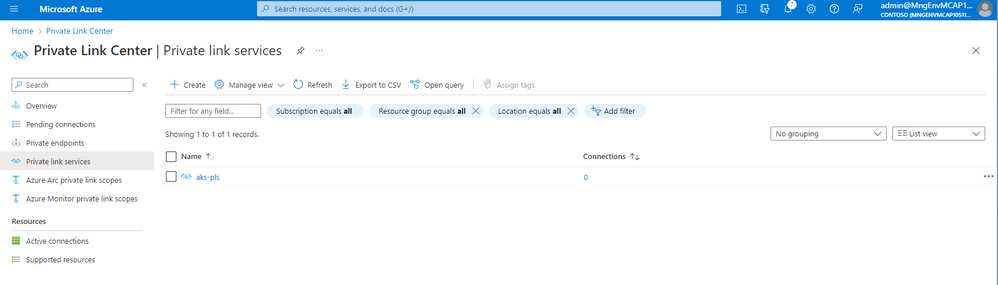

After a couple of minutes, if we navigate to Private Link Service in the Azure portal, we will see our aks-pls service, created with 0 connections. Take note of its alias, as we will use it shortly when we configure Azure Front Door.
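The alias follows Azure's `<prefix>.<GUID>.<region>.azure.privatelinkservice` format, where the prefix is the service name. To check that you have copied the whole value, you can use the hypothetical Python helper below, which splits an alias into its parts. The GUID and region in the example are made up.

```python
import uuid

ALIAS_SUFFIX = ".azure.privatelinkservice"


def parse_pls_alias(alias):
    """Split a Private Link Service alias into its name prefix, GUID and region."""
    if not alias.endswith(ALIAS_SUFFIX):
        raise ValueError(f"alias should end with {ALIAS_SUFFIX}")
    # rsplit from the right so a prefix containing dots stays intact;
    # unpacking raises ValueError if a part is missing.
    prefix, guid, region = alias[: -len(ALIAS_SUFFIX)].rsplit(".", 2)
    uuid.UUID(guid)  # raises ValueError if the middle part is not a GUID
    return {"name": prefix, "guid": guid, "region": region}


# Hypothetical alias: the GUID and region are made up.
print(parse_pls_alias(
    "aks-pls.d9ef6e45-8d4c-4c1e-9b0a-0123456789ab.westeurope.azure.privatelinkservice"
))
```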

The last manifest we need to apply is our actual Ingress resource. This file tells NGINX where to route requests. The Ingress object looks as follows:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-srv
  namespace: platforms
  annotations:
    nginx.ingress.kubernetes.io/use-regex: 'true'
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  labels:
    app: platform-service
spec:
  # ingressClassName selects the NGINX controller; the deprecated
  # kubernetes.io/ingress.class annotation is not needed.
  ingressClassName: nginx
  tls:
    - hosts:
        - owain.online
      secretName: test-tls
  rules:
    - host: owain.online
    # This second rule has no host, so its paths apply to requests for any
    # hostname (such as the raw IP address used in the in-cluster tests).
    - http:
        paths:
          - path: /api/platforms
            pathType: Prefix
            backend:
              service:
                name: platforms-clusterip-srv
                port:
                  number: 80
```

It is worth noting that when you add a TLS block to your NGINX Ingress, "ssl-redirect" is already true by default; I include it here for visibility. Once we apply this manifest, we can describe the resource with:

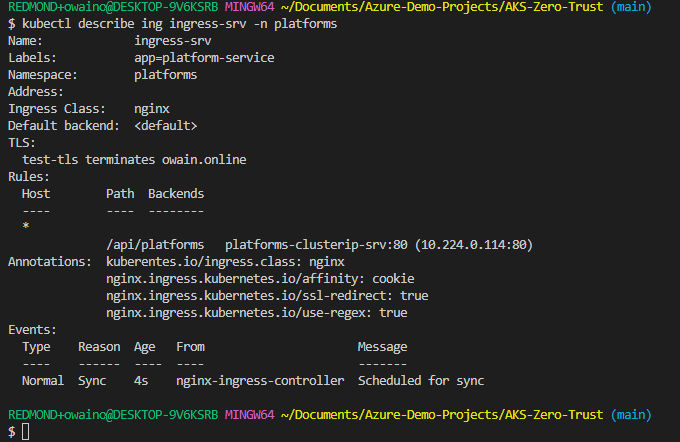

```bash
kubectl describe ing ingress-srv -n platforms
```

The output should look similar to this:

Here we can see that our routing rules have been created and, most importantly, that our TLS block is configured: the output states "test-tls terminates owain.online".

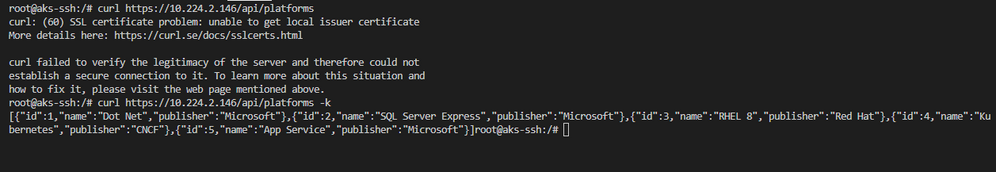

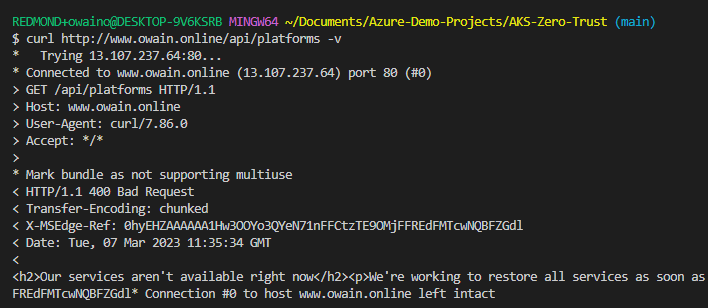

Before we configure Front Door, let's test the ingress from inside the cluster. To start with, check the internal IP of the service:

```text
$ kubectl get services -n ingress-nginx
NAME                       TYPE           CLUSTER-IP   EXTERNAL-IP    PORT(S)         AGE
ingress-nginx-controller   LoadBalancer   10.0.13.93   10.224.2.146   443:32490/TCP   19m
```

Then start a test pod in the cluster:

```bash
kubectl run -it --rm aks-ssh --image=debian:stable
```

Once the pod is running, we will get a shell and can enter the following command to install curl, along with dnsutils (which provides tools such as nslookup and dig):

```bash
apt-get update -y && apt-get install dnsutils -y && apt-get install curl -y
```

We can then test our service:

```bash
# Curl your service at /api/platforms
curl https://10.224.2.146/api/platforms

# Curl your service without validating the certificate
curl https://10.224.2.146/api/platforms -k

# Check that HTTP fails now that port 80 is closed
curl http://10.224.2.146/api/platforms
```

In the screenshot we can see that we were unable to establish a verified TLS connection from our pod, because curl could not validate the certificate. This is fine and expected: the certificate is issued for owain.online, whereas we connected directly to the load balancer's IP address. If we add -k to accept an insecure connection, we can see our API response. If we curl using HTTP, the request hangs, which is expected as we have closed port 80.
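If you prefer to script these checks, here is a rough Python equivalent of the curl commands using only the standard library. `make_context(insecure=True)` corresponds to curl's `-k`: it turns off both hostname checking and certificate verification. Hostname checking must be disabled first, or Python raises an error. The `make_context` and `fetch` helpers are hypothetical, and `fetch` has to run from inside the cluster to reach the internal IP.

```python
import http.client
import ssl


def make_context(insecure=False):
    """Default TLS context; insecure=True mirrors curl -k."""
    ctx = ssl.create_default_context()
    if insecure:
        # check_hostname must be turned off before verify_mode can be CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch(address, path="/api/platforms", insecure=False, timeout=5.0):
    """GET https://<address><path>; raises ssl.SSLCertVerificationError if verification fails."""
    conn = http.client.HTTPSConnection(address, timeout=timeout, context=make_context(insecure))
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read().decode("utf-8", errors="replace")
    finally:
        conn.close()


# Example, run from inside the cluster (not executed here):
# status, body = fetch("10.224.2.146", insecure=True)
```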

Azure Front Door

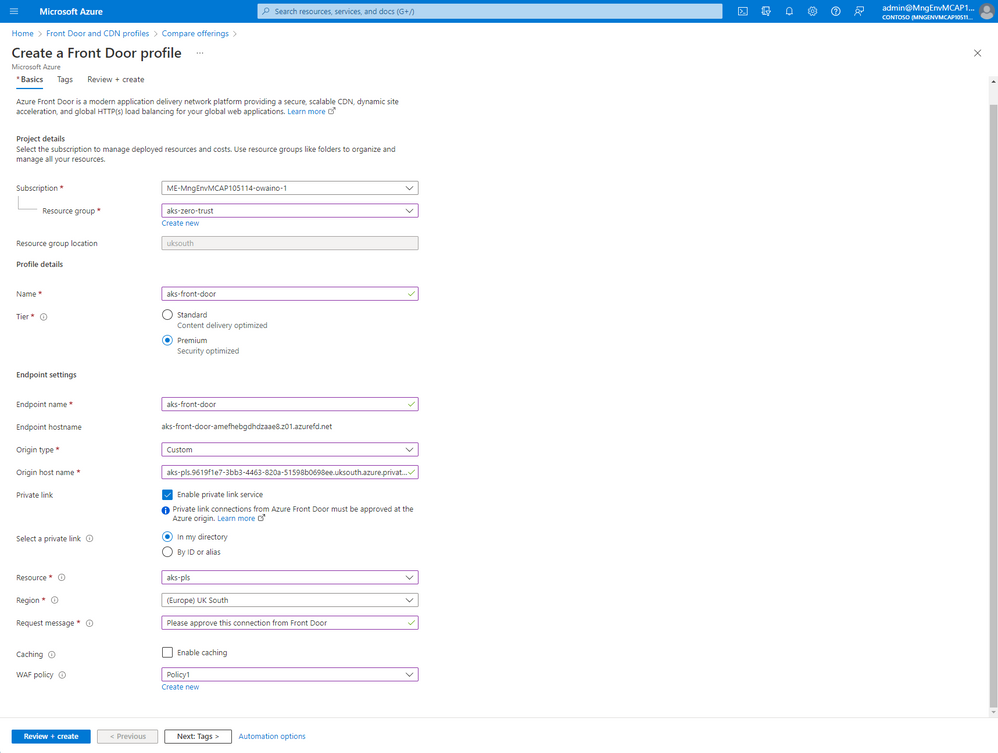

With that now configured we can move on to creating and configuring Azure Front Door.

As we are using Private Link Service and Private Endpoints we will need to use the Azure Front Door Premium SKU.

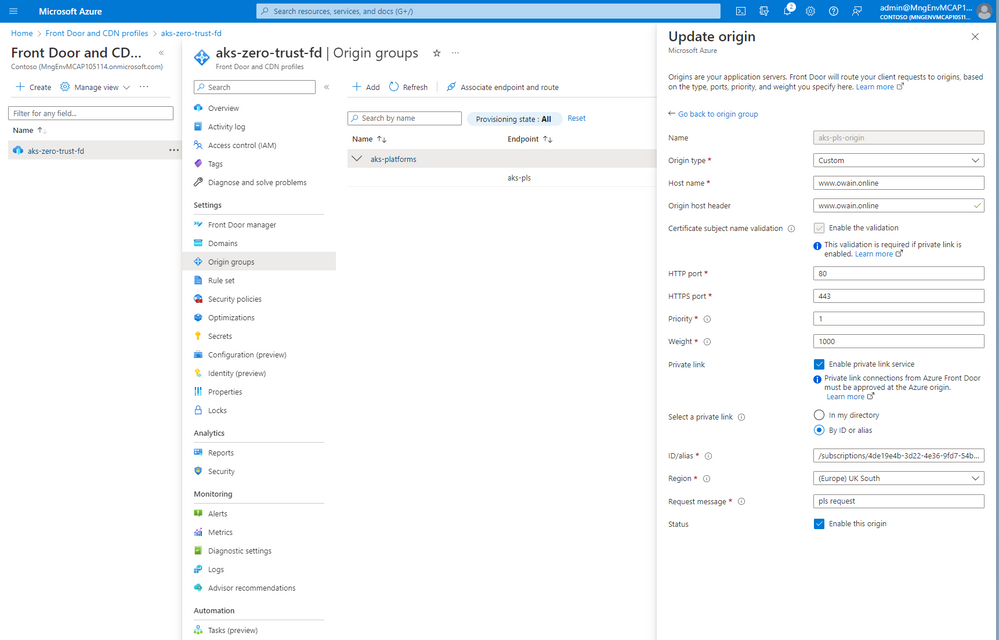

When adding the origin, choose the "Custom" origin type and select "Enable private link service". Then identify the Private Link Service we created earlier, either by its alias or by selecting it from the directory.

It is worth noting that if you are using a wildcard certificate on your ingress controller, you will need to use a dummy name for your certificate subject name validation. For example, if owain.online had a wildcard certificate, I could enter dummydomain.owain.online as the hostname to match within the origin. This works because Front Door only validates that the certificate presented by the ingress controller is valid for the hostname it expects (the one set here).
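To show why a dummy subdomain passes validation, here is a simplified Python sketch of wildcard name matching in the style of RFC 6125. A leading `*.` covers exactly one left-most label. So `*.owain.online` matches `dummydomain.owain.online`, but not `owain.online` itself or `a.b.owain.online`. The `hostname_matches` helper is hypothetical. Real validation also checks the certificate chain, validity dates and all subject alternative names.

```python
def hostname_matches(pattern, hostname):
    """Simplified RFC 6125-style match; a leading '*.' covers exactly one left-most label."""
    pattern = pattern.lower().rstrip(".")
    hostname = hostname.lower().rstrip(".")
    if pattern.startswith("*."):
        first_label, dot, rest = hostname.partition(".")
        # The wildcard stands in for one non-empty label; the rest must match exactly.
        return bool(first_label) and dot == "." and rest == pattern[2:]
    return pattern == hostname


print(hostname_matches("*.owain.online", "dummydomain.owain.online"))  # True
print(hostname_matches("*.owain.online", "owain.online"))              # False
```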

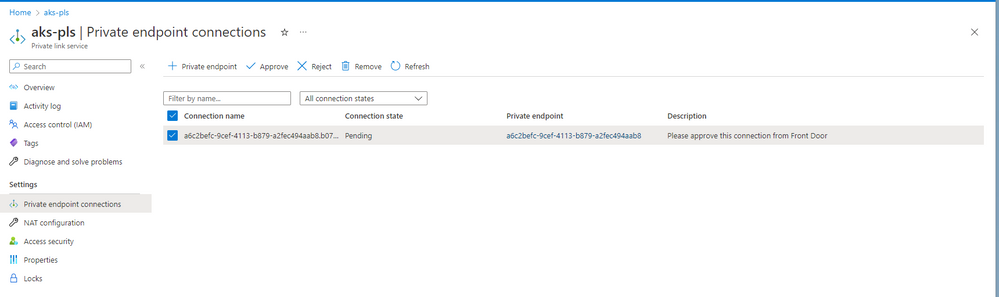

Once the Front Door instance is created, we can go to our Private Link Service and see that we now have a pending connection to approve.

Configuring a custom domain in Azure Front Door and using your own certificate is outside the scope of this post, but the process is outlined here.

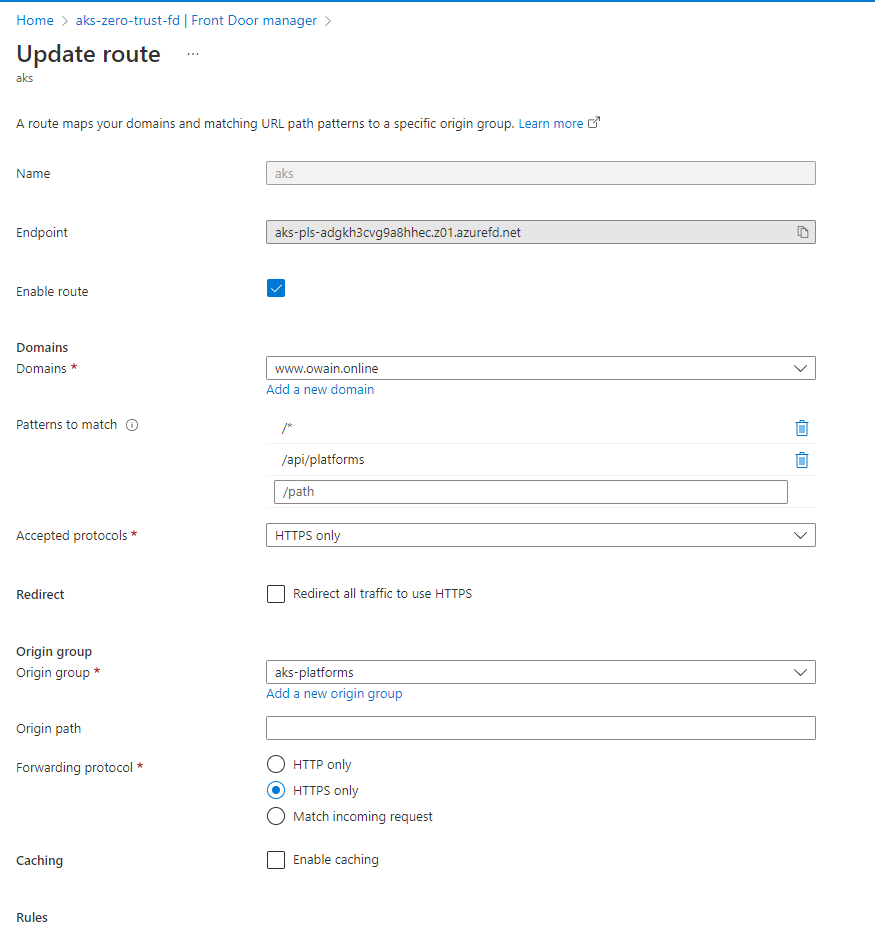

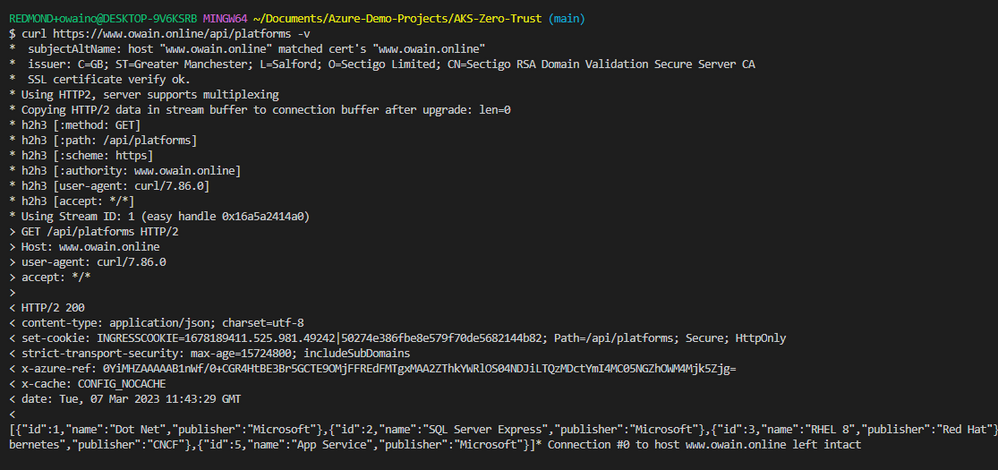

Once our private endpoint is approved and the link is created we can then look at our route configuration in Front Door and ensure that we are only accepting HTTPS requests.

We could accept both HTTP and HTTPS and redirect HTTP to HTTPS. However, selecting "HTTPS only" gives users of our system clearer feedback about their HTTP requests: instead of returning a redirect, a curl over HTTP receives a 400 Bad Request error.

If we run a verbose curl (curl -v) over HTTPS, we can see the certificate being used. Most importantly, we get the expected response, with TLS termination taking place on the ingress; as mentioned at the start, our application is not configured to use HTTPS.

We can also verify that the requests are being forwarded to our backend as HTTP requests by looking at the logs of our NGINX ingress controller with the following command:

```bash
kubectl logs ingress-nginx-controller-<YOUR VARCHAR> -n ingress-nginx
```

We can see that the above requests are being forwarded over HTTP to port 80 of the platforms-clusterip-srv service.
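To check this programmatically, you can use the Python sketch below. It parses a log line, assuming ingress-nginx's default `log-format-upstream`, and extracts two values: the upstream name, formatted as `<namespace>-<service>-<port>`, and the port of the upstream address. The sample line is hypothetical, and the regex assumes a single upstream address per request. If you customise `log-format-upstream` in the controller's ConfigMap, the pattern will need to change. The log does not name the upstream protocol directly. Instead, the port 80 upstream, which the application serves as plain HTTP, indicates that the final hop is unencrypted.

```python
import re

# Assumed default ingress-nginx upstream log format (single upstream address).
LOG_PATTERN = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)" '
    r'(?P<request_length>\d+) (?P<request_time>\S+) '
    r'\[(?P<upstream_name>[^\]]*)\] \[(?P<alt_upstream_name>[^\]]*)\] '
    r'(?P<upstream_addr>\S+) (?P<upstream_response_length>\S+) '
    r'(?P<upstream_response_time>\S+) (?P<upstream_status>\S+) (?P<req_id>\S+)'
)

# Hypothetical sample line for illustration only.
SAMPLE_LINE = (
    '10.224.10.224 - - [01/Jan/2023:12:00:00 +0000] "GET /api/platforms HTTP/1.1" '
    '200 83 "-" "curl/7.88.1" 94 0.003 [platforms-platforms-clusterip-srv-80] [] '
    '10.244.1.12:80 83 0.003 200 0f3c2a9b7d1e4c5a'
)


def upstream_port(line):
    """Return (upstream name, upstream port) from one access log line."""
    match = LOG_PATTERN.match(line)
    if match is None:
        raise ValueError("line does not match the assumed log format")
    _host, _, port = match["upstream_addr"].rpartition(":")
    return match["upstream_name"], int(port)


print(upstream_port(SAMPLE_LINE))  # ('platforms-platforms-clusterip-srv-80', 80)
```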

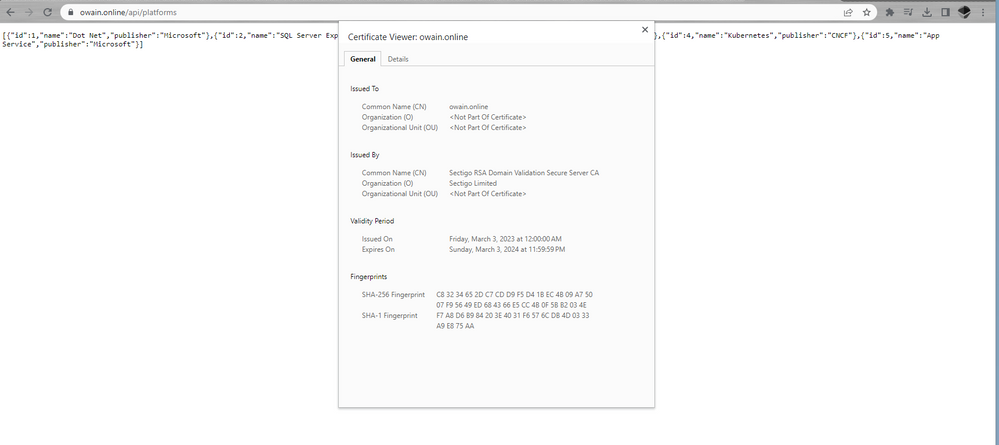

If we navigate to https://www.owain.online/api/platforms, we will also be able to see our API. By clicking the padlock in the browser's address bar, we can view the certificate securing this connection.

Conclusion

Making the most of time-saving features, such as Azure Front Door managed private endpoints and Private Link Service annotations on your ingress, makes it much simpler to get a secure, private TLS connection from Front Door to your internal Kubernetes cluster. In a production environment it would certainly be advisable to use IaC approaches throughout the deployment, including for secret creation and Azure resource creation. Tools like Bicep and Terraform have great modules to get you up and running quickly.

If you are looking at creating your Kubernetes cluster as code, the AKS Construction Helper is a great way to visually configure your cluster and receive an automated script for IaC deployment.

Of course, using HTTPS with SSL termination is only one small part of best practices for creating secure AKS deployments. You can find the full security baseline here.

In my next blog I will build on this architecture and look at using the OAuth2 Reverse Proxy alongside Azure AD to secure your AKS-hosted microservices.

As mentioned, all of the complete configuration files are available on GitHub here: https://github.com/owainow/ssl-termination-private-aks
