Automate Azure Open AI custom model training data collection and deployment via Logic App

Background

Recently, I have been planning to train a custom Azure OpenAI model based on real-life Logic App cases. To do so, the following challenges need to be resolved.

- We need a large number of records, more than a single person can provide, so we need a way to collect data from different people.

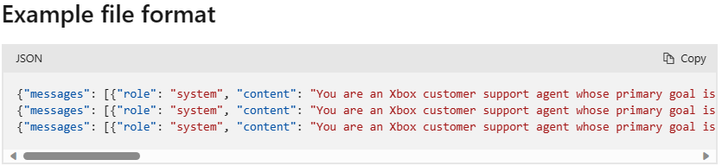

- We need to generate the JSONL files that Azure OpenAI requires for custom model training.

- The training data keeps growing, so we need to automate model training and deployment on a schedule.

Mechanism

After some research and testing, I found that we can use Microsoft Forms + Azure Storage Account + Logic App as the solution.

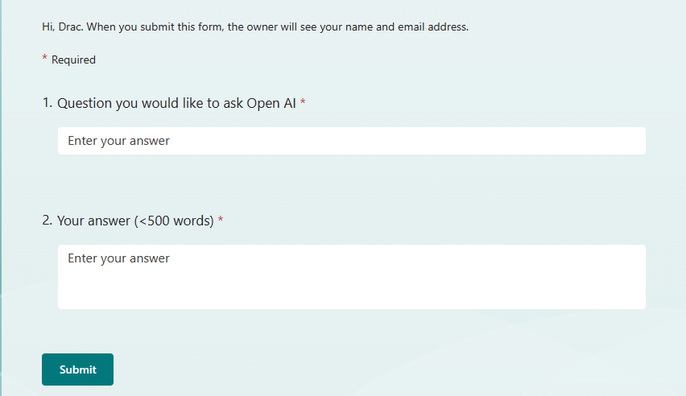

Microsoft Forms: An easy-to-use service for collecting data from different teammates. Here is the sample form:

Azure Storage Account: I'm using a Storage Table and a Blob container in this scenario. The Storage Table holds the raw data collected from Microsoft Forms, and the Blob container stores the JSONL files generated by the Logic App.

Logic App: Provides the main data collection and automated deployment flows.

Prerequisites

- An Azure OpenAI resource in North Central US or Sweden Central (according to the document "Azure OpenAI Service models - Azure OpenAI | Microsoft Learn", only these two regions currently support fine-tuning models).

- Three Logic Apps (Consumption) with Managed Identity enabled and assigned the "Storage Table Data Contributor", "Storage Blob Data Contributor" and "Cognitive Services OpenAI Contributor" roles (Logic App Standard is also an option, in which case you need three workflows).

- A Microsoft Form based on your own template.

Detailed Introduction to the Logic App Implementation

Logic App (Consumption) sample code can be found in Drac-Zhang/Logic-App-Azure-Open-AI-custom-model-automation (github.com)

(PS: ARM template will be provided later)

Data Collector

This Logic App is triggered whenever someone submits a Microsoft Forms response. It picks up the response and ingests it into the Storage Table.

You need to change the Form ID in the Forms trigger to your own Form ID.
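As a rough illustration of the ingestion step, here is a minimal Python sketch of turning one form response into a Storage Table entity. The `Question` and `Answer` property names match the `$select` used later in this post; the function name, the `LogicAppCases` partition key, and the use of a random UUID as the row key are assumptions for illustration only, not what the sample workflow necessarily does. Every Storage Table entity needs a `PartitionKey` and a `RowKey`.

```python
import uuid


def form_response_to_entity(question, answer, partition_key="LogicAppCases"):
    """Build a Storage Table entity from one form response.

    partition_key is a hypothetical default; choose whatever grouping
    suits your own table.
    """
    return {
        "PartitionKey": partition_key,
        # A random UUID keeps row keys unique across submitters (assumption).
        "RowKey": str(uuid.uuid4()),
        "Question": question.strip(),
        "Answer": answer.strip(),
    }


entity = form_response_to_entity(" Why does my trigger not fire? ", "Check the trigger history.")
print(entity["Question"])
```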

Sample data in Storage Table:

Training Dataset Generator

This Logic App is triggered every day. It retrieves all the data in the Storage Table, creates training data in the required JSONL format, and saves it in the Blob container.

Every time it generates a new dataset, it also updates the Storage Table with the file name of the latest generated dataset.

Reference: Customize a model with Azure OpenAI Service - Azure OpenAI | Microsoft Learn
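To make the JSONL step concrete, here is a minimal Python sketch that converts Question/Answer rows into one JSON object per line. It assumes the chat-style `messages` format used when fine-tuning chat models; if you fine-tune a base model that expects `prompt`/`completion` pairs, the object shape would differ. The function name and the system prompt text are hypothetical.

```python
import json


def build_jsonl(records, system_prompt="You are a Logic App support assistant."):
    """Convert Question/Answer rows into chat-format JSONL text."""
    lines = []
    for row in records:
        question = (row.get("Question") or "").strip()
        answer = (row.get("Answer") or "").strip()
        if not question or not answer:
            continue  # skip incomplete responses
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # json.dumps escapes embedded newlines, so each example stays on one line.
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


rows = [{"Question": "How do I enable Managed Identity?", "Answer": "Open the Identity blade."}]
print(build_jsonl(rows), end="")
```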

Parameters that need to be modified:

| Action Name | Variable Name | Comments |
| --- | --- | --- |
| Initialize variable - Table Base Url | TableBaseUrl | Format: https://[StorageName].table.core.windows.net/[TableName]?$select=Question,Answer |

Custom Model Deploy

This Logic App implements the custom model deployment, which is the most complex workflow in our solution.

The backend logic is as follows:

- Get the latest training dataset from the Blob container.

- Upload the dataset to Azure OpenAI "Data Files" via API (https://[OpenAIName].openai.azure.com/openai/files/import/?api-version=2023-09-15-preview) and wait for the file to be processed.

- Create the custom model via API (https://[OpenAIName].openai.azure.com/openai/fine_tuning/jobs?api-version=2023-09-15-preview) and wait for the model to be generated.

- Filter the deployments for those with the provided "Deployment Name", delete the existing deployment, and redeploy with the new model via API ([ManagementUrl]/deployments/[DeploymentName]?api-version=2023-10-01-preview).
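The steps above boil down to three endpoints plus two "wait until done" loops. Here is a minimal Python sketch of that shape. The URL patterns and API versions are the ones quoted in the list; the function names, the injected `get_status` callable, and the status strings passed in the usage example are assumptions for illustration, so check the actual status values returned by your resource.

```python
import time


def build_urls(openai_name, management_url, deployment_name):
    """Assemble the three endpoints quoted in the steps above."""
    base = f"https://{openai_name}.openai.azure.com/openai"
    return {
        "import_file": f"{base}/files/import/?api-version=2023-09-15-preview",
        "fine_tune": f"{base}/fine_tuning/jobs?api-version=2023-09-15-preview",
        "deployment": f"{management_url}/deployments/{deployment_name}"
                      "?api-version=2023-10-01-preview",
    }


def wait_until(get_status, done_states, failed_states,
               timeout_s=12 * 3600, interval_s=60,
               sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until it reports a done state, a failed state, or the timeout passes."""
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status in done_states:
            return status
        if status in failed_states:
            raise RuntimeError(f"operation ended with status {status!r}")
        if clock() >= deadline:
            raise TimeoutError(f"still {status!r} after {timeout_s} seconds")
        sleep(interval_s)


# Usage with a stubbed status source; the status strings are assumed values.
fake_statuses = iter(["pending", "running", "succeeded"])
result = wait_until(lambda: next(fake_statuses), {"succeeded"}, {"failed"},
                    sleep=lambda seconds: None)
print(result)
```

Injecting `sleep` and `clock` keeps the loop testable without waiting in real time; in a real client, `get_status` would call the file or fine-tuning job endpoint and read the status field from the response.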

Parameters that need to be modified:

| Action Name | Variable Name | Comments |
| --- | --- | --- |
| Initialize variable - OpenAI name | OpenAIName | Your Azure OpenAI resource name. |
| Initialize variable - Management Url | ManagementUrl | Format: https://management.azure.com/subscriptions/[Sub ID]/resourceGroups/[RG]/providers/Microsoft.CognitiveServices/accounts/[OpenAI Name] |
| Initialize variable - Deployment Name | Deployment Name | Your deployment name. |

Additional Information

- In the Azure Storage Table connector, I could not find a place to fill in the "Next page marker" for pagination in the "Get Entities" action. So in the "Training Dataset Generator" workflow, I have to use the HTTP action to query the Storage Table directly.

- The Logic App ARM template is not available yet, so you need to prepare the API connections yourself.

- Depending on the dataset size and the backend load, the custom model may take some time to generate. The default timeout of the "Until" loop that waits for model creation is 12 hours, so you may need to increase it.

- In my scenario, there are no requests during the weekend, so I can safely delete the outdated deployment. You may need to change this behavior to fit your requirements.
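For the pagination point in the first bullet: when a Table service query has more results, the response carries `x-ms-continuation-NextPartitionKey` and `x-ms-continuation-NextRowKey` headers, and the follow-up request passes them back as `NextPartitionKey` and `NextRowKey` query parameters. Here is a minimal Python sketch of that loop. The `http_get` callable is a hypothetical helper that performs an authenticated GET and returns `(headers, json_body)`; the sketch also assumes a JSON response whose rows are under `value`.

```python
from urllib.parse import urlencode


def fetch_all_entities(table_url, http_get):
    """Follow Table service continuation headers until every page is read.

    table_url follows the TableBaseUrl format from this post.
    http_get(url) -> (headers dict, parsed JSON body) is a hypothetical helper.
    """
    entities = []
    next_pk = next_rk = None
    while True:
        url = table_url
        if next_pk is not None:
            params = {"NextPartitionKey": next_pk}
            if next_rk is not None:
                params["NextRowKey"] = next_rk
            separator = "&" if "?" in table_url else "?"
            url = f"{table_url}{separator}{urlencode(params)}"
        headers, body = http_get(url)
        entities.extend(body.get("value", []))
        # Header names are case-insensitive, so normalize before the lookup.
        lowered = {key.lower(): value for key, value in headers.items()}
        next_pk = lowered.get("x-ms-continuation-nextpartitionkey")
        next_rk = lowered.get("x-ms-continuation-nextrowkey")
        if not next_pk:
            return entities
```

In the Logic App itself, the same idea is an HTTP action inside an "Until" loop that stops once the continuation header is no longer returned.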
