At the virtual event Azure Quantum: Accelerating Scientific Discovery, our Chairman and Chief Executive Officer Satya Nadella said it best: “Our goal is to compress the next 250 years of chemistry and materials science progress into the next 25.”

In keeping with that goal, we are making three important announcements today.

- Azure Quantum Elements accelerates scientific discovery so that organizations can bring innovative products to market more quickly and responsibly. This system empowers researchers to make advances in chemistry and materials science with scale, speed, and accuracy by integrating the latest breakthroughs in high-performance computing (HPC), AI, and quantum computing. The private preview launches in a few weeks, and you can sign up today to learn more.

- Copilot in Azure Quantum helps scientists use natural language to reason through complex chemistry and materials science problems. With Copilot in Azure Quantum, a scientist can accomplish complex tasks like generating the underlying calculations and simulations, querying and visualizing data, and getting guided answers to complicated concepts. Copilot also helps people learn about quantum computing and write code for today’s quantum computers. It’s a fully integrated, browser-based experience, available to try for free, that has a built-in code editor, quantum simulator, and seamless code compilation.

- Roadmap to Microsoft’s quantum supercomputer is now published along with peer-reviewed research demonstrating that we’ve achieved the first milestone.

Quantum Computing Implementation Levels

The path to quantum supercomputing is not unlike the path to today’s classical supercomputers. The pioneers of early computing machines had to advance the underlying technology to improve their performance before they could scale up to large architectures. That’s what motivated the change from vacuum tubes to transistors and then to integrated circuits. Fundamental changes to the underlying technology will also precipitate the development of a quantum supercomputer.

As the industry progresses, quantum hardware will fall into one of three categories of Quantum Computing Implementation Levels:

Level 1—Foundational: Quantum systems that run on noisy physical qubits, including all of today’s Noisy Intermediate-Scale Quantum (NISQ) computers.

Microsoft has brought these quantum machines (the world’s best, with the highest quantum volumes in the industry) to the cloud with Azure Quantum, including systems from IonQ, Pasqal, Quantinuum, QCI, and Rigetti. These quantum computers are great for experimentation as an on-ramp to scaled quantum computing. At the Foundational Level, the industry measures progress by counting qubits and quantum volume.

Level 2—Resilient: Quantum systems that operate on reliable logical qubits.

Reaching the Resilient Level requires a transition from noisy physical qubits to reliable logical qubits. This is critical because noisy physical qubits cannot run scaled applications directly: the errors that inevitably occur will spoil the computation, so they must be corrected. To do this adequately and preserve quantum information, hundreds to thousands of physical qubits will be combined into a logical qubit, which builds in redundancy. However, this only works if the physical qubits’ error rates are below a threshold value; otherwise, attempts at error correction will be futile. Once this stability threshold is achieved, it is possible to make reliable logical qubits.

Even logical qubits will eventually suffer from errors. The key is that they must remain error-free for the duration of the computation powering the application. The longer the logical qubit is stable, the more complex an application it can run. To make a logical qubit more stable (in other words, to reduce the logical error rate), we must increase the number of physical qubits per logical qubit, make the physical qubits more stable, or both. There is therefore significant gain to be made from more stable physical qubits: they enable more reliable logical qubits, which in turn can run increasingly sophisticated applications. That’s why the performance of quantum systems in the Resilient Level will be measured by their reliability, in terms of logical qubit error rates.
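The trade-off between more physical qubits per logical qubit and more stable physical qubits can be made concrete with a small sketch. It assumes a commonly used heuristic for surface-code-like error correction, in which the logical error rate falls exponentially with the code distance once the physical error rate is below the threshold. The formula, the threshold of 0.01, the prefactor of 0.03, and the count of 2d² physical qubits per logical qubit are illustrative assumptions, not values stated in this post; other codes and qubit types will behave differently.

```python
"""Illustrative model of logical error rate versus code distance.

Assumption (not from the post): p_L ~ a * (p / p_th) ** ((d + 1) / 2),
a common heuristic for surface-code-like quantum error correction.
"""

# Assumed, illustrative constants (hypothetical defaults for this sketch).
THRESHOLD = 1e-2  # p_th: error correction only helps below this rate
PREFACTOR = 0.03  # a: fitted constant in the heuristic


def logical_error_rate(p_phys: float, distance: int) -> float:
    """Estimate the logical error rate for a given odd code distance."""
    return PREFACTOR * (p_phys / THRESHOLD) ** ((distance + 1) / 2)


def min_code_distance(p_phys: float, target: float, max_distance: int = 199) -> int:
    """Return the smallest odd distance whose logical error rate meets the target."""
    if p_phys >= THRESHOLD:
        # Above the threshold, adding more physical qubits makes things worse.
        raise ValueError("physical error rate must be below the threshold")
    for distance in range(3, max_distance + 1, 2):
        if logical_error_rate(p_phys, distance) <= target:
            return distance
    raise ValueError("target not reachable within max_distance")


def physical_qubits_per_logical(distance: int) -> int:
    """Assumed footprint of one logical qubit: 2 * d^2 physical qubits."""
    return 2 * distance**2


if __name__ == "__main__":
    for p_phys in (1e-3, 1e-6):
        d = min_code_distance(p_phys, target=1e-12)
        n = physical_qubits_per_logical(d)
        print(f"p = {p_phys:.0e}: distance {d}, {n} physical qubits per logical qubit")
```

Under these assumptions, a physical error rate of 1/1,000 needs a code distance of 21 (882 physical qubits per logical qubit) to reach a logical error rate of one in a trillion, while a physical error rate of 1/1,000,000 needs only distance 5 (50 physical qubits). This is the sense in which more stable physical qubits make scaling easier.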

Level 3—Scale: Quantum supercomputers that can solve impactful problems which even the most powerful classical supercomputers cannot.

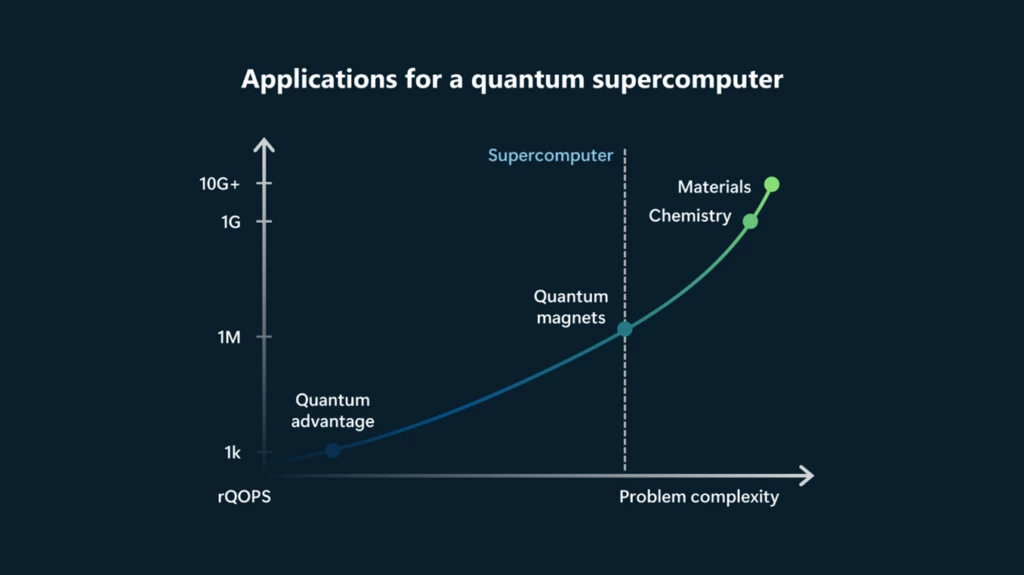

This level will be reached when it becomes possible to engineer a scaled, programmable quantum supercomputer that will be able to solve problems that are intractable on a classical computer. Such a machine can be scaled up to solve the most complex problems facing our society. As we look ahead, we need to define a good figure of merit that captures what a quantum supercomputer can do. This measure of a supercomputer’s performance should help us understand how capable the system is of solving impactful problems. We offer such a figure of merit: reliable Quantum Operations Per Second (rQOPS), which measures how many reliable operations can be executed in a second. A quantum supercomputer will need at least one million rQOPS.

Measuring a quantum supercomputer

The rQOPS metric counts operations that remain reliable for the duration of a practical quantum algorithm so that there is an assurance that it will run correctly. As we shall see below, this metric encapsulates the full system performance (as opposed to solely the physical qubit performance) and combines three key factors that are critical for scaling up to execute valuable quantum applications: scale, reliability, and speed.

A nonzero rQOPS first appears at Level 2, but the metric becomes meaningful at Level 3. To solve valuable scientific problems, the first quantum supercomputer will need to deliver at least one million rQOPS, with an error rate of at most $10^{-12}$, or only one error for every trillion operations. At one million rQOPS, a quantum supercomputer could simulate simple models of correlated materials, aiding in the creation of better superconductors, for example. To solve the most challenging commercial chemistry and materials science problems, a supercomputer will need to continue to scale to one billion rQOPS and beyond, with an error rate of at most $10^{-18}$, or one error for every quintillion operations. At one billion rQOPS, chemistry and materials science research will be accelerated by modeling new configurations and interactions of molecules.

Our industry as a whole has yet to achieve this goal, which can only happen once we transition from the NISQ era to achieving a reliable qubit. While today’s quantum computers are all performing at an rQOPS value of zero, this metric quantifies where tomorrow’s quantum computers need to be to deliver value.

Calculating rQOPS

The rQOPS of a quantum system is given by the number $Q$ of logical qubits in the system multiplied by the hardware’s logical clock speed $f$:

$$\text{rQOPS} = Q \cdot f.$$

It is expressed with a corresponding logical error rate $p_L$, which indicates the maximum tolerable error rate of the operations on the logical qubits.

The rQOPS accounts for the three key factors of scale, speed, and reliability: scale through the number of reliable qubits; speed through the dependence on the clock speed; and reliability through the encoding of physical qubits into logical qubits and the corresponding logical error rate $p_L$.
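As a minimal sketch of the formula above, the snippet below computes rQOPS from a logical qubit count and a logical clock speed, then estimates how long a fixed number of reliable operations would take. The function names are hypothetical, the 1 kHz logical clock speed is an assumed value chosen so that 1,000 logical qubits yield one million rQOPS, and the workload of one trillion operations is an assumption chosen to match a one-in-a-trillion error budget.

```python
"""Illustrative rQOPS arithmetic: rQOPS = Q * f."""

SECONDS_PER_DAY = 86_400


def rqops(logical_qubits: int, logical_clock_hz: float) -> float:
    """Reliable quantum operations per second for Q logical qubits at clock speed f."""
    if logical_qubits < 0 or logical_clock_hz < 0:
        raise ValueError("qubit count and clock speed must be non-negative")
    return logical_qubits * logical_clock_hz


def runtime_seconds(total_operations: float, rqops_value: float) -> float:
    """Time needed to execute a given number of reliable operations."""
    if rqops_value <= 0:
        raise ValueError("rQOPS must be positive to run an algorithm")
    return total_operations / rqops_value


if __name__ == "__main__":
    q = 1_000             # logical qubits
    f = 1_000.0           # assumed logical clock speed in Hz (illustrative)
    total_ops = 1e12      # assumed workload: one trillion reliable operations

    rate = rqops(q, f)
    days = runtime_seconds(total_ops, rate) / SECONDS_PER_DAY
    print(f"{rate:,.0f} rQOPS; {total_ops:.0e} operations take about {days:.1f} days")
```

With these assumed inputs the system delivers one million rQOPS, and a trillion-operation workload takes roughly 11.6 days. The same rQOPS could come from fewer logical qubits with a faster clock, or more logical qubits with a slower one.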

To facilitate calculating how many rQOPS an algorithm will require, we’ve updated the Azure Quantum Resource Estimator to output the rQOPS and $p_L$ for the user’s choice of quantum algorithm and quantum hardware architecture. This tool enables quantum innovators to develop and refine algorithms to run on tomorrow’s scaled quantum computers by revealing the rQOPS and run time required to run applications on different hardware architectures.

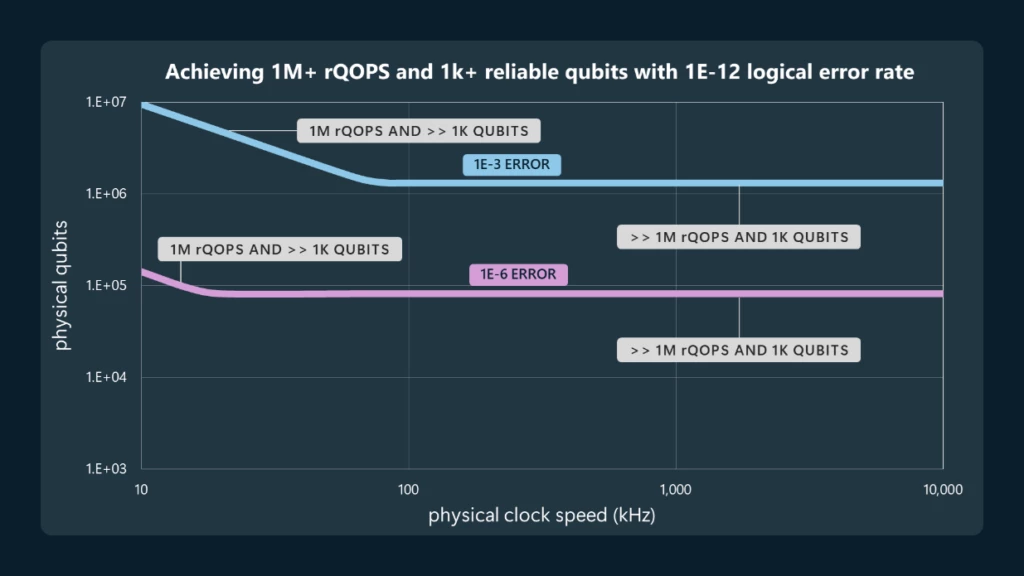

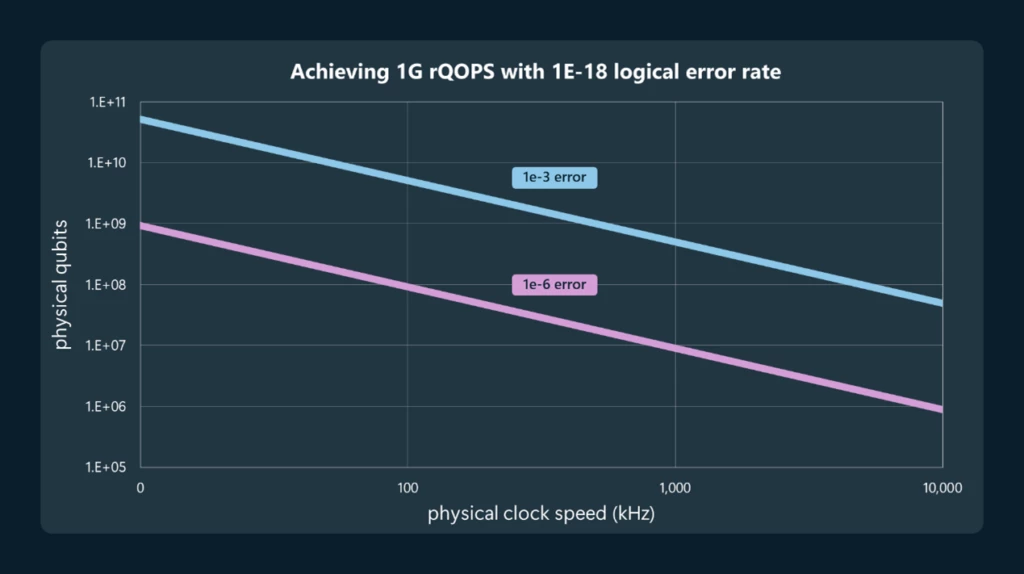

In Figures 1 and 2, we illustrate the requirements (number of physical qubits and physical clock speed) needed for one million rQOPS with $p_L = 10^{-12}$ and for one billion rQOPS with $p_L = 10^{-18}$. We plot these requirements for the two cases in which the underlying physical qubits have error rates of either $10^{-3}$ or $10^{-6}$.

Figure 1: Requirements to achieve 1M rQOPS, with a $10^{-12}$ logical error rate and at least 1,000 reliable logical qubits. The physical hardware trade-offs between clock speed and qubits are shown for devices with physical error rates of 1/1,000 and 1/1,000,000.

Figure 2: Requirements to achieve 1G rQOPS, with a $10^{-18}$ logical error rate. The physical hardware trade-offs between clock speed and qubits are shown for devices with physical error rates of 1/1,000 and 1/1,000,000.

The first milestone towards a quantum supercomputer

A quantum supercomputer must be powered by reliable logical qubits, each of which is formed from many physical qubits. The more stable the physical qubit is, the easier it is to scale up because you need fewer of them. Over the years, Microsoft researchers have fabricated a variety of qubits used in many of today’s NISQ computers, including spin, transmon, and gatemon qubits. However, we concluded that none of these qubits is perfectly suited to scale up.

That’s why we set out to engineer a brand-new qubit with inherent stability at the hardware level. It has been an arduous development path in the near term because it required that we make a physics breakthrough that has eluded researchers for decades. Overcoming many challenges, we’re thrilled to share that a peer-reviewed paper, published in Physical Review B, a journal of the American Physical Society, establishes that Microsoft has achieved the first milestone towards creating a reliable and practical quantum supercomputer.

In this paper we describe how we engineered a device in which we can controllably induce a topological phase of matter characterized by Majorana Zero Modes (MZMs).

The topological phase can enable highly stable qubits with small footprints, fast gate times, and digital control. However, disorder can destroy the topological phase and obscure its detection. Our paper reports on devices with low enough disorder to pass the topological gap protocol (TGP), thereby demonstrating this phase of matter and paving the way for a new stable qubit. The published version of the paper shows data from additional devices measured after initial presentations of this breakthrough. We have added extensive tests of the TGP with simulations that further validate it. Moreover, we have developed a new measurement of the disorder level in our devices, which demonstrates how we were able to accomplish this milestone and has seeded further improvements.

To learn more about this accomplishment, you can read the paper, analyze the data yourself in our interactive Jupyter notebooks, and watch this summary video.

The Microsoft roadmap to a quantum supercomputer

1. Create and control Majoranas: Achieved.

2. Hardware-protected qubit: The hardware-protected qubit (historically referred to as a topological qubit) will have built-in error protection. This unique qubit will scale to support a reliable qubit, and will enable engineering of a quantum supercomputer because it will be:

- Small—Each of our hardware-protected qubits will be less than 10 microns on a side, so one million can fit in the area of the smart chip on a credit card, enabling a single-module machine of practical size.

- Fast—Each qubit operation will take less than one microsecond. This means problems can be solved in weeks rather than decades or centuries.

- Controllable—Our qubits will be controlled by digital voltage pulses to ensure that a machine with millions of them doesn’t have an excessive error rate or require unattainable input/output bandwidth.

3. High-quality hardware-protected qubits: Hardware-protected qubits that can be entangled and operated through braiding, reducing error rates with a series of quality advances.

4. Multi-qubit system: A variety of quantum algorithms can be executed when multiple qubits operate together as a programmable Quantum Processing Unit (QPU) in a full stack quantum machine.

5. Resilient quantum system: A quantum machine operating on reliable logical qubits that demonstrates higher-quality operations than the underlying physical qubits. This breakthrough enables the first rQOPS.

6. Quantum supercomputer: A quantum system capable of solving impactful problems that even the most powerful classical supercomputers cannot, delivering at least one million rQOPS with an error rate of at most $10^{-12}$ (one in a trillion).

We will reach Level 2, Resilient, of the Quantum Computing Implementation Levels at our fifth milestone and will achieve Level 3, Scale, with the sixth.

Join the journey

Today marks an important moment on our path to engineering a quantum supercomputer and ultimately empowering scientists to solve many of the hardest problems facing our planet. To learn more about how we’re accelerating scientific discovery with Azure Quantum, check out the virtual event with Satya Nadella, Microsoft Chairman and Chief Executive Officer; Jason Zander, Executive Vice President of Strategic Missions and Technologies; and Brad Smith, Vice Chair and President. To follow our journey and get the latest insider news on our hardware progress, register here.