- Home

- Azure

- Azure PaaS Blog

- How to calculate Container Level Statistics in Azure Blob Storage with Azure Databricks

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Background

This article describes how to get container level stats in Azure Blob Storage, and how to work with the information provided by blob inventory.

The approach presented here uses Azure Databricks and is most suited to be used in storage accounts with a huge amount of data.

At the end of this article, you would be able to create a script to calculate:

- The total number of blobs in the container

- The total container capacity (in bytes)

- The total number of snapshots in the container

- The total container snapshots capacity (in bytes)

- The total number of versions in the container

- The total container versions capacity (in bytes)

- The total number of blobs in the container by BlobType

- The total number of blobs in the container by Content-Type

Approach

This approach is based on two steps:

-

Use Blob Inventory to collect data from Storage account

-

Use Azure Databricks to analyse the data collected with Blob Inventory

Each step has a theoretical introduction and a practical example.

Use Blob Inventory to collect data from Storage account

Theoretical introduction

The Azure Storage blob inventory feature provides an overview of your containers, blobs, snapshots, and blob versions within a storage account. Use the inventory report to understand various attributes of blobs and containers such as your total data size, age, encryption status, immutability policy, and legal hold and so on. The report provides an overview of your data for business and compliance requirements (please see more here: Azure Storage blob inventory).

The steps to enable inventory report are presented here Enable Azure Storage blob inventory reports.

Please keep in mind the following:

- Inventory run - A blob inventory run is automatically scheduled every day. It can take up to 24 hours for an inventory run to complete.

- Inventory output - Each inventory rule generates a set of files in the specified inventory destination container for that rule.

- Inventory files - Each inventory run for a rule generates the following files: Inventory file, Checksum file, Manifest file.

- Pricing and billing - Pricing for inventory is based on the number of blobs and containers that are scanned during the billing period.

- Known issues Please find the kwon issues associated with blob inventory here.

Practical example

This practical example is intended to help the user to understand the theoretical introduction.

Please see below the steps I followed to create an inventory rule:

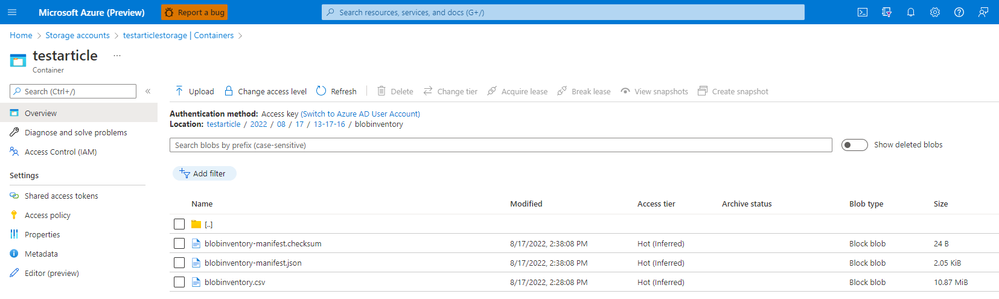

- I created a storage account with a container named "testarticle". On this container, I uploaded 50K blobs.

- I followed the steps presented above to create an inventory rule associated to the testarticle container (steps presented here Enable Azure Storage blob inventory reports) with the following configuration:

- Rule name: blobinventory

- Container: testarticle

- Object type to inventory: Blob

- Blob types: Block blobs, Page blobs, Append blobs

- Subtypes: Include blob versions, include snapshots, include deleted blobs

- Blob inventory fields: All fields

- Inventory frequency: Daily

- Export format: CSV

As I mentioned above, it can take up to 24 hours for an inventory run to complete. After 24 hours, it is possible to see that the inventory rule was executed for the Aug 17th.

The file generated has almost 11 MiB. Please keep in mind that for files of this size we can use Excel. Azure Databricks should be used when the regular tools like Excel are not able to read the file.

Use Azure Databricks to analyse the data collected with Blob Inventory

Theoretical introduction

Azure Databricks is a data analytics platform optimized for the Microsoft Azure cloud services platform. Azure Databricks offers three environments for developing data intensive applications: Databricks SQL, Databricks Data Science & Engineering, and Databricks Machine Learning (What is Azure Databricks?).

Please be aware of Azure Databricks Pricing before deciding to use it.

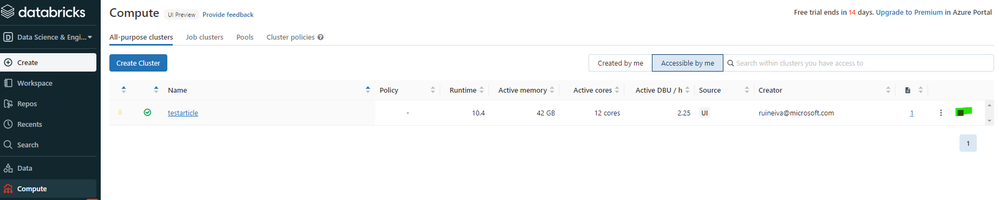

To start working with Azure Databricks we need to create and deploy an Azure Databricks workspace, and we also need to create a cluster. Please find here a QuickStart to Run a Spark job on Azure Databricks Workspace using the Azure portal.

Practical example

Now that we have an Azure Databricks workspace and a cluster, we will use Azure Databricks to read the csv file generated by the inventory rule created above, and to calculate the container stats.

To be able to connect Azure Databricks workspace to the storage account where the blob inventory file is, we have to create a notebook. Please follow the steps presented here Run a Spark job with focus on steps 1 and 2.

With the notebook created, it is necessary to access the blob inventory file. Please copy the blob inventory file to the root of the container/filesystem.

If you have a ADLS GEN2 account, please follow step 1. If you do not have, please follow step 2:

- To read the blob inventory file please replace storage_account_name, storage_account_key, container, and blob_inventory_file with the information related to your storage account and execute the following code

from pyspark.sql.types import StructType, StructField, IntegerType, StringType import pyspark.sql.functions as F storage_account_name = "StorageAccountName" storage_account_key = "StorageAccountKey" container = "ContainerName" blob_inventory_file = "blob_inventory_file_name" # Set spark configuration spark.conf.set("fs.azure.account.key.{0}.dfs.core.windows.net".format(storage_account_name), storage_account_key) # Read blob inventory file df = spark.read.csv("abfss://{0}@{1}.dfs.core.windows.net/{2}".format(container, storage_account_name, blob_inventory_file), header='true', inferSchema='true') - To read the blob inventory file please replace storage_account_name, storage_account_key, container, and blob_inventory_file with the information related to your storage account and execute the following code

from pyspark.sql.types import StructType, StructField, IntegerType, StringType import pyspark.sql.functions as F storage_account_name = "StorageAccountName" storage_account_key = "StorageAccountKey" container = "ContainerName" blob_inventory_file = "blob_inventory_file_name" # Set spark configuration spark.conf.set("fs.azure.account.key.{0}.blob.core.windows.net".format(storage_account_name), storage_account_key) # Read blob inventory file df = spark.read.csv("wasbs://{0}@{1}.blob.core.windows.net/{2}".format(container, storage_account_name, blob_inventory_file), header='true', inferSchema='true')

Please see below how to calculate the container stats with Azure Databricks organized as follow. First is presented the code sample and after the code execution result).

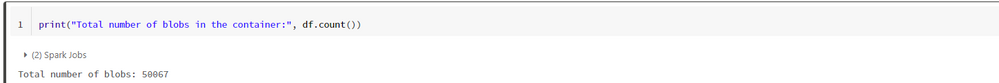

Calculate the total number of blobs in the container

print("Total number of blobs in the container:", df.count())

Calculate the total container capacity (in bytes)

display(df.agg({'Content-Length': 'sum'}).withColumnRenamed("sum(Content-Length)", "Total Container Capacity (in bytes)"))

Calculate the total number of snapshots in the container

from pyspark.sql.functions import *

print("Total number of snapshots in the container:", df.where(~(col("Snapshot")).like("Null")).count())

Calculate the total container snapshots capacity (in bytes)

dfT = df.where(~(col("Snapshot")).like("Null"))

display(dfT.agg({'Content-Length': 'sum'}).withColumnRenamed("sum(Content-Length)", "Total Container Snapshots Capacity (in bytes)"))

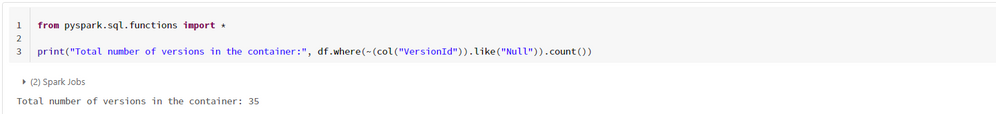

Calculate the total number of versions in the container

from pyspark.sql.functions import *

print("Total number of versions in the container:", df.where(~(col("VersionId")).like("Null")).count())

Calculate the total container versions capacity (in bytes)

dfT = df.where(~(col("VersionId")).like("Null"))

display(dfT.agg({'Content-Length': 'sum'}).withColumnRenamed("sum(Content-Length)", "Total Container Versions Capacity (in bytes)"))

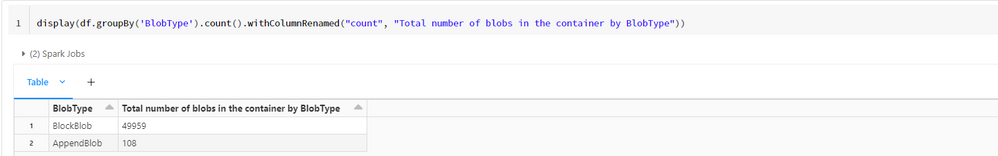

Calculate the total number of blobs in the container by BlobType

display(df.groupBy('BlobType').count().withColumnRenamed("count", "Total number of blobs in the container by BlobType"))

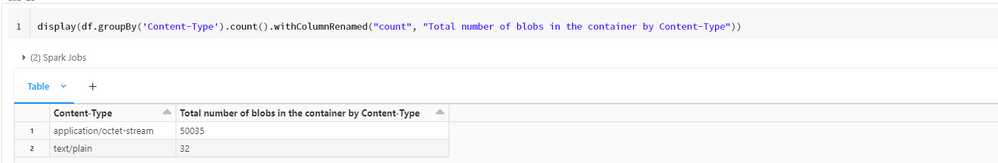

Calculate the total number of blobs in the container by Content-Type

display(df.groupBy('Content-Type').count().withColumnRenamed("count", "Total umber of blobs in the container by Content-Type"))

Final remarks:

- Review all the presented information with attention, mainly the pricing information.

-

When you finish, please go to compute and stop the cluster to avoid extra costs.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.