Integrating HPC Pack with Azure Files - the Preferred Storage Solution

Until recently, deploying a highly available, Active Directory-integrated file share to host the SMB shares for an HPC Pack workload in the Azure public cloud could be challenging. Most of the options were self-managed or third-party appliances. HPC Pack environments typically feature several shares: one for compute node application installs, one for hosting model or resource files, and one for runtime scratch space. The latter two require particular attention to bandwidth because many nodes pull similar files at the same time.

A brief overview of the AD-Integrated SMB Share ecosystem:

- Windows Server VMs

Benefits: Familiar and well understood by enterprise IT.

Building a Windows Failover Cluster or Scale-Out File Server cluster to provide highly available file shares is a valid option. It addresses the availability concerns customers had with single-instance VM-based file servers. However, either solution requires customers to manage their own file servers and disk subsystems, so they miss out on the benefits of a managed file service (reduced TCO and simplicity). The performance of a clustered file share is bounded by multiple limits on the virtual machine and its managed disks. Cloud architects need to design the cluster storage platform with these limits in mind and make appropriate choices to meet known requirements.

- Azure NetApp Files

Benefits: First-party Azure service with a rich ecosystem of support from Microsoft and NetApp, the ability to target share size and performance tiers to meet performance requirements, replication, and easy integration with Active Directory Domain Services (AD DS). It also features three performance tiers to meet customers' requirements for IOPS (input/output operations per second) and bandwidth.

- Third-party NAS (network-attached storage) appliances from the Azure Marketplace

Overview: There are many third-party marketplace offerings for SMB shares, and some have performed very well in performance testing. With any marketplace offering, there are always two support channels to investigate.

Several enhancements have been made to Azure Files over the last few years. Azure Files can now not only meet the requirements for hosting the HPC Pack SMB shares for your Windows compute nodes, but is the preferred solution for hosting them.

- On-Premises Active Directory Domain Services (AD DS) integrated - https://docs.microsoft.com/en-us/azure/storage/files/storage-files-identity-auth-active-directory-en...

Integration with an AD DS forest is critical for most HPC Pack environments, because the inputs and outputs of most HPC jobs are secured by Active Directory identities, whether through share permissions or NTFS (New Technology File System) ACLs.

- Default Share Level Permissions - https://docs.microsoft.com/en-us/azure/storage/files/storage-files-identity-ad-ds-assign-permissions...

AD DS authentication for Azure Files initially required share-level permissions to be assigned to identities that were synchronized with Azure AD and in the same forest as the synchronized domain. This was problematic for some organizations with complex AD DS sync scenarios involving multiple forests or tenants. Default share-level permissions ended those challenges: a single share-level permission can be applied to all authenticated users, while NTFS user- and group-level permissions are still enforced.

- SMB Multichannel - https://docs.microsoft.com/en-us/azure/storage/files/storage-files-smb-multichannel-performance

For multicore clients running multithreaded operations, enabling SMB Multichannel showed a 40% increase in bandwidth to a single node during my testing. SMB Multichannel establishes multiple connections to each storage target so that the bandwidth of a high-speed network interface can be fully utilized.

- Increased IOPS - https://docs.microsoft.com/en-us/azure/storage/files/files-whats-new

Azure Files on premium storage gained burst IO support and increased base IOPS.

With these enhancements, Azure Premium Files provides an excellent balance of performance and cost for HPC Pack use cases on Azure.

More on Azure Files

Azure Files is a fully managed cloud-based file share product, accessible over SMB on Windows and Linux operating systems, fulfilling common HPC Pack use cases. There are several identity-based authentication options for Azure Files over SMB. The option to set a default share-level permission provides flexibility for many deployment scenarios and requirements, whether your organization is ready to synchronize identities to the cloud or not.

Azure Files also offers a wide range of network access options, including tunneling traffic over a VPN (virtual private network), an Azure ExpressRoute connection, or a private endpoint. To ensure high availability and enable disaster recovery scenarios, Azure Files offers different redundancy options, including zone-redundant storage in certain regions, which offers 12 9's of durability over a given year. All Azure Storage is encrypted at rest by default, and you can either let Microsoft manage the encryption keys or manage and rotate the keys yourself. Building on these HA/DR and security features, Azure Storage, and Azure Files specifically, holds a variety of certifications and attestations across industries and regions. For HPC workloads, however, most organizations choose to implement a private endpoint connection to the share directly on the Azure Virtual Network and enable the storage account firewall.

Performance / Cost Evaluation:

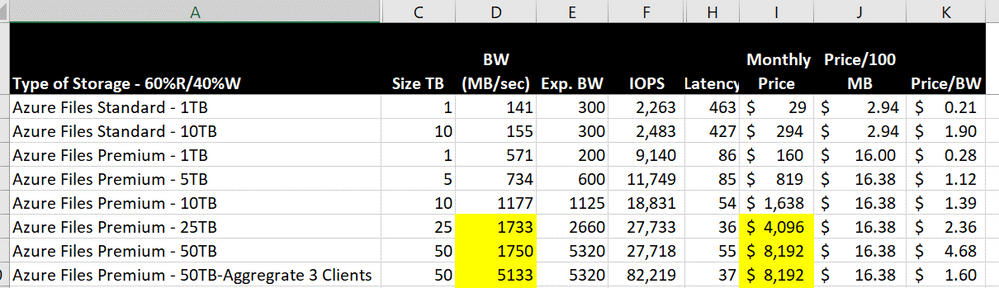

I wanted to run a storage benchmark that resembles HPC Pack environment usage: a 60%/40% read/write mix, typically sequential, with larger file sizes (<16k). Using Diskspd, an open-source project from Microsoft (https://github.com/Microsoft/diskspd), I evaluated the options against their expected bandwidth and sustained (non-burst) IOPS values from a single client. When a single client fell short of the expected performance, I used multiple clients.
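As a rough illustration of how such a run could be scripted, the sketch below assembles a Diskspd command line for a sequential workload with 40% writes. The original post does not give the exact parameters used, so the block size, thread count, queue depth, duration, file size, and UNC path in the usage example are all hypothetical placeholders, and the helper name `build_diskspd_args` is invented for this example.

```python
def build_diskspd_args(target, *, block_size, threads, outstanding_io,
                       duration_s, warmup_s=0, write_pct=40, file_size="10G"):
    """Return a Diskspd argument list for a sequential mixed read/write run.

    Every value passed in is an assumption for illustration; tune them to
    match your own workload.
    """
    if not 0 <= write_pct <= 100:
        raise ValueError("write_pct must be between 0 and 100")
    return [
        "diskspd.exe",
        f"-b{block_size}",      # size of each I/O, e.g. "64K"
        f"-d{duration_s}",      # measured duration in seconds
        f"-W{warmup_s}",        # warm-up time in seconds before measuring
        f"-t{threads}",         # threads per target file
        f"-o{outstanding_io}",  # outstanding I/Os per thread
        f"-w{write_pct}",       # percent writes; the remainder is reads
        "-Sh",                  # disable software and hardware write caching
        "-L",                   # collect latency statistics
        f"-c{file_size}",       # create the test file at this size
        target,                 # no -r flag, so access stays sequential
    ]


if __name__ == "__main__":
    # Placeholder UNC path: substitute your own storage account and share.
    args = build_diskspd_args(
        r"\\<storage-account>.file.core.windows.net\<share>\diskspd.dat",
        block_size="64K", threads=8, outstanding_io=16,
        duration_s=120, warmup_s=600,
    )
    print(" ".join(args))
```

Building the argument list in one place makes it easier to run the same profile against each storage option and from several clients at once.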

Some things stood out from my testing:

• Azure Standard Files is cost-effective for low-bandwidth scenarios that require AD-integrated shares. Azure Standard Files provides a base performance level regardless of capacity, so this level of performance may be adequate for many Dev/Test or Sandbox environments. All the same networking and identity options apply to Azure Standard Files.

• For most environments, however, Azure Files Premium is the most cost-efficient option for highly transactional, bandwidth-driven workloads.

• For shares of 25 TB and larger, multiple clients were required to fully utilize the share's expected bandwidth and IOPS. Single-client performance was limited to 1.7-1.9 GBps (about 15 Gbps) regardless of the number of file targets, threads on the host, block size of the data, or SKU (stock keeping unit) size of the host.

• I also evaluated latency and performance from nodes in all 3 Availability Zones (AZ) in a single region. For this workload, with short durations and generally large block sizes, I found similar performance regardless of which AZ the client VM resided in; however, not every workload should expect similar results.

• Monthly prices shown are for the United States East Azure regions, with no discounts applied.

• Side Note for Benchmarking: For optimal results, Azure Files requires a brief period to balance the load across multiple backend servers. During my benchmarking, I performed 10 minutes of IO to the share before collecting IOPS/throughput numbers.
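The single-client ceiling noted above suggests a simple way to estimate how many clients a benchmark needs in order to saturate a share. The sketch below converts GBps to Gbps and divides a share's expected bandwidth by the per-client limit. The 1.7 GBps default is the lower bound observed in this testing; the 5 GBps share bandwidth in the example is a hypothetical figure, not a published Azure Files limit.

```python
import math


def gbytes_to_gbits(gbytes_per_s):
    """Convert gigabytes per second to gigabits per second."""
    return gbytes_per_s * 8


def clients_needed(share_gbytes_per_s, per_client_gbytes_per_s=1.7):
    """Estimate how many clients are needed to drive a share's full bandwidth.

    Uses the conservative (lower) single-client figure by default so the
    estimate rounds toward more clients rather than fewer.
    """
    if share_gbytes_per_s <= 0 or per_client_gbytes_per_s <= 0:
        raise ValueError("bandwidth values must be positive")
    return math.ceil(share_gbytes_per_s / per_client_gbytes_per_s)


if __name__ == "__main__":
    # Upper end of the observed single-client range, expressed in Gbps.
    print(f"{gbytes_to_gbits(1.9):.1f} Gbps per client at 1.9 GBps")
    # Hypothetical share with 5 GBps of expected bandwidth.
    print(clients_needed(5.0), "clients for a 5 GBps share")
```

This is only a first estimate; actual client counts also depend on the VM size and its network limits.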

Conclusions

Azure Files Premium should be evaluated for hosting your HPC Pack shares because of its high resiliency to failure, high availability, ability to meet the high bandwidth requirements of many compute node environments, integration into customer subnets and IP (Internet Protocol) addressing, and security of the hosted data both in transit and at rest. It is important to size an environment not only on the amount of data being hosted but also on the expected performance of the share. Price per GB (gigabyte) of storage tells only part of the story; the price per MBps (megabytes per second) of bandwidth is an equally important metric.
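To make the two metrics concrete, the sketch below computes price per GB and price per MBps for two storage options. The option names, prices, capacities, and throughput figures are made-up values chosen only to show how the rankings can differ; they are not Azure list prices or results from this testing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShareOption:
    """A storage option described by monthly cost, capacity, and bandwidth."""
    name: str
    monthly_cost_usd: float
    capacity_gb: float
    throughput_mbps: float  # expected bandwidth in megabytes per second

    @property
    def cost_per_gb(self):
        return self.monthly_cost_usd / self.capacity_gb

    @property
    def cost_per_mbps(self):
        return self.monthly_cost_usd / self.throughput_mbps


# Hypothetical figures for illustration only.
options = [
    ShareOption("Option A", monthly_cost_usd=1000, capacity_gb=10_000, throughput_mbps=200),
    ShareOption("Option B", monthly_cost_usd=1600, capacity_gb=10_000, throughput_mbps=800),
]

if __name__ == "__main__":
    for opt in options:
        print(f"{opt.name}: ${opt.cost_per_gb:.3f}/GB, ${opt.cost_per_mbps:.2f}/MBps")
    print("Cheapest per GB:  ", min(options, key=lambda o: o.cost_per_gb).name)
    print("Cheapest per MBps:", min(options, key=lambda o: o.cost_per_mbps).name)
```

With these invented numbers, the option that is cheaper per GB is the more expensive one per MBps, which is why both metrics belong in the sizing exercise.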

There may be other reasons to select a storage platform other than simply cost and performance:

• Ability to synchronize data as a service

• Backup and snapshot capabilities

• Auditing and security of the service

• Monitoring and storage management reporting

With this in mind, we plan to release more blog articles in the future discussing this decision matrix for Files-Based Storage options for your HPC workloads. We also encourage feedback to be provided on Azure Files and other storage topics of interest.

#AzureHPCAI
