Integrating external Grid Engine Scheduler to CycleCloud (Cloud Bursting scenario)

Azure CycleCloud is an enterprise-friendly tool for orchestrating and managing High-Performance Computing (HPC) environments on Azure. With CycleCloud, users can provision infrastructure for HPC systems, deploy familiar HPC schedulers, and automatically scale the infrastructure to run jobs efficiently at any scale.

This blog post shows how to integrate an external Grid Engine scheduler with CycleCloud so that it can send jobs to CycleCloud for cloud bursting (sending on-premises workloads to the cloud for processing) or for hybrid HPC scenarios.

For this demo, we create two subnets: one for the external scheduler node and one for the compute nodes in CycleCloud. The networking complexities of real hybrid scenarios are out of scope here.

Environment:

- CycleCloud 8.3

- CycleCloud-Gridengine version 2.0.15

- Openlogic CentOS HPC 7.7

- 2 subnets created for deployment (hpc + default). I selected the hpc subnet for CycleCloud and default for the Scheduler node.

Architecture:

Preparing Grid Engine Scheduler (External)

- Deploy a Standard D4ads v5 VM with Openlogic CentOS-HPC 7.7 for Grid Engine Master.

Hostname is “ge-master”

- Log in to ge-master and set up NFS shares to hold the Grid Engine installation and a shared directory for users' home directories and other purposes.

# yum install nfs-utils -y

# mkdir /sched

# mkdir /shared

# mkdir -p /sched/sge/sge-2011.11

# echo "/sched *(rw,sync,no_root_squash)" >> /etc/exports

# echo "/shared *(rw,sync,no_root_squash)" >> /etc/exports

# exportfs -rv

# systemctl start nfs-server.service

# systemctl enable nfs-server.service
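The exports above allow any client (`*`). As a minimal sketch, assuming you would rather limit the shares to the compute subnet, the helpers below build an export entry for a given client spec and append it only once. The function names and the CIDR used in the usage note are hypothetical, not part of the original setup.

```shell
#!/bin/sh
# Build one /etc/exports entry of the form: <path> <client>(<options>).
# The client spec (for example a subnet CIDR) is an assumption; use your own.
make_export_line() {
    path=$1
    client=$2
    printf '%s %s(rw,sync,no_root_squash)\n' "$path" "$client"
}

# Append the entry only if that exact line is not already in the file,
# so re-running the setup does not create duplicate exports.
add_export() {
    exports_file=$1
    line=$2
    grep -qxF -- "$line" "$exports_file" 2>/dev/null \
        || printf '%s\n' "$line" >> "$exports_file"
}
```

For example, `add_export /etc/exports "$(make_export_line /sched 10.0.0.0/24)"` followed by `exportfs -rv` would export /sched to that (hypothetical) subnet only.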

- Create the user “sgeadmin” for the SGE installation. The UID and GID must be the same on every node. In the CycleCloud environment, “sgeadmin” is created with UID 536 and GID 536, so we create the user and group with the same IDs.

# groupadd -g 536 sgeadmin

# useradd -u 536 -g 536 sgeadmin
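Because mismatched IDs only show up later as NFS permission problems, it can help to check them right away. This is a small sketch of such a check; the function name `check_ids` is hypothetical, and it relies only on the standard `id` command.

```shell
#!/bin/sh
# Return 0 when the named account exists and has the expected
# numeric UID and primary GID; return non-zero otherwise.
check_ids() {
    user=$1
    want_uid=$2
    want_gid=$3
    have_uid=$(id -u "$user" 2>/dev/null) || return 1
    have_gid=$(id -g "$user" 2>/dev/null) || return 1
    [ "$have_uid" = "$want_uid" ] && [ "$have_gid" = "$want_gid" ]
}
```

Running `check_ids sgeadmin 536 536 && echo OK` on the scheduler and on an execute node should print OK on both if the IDs line up.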

- Set the ownership of the SGE_ROOT directory to sgeadmin.

# chown -R sgeadmin:sgeadmin /sched/sge/sge-2011.11/

# ls -ld /sched/sge/sge-2011.11/

drwxr-xr-x. 2 sgeadmin sgeadmin 6 Mar 9 07:41 /sched/sge/sge-2011.11/

- Download the SGE installer to SGE_ROOT directory and extract the content.

# cd /sched/sge/sge-2011.11/

# wget https://github.com/Azure/cyclecloud-gridengine/releases/download/2.0.15/sge-2011.11-64.tgz

# wget https://github.com/Azure/cyclecloud-gridengine/releases/download/2.0.15/sge-2011.11-common.tgz

# tar -zxf sge-2011.11-64.tgz

# tar -zxf sge-2011.11-common.tgz

[root@ge-master sge-2011.11]# ls -l

total 26272

drwxr-xr-x. 3 root root 50 May 22 2018 3rd_party

drwxr-xr-x. 3 root root 23 Mar 9 07:51 bin

drwxr-xr-x. 2 root root 4096 May 22 2018 ckpt

drwxr-xr-x. 2 root root 66 May 22 2018 dtrace

drwxr-xr-x. 5 root root 46 Mar 9 07:51 examples

-rwxr-xr-x. 1 root root 1350 May 22 2018 install_execd

-rwxr-xr-x. 1 root root 1350 May 22 2018 install_qmaster

-rwxr-xr-x. 1 root root 61355 May 22 2018 inst_sge

drwxr-xr-x. 3 root root 23 Mar 9 07:51 lib

drwxr-xr-x. 4 root root 207 May 22 2018 mpi

drwxr-xr-x. 3 root root 121 May 22 2018 pvm

drwxr-xr-x. 3 root root 21 May 22 2018 qmon

-rw-r--r--. 1 root root 26083793 Nov 1 12:33 sge-2011.11-64.tgz

-rw-r--r--. 1 root root 735068 Nov 1 12:33 sge-2011.11-common.tgz

drwxr-xr-x. 9 root root 4096 May 22 2018 util

drwxr-xr-x. 3 root root 23 Mar 9 07:51 utilbin

[root@ge-master sge-2011.11]#

- The SGE installation uses the default parameters, except for the cluster name, which we set to “grid1”. This name is needed later in the CycleCloud cluster deployment.

# cd /sched/sge/sge-2011.11

# ./install_qmaster

Welcome to the Grid Engine installation

---------------------------------------

Grid Engine qmaster host installation

-------------------------------------

Before you continue with the installation please read these hints:

- Your terminal window should have a size of at least

80x24 characters

- The INTR character is often bound to the key Ctrl-C.

The term >Ctrl-C< is used during the installation if you

have the possibility to abort the installation

The qmaster installation procedure will take approximately 5-10 minutes.

Hit <RETURN> to continue >>

Grid Engine admin user account

------------------------------

The current directory

/sched/sge/sge-2011.11

is owned by user

sgeadmin

If user >root< does not have write permissions in this directory on *all*

of the machines where Grid Engine will be installed (NFS partitions not

exported for user >root< with read/write permissions) it is recommended to

install Grid Engine that all spool files will be created under the user id

of user >sgeadmin<.

IMPORTANT NOTE: The daemons still have to be started by user >root<.

Do you want to install Grid Engine as admin user >sgeadmin< (y/n) [y] >>

Installing Grid Engine as admin user >sgeadmin<

Hit <RETURN> to continue >>

Checking $SGE_ROOT directory

----------------------------

The Grid Engine root directory is not set!

Please enter a correct path for SGE_ROOT.

If this directory is not correct (e.g. it may contain an automounter

prefix) enter the correct path to this directory or hit <RETURN>

to use default [/sched/sge/sge-2011.11] >>

Your $SGE_ROOT directory: /sched/sge/sge-2011.11

Hit <RETURN> to continue >>

Grid Engine TCP/IP communication service

----------------------------------------

The port for sge_qmaster is currently set as service.

sge_qmaster service set to port 6444

Now you have the possibility to set/change the communication ports by using the

>shell environment< or you may configure it via a network service, configured

in local >/etc/service<, >NIS< or >NIS+<, adding an entry in the form

sge_qmaster <port_number>/tcp

to your services database and make sure to use an unused port number.

How do you want to configure the Grid Engine communication ports?

Using the >shell environment<: [1]

Using a network service like >/etc/service<, >NIS/NIS+<: [2]

(default: 2) >>

Grid Engine TCP/IP service >sge_qmaster<

----------------------------------------

Using the service

sge_qmaster

for communication with Grid Engine.

Hit <RETURN> to continue >>

Grid Engine TCP/IP communication service

----------------------------------------

The port for sge_execd is currently set as service.

sge_execd service set to port 6445

Now you have the possibility to set/change the communication ports by using the

>shell environment< or you may configure it via a network service, configured

in local >/etc/service<, >NIS< or >NIS+<, adding an entry in the form

sge_execd <port_number>/tcp

to your services database and make sure to use an unused port number.

How do you want to configure the Grid Engine communication ports?

Using the >shell environment<: [1]

Using a network service like >/etc/service<, >NIS/NIS+<: [2]

(default: 2) >>

Grid Engine TCP/IP communication service

-----------------------------------------

Using the service

sge_execd

for communication with Grid Engine.

Hit <RETURN> to continue >>

Grid Engine cells

-----------------

Grid Engine supports multiple cells.

If you are not planning to run multiple Grid Engine clusters or if you don't

know yet what is a Grid Engine cell it is safe to keep the default cell name

default

If you want to install multiple cells you can enter a cell name now.

The environment variable

$SGE_CELL=<your_cell_name>

will be set for all further Grid Engine commands.

Enter cell name [default] >>

Unique cluster name

-------------------

The cluster name uniquely identifies a specific Sun Grid Engine cluster.

The cluster name must be unique throughout your organization. The name

is not related to the SGE cell.

The cluster name must start with a letter ([A-Za-z]), followed by letters,

digits ([0-9]), dashes (-) or underscores (_).

Enter new cluster name or hit <RETURN>

to use default [p6444] >> grid1

creating directory: /sched/sge/sge-2011.11/default/common

Your $SGE_CLUSTER_NAME: grid1

Hit <RETURN> to continue >>

Grid Engine qmaster spool directory

-----------------------------------

The qmaster spool directory is the place where the qmaster daemon stores

the configuration and the state of the queuing system.

The admin user >sgeadmin< must have read/write access

to the qmaster spool directory.

If you will install shadow master hosts or if you want to be able to start

the qmaster daemon on other hosts (see the corresponding section in the

Grid Engine Installation and Administration Manual for details) the account

on the shadow master hosts also needs read/write access to this directory.

Enter a qmaster spool directory [/sched/sge/sge-2011.11/default/spool/qmaster] >>

Using qmaster spool directory >/sched/sge/sge-2011.11/default/spool/qmaster<.

Hit <RETURN> to continue >>

Verifying and setting file permissions

--------------------------------------

Did you install this version with >pkgadd< or did you already verify

and set the file permissions of your distribution (enter: y) (y/n) [y] >>

We do not verify file permissions. Hit <RETURN> to continue >>

Select default Grid Engine hostname resolving method

----------------------------------------------------

Are all hosts of your cluster in one DNS domain? If this is

the case the hostnames

>hostA< and >hostA.foo.com<

would be treated as equal, because the DNS domain name >foo.com<

is ignored when comparing hostnames.

Are all hosts of your cluster in a single DNS domain (y/n) [y] >>

Ignoring domain name when comparing hostnames.

Hit <RETURN> to continue >>

Grid Engine JMX MBean server

----------------------------

In order to use the SGE Inspect or the Service Domain Manager (SDM)

SGE adapter you need to configure a JMX server in qmaster. Qmaster

will then load a Java Virtual Machine through a shared library.

NOTE: Java 1.5 or later is required for the JMX MBean server.

Do you want to enable the JMX MBean server (y/n) [n] >>

Making directories

------------------

creating directory: /sched/sge/sge-2011.11/default/spool/qmaster

creating directory: /sched/sge/sge-2011.11/default/spool/qmaster/job_scripts

Hit <RETURN> to continue >>

Berkeley Database spooling parameters

-------------------------------------

Please enter the database directory now.

Default: [/sched/sge/sge-2011.11/default/spool/spooldb] >>

creating directory: /sched/sge/sge-2011.11/default/spool/spooldb

Dumping bootstrapping information

Initializing spooling database

Hit <RETURN> to continue >>

Grid Engine group id range

--------------------------

When jobs are started under the control of Grid Engine an additional group id

is set on platforms which do not support jobs. This is done to provide maximum

control for Grid Engine jobs.

This additional UNIX group id range must be unused group id's in your system.

Each job will be assigned a unique id during the time it is running.

Therefore you need to provide a range of id's which will be assigned

dynamically for jobs.

The range must be big enough to provide enough numbers for the maximum number

of Grid Engine jobs running at a single moment on a single host. E.g. a range

like >20000-20100< means, that Grid Engine will use the group ids from

20000-20100 and provides a range for 100 Grid Engine jobs at the same time

on a single host.

You can change at any time the group id range in your cluster configuration.

Please enter a range [20000-20100] >>

Using >20000-20100< as gid range. Hit <RETURN> to continue >>

Grid Engine cluster configuration

---------------------------------

Please give the basic configuration parameters of your Grid Engine

installation:

<execd_spool_dir>

The pathname of the spool directory of the execution hosts. User >sgeadmin<

must have the right to create this directory and to write into it.

Default: [/sched/sge/sge-2011.11/default/spool] >>

Grid Engine cluster configuration (continued)

---------------------------------------------

<administrator_mail>

The email address of the administrator to whom problem reports are sent.

It is recommended to configure this parameter. You may use >none<

if you do not wish to receive administrator mail.

Please enter an email address in the form >user@foo.com<.

Default: [none] >>

The following parameters for the cluster configuration were configured:

execd_spool_dir /sched/sge/sge-2011.11/default/spool

administrator_mail none

Do you want to change the configuration parameters (y/n) [n] >>

Creating local configuration

----------------------------

Creating >act_qmaster< file

Adding default complex attributes

Adding default parallel environments (PE)

Adding SGE default usersets

Adding >sge_aliases< path aliases file

Adding >qtask< qtcsh sample default request file

Adding >sge_request< default submit options file

Creating >sgemaster< script

Creating >sgeexecd< script

Creating settings files for >.profile/.cshrc<

sed: can't read dist/util/create_settings.sh: No such file or directory

Hit <RETURN> to continue >>

qmaster startup script

----------------------

We can install the startup script that will

start qmaster at machine boot (y/n) [y] >>

Installing startup script /etc/rc.d/rc3.d/S95sgemaster.grid1 and /etc/rc.d/rc3.d/K03sgemaster.grid1

Hit <RETURN> to continue >>

Grid Engine qmaster startup

---------------------------

Starting qmaster daemon. Please wait ...

sed: can't read dist/util/rctemplates/sgemaster_template: No such file or directory

starting sge_qmaster

Hit <RETURN> to continue >>

Adding Grid Engine hosts

------------------------

Please now add the list of hosts, where you will later install your execution

daemons. These hosts will be also added as valid submit hosts.

Please enter a blank separated list of your execution hosts. You may

press <RETURN> if the line is getting too long. Once you are finished

simply press <RETURN> without entering a name.

You also may prepare a file with the hostnames of the machines where you plan

to install Grid Engine. This may be convenient if you are installing Grid

Engine on many hosts.

Do you want to use a file which contains the list of hosts (y/n) [n] >>

Adding admin and submit hosts

-----------------------------

Please enter a blank seperated list of hosts.

Stop by entering <RETURN>. You may repeat this step until you are

entering an empty list. You will see messages from Grid Engine

when the hosts are added.

Host(s):

Finished adding hosts. Hit <RETURN> to continue >>

If you want to use a shadow host, it is recommended to add this host

to the list of administrative hosts.

If you are not sure, it is also possible to add or remove hosts after the

installation with <qconf -ah hostname> for adding and <qconf -dh hostname>

for removing this host

Attention: This is not the shadow host installation

procedure.

You still have to install the shadow host separately

Do you want to add your shadow host(s) now? (y/n) [y] >> n

Creating the default <all.q> queue and <allhosts> hostgroup

-----------------------------------------------------------

root@ge-master.2bdikvxkkxjeffxswkrwrjvvra.bx.internal.cloudapp.net added "@allhosts" to host group list

root@ge-master.2bdikvxkkxjeffxswkrwrjvvra.bx.internal.cloudapp.net added "all.q" to cluster queue list

Hit <RETURN> to continue >>

Scheduler Tuning

----------------

The details on the different options are described in the manual.

Configurations

--------------

1) Normal

Fixed interval scheduling, report limited scheduling information,

actual + assumed load

2) High

Fixed interval scheduling, report limited scheduling information,

actual load

3) Max

Immediate Scheduling, report no scheduling information,

actual load

Enter the number of your preferred configuration and hit <RETURN>!

Default configuration is [1] >>

We're configuring the scheduler with >Normal< settings!

Do you agree? (y/n) [y] >> y

Using Grid Engine

-----------------

You should now enter the command:

source /sched/sge/sge-2011.11/default/common/settings.csh

if you are a csh/tcsh user or

# . /sched/sge/sge-2011.11/default/common/settings.sh

if you are a sh/ksh user.

This will set or expand the following environment variables:

- $SGE_ROOT (always necessary)

- $SGE_CELL (if you are using a cell other than >default<)

- $SGE_CLUSTER_NAME (always necessary)

- $SGE_QMASTER_PORT (if you haven't added the service >sge_qmaster<)

- $SGE_EXECD_PORT (if you haven't added the service >sge_execd<)

- $PATH/$path (to find the Grid Engine binaries)

- $MANPATH (to access the manual pages)

Hit <RETURN> to see where Grid Engine logs messages >>

Grid Engine messages

--------------------

Grid Engine messages can be found at:

/tmp/qmaster_messages (during qmaster startup)

/tmp/execd_messages (during execution daemon startup)

After startup the daemons log their messages in their spool directories.

Qmaster: /sched/sge/sge-2011.11/default/spool/qmaster/messages

Exec daemon: <execd_spool_dir>/<hostname>/messages

Grid Engine startup scripts

---------------------------

Grid Engine startup scripts can be found at:

/sched/sge/sge-2011.11/default/common/sgemaster (qmaster)

/sched/sge/sge-2011.11/default/common/sgeexecd (execd)

Do you want to see previous screen about using Grid Engine again (y/n) [n] >>

Your Grid Engine qmaster installation is now completed

------------------------------------------------------

Please now login to all hosts where you want to run an execution daemon

and start the execution host installation procedure.

If you want to run an execution daemon on this host, please do not forget

to make the execution host installation in this host as well.

All execution hosts must be administrative hosts during the installation.

All hosts which you added to the list of administrative hosts during this

installation procedure can now be installed.

You may verify your administrative hosts with the command

# qconf -sh

and you may add new administrative hosts with the command

# qconf -ah <hostname>

Please hit <RETURN> >>

Update the SGE_EXECD_PORT port number in /sched/sge/sge-2011.11/default/common/settings.sh.

The default installation uses port 6444 for sge_qmaster and 6445 for sge_execd. The CycleCloud environment uses a different port, so we need to set the port for SGE_EXECD explicitly.

unset SGE_QMASTER_PORT

unset SGE_EXECD_PORT

SGE_EXECD_PORT=6445; export SGE_EXECD_PORT #new line

Copy /sched/sge/sge-2011.11/default/common/settings.sh to /etc/profile.d so that the required SGE variables are set at user login.

# cp /sched/sge/sge-2011.11/default/common/settings.sh /etc/profile.d/sgesettings.sh

Source the settings file and check that the SGE variables are correct.

# source /etc/profile.d/sgesettings.sh

# env | grep SGE

SGE_CELL=default

SGE_EXECD_PORT=6445

SGE_ROOT=/sched/sge/sge-2011.11

SGE_CLUSTER_NAME=grid1
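The same check can be scripted instead of read by eye. The sketch below reports any of the four variables shown above that is unset or empty; the function name `check_sge_env` is hypothetical.

```shell
#!/bin/sh
# Print the name of each required SGE variable that is unset or empty.
# Returns 0 only when all of them are set.
check_sge_env() {
    missing=0
    for name in SGE_ROOT SGE_CELL SGE_CLUSTER_NAME SGE_EXECD_PORT; do
        # Indirect lookup of the variable named in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

After `source /etc/profile.d/sgesettings.sh`, calling `check_sge_env` should print nothing and return success.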

Setting up the Queues, Parallel Environment and Host groups

- Check the current parallel environments.

# qconf -spl

make

- Add a parallel environment called “mpi” and set the following parameters.

# qconf -ap mpi

pe_name mpi

slots 999

user_lists NONE

xuser_lists NONE

start_proc_args /sched/sge/sge-2011.11/mpi/startmpi.sh -unique $pe_hostfile

stop_proc_args /sched/sge/sge-2011.11/mpi/stopmpi.sh

allocation_rule $fill_up

control_slaves TRUE

job_is_first_task FALSE

urgency_slots min

accounting_summary FALSE
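`qconf -ap` opens an editor. If you prefer a repeatable, non-interactive setup, one option is to write the same definition to a file and load it with `qconf -Ap <file>`. The sketch below only writes the file; the function name and the output path in the usage note are hypothetical, and the values are the ones listed above.

```shell
#!/bin/sh
# Write the "mpi" parallel environment definition to the given file.
# The quoted heredoc delimiter keeps $pe_hostfile and $fill_up literal.
write_mpi_pe() {
    out=$1
    cat > "$out" <<'EOF'
pe_name            mpi
slots              999
user_lists         NONE
xuser_lists        NONE
start_proc_args    /sched/sge/sge-2011.11/mpi/startmpi.sh -unique $pe_hostfile
stop_proc_args     /sched/sge/sge-2011.11/mpi/stopmpi.sh
allocation_rule    $fill_up
control_slaves     TRUE
job_is_first_task  FALSE
urgency_slots      min
accounting_summary FALSE
EOF
}
```

Usage would look like `write_mpi_pe /tmp/mpi.pe && qconf -Ap /tmp/mpi.pe`, followed by `qconf -sp mpi` to confirm the result.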

- Check the host groups.

# qconf -shgrpl

@allhosts

- Create a new host group named @cyclempi for the CycleCloud execute nodes. It starts out empty.

# qconf -ahgrp @cyclempi

root@ge-master.2bdikvxkkxjeffxswkrwrjvvra.bx.internal.cloudapp.net added "@cyclempi" to host group list

- Verify the hostgroups.

# qconf -shgrp @cyclempi

group_name @cyclempi

hostlist NONE

# qconf -shgrpl

@allhosts

@cyclempi

- Check the queues available in SGE after installation.

# qconf -sql

all.q

- Update a couple of parameters in all.q (hostlist and pe_list) so that the queue accepts jobs for the CycleCloud execute nodes.

# qconf -mq all.q

qname all.q

hostlist @allhosts @cyclempi

seq_no 0

load_thresholds np_load_avg=1.75

suspend_thresholds NONE

nsuspend 1

suspend_interval 00:05:00

priority 0

min_cpu_interval 00:05:00

processors UNDEFINED

qtype BATCH INTERACTIVE

ckpt_list NONE

pe_list NONE,[@cyclempi=mpi]

rerun FALSE

slots 1

tmpdir /tmp

shell /bin/bash

prolog NONE

epilog NONE
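To confirm the edit took effect, the queue definition can be checked from a script. This is a rough sketch that reads `qconf -sq all.q` output on standard input and looks for the two values changed above. The function name is hypothetical, and it assumes each attribute sits on a single line (long values that qconf wraps with a trailing backslash are not handled).

```shell
#!/bin/sh
# Read `qconf -sq all.q` output on stdin and succeed only if the queue
# lists the @cyclempi host group and maps the mpi PE to that group.
queue_ready_for_cyclecloud() {
    awk '
        $1 == "hostlist" { for (i = 2; i <= NF; i++) if ($i == "@cyclempi") host = 1 }
        $1 == "pe_list"  { if (index($0, "[@cyclempi=mpi]")) pe = 1 }
        END { exit !(host && pe) }
    '
}
```

For example: `qconf -sq all.q | queue_ready_for_cyclecloud && echo "all.q is ready"`.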

SGE preparation is complete. Next, we prepare the CycleCloud side of the integration.

CycleCloud settings:

Open the CycleCloud portal, create the cluster as usual, and configure the following, mainly in the “Network Attached Storage” settings:

- Cluster name is “hybrid”

- Select “External Scheduler” option.

- Edit the NFS shares. Select External NFS and add the NFS server IP address (in my case, the external scheduler is also the NFS server). Use the “Add NFS Mount” option for the /sched mount point.

- In the “Advanced settings” select “CentOS 7” for all the compute nodes and save the cluster.

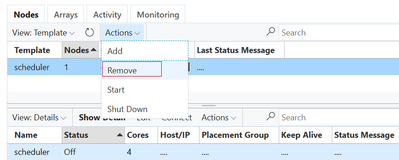

- Before starting the cluster, remove the scheduler node from the CycleCloud cluster, because we have an external scheduler for job submission.

- Start the cluster.

- Add a node in CycleCloud manually and copy its /etc/hosts file to the master node to set up name resolution. CycleCloud generates a hosts file during startup and distributes it to all nodes. Because our scheduler is external, we need to copy this file to it manually for hostname resolution.

The manually added node will show a software configuration error. Ignore it, log in to the node, and copy the /etc/hosts file to the /shared/ location.

[vinil@ip-0ADE012D ~]$ sudo cp /etc/hosts /shared/

On ge-master, I overwrite /etc/hosts because it contains no node-specific entries. If your file does have node-specific entries, take only the IP addresses and hostnames of the CycleCloud execute nodes from the copied file.

[root@ge-master ~]# cp /shared/hosts /etc/hosts

cp: overwrite ‘/etc/hosts’? y

[root@ge-master ~]#
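If the scheduler's /etc/hosts does carry entries you want to keep, merging is safer than overwriting. The following is a minimal sketch, assuming a plain line-by-line comparison is good enough: it appends only the lines from the copied file that are not already present verbatim. The function name `merge_hosts` is hypothetical.

```shell
#!/bin/sh
# Append to "$2" every non-empty, non-comment line of "$1" that is not
# already present verbatim in "$2". Existing lines in "$2" are kept.
merge_hosts() {
    src=$1
    dst=$2
    # Collect the new lines first, then append, so the destination file
    # is never read and written at the same time.
    new=$(grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$src" \
            | grep -vxFf "$dst") || true
    [ -z "$new" ] || printf '%s\n' "$new" >> "$dst"
}
```

Usage would be `cp /etc/hosts /etc/hosts.bak && merge_hosts /shared/hosts /etc/hosts`. Note that this does not detect the same hostname mapped to a different IP; it only avoids exact duplicate lines.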

- CycleCloud setup is completed.

CycleCloud & Scheduler Integration.

- Download cyclecloud-gridengine-pkg to scheduler.

# wget https://github.com/Azure/cyclecloud-gridengine/releases/download/2.0.15/cyclecloud-gridengine-pkg-2.0.15.tar.gz

- Install cyclecloud-gridengine-pkg in the scheduler using the following commands.

# tar -zxvf cyclecloud-gridengine-pkg-2.0.15.tar.gz

# yum install python3 -y

# cd cyclecloud-gridengine/

# ./install.sh

- Generate an autoscale.json file so that the external scheduler can autoscale nodes in CycleCloud.

./generate_autoscale_json.sh --cluster-name hybrid --username username --password password --url https://cyclecloudip

The cluster name must match the cluster name given in the CycleCloud portal. I used hybrid.

Username: CycleCloud username

Password: CycleCloud password

[root@ge-master cyclecloud-gridengine]# ./generate_autoscale_json.sh --cluster-name hybrid --username <username> --password <password> --url https://10.222.1.36

Complex slot_type does not exist. Skipping default_resource definition.

Complex nodearray does not exist. Skipping default_resource definition.

Hostgroup @cyclehtc does not exist. Creating a default_hostgroup that you can edit manually. Defaulting to @allhosts

Complex slot_type does not exist. Excluding from relevant_complexes.

Complex nodearray does not exist. Excluding from relevant_complexes.

Complex m_mem_free does not exist. Excluding from relevant_complexes.

Complex exclusive does not exist. Excluding from relevant_complexes.

You have new mail in /var/spool/mail/root

[root@ge-master cyclecloud-gridengine]#

- This creates the file /opt/cycle/gridengine/autoscale.json.

Review it and correct any parameters that need changing, such as the hostgroup and any complex resources.
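A hand edit can easily leave a stray comma or quote behind. As a small sketch, the helper below checks that a file still parses as JSON before the autoscaler reads it. It uses the `json.tool` module from the python3 package installed earlier; the function name `json_is_valid` is hypothetical.

```shell
#!/bin/sh
# Succeed only if the given file parses as JSON.
# Relies on python3, which the steps above install on the scheduler.
json_is_valid() {
    python3 -m json.tool "$1" > /dev/null 2>&1
}
```

For example: `json_is_valid /opt/cycle/gridengine/autoscale.json || echo "autoscale.json is not valid JSON"`.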

- Create the logs directory for autoscale using the following commands. We are not using jetpack on the external scheduler, but the autoscaler looks for the /opt/cycle/jetpack/logs location.

# mkdir -p /opt/cycle/jetpack/logs/

# touch /opt/cycle/jetpack/logs/autoscale.log

- Test autoscale using the following command. It should complete without any errors.

# azge autoscale -c /opt/cycle/gridengine/autoscale.json

NAME HOSTNAME JOB_IDS HOSTGROUPS EXISTS REQUIRED MANAGED SLOTS *SLOTS VM_SIZE MEMORY VCPU_COUNT STATE PLACEMENT_GROUP CREATE_TIME_REMAINING IDLE_TIME_REMAINING

- You need to add the scheduler as a submit host.

# qconf -as ge-master.2bdikvxkkxjeffxswkrwrjvvra.bx.internal.cloudapp.net

ge-master.2bdikvxkkxjeffxswkrwrjvvra.bx.internal.cloudapp.net added to submit host list

- Submit a test job to check that everything works. I used the root account for this test. In real scenarios, make sure that users and their home directories exist on both the external scheduler and the compute nodes, or use a centralized authentication mechanism for user management. Make sure the users' GIDs are in the 20000-20100 range (the default setting) unless you change the GID range for job submission.

# echo sleep 1000 | qsub -pe mpi 8

Your job 1 ("STDIN") has been submitted
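Piping a command into qsub is fine for a quick test. For anything longer, a job script with embedded `#$` directives is easier to keep around. The sketch below writes such a script with the same parallel environment request; the function name, the job name `sleep_test`, and the file name in the usage note are hypothetical.

```shell
#!/bin/sh
# Write a small SGE job script that requests the mpi parallel
# environment with the given number of slots.
write_job_script() {
    out=$1
    slots=$2
    # Unquoted heredoc: $slots is expanded, \$ stays a literal dollar sign.
    cat > "$out" <<EOF
#!/bin/bash
#\$ -N sleep_test
#\$ -cwd
#\$ -pe mpi $slots
sleep 1000
EOF
}
```

Usage would be `write_job_script sleep.sh 8 && qsub sleep.sh`, which requests the same 8 slots as the one-liner above.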

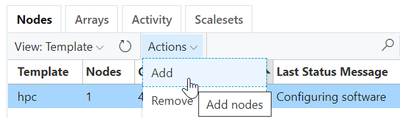

- You will see two execute nodes coming up in the CycleCloud portal.

Running azge autoscale on the external scheduler node now lists these nodes.

[root@ge-master cyclecloud-gridengine]# azge autoscale

NAME HOSTNAME JOB_IDS HOSTGROUPS EXISTS REQUIRED MANAGED SLOTS *SLOTS VM_SIZE MEMORY VCPU_COUNT STATE PLACEMENT_GROUP CREATE_TIME_REMAINING IDLE_TIME_REMAINING

hpc-1 tbd 1 @cyclempi True True True 4 0 Standard_F4 8.00g 4 Acquiring cyclempi 0.0 -1

hpc-2 tbd 1 @cyclempi True True True 4 0 Standard_F4 8.00g 4 Acquiring cyclempi 0.0 -1

You have new mail in /var/spool/mail/root

[root@ge-master cyclecloud-gridengine]#
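When watching nodes come up, it can be handy to count how many are in a given state. This is a rough sketch that parses the table printed above. It finds the STATE column from the header row and assumes every cell is non-empty, as in the sample output; a row with an empty cell would shift the columns. The function name is hypothetical.

```shell
#!/bin/sh
# Read `azge autoscale` output on stdin and print how many nodes are in
# the given state. The STATE column is located from the header row.
count_nodes_in_state() {
    awk -v want="$1" '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == "STATE") col = i; next }
        col && $col == want { n++ }
        END { print n + 0 }
    '
}
```

For example: `azge autoscale -c /opt/cycle/gridengine/autoscale.json | count_nodes_in_state Acquiring`.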

NOTE: When you are working with an external scheduler node, make sure that the required network ports for the Grid Engine scheduler, compute nodes, file shares, license server etc. are opened for successful communication.
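A quick way to test this is a TCP connect check from each side. The sketch below uses bash's /dev/tcp feature with a 3-second timeout; the function name `port_open` is hypothetical. Ports 6444 and 6445 are the sge_qmaster and sge_execd ports from the installation above, and 2049 is the standard NFS port.

```shell
#!/bin/sh
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# Uses bash's /dev/tcp redirection, so bash and timeout must be installed.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

From an execute node, `for p in 6444 2049; do port_open ge-master "$p" || echo "port $p blocked"; done` checks the qmaster and NFS ports. From ge-master, `port_open <execute-node> 6445` checks the execd port once the node is running.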

