
Next-Gen Customer Service: Azure's AI-Powered Speech, Translation and Summarization


Published Apr 25 2024 12:18 PM


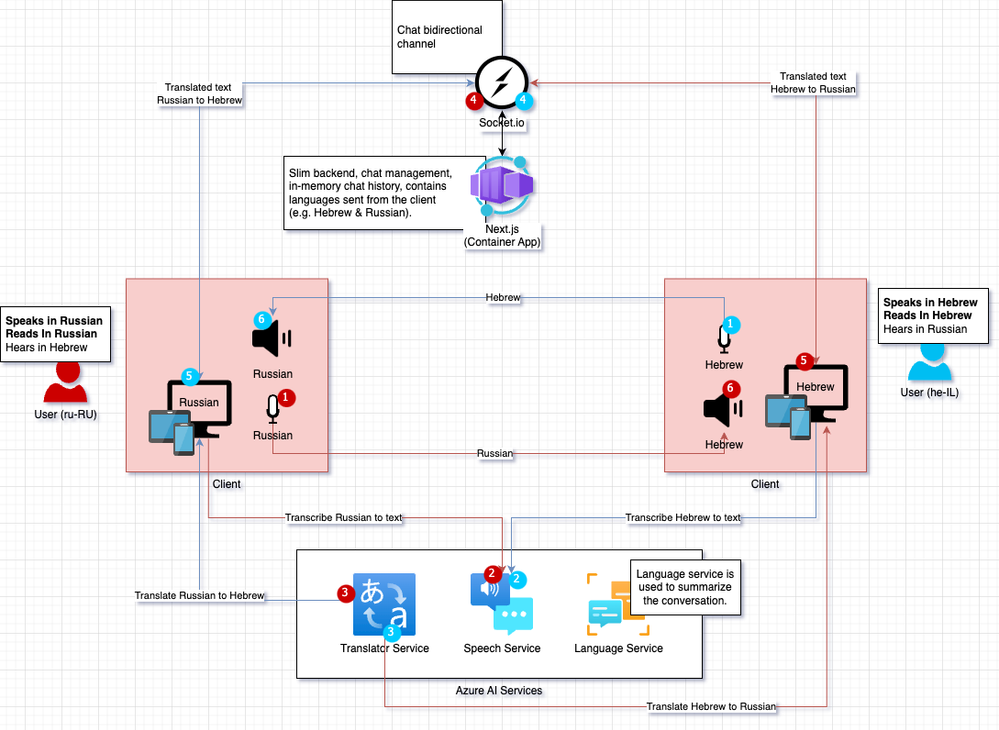

The purpose of this solution is to enable applications to incorporate AI capabilities. In the upcoming demo, I will show how to transcribe and speak (Azure AI Speech), translate (Azure AI Translator), and summarize (Azure AI Language) conversations between customers and businesses without significantly modifying your existing apps.

This application can help in scenarios where two parties speak different languages and need real-time translation. Examples include call centers where the representative and the customer do not share a language, bank tellers serving foreign clients, doctors communicating with elderly patients who do not speak the local language well, and other situations where both parties need to converse in their own native languages.

In this solution, a React.js client uses the client-side JavaScript SDK and the REST APIs of Azure AI Services. The application's backend is a slim Next.js (Node.js) server that uses Azure Web PubSub for Socket.IO to provide real-time, duplex communication between the client and the server. The Next.js backend is hosted on Azure Container Apps.

Scenario explained

Prerequisites

> Note: This solution focuses on the client side of Azure AI Services. To keep it simple, we create the surrounding resources manually in the Azure portal.

- An active Azure subscription. If you don't have an Azure subscription, you can create one for free.

- Create a Speech resource in the Azure portal.

- Create a Translator resource in the Azure portal.

- Create a Language resource in the Azure portal.

- Create a Container Registry in the Azure portal.

- Create an Azure Web PubSub for Socket.IO resource using

Prepare the environment

Clone the repository

```shell
git clone https://github.com/eladtpro/azure-ai-services-client-side.git
```

Set the Next.js environment variables (.env)

> Note: The Next.js local server requires loading environment variables from the .env file. Use the env.sample template to create a new .env file in the project root and replace the placeholders with the actual values.

> Important: The .env file must NEVER be committed to the repository.

The .env file should look like this:

```
WEBSITE_HOSTNAME=http://localhost:3001
NODE_ENV='development'
PORT=3000
SOCKET_PORT=29011
SOCKET_ENDPOINT=https://<SOCKET_IO_SERVICE>.webpubsub.azure.com
SOCKET_CONNECTION_STRING=<SOCKET_IO_CONNECTION_STRING>
SPEECH_KEY=<SPEECH_KEY>
SPEECH_REGION=westeurope
LANGUAGE_KEY=<LANGUAGE_KEY>
LANGUAGE_REGION=westeurope
LANGUAGE_ENDPOINT=https://<LANGUAGE_SERVICE>.cognitiveservices.azure.com/
TRANSLATE_KEY=<TRANSLATE_KEY>
TRANSLATE_ENDPOINT=https://api.cognitive.microsofttranslator.com/
TRANSLATE_REGION=westeurope
```

Set the environment variables for the setup.sh script (.env.sh)

> Note: The setup.sh script creates the Azure Container App resource that runs the Next.js server. It loads its environment variables from a .env.sh file in the project root. Use the .env.sh.sample template to create this file, then replace the placeholders with the actual values.

> Important: The .env.sh file must NOT be committed to the repository.

> Important: The .env.sh file depends on the .env file we created in the previous step.

The .env.sh file should look like this:

```
RESOURCE_GROUP=<RESOURCE_GROUP>
LOCATION=westeurope
CONTAINER_APP_NAME=aiservices
CONTAINER_APP_IMAGE=<CONTAINER_REGISTRY>.azurecr.io/aiservices:latest
CONTAINER_APP_PORT=80
CONTAINER_REGISTRY_SERVER=<CONTAINER_REGISTRY>.azurecr.io
CONTAINER_REGISTRY_IDENTITY=system
CONTAINER_ENVIRONMENT_NAME=env-ai-services
LOGS_WORKSPACE_ID=<LOGS_WORKSPACE_ID>
LOGS_WORKSPACE_KEY=<LOGS_WORKSPACE_KEY>
SUBSCRIPTION_ID=<SUBSCRIPTION_ID>
```

Create a service principal (App registration) and save it as a GitHub secret

> Note: The GitHub Actions workflow uses the app registration's service principal for two tasks: pushing images to the Azure Container Registry (ACR) and deploying Azure Container Apps. For both tasks, the service principal needs the Contributor role on the Azure resource group.

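Log in to Azure:

```shell
az login
```

Create the app registration service principal:

```shell
# SUBSCRIPTION_ID and RESOURCE_GROUP must be set in the current shell, for example by sourcing .env.sh
az ad sp create-for-rbac \
  --name aiservices-github \
  --role Contributor \
  --scopes /subscriptions/${SUBSCRIPTION_ID}/resourceGroups/${RESOURCE_GROUP} \
  --sdk-auth
```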

The command prints JSON output similar to the following:

```json
{
  "clientId": "00000000-0000-0000-0000-000000000000",
  "clientSecret": "00000000000000000000000000000000",
  "subscriptionId": "00000000-0000-0000-0000-000000000000",
  "tenantId": "00000000-0000-0000-0000-000000000000",
  "activeDirectoryEndpointUrl": "https://login.microsoftonline.com",
  "resourceManagerEndpointUrl": "https://management.azure.com/",
  "activeDirectoryGraphResourceId": "https://graph.windows.net/",
  "sqlManagementEndpointUrl": "https://management.core.windows.net:8443/",
  "galleryEndpointUrl": "https://gallery.azure.com/",
  "managementEndpointUrl": "https://management.core.windows.net/"
}
```

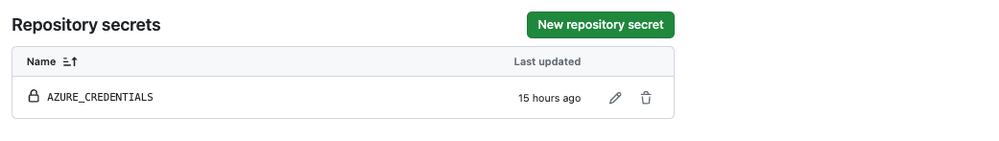
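A mistyped or truncated secret usually shows up only later, when the workflow tries to log in. The following is a hedged sanity check to run before you paste the JSON into the secret. It is a hypothetical helper, not part of the sample repository, and it assumes the workflow logs in with the four client-secret fields (`clientId`, `clientSecret`, `subscriptionId`, `tenantId`). The workflow file itself is not shown in this post.

```javascript
// Hypothetical helper (not from the sample repository): sanity-checks the JSON printed by
// `az ad sp create-for-rbac --sdk-auth` before it is stored as a GitHub secret.
const REQUIRED_FIELDS = ["clientId", "clientSecret", "subscriptionId", "tenantId"];
const GUID_FIELDS = ["clientId", "subscriptionId", "tenantId"];
const GUID_PATTERN = /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i;

function validateSdkAuthJson(jsonText) {
  let creds;
  try {
    creds = JSON.parse(jsonText);
  } catch {
    return { ok: false, problems: ["output is not valid JSON"] };
  }

  const problems = [];
  // every login field must be present and non-empty
  for (const field of REQUIRED_FIELDS) {
    if (typeof creds[field] !== "string" || creds[field].length === 0) {
      problems.push(`missing ${field}`);
    }
  }
  // IDs are GUIDs; the client secret is an opaque string, so it is not pattern-checked
  for (const field of GUID_FIELDS) {
    if (typeof creds[field] === "string" && !GUID_PATTERN.test(creds[field])) {
      problems.push(`${field} is not a GUID`);
    }
  }
  return { ok: problems.length === 0, problems };
}

const sample = JSON.stringify({
  clientId: "00000000-0000-0000-0000-000000000000",
  clientSecret: "00000000000000000000000000000000",
  subscriptionId: "00000000-0000-0000-0000-000000000000",
  tenantId: "00000000-0000-0000-0000-000000000000",
});
console.log(validateSdkAuthJson(sample)); // { ok: true, problems: [] }
```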

Set other variables for the GitHub Actions workflow

In the GitHub repository settings, open the Variables tab:

- Set the CONTAINER_REGISTRY variable to the name of the Azure Container Registry.

- Set the RESOURCE_GROUP variable to the resource group that contains your Container Registry.

- Set the CONTAINER_APP_NAME variable to the name of the Azure Container App.

Run it locally

After creating the Azure resources and setting up the environment, you can run the Next.js app locally.

```shell
npm install
npm run dev
```

`npm run dev` starts the Next.js server on port 3000. The server also serves the client-side static files.

After the app is running, you can access it locally at http://localhost:3000.
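A variable in .env that is missing, or that still holds a template placeholder, can surface later as an authentication error from one of the services. The following is a minimal fail-fast check you could run at server startup. It is a hypothetical addition, not part of the sample. It assumes the variable names from the .env file above and assumes that placeholders look like `<UPPER_CASE>`.

```javascript
// Hypothetical startup check (not from the sample repository).
// Variable names mirror the .env file shown earlier in this post.
const REQUIRED_VARS = [
  "SOCKET_ENDPOINT", "SOCKET_CONNECTION_STRING",
  "SPEECH_KEY", "SPEECH_REGION",
  "LANGUAGE_KEY", "LANGUAGE_ENDPOINT",
  "TRANSLATE_KEY", "TRANSLATE_ENDPOINT", "TRANSLATE_REGION",
];

// A value counts as missing if it is unset, blank, or still contains a <PLACEHOLDER>.
function findMissingVars(env, required = REQUIRED_VARS) {
  return required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "" || /<[A-Z_]+>/.test(value);
  });
}

// Usage: report problems before the server starts listening
const missing = findMissingVars(process.env);
if (missing.length > 0) {
  console.error(`Missing or placeholder environment variables: ${missing.join(", ")}`);
}
```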

Run it as an Azure Container App

Run the setup.sh script to create the Azure Container App resource to run the Next.js server on Azure.

```shell
az login
./setup.sh
```

Wait for the app to be deployed. When the deployment finishes, the script outputs the FQDN of the Azure Container App.

> Note: You can get the FQDN by running the following command:

```shell
az containerapp show \
  --name $CONTAINER_APP_NAME \
  --resource-group $RESOURCE_GROUP \
  --query properties.configuration.ingress.fqdn
```

> Note: You can also get the Application Url on the Container App resource overview blade.

We have completed the setup part.

Now, you can access the app using the Azure Container App URL.

Sample Application - how to use it

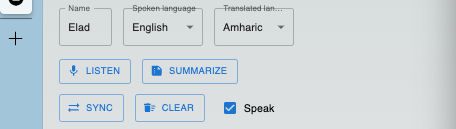

Spoken language: Select the language of the speaker.

Translated language: Select the language to which the spoken language will be translated.

Listen: Start the speech-to-text transcription, which uses the Speech SDK for JavaScript.
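The stt.js snippet that follows elides how the event handlers are registered (`...`). The following is a sketch of one way to wire them, not the author's code. It assumes that final phrases should be handed to the next step (for example, translation) and that errors should stop recognition. `registerHandlers`, `onPartial`, `onPhrase`, and `onError` are hypothetical names. `recognizing`, `recognized`, and `canceled` are the `SpeechRecognizer` callback properties. The recognizer and `speechsdk.ResultReason` are passed in, so the sketch can be exercised without a microphone.

```javascript
// Hypothetical helper: wires a Speech SDK SpeechRecognizer's event callbacks.
// `recognizer` is a speechsdk.SpeechRecognizer; `ResultReason` is speechsdk.ResultReason.
function registerHandlers(recognizer, ResultReason, { onPartial, onPhrase, onError }) {
  // intermediate hypotheses while the speaker is still talking
  recognizer.recognizing = (sender, event) => onPartial(event.result.text);

  // final result for one utterance; NoMatch results (speech not recognized) are ignored
  recognizer.recognized = (sender, event) => {
    if (event.result.reason === ResultReason.RecognizedSpeech && event.result.text) {
      onPhrase(event.result.text);
    }
  };

  // cancellation, for example because of an invalid or expired authorization token
  recognizer.canceled = (sender, event) => {
    onError(event.errorDetails);
    recognizer.stopContinuousRecognitionAsync();
  };
}
```

In stt.js, this would be called between creating the recognizer and `startContinuousRecognitionAsync()`, with `speechsdk.ResultReason` as the second argument.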

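```javascript
// stt.js
import * as speechsdk from 'microsoft-cognitiveservices-speech-sdk';

// initialize the speech recognizer
// tokenObj holds a short-lived authorization token and the Speech region (obtained elsewhere, not shown)
const speechConfig = speechsdk.SpeechConfig.fromAuthorizationToken(tokenObj.token, tokenObj.region);
const audioConfig = speechsdk.AudioConfig.fromDefaultMicrophoneInput();
recognizer = new speechsdk.SpeechRecognizer(speechConfig, audioConfig);

// register the event handlers
...

// listen and transcribe continuously until stopContinuousRecognitionAsync() is called
recognizer.startContinuousRecognitionAsync();
```

Stop: Stop the speech-to-text transcription.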

```javascript
// stt.js
// stop the speech recognizer
recognizer.stopContinuousRecognitionAsync();
```

Translate: On the listener side, each recognized phrase is translated into the selected language using the Text Translation REST API.
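The utils.js snippet that follows does not show how `data` and `headers` are built. The following sketch is based on the public Translator v3 REST API, not on the sample's own code. The request body is an array of `{ Text }` objects. A key-based request sends `Ocp-Apim-Subscription-Key` and, for a regional resource, `Ocp-Apim-Subscription-Region`. The helper names and the `config.translateKey` / `config.translateRegion` fields are assumptions modeled on the .env file.

```javascript
// Hypothetical helpers (not from the sample repository) for the Translator v3 "translate" call.
function buildTranslateRequest(config, text, from, to) {
  // resolves to <endpoint>/translate?api-version=3.0&from=..&to=..
  const url = new URL("translate", config.translateEndpoint);
  url.searchParams.set("api-version", "3.0");
  url.searchParams.set("from", from);
  url.searchParams.set("to", to);

  const data = [{ Text: text }]; // one array element per text to translate
  const headers = {
    // axios takes headers inside its request config object
    headers: {
      "Ocp-Apim-Subscription-Key": config.translateKey,
      // needed when the Translator resource is regional rather than global
      "Ocp-Apim-Subscription-Region": config.translateRegion,
      "Content-Type": "application/json",
    },
  };
  return { url: url.toString(), data, headers };
}

// The response holds one item per input text, each with one translation per target language.
function firstTranslation(responseData) {
  return responseData[0].translations[0].text;
}
```

With axios, usage would look like `const { url, data, headers } = buildTranslateRequest(config, phrase, from, to); const res = await axios.post(url, data, headers); return firstTranslation(res.data);`.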

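```javascript
// utils.js
import axios from 'axios';
...
// translate the recognized phrase from the spoken language to the target language
const res = await axios.post(`${config.translateEndpoint}translate?api-version=3.0&from=${from}&to=${to}`, data, headers);
// one item per input text, each with its list of translations
return res.data[0].translations[0].text;
```

Summarize: Summarize the conversation. For this, we use the Azure AI Language conversation summarization API.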
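The summarization request body (`data`) in the snippet that follows is also not shown. The following sketch builds one in the public analyze-conversations job format. Each utterance becomes a `conversationItems` entry with a role and participant, and the task kind is `ConversationalSummarizationTask`. The helper name, the input shape `{ text, speaker }`, and the requested aspects (`issue`, `resolution`) are assumptions, not the sample's actual code. Like the Translator call, the request also needs the `Ocp-Apim-Subscription-Key` header.

```javascript
// Hypothetical helper: builds an analyze-conversations job body for conversation summarization.
// `messages` is assumed to be [{ text, speaker: "agent" | "customer" }].
function buildSummarizationJob(messages, language = "en") {
  const conversationItems = messages.map((message, index) => {
    const isAgent = message.speaker === "agent";
    return {
      id: String(index + 1),
      text: message.text,
      role: isAgent ? "Agent" : "Customer",
      participantId: isAgent ? "Agent_1" : "Customer_1",
    };
  });

  return {
    displayName: "Conversation summarization",
    analysisInput: {
      conversations: [{ id: "conversation1", language, modality: "text", conversationItems }],
    },
    tasks: [
      {
        taskName: "summarize",
        kind: "ConversationalSummarizationTask",
        // each requested aspect is returned as an entry in `summaries` (aspect + text)
        parameters: { summaryAspects: ["issue", "resolution"] },
      },
    ],
  };
}
```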

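```javascript
// submit the asynchronous summarization job
let res = await axios.post(`${config.languageEndpoint}language/analyze-conversations/jobs?api-version=2023-11-15-preview`, data, headers);
// the job status URL is returned in the operation-location response header
const jobUrl = res.headers['operation-location'];

// poll the job until the summarization task completes, pausing between requests
let completed = false;
while (!completed) {
  await new Promise(resolve => setTimeout(resolve, 1000));
  res = await axios.get(jobUrl, headers);
  if (res.data.status === 'failed' || res.data.status === 'cancelled') {
    throw new Error(`Summarization job ${res.data.status}`);
  }
  completed = res.data.tasks.completed > 0;
}

// return each summary as { aspect, text }
const conv = res.data.tasks.items[0].results.conversations[0].summaries.map(summary => ({
  aspect: summary.aspect,
  text: summary.text,
}));
return conv;
```

Speak: The translated text is synthesized to speech using the text to speech capability of the same Speech SDK for JavaScript.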
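`speakTextAsync` in the snippet that follows reports its outcome through callbacks. Several translated phrases can arrive in quick succession, so a promise wrapper lets the caller wait for one synthesis to complete before starting the next. The following is a sketch, not the sample's code. `speakAsync` and `speakAll` are hypothetical names. `ResultReason` is passed in (normally `speechsdk.ResultReason`) so the helpers can be tested with a stand-in synthesizer. Note that the callback marks the end of synthesis, which is not necessarily the end of audio playback.

```javascript
// Hypothetical wrapper around SpeechSynthesizer.speakTextAsync(text, onResult, onError).
// Resolves when synthesis of `text` completes; rejects on cancellation or request errors.
function speakAsync(synthesizer, ResultReason, text) {
  return new Promise((resolve, reject) => {
    synthesizer.speakTextAsync(
      text,
      (result) => {
        if (result.reason === ResultReason.SynthesizingAudioCompleted) {
          resolve(result);
        } else {
          reject(new Error(`Speech synthesis canceled: ${result.errorDetails}`));
        }
      },
      (error) => reject(new Error(String(error)))
    );
  });
}

// Synthesize phrases strictly one after another, in order.
async function speakAll(synthesizer, ResultReason, phrases) {
  for (const phrase of phrases) {
    await speakAsync(synthesizer, ResultReason, phrase);
  }
}
```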

```javascript
// stt.js
// initialize the speech synthesizer with the voice selected for the translated language
speechConfig.speechSynthesisVoiceName = speakLanguage;
const synthAudioConfig = speechsdk.AudioConfig.fromDefaultSpeakerOutput();
synthesizer = new speechsdk.SpeechSynthesizer(speechConfig, synthAudioConfig);
...
// speak the text
synthesizer.speakTextAsync(text,
  function (result) {
    if (result.reason === speechsdk.ResultReason.SynthesizingAudioCompleted) {
      console.log("synthesis finished.");
    } else {
      console.error("Speech synthesis canceled, " + result.errorDetails +
        "\nDid you set the speech resource key and region values?");
    }
  },
  function (err) {
    // called when the synthesis request itself fails
    console.error("Speech synthesis error: " + err);
  });
```

Clear: Clear the conversation history.

```javascript
// socket.js
// ask the server to clear the conversation history
clearMessages = () =>
  socket.emit('clear');
```

Sync: Sync the conversation history between the two parties.
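The server side of `clear` and `sync` is not shown in this post. One plausible design is sketched below under stated assumptions: a single shared conversation, history kept in server memory, and a hypothetical `messages` event carrying the history back to the clients. The store is plain JavaScript, so it runs without Socket.IO. The commented lines show where it could plug into Socket.IO's `io.on("connection", ...)` and `socket.on(...)` handlers.

```javascript
// Hypothetical in-memory conversation store (not from the sample repository).
class ConversationStore {
  constructor() {
    this.messages = [];
  }

  add(message) {
    // store a copy with a timestamp so later edits by the caller do not change history
    this.messages.push({ ...message, at: Date.now() });
  }

  clear() {
    this.messages = [];
  }

  // copies, so clients cannot mutate the stored history through the returned objects
  snapshot() {
    return this.messages.map((m) => ({ ...m }));
  }
}

// Wiring sketch for a Socket.IO server `io` (commented out because it needs a live server):
// const store = new ConversationStore();
// io.on("connection", (socket) => {
//   socket.on("clear", () => { store.clear(); io.emit("messages", store.snapshot()); });
//   socket.on("sync", () => io.emit("messages", store.snapshot()));
// });
```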

// socket.js

syncMessages = () =>

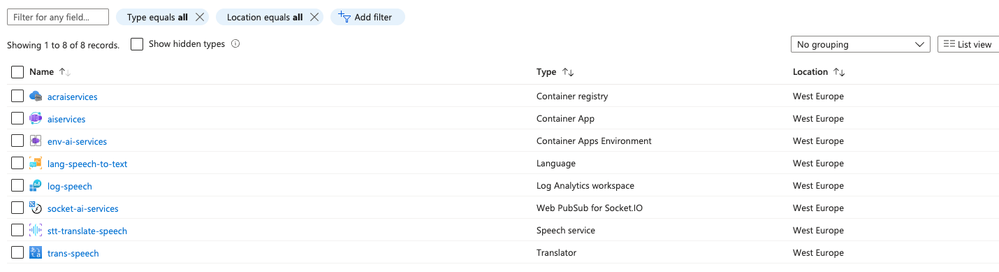

socket.emit('sync');Resources Deployed in this solution (Azure)

- Container Registry: for the Next.js app container image.

- Container App (& Container App Environment): for the Next.js app.

- Language Service: for the conversation summarization.

- Log Analytics Workspace: for the logs of the container app.

- Web PubSub for Socket.IO: for the real-time, duplex communication between the client and the server.

- Speech service: for speech-to-text transcription and text-to-speech synthesis.

- Translator service: for the translation capabilities.

Improve recognition accuracy with custom speech

How does it work?

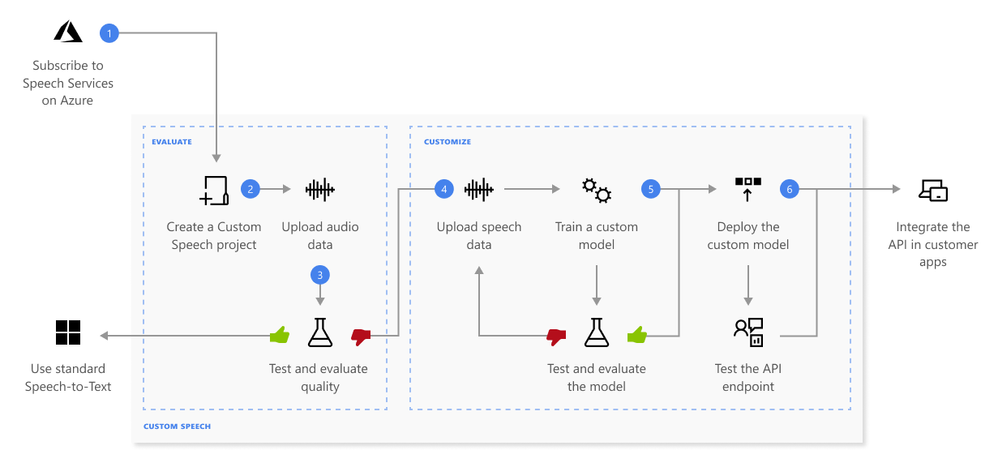

With custom speech, you can upload your own data, test and train a custom model, compare accuracy between models, and deploy a model to a custom endpoint.

Here's more information about the sequence of steps shown in the previous diagram:

- Create a project and choose a model. Use a Speech resource that you create in the Azure portal. If you train a custom model with audio data, choose a Speech resource region with dedicated hardware for training audio data. For more information, see footnotes in the regions table.

- Upload test data. Upload test data to evaluate the speech to text offering for your applications, tools, and products.

- Test recognition quality. Use the Speech Studio to play back uploaded audio and inspect the speech recognition quality of your test data.

- Test model quantitatively. Evaluate and improve the accuracy of the speech to text model. The Speech service provides a quantitative word error rate (WER), which you can use to determine if more training is required.

- Train a model. Provide written transcripts and related text, along with the corresponding audio data. Testing a model before and after training is optional but recommended.

- Deploy a model. Once you're satisfied with the test results, deploy the model to a custom endpoint. Except for batch transcription, you must deploy a custom endpoint to use a custom speech model.
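WER compares a model's transcript (the hypothesis) with a human reference transcript: WER = (S + D + I) / N. Here S, D, and I count word substitutions, deletions, and insertions, and N is the number of words in the reference. Speech Studio computes WER for you. The following sketch only illustrates the definition through a word-level edit distance. Its whitespace tokenization and lowercasing are simplifying assumptions, and the service's own text normalization may differ.

```javascript
// Word error rate via word-level Levenshtein distance: (S + D + I) / N.
function wordErrorRate(reference, hypothesis) {
  const tokenize = (s) => s.toLowerCase().split(/\s+/).filter(Boolean);
  const ref = tokenize(reference);
  const hyp = tokenize(hypothesis);
  if (ref.length === 0) throw new Error("reference transcript is empty");

  // dist[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
  const dist = Array.from({ length: ref.length + 1 }, () => new Array(hyp.length + 1).fill(0));
  for (let i = 0; i <= ref.length; i++) dist[i][0] = i; // i deletions
  for (let j = 0; j <= hyp.length; j++) dist[0][j] = j; // j insertions

  for (let i = 1; i <= ref.length; i++) {
    for (let j = 1; j <= hyp.length; j++) {
      const substitution = dist[i - 1][j - 1] + (ref[i - 1] === hyp[j - 1] ? 0 : 1);
      const deletion = dist[i - 1][j] + 1;
      const insertion = dist[i][j - 1] + 1;
      dist[i][j] = Math.min(substitution, deletion, insertion);
    }
  }
  return dist[ref.length][hyp.length] / ref.length;
}

// one substitution (sat -> sit) and one deletion (the): 2 edits / 6 reference words
console.log(wordErrorRate("the cat sat on the mat", "the cat sit on mat"));
```

Because insertions count too, WER can exceed 1 when the hypothesis is much longer than the reference.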

Conclusion

We demonstrated how to use Azure AI Services to add transcription, translation, summarization, and speech synthesis to conversations between customers and businesses, with minimal changes to existing apps and a focus on client-side capabilities. These features help wherever two parties speak different languages, such as in call centers, banks, and medical clinics.
