- Home

- Azure

- Apps on Azure Blog

- Autoscaling of Microservice Apps on Azure: Leveraging Azure Kubernetes Service, KEDA, and MSSQL

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

This blog post aims to walk you through the setup of an autoscaled application on Azure Kubernetes Service (AKS) with Kubernetes-based Event-Driven Autoscaling (KEDA), activated by Microsoft SQL Server (MSSQL) queries. By following this guide, you will have an autoscaled application in place, facilitating efficient resource utilization and equipping your application to manage fluctuating workloads, thereby enhancing its performance and responsiveness.

Prerequisites:

- Azure Account: An active Azure account is required. If you do not have one, you can sign up for a free account on the Azure website.

- Kubernetes Familiarity: A basic understanding of Kubernetes concepts, including Deployments, Services, and Persistent Volumes, is essential.

- SQL Server Knowledge: Basic knowledge of SQL Server and SQL queries is necessary, as MSSQL will be used as a KEDA trigger.

- Tools Installation: Ensure that Azure CLI and kubectl are installed on your local machine.

To install the KEDA add-on, use --enable-keda when creating or updating a cluster. You can find the different installation options in this Microsoft documentation: Install the KEDA add-on with Azure CLI.

Create a new AKS cluster using the az aks create command and enable the KEDA add-on using the --enable-keda flag.

az aks create --resource-group myResourceGroup --name myAKSCluster --enable-keda

To begin, we will deploy a SQL Server container on Azure Kubernetes Service (AKS). For guidance, you can consult the official Microsoft quickstart guide on running SQL Server containers on Azure: Deploy a SQL Server container cluster on Azure.

The deployment process is as follows:

- Provision Persistent Storage: We will create a StorageClass for Azure Disk to ensure our MSSQL data has persistent storage.

- Create a Persistent Volume Claim (PVC): We will define a Persistent Volume Claim (PVC) that utilizes the previously created StorageClass. This PVC will dynamically provision Azure Disks for storing MSSQL data.

- Deploy MSSQL: We will deploy MSSQL using Kubernetes manifests, designating the previously created PVC for persistent storage.

The YAML files necessary to create the StorageClass, Persistent Volume Claim, and a Microsoft SQL Server (MSSQL) instance on Azure Kubernetes Service (AKS) can be found in the following GitHub link: GitHub link for Kubernetes Autoscaling with KEDA and MSSQL on AKS.

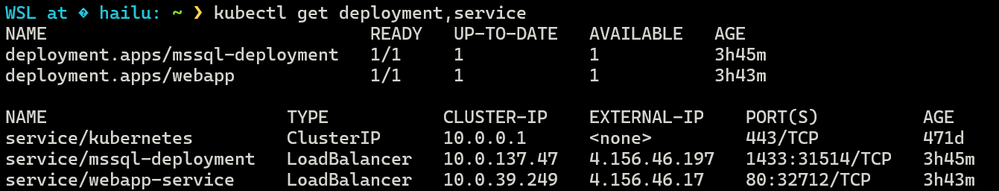

In this blog, we have set up a deployment called "mssql-deployment" for MSSQL with a single replica and a Kubernetes Service of type LoadBalancer to facilitate external access. The external IP provided by this service will be used to connect to the SQL Server container via SSMS from our local machine. The following section will cover the creation of our web app deployment and the process of connecting it to this MSSQL Server instance from the application.

Deploying and Exposing Your Web Application on Azure Kubernetes Service:

In the preceding sections, we covered setting up persistent storage and deploying a Microsoft SQL Server (MSSQL) instance on Azure Kubernetes Service (AKS). We will now proceed to deploy and expose our web application. For further information on deploying an application to Azure Kubernetes Service (AKS), you can refer to this Microsoft tutorial: Tutorial - Deploy an application to Azure Kubernetes Service (AKS).

Below are the sample Kubernetes deployment and service manifest files featured in this blog. These scripts are available at the following GitHub link: GitHub link for Kubernetes Autoscaling with KEDA and MSSQL on AKS.

apiVersion: apps/v1

kind: Deployment

metadata:

name: webapp

spec:

selector:

matchLabels:

app: webapp

replicas: 1

template:

metadata:

labels:

app: webapp

spec:

containers:

- name: webapp

image: yourdotnetimage/webapp:dotnet-v7.0.1.01

ports:

- containerPort: 80

resources:

limits:

cpu: 300m

memory: "100Mi"

requests:

cpu: 100m

memory: "50Mi"

env:

- name: ConnectionStrings__WebAppContext

value: "Server=mssql-deployment;Database=ProdcutsDB;User ID=SA;Password=yourpasswordhere;Encrypt=False;"

---

kind: Service

apiVersion: v1

metadata:

name: webapp-service

spec:

selector:

app: webapp

ports:

- protocol: TCP

port: 80

targetPort: 80

type: LoadBalancer

After applying the manifest files mentioned above, we should see the deployments and services for both the SQL Server and the web app created on our AKS cluster. It's important to note that both services are assigned an external IP, allowing us to connect to our containers via the internet.

To interact with MSSQL from your local machine, follow these steps:

- Get External IP: Retrieve the external IP of the MSSQL Service using the "kubectl get svc" command, as indicated in the screenshot above.

- Configure SSMS: Use the external IP to configure SQL Server Management Studio (SSMS) on your local machine. Specify the external IP as the server's name and use SQL Server Authentication with the provided credentials.

Be aware that this approach makes your SQL Server accessible over the internet, which may not be secure. Always protect your SQL Server with a firewall and establish secure connections. After connecting to the SQL Server with SSMS, proceed to create a table and insert data as illustrated below to emulate workload variations.

--- Check if a table named 'backlog' exists in the 'dbo' schema

IF NOT EXISTS (SELECT * FROM sys.tables WHERE name = 'backlog' AND schema_id = SCHEMA_ID('dbo'))

BEGIN

-- If the 'backlog' table does not exist, create it with columns 'id' and 'state'

CREATE TABLE backlog (

id INT IDENTITY(1,1) PRIMARY KEY, -- 'id' is an auto-incrementing integer

state VARCHAR(50) -- 'state' is a variable character string with a maximum length of 50

);

END

-- Declare a variable named '@counter' and initialize it with the value 1

DECLARE @counter INT = 1;

WHILE @counter <= 50 -- Start a loop that will run 50 times

BEGIN

-- Insert a new row into the 'backlog' table with 'state' set to 'queued'

INSERT INTO backlog (state) VALUES ('queued');

-- Increment the '@counter' variable by 1

SET @counter = @counter + 1;

END;

The script begins by verifying the existence of a table called 'backlog' within the 'dbo' schema. If it doesn't exist, the script proceeds to create this table with 'id' and 'state' columns. In our demonstration, this action will result in the creation of a new 'backlog' table. Subsequently, a loop is initiated that executes 50 times; during each cycle, a new 'queued' state row is added to the 'backlog' table.

Therefore, if we execute a count query on the 'backlog' table for rows where the 'state' is 'queued', we should expect the result to be 50.

SELECT count(*) FROM backlog WHERE state = 'queued'

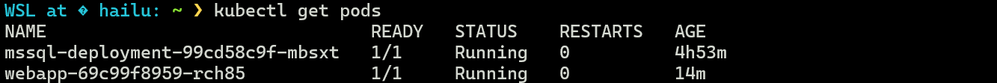

From our AKS cluster, we can verify the number of pods by using the command "kubectl get pods," which shows the web app and the MSSQL pods operating as anticipated (below screenshot)

Autoscaling Applications on Kubernetes with KEDA and MSSQL:

Now, let us examine the YAML configuration that illustrates autoscaling a Kubernetes application in response to the load on a Microsoft SQL Server (MSSQL) database, utilizing Kubernetes Event-Driven Autoscaling (KEDA). Various methods exist for employing the Trigger Specification for MSSQL. In this blog, we demonstrate the deployment of a scaled object using the mssql scale trigger, which incorporates TriggerAuthentication and a connection string: KEDA Documentation.

apiVersion: v1

kind: Secret

metadata:

name: mssql-secrets

type: Opaque

data:

mssql-connection-string: echo "Server=mssql-deployment.default.svc.cluster.local;port=1433;Database=ProdcutsDB;Persist Security Inf

o=False;User ID=SA;Password=MyC0m9l&xP;Encrypt=False;TrustServerCertificate=True;" | base64

---

apiVersion: keda.sh/v1alpha1

kind: TriggerAuthentication

metadata:

name: keda-trigger-auth-mssql-secret

spec:

secretTargetRef:

- parameter: connectionString

name: mssql-secrets

key: mssql-connection-string

---

apiVersion: keda.sh/v1alpha1

kind: ScaledObject

metadata:

name: mssql-scaledobject

spec:

scaleTargetRef:

name: webapp # e.g. the name of the resource to scale

pollingInterval: 1 # Optional. Default: 30 seconds

cooldownPeriod: 30 # Optional. Default: 300 seconds

minReplicaCount: 1 # Optional. Default: 0

maxReplicaCount: 15 # Optional. Default: 100

triggers:

- type: mssql

metadata:

targetValue: "5"

query: "SELECT count(*) FROM backlog WHERE state = 'queued'"

authenticationRef:

name: keda-trigger-auth-mssql-secret

The above configuration begins by creating a Kubernetes Secret named 'mssql-secrets', which securely stores the base64-encoded MSSQL connection string. Kubernetes Secrets are designed to protect sensitive information like passwords, OAuth tokens, and SSH keys. Subsequently, a 'TriggerAuthentication' custom resource named 'keda-trigger-auth-mssql-secret' is established, specifying the authentication method KEDA will use with the MSSQL server, referencing the previously created 'mssql-secrets' Secret.

The configuration then introduces a 'ScaledObject' custom resource, which associates with the 'TriggerAuthentication' and defines how KEDA will modulate the deployment scaling in response to the MSSQL server load. The 'triggers' section contains a single 'mssql' trigger type, signifying KEDA's reliance on a Microsoft SQL Server (MSSQL) database for the metrics needed to make scaling decisions.

In the 'metadata' portion, the 'mssql' trigger configuration is detailed as follows:

- 'targetValue': The metric threshold KEDA targets to maintain by scaling the deployment's pod count up or down, set here to "5".

- 'query': The SQL query "SELECT count(*) FROM backlog WHERE state = 'queued'" tallies the 'backlog' table entries with 'state' set to 'queued'. KEDA executes this query on the MSSQL server to ascertain the current metric value.

This configuration directs KEDA to scale the application based on the number of 'queued' tasks in the 'backlog' table. Should the count surpass 5, KEDA will scale up the application; if it drops below 5, it will scale down.

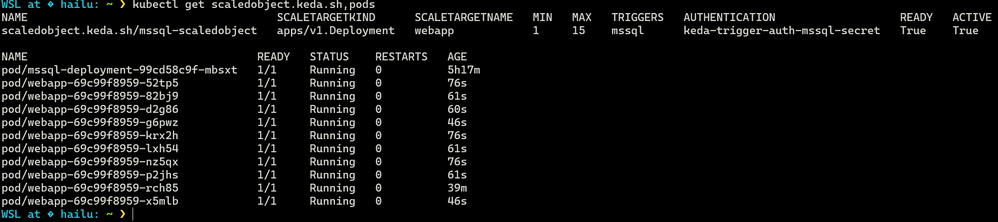

Once KEDA is configured, it's applied to the Kubernetes cluster. KEDA then persistently monitors the defined MSSQL metrics, dynamically adjusting pod numbers to ensure optimal performance and resource efficiency. For instance, if there are 50 'queued' tasks, KEDA will respond accordingly to manage the load. The screenshot below verifies that our web app scaled to 10, as there are 50 'queued' tasks and the "targetValue" is 5.

It is crucial to ensure that the "READY" column in "scaledobject.keda.sh/keda-demo-sql" is "True". If it is not, the scaling process will fail. Common errors typically arise from using an incorrect connection string, referencing an incorrect deployment, or errors in the configuration file specifications.

To demonstrate that our application scales down when the query output is zero, we should delete all rows from the "backlog" table using the below SQL command. It's important to note that this command will only remove the contents of the table, not the table itself.

-- Delete all rows from the 'backlog' table

DELETE FROM backlog;

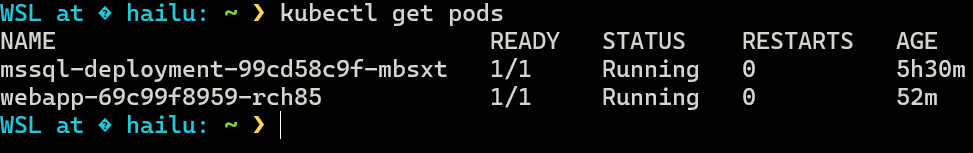

Upon checking the number of pods after a brief interval, the output will be as depicted in the screenshot below. There is one webapp pod due to the "minReplicaCount = 1" setting in our KEDA configuration. Indeed, the KEDA configuration allows scaling down to zero, which is not possible with the Kubernetes native Horizontal Pod Autoscaler (HPA): Horizontal Pod Autoscaler (HPA)

Summary

This guide has detailed the steps for deploying a .NET application and Microsoft SQL Server on Azure Kubernetes Service (AKS). Additionally, we've set up Kubernetes Event-Driven Autoscaling (KEDA) to dynamically scale our application in response to MSSQL queries. This method enables efficient resource management and ensures that our application remains performant and responsive under different load conditions.

Special thanks to Ayobami Ayodeji, Senior Program Manager, who leads teams developing technical assets for Azure's container services, for reviewing this content.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.